Models Supported by Ultralytics

Welcome to Ultralytics' model documentation! We offer support for a wide range of models, each tailored to specific tasks like object detection, instance segmentation, image classification, pose estimation, and multi-object tracking. If you're interested in contributing your model architecture to Ultralytics, check out our Contributing Guide.

Featured Models

Here are some of the key models supported:

- YOLOv3: The third iteration of the YOLO model family, originally by Joseph Redmon, known for its efficient real-time object detection capabilities.

- YOLOv4: A darknet-native update to YOLOv3, released by Alexey Bochkovskiy in 2020.

- YOLOv5: An improved version of the YOLO architecture by Ultralytics, offering better performance and speed trade-offs compared to previous versions.

- YOLOv6: Released by Meituan in 2022, and in use in many of the company's autonomous delivery robots.

- YOLOv7: Updated YOLO models released in 2022 by the authors of YOLOv4. Only inference is supported.

- YOLOv8: A versatile model featuring enhanced capabilities such as instance segmentation, pose/keypoints estimation, and classification.

- YOLOv9: An experimental model trained on the Ultralytics YOLOv5 codebase implementing Programmable Gradient Information (PGI).

- YOLOv10: By Tsinghua University, featuring NMS-free training and efficiency-accuracy driven architecture, delivering state-of-the-art performance and latency.

- YOLO11: Ultralytics' YOLO models delivering high performance across multiple tasks including detection, segmentation, pose estimation, tracking, and classification.

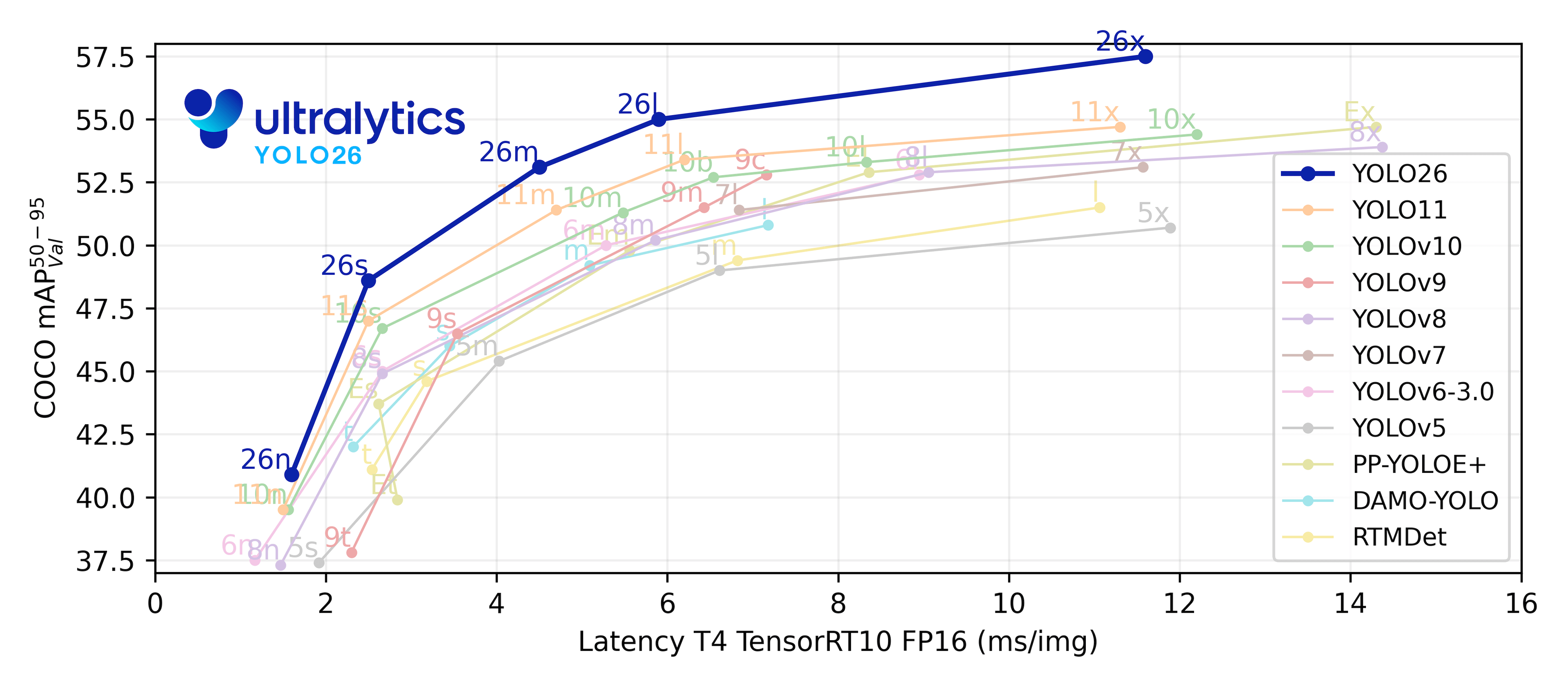

- YOLO26 🚀 NEW: Ultralytics' latest next-generation YOLO model optimized for edge deployment with end-to-end NMS-free inference.

- Segment Anything Model (SAM): Meta's original Segment Anything Model (SAM).

- Segment Anything Model 2 (SAM2): The next generation of Meta's Segment Anything Model for videos and images.

- Segment Anything Model 3 (SAM3) 🚀 NEW: Meta's third generation Segment Anything Model with Promptable Concept Segmentation for text and image exemplar-based segmentation.

- Mobile Segment Anything Model (MobileSAM): MobileSAM for mobile applications, by Kyung Hee University.

- Fast Segment Anything Model (FastSAM): FastSAM by Image & Video Analysis Group, Institute of Automation, Chinese Academy of Sciences.

- YOLO-NAS: YOLO Neural Architecture Search (NAS) Models.

- Real-Time Detection Transformers (RT-DETR): Baidu's PaddlePaddle real-time Detection Transformer (RT-DETR) models.

- YOLO-World: Real-time Open Vocabulary Object Detection models from Tencent AI Lab.

- YOLOE: An improved open-vocabulary object detector that maintains YOLO's real-time performance while detecting arbitrary classes beyond its training data.

Getting Started: Usage Examples

The examples below show basic YOLO training and inference. For full documentation on these and other modes, see the Predict, Train, Val and Export docs pages.

Note that the example below uses YOLO26 Detect models for object detection. For additional supported tasks, see the Segment, Classify and Pose docs.

Example

PyTorch pretrained *.pt models as well as configuration *.yaml files can be passed to the YOLO(), SAM(), NAS() and RTDETR() classes to create a model instance in Python:

from ultralytics import YOLO

# Load a COCO-pretrained YOLO26n model

model = YOLO("yolo26n.pt")

# Display model information (optional)

model.info()

# Train the model on the COCO8 example dataset for 100 epochs

results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

# Run inference with the YOLO26n model on the 'bus.jpg' image

results = model("path/to/bus.jpg")
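Each of the YOLO(), SAM(), NAS() and RTDETR() classes expects weights from its own model family. As a rough sketch, the hypothetical helper below guesses the class name from a weights filename. The prefix rules and the example filenames are assumptions made for illustration, not an official Ultralytics lookup, and other families (for example FastSAM) are not covered.

```python
from pathlib import Path


def pick_model_class(weights: str) -> str:
    """Guess which Ultralytics class name matches a weights file.

    Hypothetical helper: the filename prefixes below are assumptions.
    """
    stem = Path(weights).stem.lower()
    if stem.startswith("yolo_nas"):
        return "NAS"
    if stem.startswith("rtdetr"):
        return "RTDETR"
    if stem.startswith(("sam", "mobile_sam")):
        return "SAM"
    # Anything else is treated as a YOLO checkpoint or config.
    return "YOLO"


def load_model(weights: str):
    """Instantiate the guessed class (requires the ultralytics package)."""
    import ultralytics  # imported lazily so pick_model_class works without it

    return getattr(ultralytics, pick_model_class(weights))(weights)


for name in ("yolo26n.pt", "rtdetr-l.pt", "sam_b.pt", "yolo_nas_s.pt"):
    print(name, "->", pick_model_class(name))
```

Calling `load_model("yolo26n.pt")` would then be equivalent to `YOLO("yolo26n.pt")` under these assumptions.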

CLI commands are available to directly run the models:

# Load a COCO-pretrained YOLO26n model and train it on the COCO8 example dataset for 100 epochs

yolo train model=yolo26n.pt data=coco8.yaml epochs=100 imgsz=640

# Load a COCO-pretrained YOLO26n model and run inference on the 'bus.jpg' image

yolo predict model=yolo26n.pt source=path/to/bus.jpg
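The CLI commands above follow a `yolo MODE key=value ...` pattern. When commands need to be generated from a script, a small helper along these lines may be convenient. `build_yolo_command` is a hypothetical name and not part of the Ultralytics package; the sketch only assembles the command string and does not run it.

```python
import shlex


def build_yolo_command(mode: str, **args) -> str:
    """Assemble a `yolo MODE key=value ...` command string (hypothetical helper)."""
    parts = ["yolo", mode]
    parts += [f"{key}={value}" for key, value in args.items()]
    # Quote each part so values containing spaces survive the shell.
    return " ".join(shlex.quote(part) for part in parts)


train_cmd = build_yolo_command(
    "train", model="yolo26n.pt", data="coco8.yaml", epochs=100, imgsz=640
)
predict_cmd = build_yolo_command(
    "predict", model="yolo26n.pt", source="path/to/bus.jpg"
)

print(train_cmd)
print(predict_cmd)
```

The resulting strings can be passed to a shell, or split with `shlex.split` and handed to `subprocess.run`.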

Contributing New Models

Interested in contributing your model to Ultralytics? Great! We're always open to expanding our model portfolio.

1. Fork the Repository: Start by forking the Ultralytics GitHub repository.

2. Clone Your Fork: Clone your fork to your local machine and create a new branch to work on.

3. Implement Your Model: Add your model following the coding standards and guidelines provided in our Contributing Guide.

4. Test Thoroughly: Make sure to test your model rigorously, both in isolation and as part of the pipeline.

5. Create a Pull Request: Once you're satisfied with your model, create a pull request to the main repository for review.

6. Code Review & Merging: After review, if your model meets our criteria, it will be merged into the main repository.

For detailed steps, consult our Contributing Guide.

FAQ

What is the latest Ultralytics YOLO model?

The latest Ultralytics YOLO model is YOLO26, released in January 2026. YOLO26 features end-to-end NMS-free inference and optimized edge deployment, and it supports all five tasks (detection, segmentation, classification, pose estimation, and OBB) plus open-vocabulary versions. For stable production workloads, both YOLO26 and YOLO11 are recommended choices.

How can I train a YOLO model on custom data?

You can train a YOLO model on custom data with the Ultralytics Python API or the CLI. Here's a quick example:

Example

from ultralytics import YOLO

# Load a YOLO model

model = YOLO("yolo26n.pt") # or any other YOLO model

# Train the model on custom dataset

results = model.train(data="custom_data.yaml", epochs=100, imgsz=640)

The equivalent CLI command:

yolo train model=yolo26n.pt data='custom_data.yaml' epochs=100 imgsz=640

For more detailed instructions, visit the Train documentation page.
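The `custom_data.yaml` file passed to `data=` tells the trainer where the images are and what the classes are called. As a minimal sketch, the hypothetical helper below renders such a file, assuming the commonly used layout with `path`, `train`, `val` and `names` keys. The directory names and class names here are placeholders, so check the dataset documentation for the exact fields your task needs.

```python
def render_dataset_yaml(root, class_names, train="images/train", val="images/val"):
    """Render a dataset YAML string (hypothetical helper, assumed key layout)."""
    lines = [
        f"path: {root}",    # dataset root directory
        f"train: {train}",  # training images, relative to path
        f"val: {val}",      # validation images, relative to path
        "names:",           # class index -> class name
    ]
    lines += [f"  {index}: {name}" for index, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


# Placeholder dataset root and classes, for illustration only.
yaml_text = render_dataset_yaml("datasets/custom", ["cat", "dog"])
print(yaml_text)
```

Saving `yaml_text` as `custom_data.yaml` would give a file that could be referenced from the training call above.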

Which YOLO versions are supported by Ultralytics?

Ultralytics supports a comprehensive range of YOLO (You Only Look Once) versions from YOLOv3 to YOLO26, along with models like YOLO-NAS, SAM, and RT-DETR. Depending on the model, supported tasks include detection, segmentation, and classification. For detailed information on each model, refer to the Models Supported by Ultralytics documentation.

Why should I use Ultralytics Platform for machine learning projects?

Ultralytics Platform provides a no-code, end-to-end platform for training, deploying, and managing YOLO models. It simplifies complex workflows, enabling users to focus on model performance and application. It also offers cloud training capabilities, comprehensive dataset management, and user-friendly interfaces for both beginners and experienced developers.

What types of tasks can Ultralytics YOLO models perform?

Ultralytics YOLO models are versatile and can perform tasks including object detection, instance segmentation, classification, pose estimation, and oriented object detection (OBB). The latest model, YOLO26, supports all five tasks plus open-vocabulary detection. For details on specific tasks, refer to the Task pages.
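Pretrained weights for the different tasks are usually distinguished by a filename suffix. The sketch below assumes that YOLO26 follows the same suffix convention as earlier Ultralytics releases (`-seg`, `-cls`, `-pose`, `-obb`, and no suffix for detection). `weights_for_task` is a hypothetical helper, and the generated filenames should be checked against the Task pages before use.

```python
# Assumed task -> filename suffix mapping (detection has no suffix).
TASK_SUFFIXES = {
    "detect": "",
    "segment": "-seg",
    "classify": "-cls",
    "pose": "-pose",
    "obb": "-obb",
}


def weights_for_task(task: str, size: str = "n", family: str = "yolo26") -> str:
    """Build a pretrained weights filename for a task (hypothetical helper)."""
    if task not in TASK_SUFFIXES:
        raise ValueError(f"unknown task: {task!r}")
    return f"{family}{size}{TASK_SUFFIXES[task]}.pt"


for task in TASK_SUFFIXES:
    print(task, "->", weights_for_task(task))
```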