Deploy YOLO26 on Mobile & Edge with ExecuTorch

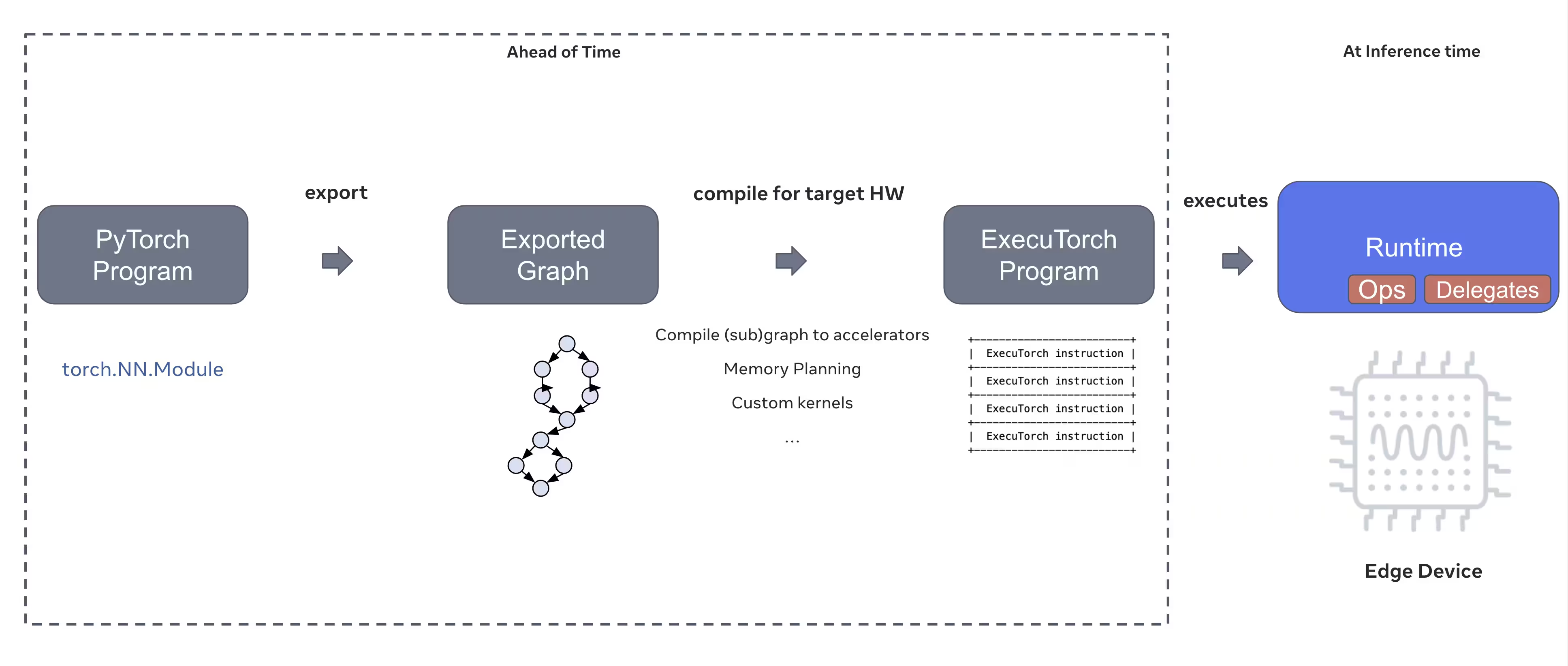

Deploying computer vision models on edge devices like smartphones, tablets, and embedded systems requires an optimized runtime that balances performance with resource constraints. ExecuTorch, PyTorch's solution for edge computing, enables efficient on-device inference for Ultralytics YOLO models.

This guide outlines how to export Ultralytics YOLO models to ExecuTorch format, enabling you to deploy your models on mobile and edge devices with optimized performance.

Why export to ExecuTorch?

ExecuTorch is PyTorch's end-to-end solution for enabling on-device inference capabilities across mobile and edge devices. Built with the goal of being portable and efficient, ExecuTorch can be used to run PyTorch programs on a wide variety of computing platforms.

Key features of ExecuTorch

ExecuTorch provides several powerful features for deploying Ultralytics YOLO models on edge devices:

Portable Model Format: ExecuTorch uses the .pte (PyTorch ExecuTorch) format, which is optimized for size and loading speed on resource-constrained devices.

XNNPACK Backend: Default integration with XNNPACK provides highly optimized inference on mobile CPUs, delivering excellent performance without requiring specialized hardware.

Quantization Support: ExecuTorch supports quantization techniques that reduce model size and improve inference speed with minimal accuracy loss. The Ultralytics ExecuTorch export does not currently apply quantization.

Memory Efficiency: Optimized memory management reduces runtime memory footprint, making it suitable for devices with limited RAM.

Model Metadata: Exported models include metadata (image size, class names, etc.) in a separate YAML file for easy integration.

Deployment Options with ExecuTorch

ExecuTorch models can be deployed across various edge and mobile platforms:

Mobile Applications: Deploy on iOS and Android applications with native performance, enabling real-time object detection in mobile apps.

Embedded Systems: Run on embedded Linux devices like Raspberry Pi, NVIDIA Jetson, and other ARM-based systems with optimized performance.

Edge AI Devices: Deploy on specialized edge AI hardware with custom delegates for accelerated inference.

IoT Devices: Integrate into IoT devices for on-device inference without cloud connectivity requirements.

Exporting Ultralytics YOLO26 Models to ExecuTorch

Converting Ultralytics YOLO26 models to ExecuTorch format enables efficient deployment on mobile and edge devices.

Installation

ExecuTorch export requires Python 3.10 or higher. Start by installing the Ultralytics package:


# Install Ultralytics package
pip install ultralytics

For detailed instructions and best practices related to the installation process, check our YOLO26 Installation guide. While installing the required packages for YOLO26, if you encounter any difficulties, consult our Common Issues guide for solutions and tips.

Usage

Exporting YOLO26 models to ExecuTorch is straightforward:


from ultralytics import YOLO

# Load the YOLO26 model
model = YOLO("yolo26n.pt")

# Export the model to ExecuTorch format
model.export(format="executorch")  # creates 'yolo26n_executorch_model' directory

# Load the exported model and run inference
executorch_model = YOLO("yolo26n_executorch_model")
results = executorch_model.predict("https://ultralytics.com/images/bus.jpg")

Or, using the CLI:

# Export a YOLO26n PyTorch model to ExecuTorch format
yolo export model=yolo26n.pt format=executorch  # creates 'yolo26n_executorch_model' directory

# Run inference with the exported model
yolo predict model=yolo26n_executorch_model source=https://ultralytics.com/images/bus.jpg

ExecuTorch exports generate a directory that includes a .pte file and metadata. Use the ExecuTorch runtime in your mobile or embedded application to load the .pte model and perform inference.

Export Arguments

When exporting to ExecuTorch format, you can specify the following arguments:

| Argument | Type | Default | Description |
|---|---|---|---|
| imgsz | int or list | 640 | Image size for model input (height, width) |
| device | str | 'cpu' | Device to use for export ('cpu') |
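Ultralytics expects image sizes that are multiples of the model's maximum stride and rounds other values up, logging a warning. The sketch below mirrors that rounding so you can predict the input shape your exported .pte will expect. The helper name `export_input_shape` is hypothetical, and the default stride of 32 is an assumption that holds for standard YOLO detection models; check your own model's stride.

```python
import math


def export_input_shape(imgsz, stride=32):
    """Return the (height, width) an export would use for `imgsz`.

    Hypothetical helper: rounds each dimension up to a multiple of `stride`,
    similar to how Ultralytics validates image sizes before export.
    """
    # An int means a square input; a list/tuple gives (height, width)
    dims = [imgsz, imgsz] if isinstance(imgsz, int) else list(imgsz)
    if len(dims) == 1:
        dims = dims * 2
    # Round every dimension up to the nearest multiple of the stride
    return tuple(math.ceil(d / stride) * stride for d in dims)


print(export_input_shape(640))
print(export_input_shape([320, 416]))
print(export_input_shape(330))
```

Passing `imgsz=330` would therefore produce a 352x352 input, so it is simpler to choose a multiple of 32 up front.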

Output Structure

The ExecuTorch export creates a directory containing the model and metadata:

yolo26n_executorch_model/
├── yolo26n.pte # ExecuTorch model file
└── metadata.yaml # Model metadata (classes, image size, etc.)
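Before bundling the export into an app, it can help to confirm that both artifacts are present. A minimal sketch using the standard library's `pathlib`; `find_executorch_artifacts` is a hypothetical helper that assumes the directory layout shown above (one .pte file plus metadata.yaml).

```python
from pathlib import Path


def find_executorch_artifacts(export_dir):
    """Locate the .pte model and metadata.yaml inside an export directory.

    Raises FileNotFoundError if either artifact is missing.
    """
    export_dir = Path(export_dir)
    # Sort for a deterministic pick if several .pte files are present
    pte_files = sorted(export_dir.glob("*.pte"))
    if not pte_files:
        raise FileNotFoundError(f"No .pte file found in {export_dir}")
    metadata = export_dir / "metadata.yaml"
    if not metadata.is_file():
        raise FileNotFoundError(f"metadata.yaml missing from {export_dir}")
    return pte_files[0], metadata
```

For example, `find_executorch_artifacts("yolo26n_executorch_model")` returns the paths you would copy into your Android assets or iOS bundle.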

Using Exported ExecuTorch Models

After exporting your model, you'll need to integrate it into your target application using the ExecuTorch runtime.

Mobile Integration

For mobile applications (iOS/Android), you'll need to:

- Add ExecuTorch Runtime: Include the ExecuTorch runtime library in your mobile project
- Load Model: Load the .pte file in your application
- Run Inference: Process images and get predictions
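The "process images" step usually means letterboxing each frame into the model's square input while preserving its aspect ratio. The Python sketch below computes that geometry, assuming the 640x640 input used throughout this guide; `letterbox_params` is a hypothetical helper, and you would port the same arithmetic to Swift, Objective-C++, or Kotlin in your app. Ultralytics fills the padded area with gray (value 114).

```python
def letterbox_params(src_w, src_h, dst_w=640, dst_h=640):
    """Compute resize scale and padding to fit an image into dst_w x dst_h.

    Returns (scale, (new_w, new_h), (pad_left, pad_top, pad_right, pad_bottom)).
    """
    # Largest scale that fits the whole image inside the target size
    scale = min(dst_w / src_w, dst_h / src_h)
    new_w, new_h = round(src_w * scale), round(src_h * scale)
    pad_w, pad_h = dst_w - new_w, dst_h - new_h
    # Split padding evenly; an odd leftover pixel goes to the right/bottom edge
    left, top = pad_w // 2, pad_h // 2
    return scale, (new_w, new_h), (left, top, pad_w - left, pad_h - top)
```

Keep the returned scale and padding: you need them later to map predicted boxes back onto the original frame.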

Example iOS integration (Objective-C/C++):

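// iOS uses C++ APIs for model loading and inference (call them from Objective-C++ .mm files)
// See https://pytorch.org/executorch/stable/using-executorch-ios.html for complete examples
#include <executorch/extension/module/module.h>
#include <executorch/extension/tensor/tensor.h>
#include <vector>

using namespace ::executorch::extension;

void runYolo() {
  // Load the model
  Module module("/path/to/yolo26n.pte");

  // Create a 1x3x640x640 float32 input tensor (heap-allocated: ~4.9 MB is too large for the stack)
  std::vector<float> input(1 * 3 * 640 * 640);
  auto tensor = from_blob(input.data(), {1, 3, 640, 640});

  // Run inference and check for errors
  const auto result = module.forward(tensor);
  if (result.ok()) {
    // Pointer to the first output's raw float data
    const float* output = result->at(0).toTensor().const_data_ptr<float>();
  }
}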
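The `input` buffer in the snippet above must hold the image as float32 values in NCHW order. Ultralytics models expect RGB images scaled to the range 0 to 1, so converting an interleaved RGB bitmap (the HWC layout most image APIs expose) looks roughly like the Python sketch below. The helper name is hypothetical; many mobile bitmaps are RGBA or BGRA, in which case you would adjust the per-pixel stride and channel order.

```python
def hwc_rgb_to_chw_float(pixels, width, height):
    """Convert interleaved RGB bytes (HWC) to a planar CHW float list in [0, 1].

    `pixels` is a bytes-like object of length width * height * 3, laid out
    row by row as R, G, B, R, G, B, ...
    """
    if len(pixels) != width * height * 3:
        raise ValueError("pixel buffer size does not match width * height * 3")
    plane = width * height
    chw = [0.0] * (3 * plane)
    for i in range(plane):
        for c in range(3):
            # Channel c of pixel i moves to plane c, scaled from 0-255 to 0-1
            chw[c * plane + i] = pixels[i * 3 + c] / 255.0
    return chw
```

A pure-Python loop like this is far too slow for real-time use; it only shows the memory layout that the native code needs to produce.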

Example Android integration (Kotlin):

import org.pytorch.executorch.EValue
import org.pytorch.executorch.Module
import org.pytorch.executorch.Tensor

// Load the model
val module = Module.load("/path/to/yolo26n.pte")

// Prepare a 1x3x640x640 float32 input tensor in NCHW order
// (fill floatData with your preprocessed RGB pixels scaled to 0-1)
val floatData = FloatArray(1 * 3 * 640 * 640)
val inputTensor = Tensor.fromBlob(floatData, longArrayOf(1, 3, 640, 640))
val inputEValue = EValue.from(inputTensor)

// Run inference and read the first output as a flat float array
val outputs = module.forward(inputEValue)
val output = outputs[0].toTensor().dataAsFloatArray
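The first output read from `outputs[0]` in the Kotlin snippet above is a flat float array. YOLO26 is designed for end-to-end, NMS-free inference; assuming your exported model keeps that end-to-end head and emits rows of six values `[x1, y1, x2, y2, score, class_id]` in input-pixel coordinates, decoding reduces to slicing and thresholding. That layout is an assumption, so print the output tensor's shape from your own export before relying on it. Sketched in Python for brevity; `decode_detections` is hypothetical, and the 0.25 threshold matches the Ultralytics default `conf`.

```python
ROW = 6  # ASSUMED row layout: x1, y1, x2, y2, score, class_id


def decode_detections(flat, conf_threshold=0.25):
    """Turn a flat end-to-end output array into a list of detection dicts."""
    if len(flat) % ROW:
        raise ValueError("output length is not a multiple of 6")
    detections = []
    for start in range(0, len(flat), ROW):
        x1, y1, x2, y2, score, cls = flat[start:start + ROW]
        # Keep only rows above the confidence threshold
        if score >= conf_threshold:
            detections.append(
                {"box": (x1, y1, x2, y2), "score": score, "class_id": int(cls)}
            )
    return detections
```

Class IDs index into the `names` mapping stored in metadata.yaml.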

Embedded Linux

For embedded Linux systems, use the ExecuTorch C++ API:

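#include <executorch/extension/module/module.h>
#include <executorch/extension/tensor/tensor.h>
#include <vector>

using namespace ::executorch::extension;

// Load model
Module module("yolo26n.pte");

// Prepare input: preprocessImage() is your own (hypothetical) helper that letterboxes
// the image and returns 1*3*640*640 RGB floats in [0, 1], NCHW order
std::vector<float> input_data = preprocessImage(image);
auto input_tensor = from_blob(input_data.data(), {1, 3, 640, 640});

// Run inference; outputs is a Result holding a vector of output EValues
const auto outputs = module.forward(input_tensor);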
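Because `preprocessImage()` letterboxes the frame, the boxes the model returns are in the 640x640 input space. A Python sketch of mapping them back to the original image, assuming you recorded the scale and padding used during letterboxing; the function name is hypothetical.

```python
def unletterbox_box(box, scale, pad_left, pad_top, src_w, src_h):
    """Map an (x1, y1, x2, y2) box from letterboxed input coordinates back
    to the original image, clamping it to the image bounds."""

    def clamp(value, upper):
        # Keep coordinates inside [0, upper]
        return max(0.0, min(value, float(upper)))

    x1, y1, x2, y2 = box
    # Remove the padding offset, then undo the resize
    x1, x2 = (x1 - pad_left) / scale, (x2 - pad_left) / scale
    y1, y2 = (y1 - pad_top) / scale, (y2 - pad_top) / scale
    return (clamp(x1, src_w), clamp(y1, src_h), clamp(x2, src_w), clamp(y2, src_h))
```

For example, with a scale of 0.5 and 80 pixels of left padding, a box at x = 80 to 380 in the input maps to x = 0 to 600 in the original image.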

For more details on integrating ExecuTorch into your applications, visit the ExecuTorch Documentation.

Performance Optimization

Model Size Optimization

To reduce model size for deployment:

- Use Smaller Models: Start with YOLO26n (nano) for the smallest footprint
- Lower Input Resolution: Use smaller image sizes (e.g., imgsz=320 or imgsz=416)
- Quantization: Not yet available through the Ultralytics ExecuTorch export; support is expected in future versions
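Convolutional compute and activation memory scale roughly with the number of input pixels, so halving the side length cuts that work to about a quarter. A smaller imgsz mainly reduces runtime compute and memory; the weights themselves do not shrink. The sketch below is a rule of thumb, not a measured result, and actual speedups depend on the backend and device.

```python
def relative_pixel_count(imgsz, reference=640):
    """Pixel count of a square imgsz input relative to a square reference input."""
    return (imgsz * imgsz) / (reference * reference)


for size in (320, 416, 640):
    print(size, f"{relative_pixel_count(size):.2%}")
```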

Inference Speed Optimization

For faster inference:

- XNNPACK Backend: The default XNNPACK backend provides optimized CPU inference

- Hardware Acceleration: Use platform-specific delegates (e.g., Core ML for iOS)

- Batch Processing: Process multiple images when possible
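When comparing these options, it helps to measure latency on the target device rather than assume a gain. A minimal, framework-agnostic timing helper (hypothetical name) that reports the median latency of any zero-argument inference callable after a few discarded warm-up runs:

```python
import statistics
import time


def measure_latency_ms(run_once, warmup=3, iterations=20):
    """Time a zero-argument callable and return the median latency in ms.

    Warm-up runs are discarded so one-off costs (allocation, caches,
    lazy initialization) do not skew the result.
    """
    for _ in range(warmup):
        run_once()
    samples = []
    for _ in range(iterations):
        start = time.perf_counter()
        run_once()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)
```

For example, `measure_latency_ms(lambda: executorch_model.predict("bus.jpg", verbose=False))`, with the model loaded as in the Usage section. This measures the whole predict call, including pre- and post-processing, so it will not match the model-only times in the benchmark table below.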

Benchmarks

The Ultralytics team benchmarked YOLO26 models, comparing speed and accuracy between PyTorch and ExecuTorch.

Performance

| Model | Format | Status | Size (MB) | mAP50-95 (box) | Inference time (ms/im) |
|---|---|---|---|---|---|
| YOLO26n | PyTorch | ✅ | 5.3 | 0.4790 | 314.80 |
| YOLO26n | ExecuTorch | ✅ | 9.4 | 0.4800 | 142 |
| YOLO26s | PyTorch | ✅ | 19.5 | 0.5730 | 930.90 |
| YOLO26s | ExecuTorch | ✅ | 36.5 | 0.5780 | 376.1 |

Benchmarked with Ultralytics 8.4.9

Note

Inference time does not include pre- or post-processing.
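To read the trade-off in the table at a glance, the ratios can be computed directly from its values. This only restates the table's numbers and makes no claim about other hardware.

```python
# Values copied from the benchmark table above (Ultralytics 8.4.9)
benchmarks = {
    "YOLO26n": {"pytorch_ms": 314.80, "executorch_ms": 142.0,
                "pytorch_mb": 5.3, "executorch_mb": 9.4},
    "YOLO26s": {"pytorch_ms": 930.90, "executorch_ms": 376.1,
                "pytorch_mb": 19.5, "executorch_mb": 36.5},
}


def summarize(row):
    """Return (speedup, size_ratio) of ExecuTorch relative to PyTorch."""
    speedup = row["pytorch_ms"] / row["executorch_ms"]
    size_ratio = row["executorch_mb"] / row["pytorch_mb"]
    return speedup, size_ratio


for name, row in benchmarks.items():
    speedup, size_ratio = summarize(row)
    print(f"{name}: {speedup:.2f}x faster, {size_ratio:.2f}x larger file")
```

With these numbers, ExecuTorch inference is about 2.2x (YOLO26n) and 2.5x (YOLO26s) faster, while the .pte file is roughly 1.8 to 1.9 times the size of the .pt checkpoint.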

Troubleshooting

Common Issues

Issue: Python version error

Solution: ExecuTorch requires Python 3.10 or higher. Upgrade your Python installation, or create a fresh environment:

# Using conda
conda create -n executorch python=3.10
conda activate executorch

Issue: Export fails during first run

Solution: ExecuTorch may need to download and compile components on first use. Make sure the executorch package is up to date:

pip install --upgrade executorch

Issue: Import errors for ExecuTorch modules

Solution: Ensure ExecuTorch is properly installed:

pip install executorch --force-reinstall
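To tell these issues apart quickly, you can check the interpreter version and whether an executorch package is visible to it. A minimal standard-library sketch; `check_executorch_env` is a hypothetical helper, and a package that is found can still fail to import if it is broken, which is when the reinstall above helps.

```python
import importlib.util
import sys


def check_executorch_env(min_python=(3, 10)):
    """Report whether this interpreter meets the ExecuTorch export requirements."""
    return {
        "python_ok": sys.version_info[:2] >= min_python,
        "python_version": ".".join(map(str, sys.version_info[:3])),
        # find_spec returns None when no installed package named executorch is found
        "executorch_installed": importlib.util.find_spec("executorch") is not None,
    }


for key, value in check_executorch_env().items():
    print(f"{key}: {value}")
```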

For more troubleshooting help, visit the Ultralytics GitHub Issues or the ExecuTorch Documentation.

Summary

Exporting YOLO26 models to ExecuTorch format enables efficient deployment on mobile and edge devices. With PyTorch-native integration, cross-platform support, and optimized performance, ExecuTorch is an excellent choice for edge AI applications.

Key takeaways:

- ExecuTorch provides PyTorch-native edge deployment with excellent performance

- Export is simple with the format='executorch' parameter
- Models are optimized for mobile CPUs via the XNNPACK backend

- Supports iOS, Android, and embedded Linux platforms

- Requires Python 3.10+; the FlatBuffers compiler is downloaded automatically during the first export

FAQ

How do I export a YOLO26 model to ExecuTorch format?

Export a YOLO26 model to ExecuTorch using either Python or CLI:

from ultralytics import YOLO

model = YOLO("yolo26n.pt")
model.export(format="executorch")

or

yolo export model=yolo26n.pt format=executorch

What are the system requirements for ExecuTorch export?

ExecuTorch export requires:

- Python 3.10 or higher

- executorch package (install via pip install executorch)
- PyTorch (installed automatically with ultralytics)

Note: During the first export, ExecuTorch will download and compile necessary components including the FlatBuffers compiler automatically.

Can I run inference with ExecuTorch models directly in Python?

Yes, for testing and validation. Pass the exported directory to YOLO() and run inference as usual, as in the Usage section: YOLO("yolo26n_executorch_model").predict(...). For production, ExecuTorch models (.pte files) are meant to run on mobile and edge devices, so integrate them into your target application using the ExecuTorch runtime libraries.

What platforms are supported by ExecuTorch?

ExecuTorch supports:

- Mobile: iOS and Android

- Embedded Linux: Raspberry Pi, NVIDIA Jetson, and other ARM devices

- Desktop: Linux, macOS, and Windows (for development)

How does ExecuTorch compare to TFLite for mobile deployment?

Both ExecuTorch and TFLite are excellent for mobile deployment:

- ExecuTorch: Better PyTorch integration, native PyTorch workflow, growing ecosystem

- TFLite: More mature, wider hardware support, more deployment examples

Choose ExecuTorch if you're already using PyTorch and want a native deployment path. Choose TFLite for maximum compatibility and mature tooling.

Can I use ExecuTorch models with GPU acceleration?

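Yes. ExecuTorch supports hardware acceleration through various backends, although the Ultralytics export uses the XNNPACK (CPU) backend by default:

- Mobile GPU: Via delegates such as Vulkan, or MPS (Metal) and Core ML on Apple devices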

- NPU/DSP: Via platform-specific delegates

- Default: XNNPACK for optimized CPU inference

Refer to the ExecuTorch Documentation for backend-specific setup.