Simple Utilities

The ultralytics package provides a variety of utilities to support, enhance, and accelerate your workflows. Many more are available, but this guide highlights some of the most useful ones for developers and serves as a practical reference for programming with Ultralytics tools.


Data

Auto Labeling / Annotations

Dataset annotation is a resource-intensive and time-consuming process. If you have an Ultralytics YOLO object detection model trained on a reasonable amount of data, you can use it together with SAM to auto-annotate additional data in segmentation format.

from ultralytics.data.annotator import auto_annotate

auto_annotate(

data="path/to/new/data",

det_model="yolo26n.pt",

sam_model="mobile_sam.pt",

device="cuda",

output_dir="path/to/save_labels",

)

This function does not return any value. For further details:

- See the reference section for annotator.auto_annotate for more insight into how the function operates.

- Use it in combination with the segments2boxes function to generate object detection bounding boxes as well.

Visualize Dataset Annotations

This function visualizes YOLO annotations on an image before training, which helps identify and correct wrong annotations that could lead to incorrect detection results. It draws bounding boxes, labels objects with class names, and adjusts the text color based on the background's luminance for better readability.

from ultralytics.data.utils import visualize_image_annotations

label_map = { # Define the label map with all annotated class labels.

0: "person",

1: "car",

}

# Visualize

visualize_image_annotations(

"path/to/image.jpg", # Input image path.

"path/to/annotations.txt", # Annotation file path for the image.

label_map,

)

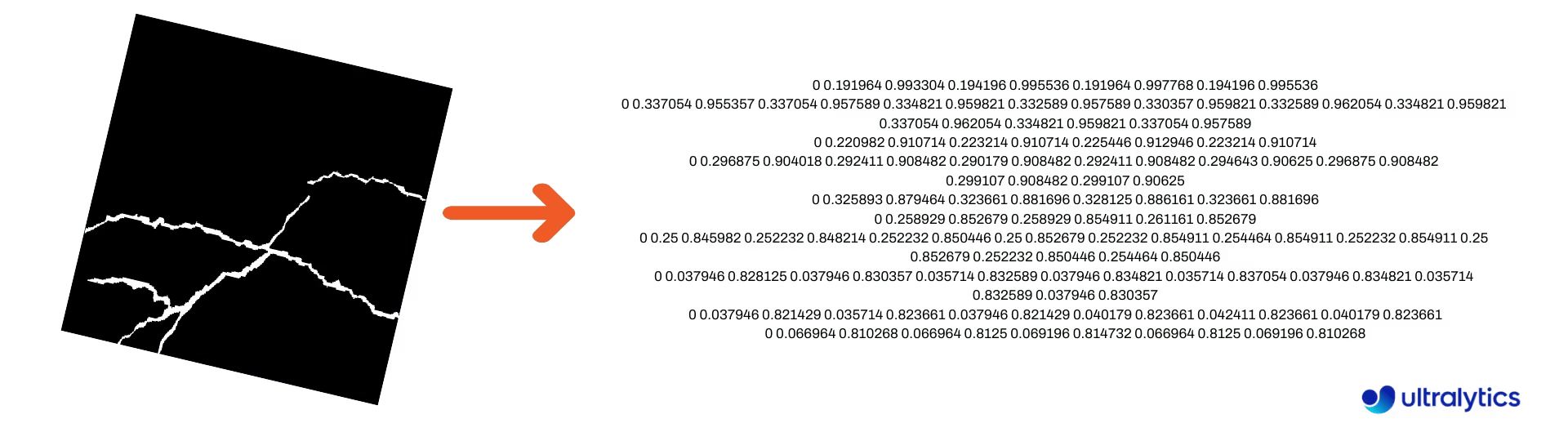
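The luminance-based text color choice described above can be illustrated with a small standalone sketch. The helper name `pick_text_color`, the Rec. 601 luma weights, and the 127.5 threshold are assumptions made for illustration; they are not taken from the Ultralytics implementation.

```python
def pick_text_color(background_rgb, threshold=127.5):
    """Return black text for bright backgrounds and white text for dark ones."""
    r, g, b = background_rgb
    # Rec. 601 luma approximation of perceived brightness on a 0-255 scale
    # (assumed weighting, chosen only for this sketch).
    luminance = 0.299 * r + 0.587 * g + 0.114 * b
    return (0, 0, 0) if luminance > threshold else (255, 255, 255)


print(pick_text_color((250, 240, 30)))  # bright yellow background
print(pick_text_color((20, 30, 90)))  # dark blue background
```

Any monotonic brightness measure would serve here; the point is only that the label color is picked per box from the fill color behind it.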

Convert Segmentation Masks into YOLO Format

Use this to convert a dataset of segmentation mask images into the Ultralytics YOLO segmentation format. The function takes the directory containing the binary-format mask images and converts them into YOLO segmentation format.

The converted masks are saved in the specified output directory.

from ultralytics.data.converter import convert_segment_masks_to_yolo_seg

# `classes` is the total number of classes in the dataset;

# for the COCO dataset, that is 80 classes.

convert_segment_masks_to_yolo_seg(masks_dir="path/to/masks_dir", output_dir="path/to/output_dir", classes=80)
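For orientation, a YOLO segmentation label file holds one line per object: the class index followed by the polygon's x and y coordinates, normalized by image width and height. The sketch below formats one such line; `to_yolo_seg_line` is a hypothetical helper written for this guide, not part of the converter shown above.

```python
def to_yolo_seg_line(class_id, polygon_px, img_w, img_h):
    """Format one pixel-space polygon as a YOLO segmentation label line."""
    coords = []
    for x, y in polygon_px:
        coords.append(f"{x / img_w:.6g}")  # normalize x by image width
        coords.append(f"{y / img_h:.6g}")  # normalize y by image height
    return " ".join([str(class_id), *coords])


line = to_yolo_seg_line(0, [(10, 20), (30, 40), (10, 40)], img_w=100, img_h=100)
print(line)  # 0 0.1 0.2 0.3 0.4 0.1 0.4
```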

Convert COCO into YOLO Format

Use this to convert COCO JSON annotations into the YOLO format. For object detection (bounding box) datasets, set both use_segments and use_keypoints to False.

from ultralytics.data.converter import convert_coco

convert_coco(

"coco/annotations/",

use_segments=False,

use_keypoints=False,

cls91to80=True,

)

For additional information about the convert_coco function, visit the reference page.
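The core of a COCO-to-YOLO box conversion is a change of coordinate convention: COCO stores a box as pixel `[x_min, y_min, width, height]`, while YOLO detection labels use a normalized box center plus width and height. A minimal sketch of that arithmetic follows; `coco_box_to_yolo` is a hypothetical helper, and the class-ID remapping that `cls91to80` controls is not covered.

```python
def coco_box_to_yolo(box, img_w, img_h):
    """Convert a COCO pixel box [x_min, y_min, w, h] to normalized (cx, cy, w, h)."""
    x_min, y_min, w, h = box
    cx = (x_min + w / 2) / img_w  # box center x, normalized to 0-1
    cy = (y_min + h / 2) / img_h  # box center y, normalized to 0-1
    return cx, cy, w / img_w, h / img_h


print(coco_box_to_yolo([10, 20, 30, 40], img_w=100, img_h=200))
```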

Get Bounding Box Dimensions

import cv2

from ultralytics import YOLO

from ultralytics.utils.plotting import Annotator

model = YOLO("yolo26n.pt") # Load pretrain or fine-tune model

# Process the image

source = cv2.imread("path/to/image.jpg")

results = model(source)

# Extract results

annotator = Annotator(source, example=model.names)

for box in results[0].boxes.xyxy.cpu():

    width, height, area = annotator.get_bbox_dimension(box)

    print(f"Bounding Box Width {width.item()}, Height {height.item()}, Area {area.item()}")

Convert Bounding Boxes to Segments

If you have existing x y w h bounding box data, convert it to segments with the yolo_bbox2segment function. Organize the image and annotation files as follows:

data
|__ images
    ├─ 001.jpg
    ├─ 002.jpg
    ├─ ..
    └─ NNN.jpg
|__ labels
    ├─ 001.txt
    ├─ 002.txt
    ├─ ..
    └─ NNN.txt

from ultralytics.data.converter import yolo_bbox2segment

yolo_bbox2segment(

im_dir="path/to/images",

save_dir=None, # saved to "labels-segment" in images directory

sam_model="sam_b.pt",

)

Visit the yolo_bbox2segment reference page for more information about the function.

Convert Segments to Bounding Boxes

If you have a dataset that uses the segmentation dataset format, you can easily convert its segments into upright (or horizontal) bounding boxes (x y w h format) with this function.

import numpy as np

from ultralytics.utils.ops import segments2boxes

segments = np.array(

[

[805, 392, 797, 400, ..., 808, 714, 808, 392],

[115, 398, 113, 400, ..., 150, 400, 149, 298],

[267, 412, 265, 413, ..., 300, 413, 299, 412],

]

)

segments2boxes([s.reshape(-1, 2) for s in segments])

# >>> array([[ 741.66, 631.12, 133.31, 479.25],

# [ 146.81, 649.69, 185.62, 502.88],

# [ 281.81, 636.19, 118.12, 448.88]],

# dtype=float32) # xywh bounding boxes

To understand how this function works, visit the reference page.
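Conceptually, turning a segment into an upright box means taking the extremes of the polygon's points. The pure-Python sketch below shows that idea for a single polygon and returns a center-based (x, y, w, h) box; `segment_to_xywh` is a hypothetical name, and this is not the library's implementation.

```python
def segment_to_xywh(points):
    """Return the (center_x, center_y, width, height) box enclosing (x, y) points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)
    return ((x_min + x_max) / 2, (y_min + y_max) / 2, x_max - x_min, y_max - y_min)


print(segment_to_xywh([(0, 0), (4, 0), (4, 2), (0, 2)]))  # (2.0, 1.0, 4, 2)
```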

Utilities

Image Compression

Compress a single image file to a reduced size while preserving its aspect ratio and quality. If the input image is smaller than the maximum dimension, it is not resized.

from pathlib import Path

from ultralytics.data.utils import compress_one_image

for f in Path("path/to/dataset").rglob("*.jpg"):

    compress_one_image(f)
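The "preserve aspect ratio, skip small images" rule can be sketched as a pure size calculation. `fit_within` is a hypothetical helper, and the `max_dim=1920` default is an assumed value for illustration rather than a documented setting of `compress_one_image`.

```python
def fit_within(width, height, max_dim=1920):
    """Return the (width, height) an image would have after fitting inside max_dim."""
    longest = max(width, height)
    if longest <= max_dim:
        return width, height  # already small enough: leave untouched
    scale = max_dim / longest  # same factor on both axes keeps the aspect ratio
    return int(width * scale), int(height * scale)


print(fit_within(3840, 2160))  # (1920, 1080)
print(fit_within(800, 600))  # (800, 600)
```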

Auto-split Dataset

Automatically split a dataset into train/val/test splits and save the resulting splits into autosplit_*.txt files. This function uses random sampling, which is excluded when using the fraction argument for training.

from ultralytics.data.split import autosplit

autosplit(

path="path/to/images",

weights=(0.9, 0.1, 0.0), # (train, validation, test) fractional splits

annotated_only=False, # split only images with annotation file when True

)

See the Reference page for additional details on this function.
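To make the weighted random sampling concrete, here is a minimal sketch that assigns file names to splits according to the same kind of `(train, val, test)` weights. `assign_splits` and its `seed` parameter are assumptions for illustration; the real function writes autosplit_*.txt files and may differ in detail.

```python
import random


def assign_splits(names, weights=(0.9, 0.1, 0.0), seed=0):
    """Randomly assign each name to train/val/test in proportion to weights."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    splits = {"train": [], "val": [], "test": []}
    keys = list(splits)
    for name in names:
        key = rng.choices(keys, weights=weights, k=1)[0]
        splits[key].append(name)
    return splits


result = assign_splits([f"{i:03d}.jpg" for i in range(100)])
print({k: len(v) for k, v in result.items()})
```

Because each image is drawn independently, the split sizes only approximate the requested fractions on small datasets.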

Segment-polygon to Binary Mask

Convert a single polygon (as a list) to a binary mask of the specified image size. The polygon should have the shape [N, 2], where N is the number of (x, y) points defining the polygon contour.

Warning

N must always be even.

import numpy as np

from ultralytics.data.utils import polygon2mask

imgsz = (1080, 810)

polygon = np.array([805, 392, 797, 400, ..., 808, 714, 808, 392]) # (238, 2)

mask = polygon2mask(

imgsz, # tuple

[polygon], # input as list

color=255, # 8-bit binary

downsample_ratio=1,

)
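The example above passes the polygon as a flat run of alternating x and y values. One reading of the warning is that such a flat list must contain an even number of values so that it pairs cleanly into (x, y) points; the sketch below checks that and performs the pairing. `flat_to_points` is a hypothetical helper and does not rasterize a mask.

```python
def flat_to_points(flat):
    """Pair a flat [x0, y0, x1, y1, ...] list into [(x0, y0), (x1, y1), ...]."""
    if len(flat) % 2:
        raise ValueError("expected an even number of values (x, y pairs)")
    return [(flat[i], flat[i + 1]) for i in range(0, len(flat), 2)]


print(flat_to_points([805, 392, 797, 400]))  # [(805, 392), (797, 400)]
```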

Bounding Boxes

Bounding Box (Horizontal) Instances

To manage bounding box data, the Bboxes class helps convert between box coordinate formats, scale box dimensions, calculate areas, include offsets, and more.

import numpy as np

from ultralytics.utils.instance import Bboxes

boxes = Bboxes(

bboxes=np.array(

[

[22.878, 231.27, 804.98, 756.83],

[48.552, 398.56, 245.35, 902.71],

[669.47, 392.19, 809.72, 877.04],

[221.52, 405.8, 344.98, 857.54],

[0, 550.53, 63.01, 873.44],

[0.0584, 254.46, 32.561, 324.87],

]

),

format="xyxy",

)

boxes.areas()

# >>> array([ 4.1104e+05, 99216, 68000, 55772, 20347, 2288.5])

boxes.convert("xywh")

print(boxes.bboxes)

# >>> array(

# [[ 413.93, 494.05, 782.1, 525.56],

# [ 146.95, 650.63, 196.8, 504.15],

# [ 739.6, 634.62, 140.25, 484.85],

# [ 283.25, 631.67, 123.46, 451.74],

# [ 31.505, 711.99, 63.01, 322.91],

# [ 16.31, 289.67, 32.503, 70.41]]

# )

See the Bboxes reference section for more attributes and methods.
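As a sanity check on the areas printed above, the area of an xyxy box is simply its width times its height. The hypothetical helper below reproduces the first and last values from the example using plain Python.

```python
def xyxy_area(box):
    """Area of an (x1, y1, x2, y2) box; degenerate boxes yield 0."""
    x1, y1, x2, y2 = box
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)


print(xyxy_area([22.878, 231.27, 804.98, 756.83]))  # about 4.1104e+05
print(xyxy_area([0.0584, 254.46, 32.561, 324.87]))  # about 2288.5
```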

Tip

Many of the following functions (and more) can be accessed through the Bboxes class, but if you prefer to work with the functions directly, see the next subsections for how to import them independently.

Scaling Boxes

When scaling an image up or down, you can scale the corresponding bounding box coordinates to match using ultralytics.utils.ops.scale_boxes.

import cv2 as cv

import numpy as np

from ultralytics.utils.ops import scale_boxes

image = cv.imread("ultralytics/assets/bus.jpg")

h, w, c = image.shape

resized = cv.resize(image, None, (), fx=1.2, fy=1.2)

new_h, new_w, _ = resized.shape

xyxy_boxes = np.array(

[

[22.878, 231.27, 804.98, 756.83],

[48.552, 398.56, 245.35, 902.71],

[669.47, 392.19, 809.72, 877.04],

[221.52, 405.8, 344.98, 857.54],

[0, 550.53, 63.01, 873.44],

[0.0584, 254.46, 32.561, 324.87],

]

)

new_boxes = scale_boxes(

img1_shape=(h, w), # original image dimensions

boxes=xyxy_boxes, # boxes from original image

img0_shape=(new_h, new_w), # resized image dimensions (scale to)

ratio_pad=None,

padding=False,

xywh=False,

)

print(new_boxes)

# >>> array(

# [[ 27.454, 277.52, 965.98, 908.2],

# [ 58.262, 478.27, 294.42, 1083.3],

# [ 803.36, 470.63, 971.66, 1052.4],

# [ 265.82, 486.96, 413.98, 1029],

# [ 0, 660.64, 75.612, 1048.1],

# [ 0.0701, 305.35, 39.073, 389.84]]

# )
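The numbers above follow from multiplying each coordinate by the resize factor on its axis. The sketch below shows that arithmetic for one box; `scale_xyxy` is a hypothetical helper that ignores padding and clipping, and the 1080 x 810 source size is an assumed example value.

```python
def scale_xyxy(box, from_shape, to_shape):
    """Scale an (x1, y1, x2, y2) box from one (height, width) shape to another."""
    (from_h, from_w), (to_h, to_w) = from_shape, to_shape
    gain_x, gain_y = to_w / from_w, to_h / from_h  # per-axis resize factors
    x1, y1, x2, y2 = box
    return [x1 * gain_x, y1 * gain_y, x2 * gain_x, y2 * gain_y]


# Assumed sizes: a 1080 x 810 image enlarged by a factor of 1.2 on both axes.
print(scale_xyxy([22.878, 231.27, 804.98, 756.83], (1080, 810), (1296, 972)))
```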

Bounding Box Format Conversions

XYXY → XYWH

Convert bounding box coordinates from (x1, y1, x2, y2) format to (x, y, width, height) format, where (x1, y1) is the top-left corner and (x2, y2) is the bottom-right corner. As the example output shows, (x, y) is the box center.

import numpy as np

from ultralytics.utils.ops import xyxy2xywh

xyxy_boxes = np.array(

[

[22.878, 231.27, 804.98, 756.83],

[48.552, 398.56, 245.35, 902.71],

[669.47, 392.19, 809.72, 877.04],

[221.52, 405.8, 344.98, 857.54],

[0, 550.53, 63.01, 873.44],

[0.0584, 254.46, 32.561, 324.87],

]

)

xywh = xyxy2xywh(xyxy_boxes)

print(xywh)

# >>> array(

# [[ 413.93, 494.05, 782.1, 525.56],

# [ 146.95, 650.63, 196.8, 504.15],

# [ 739.6, 634.62, 140.25, 484.85],

# [ 283.25, 631.67, 123.46, 451.74],

# [ 31.505, 711.99, 63.01, 322.91],

# [ 16.31, 289.67, 32.503, 70.41]]

# )
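For readers who want to see the arithmetic behind this conversion, the following pure-Python sketch converts one box each way. The helper names are hypothetical; the library functions operate on whole arrays or tensors rather than single lists.

```python
def xyxy_to_xywh(box):
    """(x1, y1, x2, y2) corners -> (center_x, center_y, width, height)."""
    x1, y1, x2, y2 = box
    return [(x1 + x2) / 2, (y1 + y2) / 2, x2 - x1, y2 - y1]


def xywh_to_xyxy(box):
    """(center_x, center_y, width, height) -> (x1, y1, x2, y2) corners."""
    cx, cy, w, h = box
    return [cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2]


xywh = xyxy_to_xywh([22.878, 231.27, 804.98, 756.83])
print(xywh)  # roughly [413.93, 494.05, 782.1, 525.56]
print(xywh_to_xyxy(xywh))  # back to the original corners (up to float rounding)
```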

All Bounding Box Conversions

from ultralytics.utils.ops import (

ltwh2xywh,

ltwh2xyxy,

xywh2ltwh, # xywh → top-left corner, w, h

xywh2xyxy,

xywhn2xyxy, # normalized → pixel

xyxy2ltwh, # xyxy → top-left corner, w, h

xyxy2xywhn, # pixel → normalized

)

for func in (ltwh2xywh, ltwh2xyxy, xywh2ltwh, xywh2xyxy, xywhn2xyxy, xyxy2ltwh, xyxy2xywhn):

    help(func) # print function docstrings

See the docstring for each function or visit the ultralytics.utils.ops reference page to read more.
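The two naming conventions in that import list that differ most from xyxy are `ltwh` (top-left corner plus width and height) and the trailing `n` (coordinates normalized by image size). A minimal sketch of both, using hypothetical single-box helpers rather than the library functions:

```python
def xyxy_to_ltwh(box):
    """(x1, y1, x2, y2) -> (left, top, width, height) in pixels."""
    x1, y1, x2, y2 = box
    return [x1, y1, x2 - x1, y2 - y1]


def xyxy_to_xywhn(box, img_w, img_h):
    """(x1, y1, x2, y2) pixels -> normalized (center_x, center_y, width, height)."""
    x1, y1, x2, y2 = box
    return [(x1 + x2) / 2 / img_w, (y1 + y2) / 2 / img_h, (x2 - x1) / img_w, (y2 - y1) / img_h]


print(xyxy_to_ltwh([10, 20, 50, 80]))  # [10, 20, 40, 60]
print(xyxy_to_xywhn([10, 20, 50, 80], img_w=100, img_h=200))
```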

Plotting

Annotation utilities

Ultralytics includes an Annotator class for annotating various data types. It is best used with object detection bounding boxes, pose keypoints, and oriented bounding boxes.

Box Annotation

Python Examples using Ultralytics YOLO 🚀

import cv2 as cv

import numpy as np

from ultralytics.utils.plotting import Annotator, colors

names = {

0: "person",

5: "bus",

11: "stop sign",

}

image = cv.imread("ultralytics/assets/bus.jpg")

ann = Annotator(

image,

line_width=None, # default auto-size

font_size=None, # default auto-size

font="Arial.ttf", # must be ImageFont compatible

pil=False, # use PIL, otherwise uses OpenCV

)

xyxy_boxes = np.array(

[

[5, 22.878, 231.27, 804.98, 756.83], # class-idx x1 y1 x2 y2

[0, 48.552, 398.56, 245.35, 902.71],

[0, 669.47, 392.19, 809.72, 877.04],

[0, 221.52, 405.8, 344.98, 857.54],

[0, 0, 550.53, 63.01, 873.44],

[11, 0.0584, 254.46, 32.561, 324.87],

]

)

for nb, box in enumerate(xyxy_boxes):

    c_idx, *box = box

    label = f"{str(nb).zfill(2)}:{names.get(int(c_idx))}"

    ann.box_label(box, label, color=colors(c_idx, bgr=True))

image_with_bboxes = ann.result()

import cv2 as cv

import numpy as np

from ultralytics.utils.plotting import Annotator, colors

obb_names = {10: "small vehicle"}

obb_image = cv.imread("datasets/dota8/images/train/P1142__1024__0___824.jpg")

obb_boxes = np.array(

[

[0, 635, 560, 919, 719, 1087, 420, 803, 261], # class-idx x1 y1 x2 y2 x3 y3 x4 y4

[0, 331, 19, 493, 260, 776, 70, 613, -171],

[9, 869, 161, 886, 147, 851, 101, 833, 115],

]

)

ann = Annotator(

obb_image,

line_width=None, # default auto-size

font_size=None, # default auto-size

font="Arial.ttf", # must be ImageFont compatible

pil=False, # use PIL, otherwise uses OpenCV

)

for obb in obb_boxes:

    c_idx, *obb = obb

    obb = np.array(obb).reshape(-1, 4, 2).squeeze()

    label = f"{obb_names.get(int(c_idx))}"

    ann.box_label(

        obb,

        label,

        color=colors(c_idx, True),

    )

image_with_obb = ann.result()

Names can be taken from model.names when working with detection results.

Also see the Annotator Reference Page for additional insight.

Ultralytics Sweep Annotation

Sweep Annotation Using Ultralytics Utilities

import cv2

import numpy as np

from ultralytics import YOLO

from ultralytics.solutions.solutions import SolutionAnnotator

from ultralytics.utils.plotting import colors

# User defined video path and model file

cap = cv2.VideoCapture("path/to/video.mp4")

model = YOLO(model="yolo26s-seg.pt") # Model file, e.g., yolo26s.pt or yolo26m-seg.pt

if not cap.isOpened():

    print("Error: Could not open video.")

    exit()

# Initialize the video writer object.

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

video_writer = cv2.VideoWriter("ultralytics.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

masks = None # Initialize variable to store masks data

f = 0 # Initialize frame count variable for enabling mouse event.

line_x = w # Store width of line.

dragging = False # Initialize bool variable for line dragging.

classes = model.names # Store model classes names for plotting.

window_name = "Ultralytics Sweep Annotator"

def drag_line(event, x, _, flags, param):

    """Mouse callback function to enable dragging a vertical sweep line across the video frame."""

    global line_x, dragging

    if event == cv2.EVENT_LBUTTONDOWN or (flags & cv2.EVENT_FLAG_LBUTTON):

        line_x = max(0, min(x, w))

        dragging = True

while cap.isOpened(): # Loop over the video capture object.

    ret, im0 = cap.read()

    if not ret:

        break

    f = f + 1 # Increment frame count.

    count = 0 # Re-initialize count variable on every frame for precise counts.

    results = model.track(im0, persist=True)[0]

    if f == 1:

        cv2.namedWindow(window_name)

        cv2.setMouseCallback(window_name, drag_line)

    annotator = SolutionAnnotator(im0)

    if results.boxes.is_track:

        if results.masks is not None:

            masks = [np.array(m, dtype=np.int32) for m in results.masks.xy]

        boxes = results.boxes.xyxy.tolist()

        track_ids = results.boxes.id.int().cpu().tolist()

        clss = results.boxes.cls.cpu().tolist()

        for mask, box, cls, t_id in zip(masks or [None] * len(boxes), boxes, clss, track_ids):

            color = colors(t_id, True) # Assign different color to each tracked object.

            label = f"{classes[cls]}:{t_id}"

            if mask is not None and mask.size > 0:

                if box[0] > line_x:

                    count += 1

                    cv2.polylines(im0, [mask], True, color, 2)

                    x, y = mask.min(axis=0)

                    (w_m, _), _ = cv2.getTextSize(label, cv2.FONT_HERSHEY_SIMPLEX, 0.5, 1)

                    cv2.rectangle(im0, (x, y - 20), (x + w_m, y), color, -1)

                    cv2.putText(im0, label, (x, y - 5), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 1)

            else:

                if box[0] > line_x:

                    count += 1

                    annotator.box_label(box=box, color=color, label=label)

    # Generate draggable sweep line

    annotator.sweep_annotator(line_x=line_x, line_y=h, label=f"COUNT:{count}")

    cv2.imshow(window_name, im0)

    video_writer.write(im0)

    if cv2.waitKey(1) & 0xFF == ord("q"):

        break

# Release the resources

cap.release()

video_writer.release()

cv2.destroyAllWindows()

Find additional details about the sweep_annotator method in the reference section.

Adaptive label Annotation

Warning

Starting from Ultralytics v8.3.167, circle_label and text_label have been replaced by a unified adaptive_label function. You can now specify the annotation type using the shape argument:

- Rectangle: annotator.adaptive_label(box, label=names[int(cls)], color=colors(cls, True), shape="rect")

- Circle: annotator.adaptive_label(box, label=names[int(cls)], color=colors(cls, True), shape="circle")


Adaptive label Annotation using Ultralytics Utilities

import cv2

from ultralytics import YOLO

from ultralytics.solutions.solutions import SolutionAnnotator

from ultralytics.utils.plotting import colors

model = YOLO("yolo26s.pt")

names = model.names

cap = cv2.VideoCapture("path/to/video.mp4")

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

writer = cv2.VideoWriter("Ultralytics circle annotation.avi", cv2.VideoWriter_fourcc(*"MJPG"), fps, (w, h))

while True:

    ret, im0 = cap.read()

    if not ret:

        break

    annotator = SolutionAnnotator(im0)

    results = model.predict(im0)[0]

    boxes = results.boxes.xyxy.cpu()

    clss = results.boxes.cls.cpu().tolist()

    for box, cls in zip(boxes, clss):

        annotator.adaptive_label(box, label=names[int(cls)], color=colors(cls, True), shape="circle")

    writer.write(im0)

    cv2.imshow("Ultralytics circle annotation", im0)

    if cv2.waitKey(1) & 0xFF == ord("q"):

        break

writer.release()

cap.release()

cv2.destroyAllWindows()

import cv2

from ultralytics import YOLO

from ultralytics.solutions.solutions import SolutionAnnotator

from ultralytics.utils.plotting import colors

model = YOLO("yolo26s.pt")

names = model.names

cap = cv2.VideoCapture("path/to/video.mp4")

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

writer = cv2.VideoWriter("Ultralytics text annotation.avi", cv2.VideoWriter_fourcc(*"MJPG"), fps, (w, h))

while True:

    ret, im0 = cap.read()

    if not ret:

        break

    annotator = SolutionAnnotator(im0)

    results = model.predict(im0)[0]

    boxes = results.boxes.xyxy.cpu()

    clss = results.boxes.cls.cpu().tolist()

    for box, cls in zip(boxes, clss):

        annotator.adaptive_label(box, label=names[int(cls)], color=colors(cls, True), shape="rect")

    writer.write(im0)

    cv2.imshow("Ultralytics text annotation", im0)

    if cv2.waitKey(1) & 0xFF == ord("q"):

        break

writer.release()

cap.release()

cv2.destroyAllWindows()

See the SolutionAnnotator Reference Page for additional insight.

Miscellaneous

Code Profiling

Measure how long code takes to run, either with a with block or as a decorator.

from ultralytics.utils.ops import Profile

with Profile(device="cuda:0") as dt:

    pass # operation to measure

print(dt)

# >>> "Elapsed time is 9.5367431640625e-07 s"
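To show how one object can serve as both a with block and a decorator, here is a minimal standard-library sketch built on `contextlib.ContextDecorator`. The `Timer` class is hypothetical and is not the Ultralytics `Profile` class; in particular, it does no GPU synchronization, which timing CUDA work generally requires.

```python
import time
from contextlib import ContextDecorator


class Timer(ContextDecorator):
    """Record wall-clock time spent inside a with block or a decorated call."""

    def __init__(self):
        self.elapsed = 0.0

    def __enter__(self):
        self._start = time.perf_counter()
        return self

    def __exit__(self, *exc):
        self.elapsed = time.perf_counter() - self._start
        return False  # never swallow exceptions


with Timer() as t:
    sum(range(100_000))  # operation to measure
print(f"Elapsed time is {t.elapsed} s")

timer = Timer()


@timer  # the same instance times every call to work()
def work():
    return sum(range(1000))


work()
print(f"Last call took {timer.elapsed} s")
```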

Ultralytics Supported Formats

Need to use the image or video formats supported by Ultralytics programmatically? Use these constants:

from ultralytics.data.utils import IMG_FORMATS, VID_FORMATS

print(IMG_FORMATS)

# {'avif', 'bmp', 'dng', 'heic', 'jp2', 'jpeg', 'jpeg2000', 'jpg', 'mpo', 'png', 'tif', 'tiff', 'webp'}

print(VID_FORMATS)

# {'asf', 'avi', 'gif', 'm4v', 'mkv', 'mov', 'mp4', 'mpeg', 'mpg', 'ts', 'wmv', 'webm'}
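A typical use of such a set is filtering file paths by extension. The sketch below uses a small hard-coded set so that it runs without the package installed; `IMAGE_EXTS` and `is_image` are hypothetical stand-ins, and in practice you would pass the imported constant instead.

```python
from pathlib import PurePath

IMAGE_EXTS = {"jpg", "jpeg", "png", "bmp"}  # hypothetical subset for illustration


def is_image(path, allowed=IMAGE_EXTS):
    """Return True if the path's extension (case-insensitive) is in allowed."""
    return PurePath(path).suffix[1:].lower() in allowed


files = ["a/b/photo.JPG", "clip.mp4", "README", "scan.png"]
print([f for f in files if is_image(f)])  # ['a/b/photo.JPG', 'scan.png']
```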

Make Divisible

Calculate the nearest whole number at or above x that is evenly divisible by y (the examples below round 7 up to 9 and to 8).

from ultralytics.utils.ops import make_divisible

make_divisible(7, 3)

# >>> 9

make_divisible(7, 2)

# >>> 8
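The two results above are consistent with rounding up to the next multiple of the divisor. A minimal sketch of that behavior, using a hypothetical `round_up_to_multiple` helper rather than the library function:

```python
import math


def round_up_to_multiple(x, divisor):
    """Return the smallest multiple of divisor that is greater than or equal to x."""
    return math.ceil(x / divisor) * divisor


print(round_up_to_multiple(7, 3))  # 9
print(round_up_to_multiple(7, 2))  # 8
print(round_up_to_multiple(8, 4))  # 8 (already divisible, unchanged)
```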

FAQ

What utilities are included in the Ultralytics package to enhance machine learning workflows?

The Ultralytics package includes utilities designed to streamline and optimize machine learning workflows. Key utilities include auto-annotation for labeling datasets, converting COCO to YOLO format with convert_coco, compressing images, and dataset auto-splitting. These tools reduce manual effort, ensure consistency, and improve data processing efficiency.

How can I use Ultralytics to auto-label my dataset?

If you have a pretrained Ultralytics YOLO object detection model, you can use it with the SAM model to auto-annotate your dataset in segmentation format. Here is an example:

from ultralytics.data.annotator import auto_annotate

auto_annotate(

data="path/to/new/data",

det_model="yolo26n.pt",

sam_model="mobile_sam.pt",

device="cuda",

output_dir="path/to/save_labels",

)

For more details, check the auto_annotate reference section.

How do I convert COCO dataset annotations to YOLO format in Ultralytics?

To convert COCO JSON annotations into YOLO format for object detection, you can use the convert_coco utility. Here is a sample code snippet:

from ultralytics.data.converter import convert_coco

convert_coco(

"coco/annotations/",

use_segments=False,

use_keypoints=False,

cls91to80=True,

)

For additional information, visit the convert_coco reference page.

What is the purpose of the YOLO Data Explorer in the Ultralytics package?

The YOLO Explorer is a tool introduced in the 8.1.0 update to improve dataset understanding. It lets you use text queries to find object instances in your dataset, which makes it easier to analyze and manage your data. The tool provides insight into dataset composition and distribution, helping to improve model training and performance.

How can I convert bounding boxes to segments in Ultralytics?

To convert existing bounding box data (in x y w h format) to segments, you can use the yolo_bbox2segment function. Ensure your files are organized with separate directories for images and labels.

from ultralytics.data.converter import yolo_bbox2segment

yolo_bbox2segment(

im_dir="path/to/images",

save_dir=None, # saved to "labels-segment" in the images directory

sam_model="sam_b.pt",

)

For more information, visit the yolo_bbox2segment reference page.