KITTI Dataset

The KITTI dataset is one of the most influential benchmark datasets for autonomous driving and computer vision. Released by the Karlsruhe Institute of Technology and Toyota Technological Institute at Chicago, it contains stereo camera, LiDAR, and GPS/IMU data collected in real-world driving scenarios.

It is widely used for evaluating algorithms in object detection, depth estimation, optical flow, and visual odometry. The dataset is fully compatible with Ultralytics YOLO26 for 2D object detection tasks and can be easily integrated into the Ultralytics platform for training and evaluation.

Dataset Structure

Warning

The original KITTI test set is excluded here because it does not include ground-truth annotations.

In total, the dataset includes 7,481 images, each paired with detailed annotations for objects such as cars, pedestrians, cyclists, and other road elements. The dataset is divided into two main subsets:

- Training set: Contains 5,985 images with annotated labels used for model training.

- Validation set: Includes 1,496 images with corresponding annotations used for performance evaluation and benchmarking.
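As a quick check of the split arithmetic, the two subsets add up to the 7,481 annotated images and divide them roughly 80/20. A minimal sketch using only the counts listed above:

```python
# Image counts for the two subsets listed above
train_images = 5985
val_images = 1496

# Total annotated images and the share held by each subset
total = train_images + val_images
train_fraction = train_images / total
val_fraction = val_images / total

print(f"total={total}, train={train_fraction:.1%}, val={val_fraction:.1%}")
# prints: total=7481, train=80.0%, val=20.0%
```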

Applications

The KITTI dataset supports advances in autonomous driving and robotics through tasks such as:

- Autonomous vehicle perception: Training models to detect and track vehicles, pedestrians, and obstacles for safe navigation in self-driving systems.

- 3D scene understanding: Supporting depth estimation, stereo vision, and 3D object localization to help machines understand spatial environments.

- Optical flow and motion prediction: Enabling motion analysis to predict the movement of objects and improve trajectory planning in dynamic environments.

- Computer vision benchmarking: Serving as a standard benchmark for evaluating performance across multiple vision tasks, including object detection and tracking.

Dataset YAML

Ultralytics defines the KITTI dataset configuration in a YAML file that specifies the dataset paths, class labels, and other metadata required for training. The configuration file is available at https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/kitti.yaml.

ultralytics/cfg/datasets/kitti.yaml

# Ultralytics 🚀 AGPL-3.0 License - https://ultralytics.com/license

# KITTI dataset by Karlsruhe Institute of Technology and Toyota Technological Institute at Chicago
# Documentation: https://docs.ultralytics.com/datasets/detect/kitti/
# Example usage: yolo train data=kitti.yaml
# parent
# ├── ultralytics
# └── datasets
#     └── kitti ← downloads here (390.5 MB)

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]
path: kitti # dataset root dir
train: images/train # train images (relative to 'path') 5985 images
val: images/val # val images (relative to 'path') 1496 images

names:
  0: car
  1: van
  2: truck
  3: pedestrian
  4: person_sitting
  5: cyclist
  6: tram
  7: misc

# Download script/URL (optional)
download: https://github.com/ultralytics/assets/releases/download/v0.0.0/kitti.zip
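The `train` and `val` entries are relative to `path`, so they combine into concrete directories under the download location. The sketch below assumes the dataset sits under a `datasets/` directory, as in the tree comment, and mirrors the Ultralytics convention of locating each label file by swapping the `images` directory for `labels` and the image extension for `.txt`. The helpers `resolve_split` and `label_path_for`, the `DATASETS_DIR` value, and the sample file name are hypothetical; this illustrates the layout and is not the library's own loader.

```python
from pathlib import PurePosixPath

# Hypothetical values mirroring kitti.yaml; "datasets" is an assumed download root
DATASETS_DIR = PurePosixPath("datasets")
CONFIG = {"path": "kitti", "train": "images/train", "val": "images/val"}


def resolve_split(config: dict, split: str) -> PurePosixPath:
    """Join a split directory onto the dataset root (train/val are relative to 'path')."""
    return DATASETS_DIR / config["path"] / config[split]


def label_path_for(image_path: PurePosixPath) -> PurePosixPath:
    """Swap the last 'images' component for 'labels' and the extension for '.txt'.

    Raises ValueError if the path contains no 'images' component.
    """
    parts = list(image_path.parts)
    # Index of the last occurrence of "images" among the path components
    idx = len(parts) - 1 - parts[::-1].index("images")
    parts[idx] = "labels"
    return PurePosixPath(*parts).with_suffix(".txt")


train_dir = resolve_split(CONFIG, "train")
image = train_dir / "000123.png"  # hypothetical image file name
print(train_dir)              # datasets/kitti/images/train
print(label_path_for(image))  # datasets/kitti/labels/train/000123.txt
```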

Usage

To train a YOLO26n model on the KITTI dataset for 100 epochs with an image size of 640, use the following commands. For more details, refer to the Training page.

Train Example

Python

from ultralytics import YOLO

# Load a pretrained YOLO26 model
model = YOLO("yolo26n.pt")

# Train on the KITTI dataset
results = model.train(data="kitti.yaml", epochs=100, imgsz=640)

CLI

yolo detect train data=kitti.yaml model=yolo26n.pt epochs=100 imgsz=640

The same configuration file can also be passed to validation, and the trained model can then be used for inference and export, all from either the command line or the Python API.

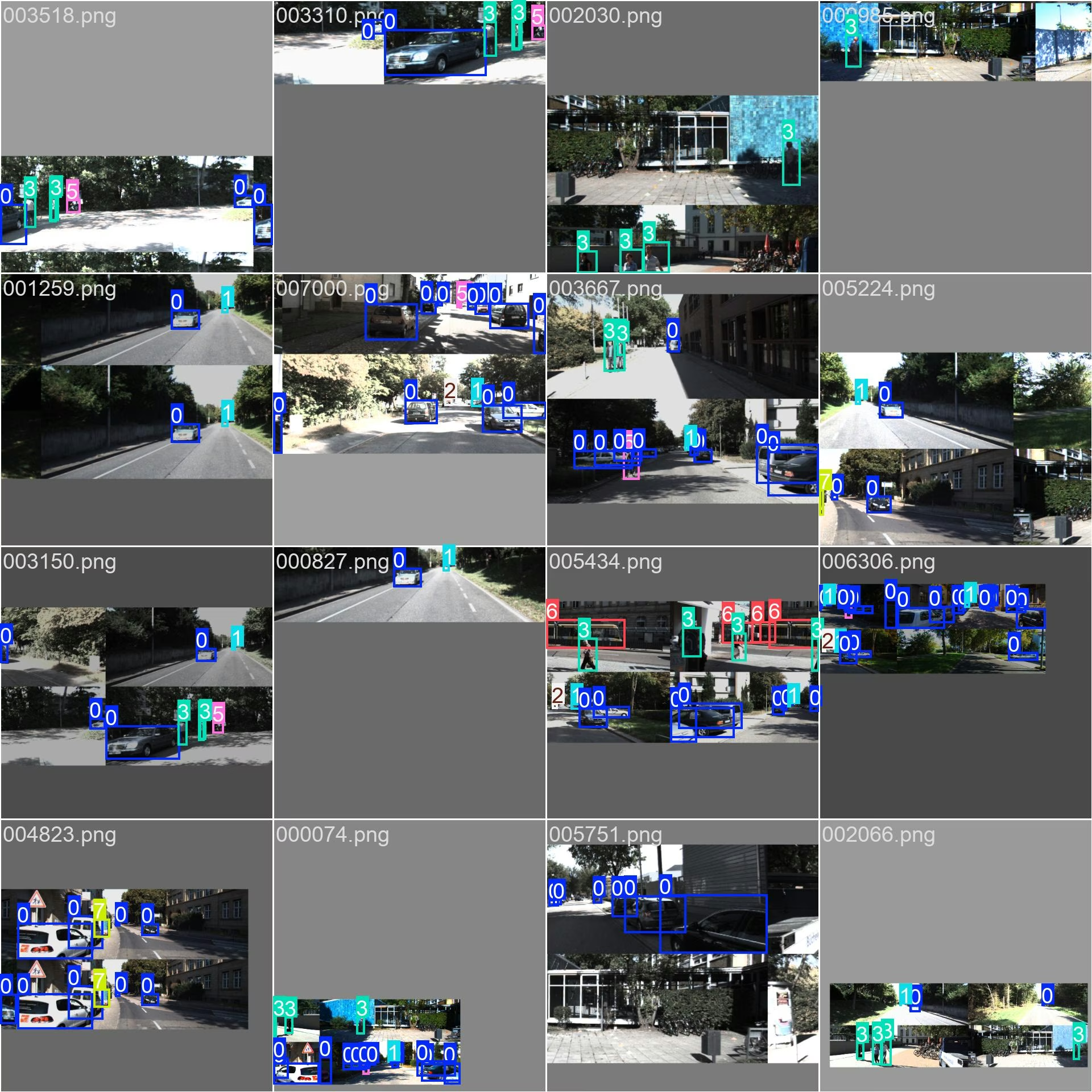

Sample Images and Annotations

The KITTI dataset covers a wide range of driving scenarios. Each image includes bounding box annotations for 2D object detection, and the examples illustrate the variety of scenes that helps models generalize to real-world conditions.
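In the Ultralytics label format, each box is one line of a `.txt` file: a class index followed by the box center and size, normalized to the image width and height. The sketch below decodes such a line into a class name from `kitti.yaml` and pixel corner coordinates. The helper `decode_yolo_label`, the sample label line, and the 1242×375 image size are assumptions for illustration (KITTI frames are roughly this size, but the exact dimensions vary slightly between recordings).

```python
# Class names copied from kitti.yaml
NAMES = {0: "car", 1: "van", 2: "truck", 3: "pedestrian",
         4: "person_sitting", 5: "cyclist", 6: "tram", 7: "misc"}


def decode_yolo_label(line: str, img_w: int, img_h: int) -> tuple[str, float, float, float, float]:
    """Convert 'cls xc yc w h' (normalized) to (name, x1, y1, x2, y2) in pixels."""
    cls, xc, yc, w, h = line.split()
    xc, w = float(xc) * img_w, float(w) * img_w  # horizontal values scale with width
    yc, h = float(yc) * img_h, float(h) * img_h  # vertical values scale with height
    # Corners are the center minus/plus half the box size
    return NAMES[int(cls)], xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2


# Hypothetical label line for a car in an assumed 1242x375 frame
name, x1, y1, x2, y2 = decode_yolo_label("0 0.5 0.6 0.2 0.4", 1242, 375)
print(name, round(x1, 1), round(y1, 1), round(x2, 1), round(y2, 1))
```

Keeping coordinates normalized in the label files means the same annotation stays valid if images are resized, which is why the pixel conversion needs the image dimensions as input.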

Citations and Acknowledgments

If you use the KITTI dataset in your research, please cite the following paper:

Quote

@article{Geiger2013IJRR,
  author = {Andreas Geiger and Philip Lenz and Christoph Stiller and Raquel Urtasun},
  title = {Vision meets Robotics: The KITTI Dataset},
  journal = {International Journal of Robotics Research (IJRR)},
  year = {2013}
}

We acknowledge the KITTI Vision Benchmark Suite for providing this comprehensive dataset, which continues to shape progress in computer vision, robotics, and autonomous systems. Visit the KITTI website for more information.

FAQs

What is the KITTI dataset used for?

The KITTI dataset is primarily used for computer vision research in autonomous driving, supporting tasks like object detection, depth estimation, optical flow, and 3D localization.

How many images are included in the KITTI dataset?

The dataset includes 5,985 labeled training images and 1,496 validation images captured across urban, rural, and highway scenes. The original test set is excluded here since it does not contain ground-truth annotations.

Which object classes are annotated in the dataset?

KITTI includes annotations for eight classes: car, van, truck, pedestrian, person_sitting, cyclist, tram, and misc.

Can I train Ultralytics YOLO26 models using the KITTI dataset?

Yes. KITTI is fully compatible with Ultralytics YOLO26, so you can train and validate models directly using the provided YAML configuration file.

Where can I find the KITTI dataset configuration file?

You can access the YAML file at https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/kitti.yaml.