Optimizing YOLO26 Inference with Neural Magic's DeepSparse Engine

When deploying object detection models such as Ultralytics YOLO26 on varied hardware, you can run into challenges specific to each target, with inference optimization chief among them. This is where the integration of YOLO26 with Neural Magic's DeepSparse Engine comes in. It changes how YOLO26 models are executed and enables GPU-level performance directly on CPUs.

This guide shows you how to deploy YOLO26 with Neural Magic's DeepSparse, how to run inference, and how to benchmark performance to confirm that the model is optimized.

SparseML End of Life

Neural Magic was acquired by Red Hat in January 2025 and is deprecating the community versions of its deepsparse, sparseml, sparsezoo, and sparsify libraries. For more information, see the notice published in the README of the sparseml GitHub repository.

Neural Magic's DeepSparse

Neural Magic's DeepSparse is an inference runtime designed to optimize the execution of neural networks on CPUs. It applies advanced techniques such as sparsity, pruning, and quantization to substantially reduce compute demands while maintaining accuracy. DeepSparse offers an agile solution for efficient, scalable neural network execution across a range of devices.
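To make the idea of quantization more concrete, here is a minimal sketch of symmetric int8 quantization written in plain Python. It is an illustration only and does not reflect how DeepSparse implements quantization internally; the helper names `quantize_int8` and `dequantize` are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: floats -> int8 codes plus one scale."""
    max_abs = max(abs(v) for v in values)
    # One scale for the whole tensor; fall back to 1.0 for an all-zero input
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]


weights = [0.5, -1.27, 0.02, 1.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# The round-trip error of each weight is bounded by half a quantization step
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_error)
```

Each weight is stored as a small integer instead of a 32-bit float, which is where the reduction in model size and compute comes from; the price is a rounding error of at most half the scale per value.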

Benefits of Integrating Neural Magic's DeepSparse with YOLO26

Before diving into how to deploy YOLO26 with DeepSparse, let's look at the benefits of using DeepSparse. Key advantages include:

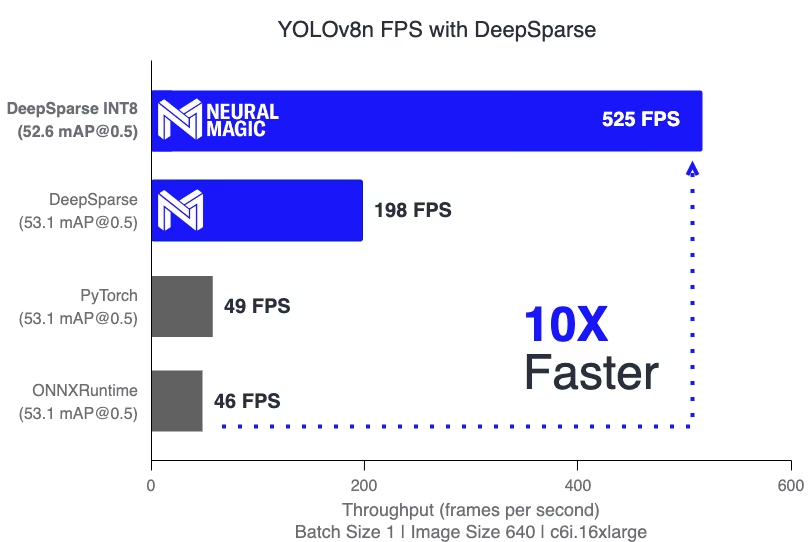

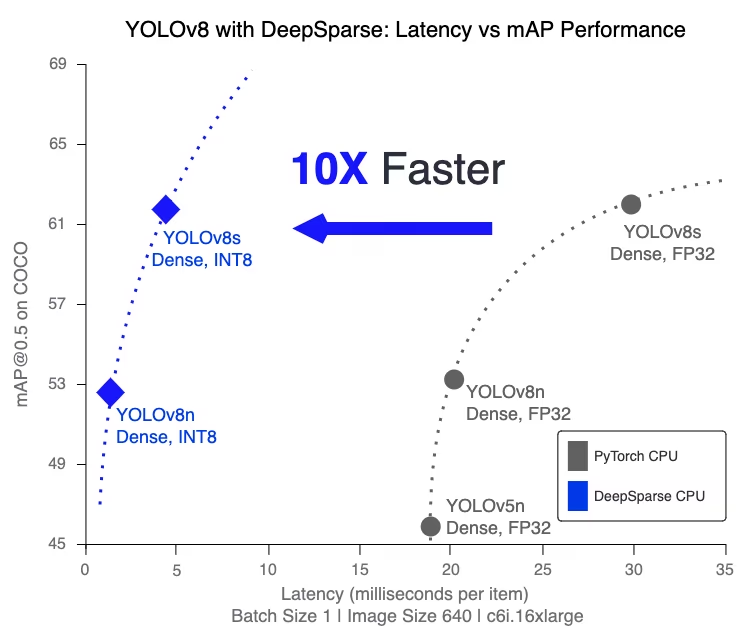

- Enhanced Inference Speed: Achieves up to 525 FPS (on YOLO11n), significantly speeding up YOLO inference compared to traditional methods.

- Optimized Model Efficiency: Uses pruning and quantization to improve YOLO26's efficiency, reducing model size and compute requirements while maintaining accuracy.

- High Performance on Standard CPUs: Delivers GPU-like performance on CPUs, providing a more accessible and cost-effective option for various applications.

- Streamlined Integration and Deployment: Offers user-friendly tools for integrating YOLO26 into applications, including image and video annotation features.

- Support for Various Model Types: Compatible with both standard and sparsity-optimized YOLO26 models, adding deployment flexibility.

- Cost-Effective and Scalable Solution: Reduces operating expenses and offers scalable deployment of advanced object detection models.

How Does Neural Magic's DeepSparse Technology Work?

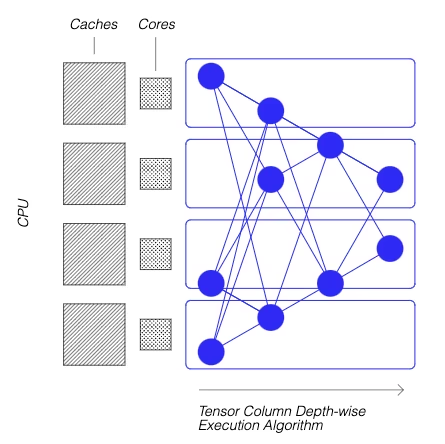

Neural Magic's DeepSparse technology is inspired by the efficiency of the human brain in neural network computation. It adopts two key principles from the brain:

- Sparsity: Sparsification prunes redundant information from deep learning networks, producing smaller and faster models without compromising accuracy. This technique significantly reduces the network's size and compute needs.

- Locality of Reference: DeepSparse uses a distinctive execution method that breaks the network into tensor columns. These columns are executed depth-wise and fit entirely within the CPU's cache. This approach mimics the brain's efficiency by minimizing data movement and maximizing use of the CPU cache.
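The sparsity principle can be sketched with magnitude pruning, one common way to remove redundant weights. The snippet below is a toy illustration in plain Python, not the algorithm used by SparseML or DeepSparse; `magnitude_prune` is a hypothetical helper, and real workflows typically pair pruning with fine-tuning to recover accuracy.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitudes."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)
print("zeros:", pruned.count(0.0), "of", len(pruned))
```

A runtime that is aware of sparsity can skip the multiplications involving the zeroed weights, which is how a pruned model translates into less computation.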

Creating a Sparse Version of YOLO26 Trained on a Custom Dataset

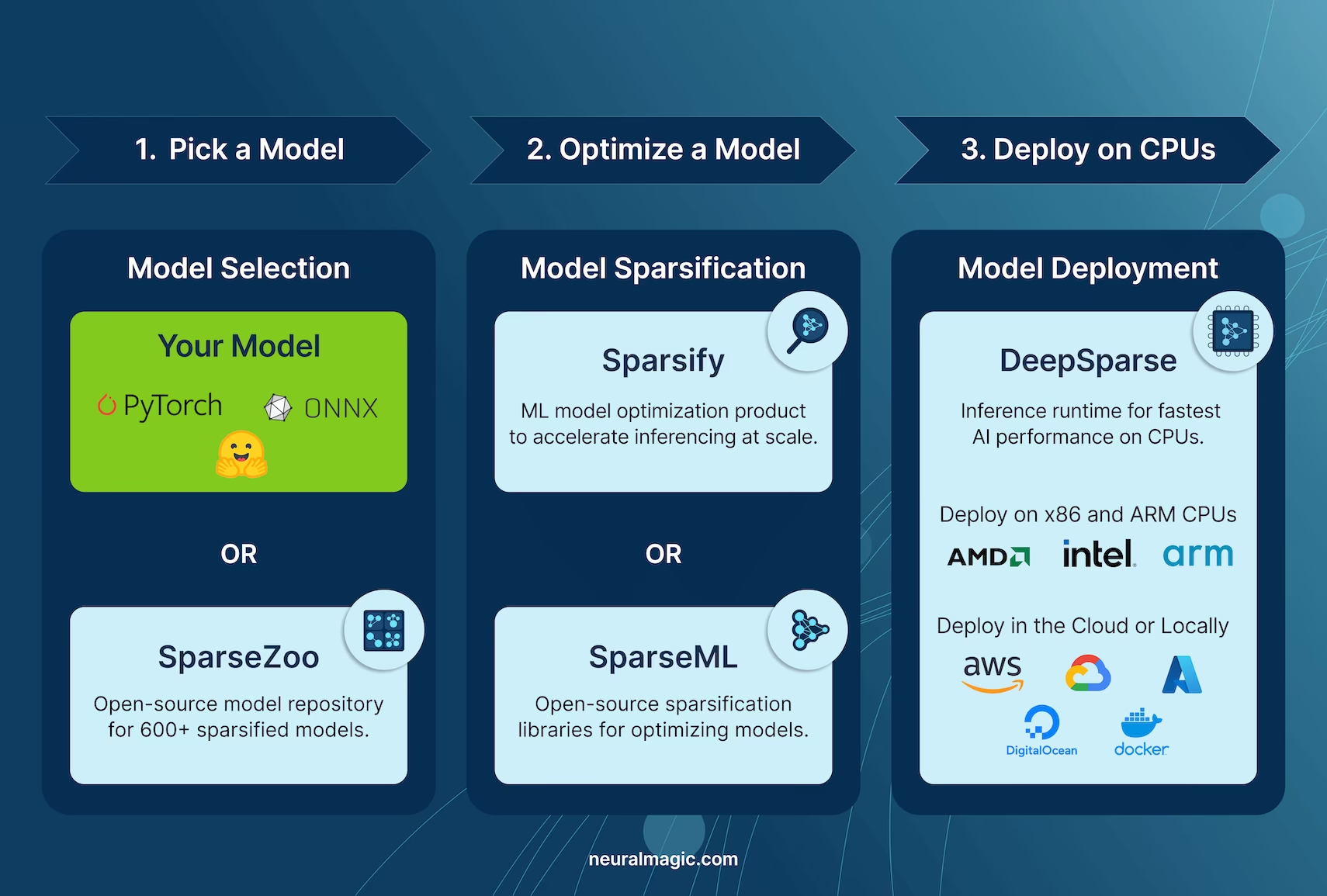

SparseZoo, Neural Magic's open-source model repository, offers a collection of pre-sparsified YOLO26 model checkpoints. With SparseML, which integrates seamlessly with Ultralytics, users can fine-tune these sparse checkpoints on their own datasets using a simple command-line interface.

See Neural Magic's SparseML YOLO26 documentation for more details.

Usage: Deploying YOLO26 with DeepSparse

Deploying YOLO26 with Neural Magic's DeepSparse involves a few straightforward steps. Before diving into the usage instructions, be sure to check out the range of YOLO26 models offered by Ultralytics. This will help you choose the most appropriate model for your project's requirements. Here's how to get started.

Step 1: Installation

To install the required packages, run:

Installation

# Install the required packages

pip install deepsparse[yolov8]
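After installation, a quick check from Python can confirm that the package is visible to the interpreter you plan to use. This is a small sketch built on the standard library only; `deepsparse_status` is a hypothetical helper name, and the distribution name `deepsparse` is assumed to match the package installed above.

```python
import importlib.util
from importlib import metadata


def deepsparse_status():
    """Report whether the deepsparse package can be found by this interpreter."""
    if importlib.util.find_spec("deepsparse") is None:
        return "deepsparse is not installed"
    try:
        return f"deepsparse {metadata.version('deepsparse')} is installed"
    except metadata.PackageNotFoundError:
        # Importable, but installed under a different distribution name
        return "deepsparse is importable, but its version metadata was not found"


print(deepsparse_status())
```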

Step 2: Exporting YOLO26 to ONNX Format

The DeepSparse Engine requires YOLO26 models in ONNX format. Exporting your model to this format is essential for compatibility with DeepSparse. Use the following command to export YOLO26 models:

Model Export

# Export YOLO26 model to ONNX format

yolo task=detect mode=export model=yolo26n.pt format=onnx opset=13

This command saves the yolo26n.onnx model to your disk.
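If you prefer to run the export from a script, the same step can be sketched with the Ultralytics Python API. The wrapper `export_yolo_to_onnx` is a hypothetical helper; it mirrors the model name and opset from the command above and assumes the `ultralytics` package is installed and the weights are available or downloadable.

```python
def export_yolo_to_onnx(weights="yolo26n.pt", opset=13):
    """Export a YOLO checkpoint to ONNX and return what export() returns."""
    # Imported lazily so that defining this helper does not require ultralytics
    from ultralytics import YOLO

    model = YOLO(weights)
    # Mirrors: yolo task=detect mode=export model=yolo26n.pt format=onnx opset=13
    return model.export(format="onnx", opset=opset)
```

Calling `export_yolo_to_onnx()` should leave the ONNX file next to the original weights; in recent Ultralytics releases the return value is the path of the exported file, but treat that as an assumption to verify against your installed version.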

Step 3: Deploying and Running Inference

With your YOLO26 model in ONNX format, you can deploy and run inference using DeepSparse. This is straightforward with its Python API:

Deploying and Running Inference

from deepsparse import Pipeline

# Specify the path to your YOLO26 ONNX model

model_path = "path/to/yolo26n.onnx"

# Set up the DeepSparse Pipeline

yolo_pipeline = Pipeline.create(task="yolov8", model_path=model_path)

# Run the model on your images

images = ["path/to/image.jpg"]

pipeline_outputs = yolo_pipeline(images=images)
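Once the pipeline has run, you will usually want to keep only the confident detections. The sketch below assumes that the object returned by the pipeline exposes `boxes`, `scores`, and `labels` attributes, each holding one list per input image; check that layout against your installed DeepSparse version. `confident_detections` is a hypothetical helper, and a stand-in object is used so the example runs without DeepSparse.

```python
from types import SimpleNamespace


def confident_detections(outputs, image_index=0, min_score=0.5):
    """Return (label, score, box) tuples for one image above a score threshold."""
    boxes = outputs.boxes[image_index]
    scores = outputs.scores[image_index]
    labels = outputs.labels[image_index]
    return [
        (label, score, box)
        for label, score, box in zip(labels, scores, boxes)
        if score >= min_score
    ]


# Stand-in with the assumed layout; replace with the real pipeline output
fake_outputs = SimpleNamespace(
    boxes=[[[10.0, 20.0, 110.0, 220.0], [5.0, 5.0, 50.0, 50.0]]],
    scores=[[0.91, 0.32]],
    labels=[["0", "16"]],
)

for label, score, box in confident_detections(fake_outputs):
    print(label, score, box)
```

With a real run you would pass `pipeline_outputs` in place of `fake_outputs`.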

Step 4: Benchmarking Performance

It is important to check that your YOLO26 model performs well on DeepSparse. You can benchmark your model to analyze throughput and latency:

Benchmarking

# Benchmark performance

deepsparse.benchmark "path/to/yolo26n.onnx" --scenario=sync --input_shapes="[1,3,640,640]"
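To make the two quantities reported by a benchmark concrete, here is a minimal sketch that measures latency and throughput for any synchronous callable using only the standard library. It is not a substitute for the DeepSparse benchmark tool; `measure_latency` and its parameters are hypothetical, and the workload shown is a placeholder that you would replace with a call to your own pipeline.

```python
import statistics
import time


def measure_latency(run_once, warmup=3, iterations=20):
    """Time repeated synchronous calls and summarize latency and throughput."""
    # Warm-up calls are executed but not timed
    for _ in range(warmup):
        run_once()
    latencies_ms = []
    for _ in range(iterations):
        start = time.perf_counter()
        run_once()
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    mean_ms = statistics.mean(latencies_ms)
    return {
        "mean_ms": mean_ms,
        "median_ms": statistics.median(latencies_ms),
        "max_ms": max(latencies_ms),
        "items_per_second": 1000.0 / mean_ms if mean_ms > 0 else float("inf"),
    }


# Placeholder workload standing in for one inference call
stats = measure_latency(lambda: sum(i * i for i in range(10_000)))
print(stats)
```

In a synchronous scenario with one request at a time, throughput is simply the inverse of the mean latency, which is what `items_per_second` reports.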

Step 5: Additional Features

DeepSparse provides additional features for practical integration of YOLO26 into applications, such as image annotation and dataset evaluation.

Additional Features

# For image annotation

deepsparse.yolov8.annotate --source "path/to/image.jpg" --model_filepath "path/to/yolo26n.onnx"

# For evaluating model performance on a dataset

deepsparse.yolov8.eval --model_path "path/to/yolo26n.onnx"

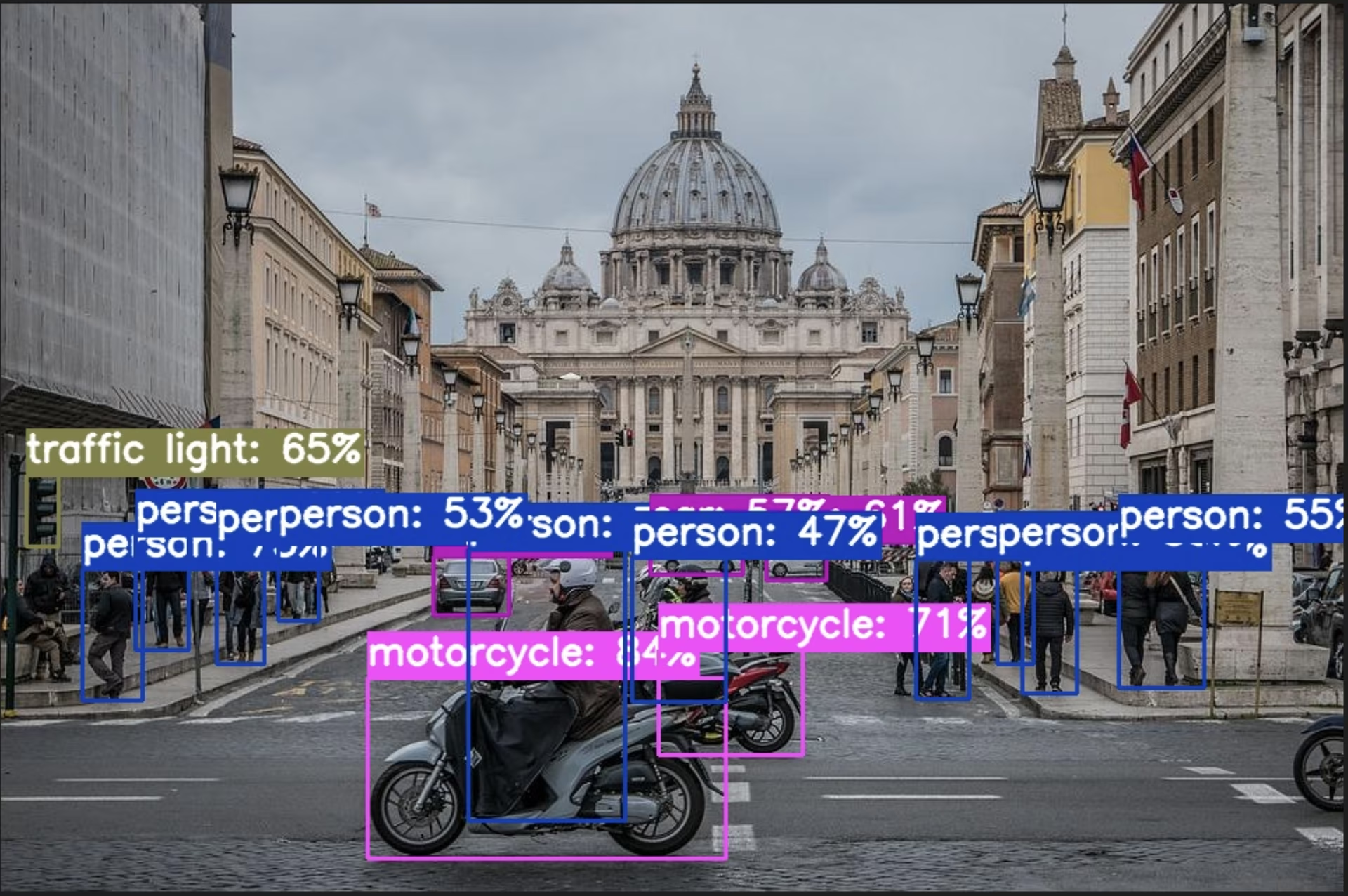

Running the annotate command processes the image you specified, detects objects, and saves the annotated image with bounding boxes and class labels. The annotated image is stored in an annotation-results folder. This gives a visual representation of the model's detection capabilities.

After running the eval command, you will receive detailed output metrics such as precision, recall, and mAP (mean Average Precision). These give a comprehensive view of your model's performance on the dataset and are particularly useful for fine-tuning and optimizing your YOLO26 models for specific use cases, helping you reach high accuracy and efficiency.
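For readers less familiar with these metrics, the following sketch shows how precision, recall, and the box overlap measure (IoU) that underlies mAP are defined. It is a plain-Python illustration with hypothetical helper names, not the code the eval command runs.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall


def box_iou(a, b):
    """Intersection over union of two boxes given as [x1, y1, x2, y2]."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# 8 correct detections, 2 spurious detections, 4 missed objects
print(precision_recall(8, 2, 4))
# Two 10x10 boxes that overlap over half of their width
print(box_iou([0, 0, 10, 10], [5, 0, 15, 10]))
```

A detection counts as a true positive when its IoU with a ground-truth box exceeds a chosen threshold; mAP then averages precision over recall levels and classes.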

Summary

This guide explored the integration of Ultralytics YOLO26 with Neural Magic's DeepSparse Engine. It highlighted how this integration improves YOLO26's performance on CPU platforms, offering GPU-level efficiency and advanced neural network sparsity techniques.

For more detailed information and advanced usage, visit Neural Magic's DeepSparse documentation. You can also explore the YOLO26 integration guide and watch a walkthrough session on YouTube.

Additionally, for a broader understanding of the various YOLO26 integrations, visit the Ultralytics integration guide page, where you can discover a range of other integration possibilities.

FAQ

What is Neural Magic's DeepSparse Engine and how does it optimize YOLO26 performance?

Neural Magic's DeepSparse Engine is an inference runtime designed to optimize the execution of neural networks on CPUs through advanced techniques such as sparsity, pruning, and quantization. By integrating DeepSparse with YOLO26, you can achieve GPU-like performance on standard CPUs, significantly improving inference speed, model efficiency, and overall performance while maintaining accuracy. For more details, see the Neural Magic's DeepSparse section.

How can I install the packages needed to deploy YOLO26 with Neural Magic's DeepSparse?

Installing the required packages for deploying YOLO26 with Neural Magic's DeepSparse is straightforward. You can install them from the command line. Here is the command to run:

pip install deepsparse[yolov8]

Once installed, follow the steps in the Installation section to set up your environment and start using DeepSparse with YOLO26.

How do I convert YOLO26 models to ONNX format for use with DeepSparse?

To convert YOLO26 models to the ONNX format, which is required for compatibility with DeepSparse, you can use the following CLI command:

yolo task=detect mode=export model=yolo26n.pt format=onnx opset=13

This command exports your YOLO26 model (yolo26n.pt) to a format (yolo26n.onnx) that the DeepSparse Engine can use. More information about model export is available in the Model Export section.

How do I benchmark YOLO26 performance on the DeepSparse Engine?

Benchmarking YOLO26 on DeepSparse helps you analyze throughput and latency to confirm that your model is optimized. You can use the following CLI command to run a benchmark:

deepsparse.benchmark "path/to/yolo26n.onnx" --scenario=sync --input_shapes="[1,3,640,640]"

This command provides key performance metrics. For more details, see the Benchmarking Performance section.

Why should I use Neural Magic's DeepSparse with YOLO26 for object detection tasks?

Integrating Neural Magic's DeepSparse with YOLO26 offers several benefits:

- Enhanced Inference Speed: Achieves up to 525 FPS (on YOLO11n), demonstrating DeepSparse's optimization capabilities.

- Optimized Model Efficiency: Uses sparsity, pruning, and quantization techniques to reduce model size and compute needs while maintaining accuracy.

- High Performance on Standard CPUs: Offers GPU-like performance on cost-effective CPU hardware.

- Streamlined Integration: User-friendly tools for easy deployment and integration.

- Flexibility: Supports both standard and sparsity-optimized YOLO26 models.

- Cost-Effective: Reduces operating expenses through efficient resource utilization.

For a deeper look at these advantages, see the Benefits of Integrating Neural Magic's DeepSparse with YOLO26 section.