简单实用工具

字段 ultralytics 该软件包提供各种实用工具,以支持、增强和加速您的工作流程。虽然还有更多可用的工具,但本指南重点介绍了一些对开发人员最有用的工具,作为使用 Ultralytics 工具进行编程的实用参考。

观看: Ultralytics 工具 | 自动标注、Explorer API 和数据集转换

数据

自动标记 / 注释

数据集标注是一个资源密集且耗时的过程。如果您有一个在合理数量的数据上训练的 Ultralytics YOLO 目标检测 模型,您可以将其与 SAM 结合使用,以分割格式自动标注其他数据。

from ultralytics.data.annotator import auto_annotate

auto_annotate(

data="path/to/new/data",

det_model="yolo26n.pt",

sam_model="mobile_sam.pt",

device="cuda",

output_dir="path/to/save_labels",

)

此函数不返回任何值。更多详情:

- 请参阅 参考部分,用于

annotator.auto_annotate以获得关于该函数如何运作的更多信息。 - 与以下内容结合使用 函数

segments2boxes以生成对象检测边界框。

可视化数据集标注

此函数在训练前可视化图像上的 YOLO 注释,有助于识别和纠正任何可能导致不正确检测结果的错误注释。它绘制边界框,使用类名标记对象,并根据背景的亮度调整文本颜色,以提高可读性。

from ultralytics.data.utils import visualize_image_annotations

label_map = { # Define the label map with all annotated class labels.

0: "person",

1: "car",

}

# Visualize

visualize_image_annotations(

"path/to/image.jpg", # Input image path.

"path/to/annotations.txt", # Annotation file path for the image.

label_map,

)

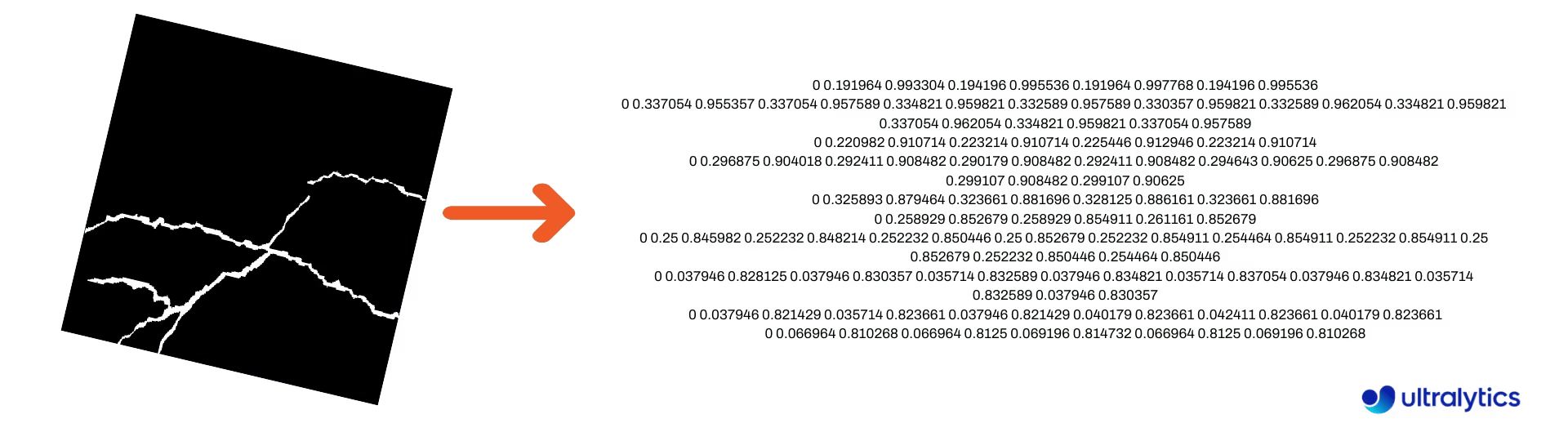

将分割掩码转换为 YOLO 格式

使用此方法将分割掩码图像数据集转换为 Ultralytics YOLO 分割格式。此函数接受包含二进制格式掩码图像的目录,并将它们转换为 YOLO 分割格式。

转换后的掩码将保存在指定的输出目录中。

from ultralytics.data.converter import convert_segment_masks_to_yolo_seg

# The classes here is the total classes in the dataset.

# for COCO dataset we have 80 classes.

convert_segment_masks_to_yolo_seg(masks_dir="path/to/masks_dir", output_dir="path/to/output_dir", classes=80)

将 COCO 转换为 YOLO 格式

使用此方法进行转换 COCO JSON 注释转换为 YOLO 格式。对于对象检测(边界框)数据集,请同时设置 use_segments 和 use_keypoints 到 False.

from ultralytics.data.converter import convert_coco

convert_coco(

"coco/annotations/",

use_segments=False,

use_keypoints=False,

cls91to80=True,

)

有关 convert_coco 函数的更多信息, 请访问参考页面.

获取边界框尺寸

import cv2

from ultralytics import YOLO

from ultralytics.utils.plotting import Annotator

model = YOLO("yolo26n.pt") # Load pretrain or fine-tune model

# Process the image

source = cv2.imread("path/to/image.jpg")

results = model(source)

# Extract results

annotator = Annotator(source, example=model.names)

for box in results[0].boxes.xyxy.cpu():

width, height, area = annotator.get_bbox_dimension(box)

print(f"Bounding Box Width {width.item()}, Height {height.item()}, Area {area.item()}")

将边界框转换为分割

使用现有的 x y w h 边界框数据,使用 yolo_bbox2segment 函数。按如下方式组织图像和注释的文件:

data

|__ images

├─ 001.jpg

├─ 002.jpg

├─ ..

└─ NNN.jpg

|__ labels

├─ 001.txt

├─ 002.txt

├─ ..

└─ NNN.txt

from ultralytics.data.converter import yolo_bbox2segment

yolo_bbox2segment(

im_dir="path/to/images",

save_dir=None, # saved to "labels-segment" in images directory

sam_model="sam_b.pt",

)

请访问 yolo_bbox2segment 参考页面 以获取有关该函数的更多信息。

将分割转换为边界框

如果您有一个使用 分割数据集格式的数据集,您可以轻松地将这些转换为直立(或水平)的边界框(x y w h 格式)。

import numpy as np

from ultralytics.utils.ops import segments2boxes

segments = np.array(

[

[805, 392, 797, 400, ..., 808, 714, 808, 392],

[115, 398, 113, 400, ..., 150, 400, 149, 298],

[267, 412, 265, 413, ..., 300, 413, 299, 412],

]

)

segments2boxes([s.reshape(-1, 2) for s in segments])

# >>> array([[ 741.66, 631.12, 133.31, 479.25],

# [ 146.81, 649.69, 185.62, 502.88],

# [ 281.81, 636.19, 118.12, 448.88]],

# dtype=float32) # xywh bounding boxes

要了解此函数的工作原理,请访问参考页面。

实用工具

图像压缩

压缩单个图像文件到较小的尺寸,同时保持其纵横比和质量。如果输入图像小于最大尺寸,则不会调整大小。

from pathlib import Path

from ultralytics.data.utils import compress_one_image

for f in Path("path/to/dataset").rglob("*.jpg"):

compress_one_image(f)

自动分割数据集

自动将数据集拆分为 train/val/test 多个拆分,并将生成的拆分保存到 autosplit_*.txt 文件。此函数使用随机抽样,在使用以下命令时排除: fraction 参数进行训练时,不包括随机抽样。.

from ultralytics.data.split import autosplit

autosplit(

path="path/to/images",

weights=(0.9, 0.1, 0.0), # (train, validation, test) fractional splits

annotated_only=False, # split only images with annotation file when True

)

有关此函数的更多详细信息,请参见参考页面。

分割多边形到二元掩码

将单个多边形(作为列表)转换为指定图像大小的二值掩码。多边形应采用以下形式 [N, 2],其中 N 是 (x, y) 定义多边形轮廓的点数。

警告

N 必须始终 为偶数。

import numpy as np

from ultralytics.data.utils import polygon2mask

imgsz = (1080, 810)

polygon = np.array([805, 392, 797, 400, ..., 808, 714, 808, 392]) # (238, 2)

mask = polygon2mask(

imgsz, # tuple

[polygon], # input as list

color=255, # 8-bit binary

downsample_ratio=1,

)

边界框

边界框(水平)实例

要管理边界框数据, Bboxes 类有助于在框坐标格式之间转换、缩放框尺寸、计算面积、包括偏移量等。

import numpy as np

from ultralytics.utils.instance import Bboxes

boxes = Bboxes(

bboxes=np.array(

[

[22.878, 231.27, 804.98, 756.83],

[48.552, 398.56, 245.35, 902.71],

[669.47, 392.19, 809.72, 877.04],

[221.52, 405.8, 344.98, 857.54],

[0, 550.53, 63.01, 873.44],

[0.0584, 254.46, 32.561, 324.87],

]

),

format="xyxy",

)

boxes.areas()

# >>> array([ 4.1104e+05, 99216, 68000, 55772, 20347, 2288.5])

boxes.convert("xywh")

print(boxes.bboxes)

# >>> array(

# [[ 413.93, 494.05, 782.1, 525.56],

# [ 146.95, 650.63, 196.8, 504.15],

# [ 739.6, 634.62, 140.25, 484.85],

# [ 283.25, 631.67, 123.46, 451.74],

# [ 31.505, 711.99, 63.01, 322.91],

# [ 16.31, 289.67, 32.503, 70.41]]

# )

请参阅 Bboxes 参考部分 了解更多属性和方法。

提示

以下许多功能(以及更多)都可以通过 Bboxes 类,但如果您更喜欢直接使用这些函数,请参阅以下小节,了解如何独立导入它们。

缩放框

当放大或缩小图像时,您可以使用以下方法适当地缩放相应的边界框坐标以匹配: ultralytics.utils.ops.scale_boxes.

import cv2 as cv

import numpy as np

from ultralytics.utils.ops import scale_boxes

image = cv.imread("ultralytics/assets/bus.jpg")

h, w, c = image.shape

resized = cv.resize(image, None, (), fx=1.2, fy=1.2)

new_h, new_w, _ = resized.shape

xyxy_boxes = np.array(

[

[22.878, 231.27, 804.98, 756.83],

[48.552, 398.56, 245.35, 902.71],

[669.47, 392.19, 809.72, 877.04],

[221.52, 405.8, 344.98, 857.54],

[0, 550.53, 63.01, 873.44],

[0.0584, 254.46, 32.561, 324.87],

]

)

new_boxes = scale_boxes(

img1_shape=(h, w), # original image dimensions

boxes=xyxy_boxes, # boxes from original image

img0_shape=(new_h, new_w), # resized image dimensions (scale to)

ratio_pad=None,

padding=False,

xywh=False,

)

print(new_boxes)

# >>> array(

# [[ 27.454, 277.52, 965.98, 908.2],

# [ 58.262, 478.27, 294.42, 1083.3],

# [ 803.36, 470.63, 971.66, 1052.4],

# [ 265.82, 486.96, 413.98, 1029],

# [ 0, 660.64, 75.612, 1048.1],

# [ 0.0701, 305.35, 39.073, 389.84]]

# )

边界框格式转换

XYXY → XYWH

将边界框坐标从 (x1, y1, x2, y2) 格式转换为 (x, y, width, height) 格式,其中 (x1, y1) 是左上角,(x2, y2) 是右下角。

import numpy as np

from ultralytics.utils.ops import xyxy2xywh

xyxy_boxes = np.array(

[

[22.878, 231.27, 804.98, 756.83],

[48.552, 398.56, 245.35, 902.71],

[669.47, 392.19, 809.72, 877.04],

[221.52, 405.8, 344.98, 857.54],

[0, 550.53, 63.01, 873.44],

[0.0584, 254.46, 32.561, 324.87],

]

)

xywh = xyxy2xywh(xyxy_boxes)

print(xywh)

# >>> array(

# [[ 413.93, 494.05, 782.1, 525.56],

# [ 146.95, 650.63, 196.8, 504.15],

# [ 739.6, 634.62, 140.25, 484.85],

# [ 283.25, 631.67, 123.46, 451.74],

# [ 31.505, 711.99, 63.01, 322.91],

# [ 16.31, 289.67, 32.503, 70.41]]

# )

所有边界框转换

from ultralytics.utils.ops import (

ltwh2xywh,

ltwh2xyxy,

xywh2ltwh, # xywh → top-left corner, w, h

xywh2xyxy,

xywhn2xyxy, # normalized → pixel

xyxy2ltwh, # xyxy → top-left corner, w, h

xyxy2xywhn, # pixel → normalized

)

for func in (ltwh2xywh, ltwh2xyxy, xywh2ltwh, xywh2xyxy, xywhn2xyxy, xyxy2ltwh, xyxy2xywhn):

print(help(func)) # print function docstrings

请参阅每个函数的文档字符串或访问 ultralytics.utils.ops 参考页面 以阅读更多内容。

绘图

注释实用程序

Ultralytics 包含一个 Annotator 类,用于注释各种数据类型。最好与以下内容一起使用 对象检测边界框, 姿势估计关键点和 定向边界框.

框标注

使用 Ultralytics YOLO 🚀 的 Python 示例

import cv2 as cv

import numpy as np

from ultralytics.utils.plotting import Annotator, colors

names = {

0: "person",

5: "bus",

11: "stop sign",

}

image = cv.imread("ultralytics/assets/bus.jpg")

ann = Annotator(

image,

line_width=None, # default auto-size

font_size=None, # default auto-size

font="Arial.ttf", # must be ImageFont compatible

pil=False, # use PIL, otherwise uses OpenCV

)

xyxy_boxes = np.array(

[

[5, 22.878, 231.27, 804.98, 756.83], # class-idx x1 y1 x2 y2

[0, 48.552, 398.56, 245.35, 902.71],

[0, 669.47, 392.19, 809.72, 877.04],

[0, 221.52, 405.8, 344.98, 857.54],

[0, 0, 550.53, 63.01, 873.44],

[11, 0.0584, 254.46, 32.561, 324.87],

]

)

for nb, box in enumerate(xyxy_boxes):

c_idx, *box = box

label = f"{str(nb).zfill(2)}:{names.get(int(c_idx))}"

ann.box_label(box, label, color=colors(c_idx, bgr=True))

image_with_bboxes = ann.result()

import cv2 as cv

import numpy as np

from ultralytics.utils.plotting import Annotator, colors

obb_names = {10: "small vehicle"}

obb_image = cv.imread("datasets/dota8/images/train/P1142__1024__0___824.jpg")

obb_boxes = np.array(

[

[0, 635, 560, 919, 719, 1087, 420, 803, 261], # class-idx x1 y1 x2 y2 x3 y2 x4 y4

[0, 331, 19, 493, 260, 776, 70, 613, -171],

[9, 869, 161, 886, 147, 851, 101, 833, 115],

]

)

ann = Annotator(

obb_image,

line_width=None, # default auto-size

font_size=None, # default auto-size

font="Arial.ttf", # must be ImageFont compatible

pil=False, # use PIL, otherwise uses OpenCV

)

for obb in obb_boxes:

c_idx, *obb = obb

obb = np.array(obb).reshape(-1, 4, 2).squeeze()

label = f"{obb_names.get(int(c_idx))}"

ann.box_label(

obb,

label,

color=colors(c_idx, True),

)

image_with_obb = ann.result()

名称可以从以下位置使用 model.names 当 处理检测结果时。

另请参阅 Annotator 参考页面 以获得更多见解。

Ultralytics Sweep Annotation

使用 Ultralytics Utilities 进行 Sweep 标注

import cv2

import numpy as np

from ultralytics import YOLO

from ultralytics.solutions.solutions import SolutionAnnotator

from ultralytics.utils.plotting import colors

# User defined video path and model file

cap = cv2.VideoCapture("path/to/video.mp4")

model = YOLO(model="yolo26s-seg.pt") # Model file, e.g., yolo26s.pt or yolo26m-seg.pt

if not cap.isOpened():

print("Error: Could not open video.")

exit()

# Initialize the video writer object.

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

video_writer = cv2.VideoWriter("ultralytics.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

masks = None # Initialize variable to store masks data

f = 0 # Initialize frame count variable for enabling mouse event.

line_x = w # Store width of line.

dragging = False # Initialize bool variable for line dragging.

classes = model.names # Store model classes names for plotting.

window_name = "Ultralytics Sweep Annotator"

def drag_line(event, x, _, flags, param):

"""Mouse callback function to enable dragging a vertical sweep line across the video frame."""

global line_x, dragging

if event == cv2.EVENT_LBUTTONDOWN or (flags & cv2.EVENT_FLAG_LBUTTON):

line_x = max(0, min(x, w))

dragging = True

while cap.isOpened(): # Loop over the video capture object.

ret, im0 = cap.read()

if not ret:

break

f = f + 1 # Increment frame count.

count = 0 # Re-initialize count variable on every frame for precise counts.

results = model.track(im0, persist=True)[0]

if f == 1:

cv2.namedWindow(window_name)

cv2.setMouseCallback(window_name, drag_line)

annotator = SolutionAnnotator(im0)

if results.boxes.is_track:

if results.masks is not None:

masks = [np.array(m, dtype=np.int32) for m in results.masks.xy]

boxes = results.boxes.xyxy.tolist()

track_ids = results.boxes.id.int().cpu().tolist()

clss = results.boxes.cls.cpu().tolist()

for mask, box, cls, t_id in zip(masks or [None] * len(boxes), boxes, clss, track_ids):

color = colors(t_id, True) # Assign different color to each tracked object.

label = f"{classes[cls]}:{t_id}"

if mask is not None and mask.size > 0:

if box[0] > line_x:

count += 1

cv2.polylines(im0, [mask], True, color, 2)

x, y = mask.min(axis=0)

(w_m, _), _ = cv2.getTextSize(label, cv2.FONT_HERSHEY_SIMPLEX, 0.5, 1)

cv2.rectangle(im0, (x, y - 20), (x + w_m, y), color, -1)

cv2.putText(im0, label, (x, y - 5), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 1)

else:

if box[0] > line_x:

count += 1

annotator.box_label(box=box, color=color, label=label)

# Generate draggable sweep line

annotator.sweep_annotator(line_x=line_x, line_y=h, label=f"COUNT:{count}")

cv2.imshow(window_name, im0)

video_writer.write(im0)

if cv2.waitKey(1) & 0xFF == ord("q"):

break

# Release the resources

cap.release()

video_writer.release()

cv2.destroyAllWindows()

查找有关该的更多详细信息 sweep_annotator 我们参考部分中的方法 这里.

自适应标签注释

警告

从以下版本开始 Ultralytics v8.3.167, circle_label 和 text_label 已被统一的取代 adaptive_label 函数。 现在您可以使用以下命令指定注释类型 shape 参数:

- 矩形:

annotator.adaptive_label(box, label=names[int(cls)], color=colors(cls, True), shape="rect") - 圆形:

annotator.adaptive_label(box, label=names[int(cls)], color=colors(cls, True), shape="circle")

观看: 使用 Python 实时演示进行文本和圆形注释的深入指南 | Ultralytics Annotations 🚀

使用 Ultralytics 实用程序的自适应标签注释

import cv2

from ultralytics import YOLO

from ultralytics.solutions.solutions import SolutionAnnotator

from ultralytics.utils.plotting import colors

model = YOLO("yolo26s.pt")

names = model.names

cap = cv2.VideoCapture("path/to/video.mp4")

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

writer = cv2.VideoWriter("Ultralytics circle annotation.avi", cv2.VideoWriter_fourcc(*"MJPG"), fps, (w, h))

while True:

ret, im0 = cap.read()

if not ret:

break

annotator = SolutionAnnotator(im0)

results = model.predict(im0)[0]

boxes = results.boxes.xyxy.cpu()

clss = results.boxes.cls.cpu().tolist()

for box, cls in zip(boxes, clss):

annotator.adaptive_label(box, label=names[int(cls)], color=colors(cls, True), shape="circle")

writer.write(im0)

cv2.imshow("Ultralytics circle annotation", im0)

if cv2.waitKey(1) & 0xFF == ord("q"):

break

writer.release()

cap.release()

cv2.destroyAllWindows()

import cv2

from ultralytics import YOLO

from ultralytics.solutions.solutions import SolutionAnnotator

from ultralytics.utils.plotting import colors

model = YOLO("yolo26s.pt")

names = model.names

cap = cv2.VideoCapture("path/to/video.mp4")

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

writer = cv2.VideoWriter("Ultralytics text annotation.avi", cv2.VideoWriter_fourcc(*"MJPG"), fps, (w, h))

while True:

ret, im0 = cap.read()

if not ret:

break

annotator = SolutionAnnotator(im0)

results = model.predict(im0)[0]

boxes = results.boxes.xyxy.cpu()

clss = results.boxes.cls.cpu().tolist()

for box, cls in zip(boxes, clss):

annotator.adaptive_label(box, label=names[int(cls)], color=colors(cls, True), shape="rect")

writer.write(im0)

cv2.imshow("Ultralytics text annotation", im0)

if cv2.waitKey(1) & 0xFF == ord("q"):

break

writer.release()

cap.release()

cv2.destroyAllWindows()

请参阅 SolutionAnnotator 参考页面 以获得更多见解。

其他

代码分析

检查代码运行/处理的持续时间,可以使用 with 或作为装饰器。

from ultralytics.utils.ops import Profile

with Profile(device="cuda:0") as dt:

pass # operation to measure

print(dt)

# >>> "Elapsed time is 9.5367431640625e-07 s"

Ultralytics 支持的格式

需要在Ultralytics中以编程方式使用支持的图像或视频格式吗?如果需要,请使用以下常量:

from ultralytics.data.utils import IMG_FORMATS, VID_FORMATS

print(IMG_FORMATS)

# {'avif', 'bmp', 'dng', 'heic', 'jp2', 'jpeg', 'jpeg2000', 'jpg', 'mpo', 'png', 'tif', 'tiff', 'webp'}

print(VID_FORMATS)

# {'asf', 'avi', 'gif', 'm4v', 'mkv', 'mov', 'mp4', 'mpeg', 'mpg', 'ts', 'wmv', 'webm'}

可整除

计算最接近的整数 x 可以被整除 y.

from ultralytics.utils.ops import make_divisible

make_divisible(7, 3)

# >>> 9

make_divisible(7, 2)

# >>> 8

常见问题

Ultralytics 软件包中包含哪些实用工具来增强机器学习工作流程?

Ultralytics 软件包包含旨在简化和优化机器学习工作流的实用工具。主要实用工具包括用于数据集标注的 自动标注、使用 convert_coco 将 COCO 转换为 YOLO 格式、图像压缩以及数据集自动分割。这些工具可减少手动工作量,确保一致性,并提高数据处理效率。

如何使用 Ultralytics 自动标注我的数据集?

如果您有一个预训练的 Ultralytics YOLO 目标检测模型,可以将其与SAM模型结合使用,以分割格式自动标注您的数据集。以下是一个示例:

from ultralytics.data.annotator import auto_annotate

auto_annotate(

data="path/to/new/data",

det_model="yolo26n.pt",

sam_model="mobile_sam.pt",

device="cuda",

output_dir="path/to/save_labels",

)

有关更多详细信息,请查看auto_annotate 参考部分。

如何在 Ultralytics 中将 COCO 数据集标注转换为 YOLO 格式?

要将 COCO JSON 注释转换为 YOLO 格式以进行目标 detect,您可以使用 convert_coco 实用程序。这是一个示例代码片段:

from ultralytics.data.converter import convert_coco

convert_coco(

"coco/annotations/",

use_segments=False,

use_keypoints=False,

cls91to80=True,

)

有关更多信息,请访问convert_coco 参考页面。

Ultralytics 软件包中 YOLO Data Explorer 的用途是什么?

字段 YOLO Explorer 是 8.1.0 更新中引入的强大工具,旨在增强对数据集的理解。它允许您使用文本查询在数据集中查找对象实例,从而更轻松地分析和管理数据。此工具提供对数据集组成和分布的宝贵见解,有助于改进模型训练和性能。

如何在 Ultralytics 中将边界框转换为分割?

要转换现有的边界框数据(以 x y w h 格式)转换为分割,您可以使用 yolo_bbox2segment 函数。确保您的文件按照图片和标签分别存放于不同的目录中。

from ultralytics.data.converter import yolo_bbox2segment

yolo_bbox2segment(

im_dir="path/to/images",

save_dir=None, # saved to "labels-segment" in the images directory

sam_model="sam_b.pt",

)

更多信息,请访问yolo_bbox2segment 参考页面。