Instance Segmentation

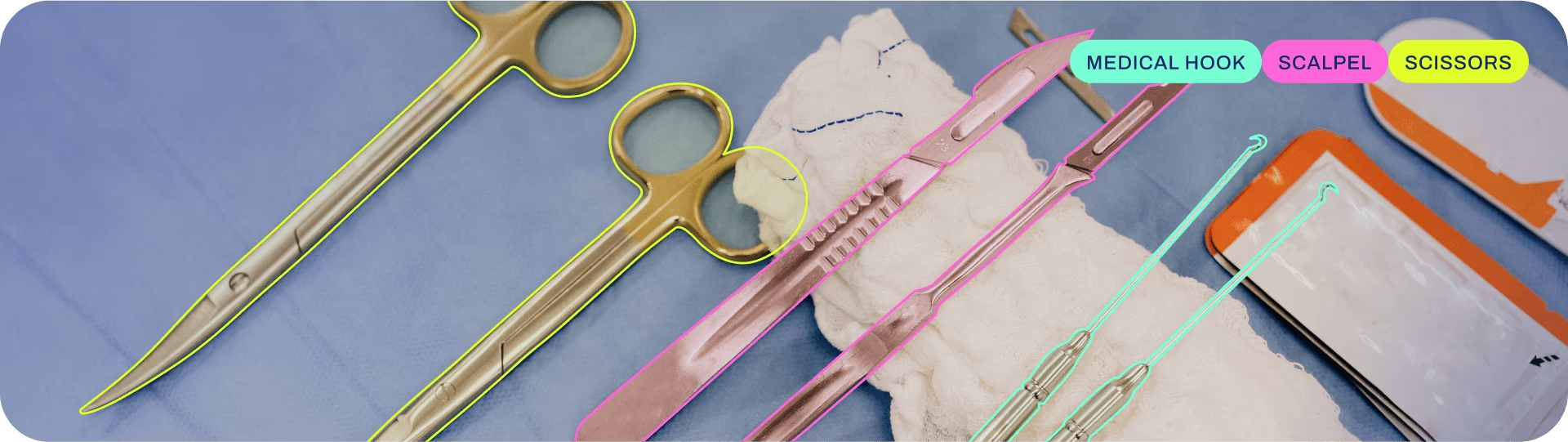

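Instance segmentation goes a step further than object detection: it identifies individual objects in an image and segments them from the rest of the image.

The output of an instance segmentation model is a set of masks or contours that outline each object in the image, along with a class label and a confidence score for each object. Instance segmentation is useful when you need to know not only where objects are in an image, but also their exact shape.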


Tip

YOLO26 Segment models use the -seg suffix, e.g. yolo26n-seg.pt, and are pretrained on COCO.

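Models

The pretrained YOLO26 Segment models are listed here. Detect, Segment, and Pose models are pretrained on the COCO dataset, while Classify models are pretrained on the ImageNet dataset.

Models download automatically from the latest Ultralytics release on first use.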

| Model | Size (pixels) | mAP box 50-95 (e2e) | mAP mask 50-95 (e2e) | Speed CPU ONNX (ms) | Speed T4 TensorRT10 (ms) | Params (M) | FLOPs (B) |
|---|---|---|---|---|---|---|---|
| YOLO26n-seg | 640 | 39.6 | 33.9 | 53.3 ± 0.5 | 2.1 ± 0.0 | 2.7 | 9.1 |
| YOLO26s-seg | 640 | 47.3 | 40.0 | 118.4 ± 0.9 | 3.3 ± 0.0 | 10.4 | 34.2 |
| YOLO26m-seg | 640 | 52.5 | 44.1 | 328.2 ± 2.4 | 6.7 ± 0.1 | 23.6 | 121.5 |
| YOLO26l-seg | 640 | 54.4 | 45.5 | 387.0 ± 3.7 | 8.0 ± 0.1 | 28.0 | 139.8 |
| YOLO26x-seg | 640 | 56.5 | 47.0 | 787.0 ± 6.8 | 16.4 ± 0.1 | 62.8 | 313.5 |

- mAPval values are for single-model, single-scale evaluation on the COCO val2017 dataset. Reproduce with: yolo val segment data=coco.yaml device=0
- Speed is averaged over COCO val images on an Amazon EC2 P4d instance. Reproduce with: yolo val segment data=coco.yaml batch=1 device=0|cpu
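The table above can be read as an accuracy versus latency trade-off. As a minimal sketch (the `MODELS` list and `pick_model` helper are hypothetical names for illustration, not part of the Ultralytics API), the following picks the model with the highest mask mAP that still fits a given T4 TensorRT10 latency budget, using the values copied from the table:

```python
# (name, mask mAP 50-95, T4 TensorRT10 latency in ms), copied from the table above.
MODELS = [
    ("YOLO26n-seg", 33.9, 2.1),
    ("YOLO26s-seg", 40.0, 3.3),
    ("YOLO26m-seg", 44.1, 6.7),
    ("YOLO26l-seg", 45.5, 8.0),
    ("YOLO26x-seg", 47.0, 16.4),
]


def pick_model(max_latency_ms):
    """Return the most accurate model within the latency budget, or None if none fits."""
    candidates = [m for m in MODELS if m[2] <= max_latency_ms]
    if not candidates:
        return None
    # Among the models that fit, prefer the highest mask mAP.
    return max(candidates, key=lambda m: m[1])[0]


print(pick_model(5.0))  # YOLO26s-seg
```

Latency on your own hardware will differ from the published numbers, so treat the budget as a starting point and measure on the target device.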

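Train

Train YOLO26n-seg on the COCO8-seg dataset for 100 epochs at image size 640. For a full list of available arguments, see the Configuration page.

Example (Python, then the equivalent CLI commands)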

from ultralytics import YOLO

# Load a model

model = YOLO("yolo26n-seg.yaml") # build a new model from YAML

model = YOLO("yolo26n-seg.pt") # load a pretrained model (recommended for training)

model = YOLO("yolo26n-seg.yaml").load("yolo26n.pt") # build from YAML and transfer weights

# Train the model

results = model.train(data="coco8-seg.yaml", epochs=100, imgsz=640)

# Build a new model from YAML and start training from scratch

yolo segment train data=coco8-seg.yaml model=yolo26n-seg.yaml epochs=100 imgsz=640

# Start training from a pretrained *.pt model

yolo segment train data=coco8-seg.yaml model=yolo26n-seg.pt epochs=100 imgsz=640

# Build a new model from YAML, transfer pretrained weights to it and start training

yolo segment train data=coco8-seg.yaml model=yolo26n-seg.yaml pretrained=yolo26n-seg.pt epochs=100 imgsz=640

Dataset format

The YOLO segmentation dataset format is described in detail in the Dataset Guide. To convert an existing dataset from another format (such as COCO) to YOLO format, use the Ultralytics JSON2YOLO tool.
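For orientation, a YOLO segmentation label file holds one object per line: a class index followed by polygon vertices as x y pairs normalized to the 0-1 range. The sketch below assumes that layout and converts one line to pixel coordinates; `parse_seg_label` and the sample line are made up for illustration, so check the Dataset Guide for the authoritative description.

```python
def parse_seg_label(line, img_w, img_h):
    """Parse one label line into (class_id, [(x_px, y_px), ...])."""
    parts = line.split()
    class_id = int(parts[0])
    coords = [float(v) for v in parts[1:]]
    # A polygon needs at least three vertices, given as complete x y pairs.
    if len(coords) < 6 or len(coords) % 2 != 0:
        raise ValueError("expected at least three x y pairs after the class index")
    # Scale normalized coordinates back to pixels.
    points = [(x * img_w, y * img_h) for x, y in zip(coords[0::2], coords[1::2])]
    return class_id, points


# Hypothetical label line: class 0 with a triangular outline.
class_id, polygon = parse_seg_label("0 0.25 0.25 0.75 0.25 0.5 0.75", img_w=640, img_h=480)
print(class_id, polygon)
```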

Val

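Validate the accuracy of the trained YOLO26n-seg model on the COCO8-seg dataset. No arguments are needed because the model retains its training data and arguments as model attributes.

Example (Python, then the equivalent CLI commands)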

from ultralytics import YOLO

# Load a model

model = YOLO("yolo26n-seg.pt") # load an official model

model = YOLO("path/to/best.pt") # load a custom model

# Validate the model

metrics = model.val() # no arguments needed, dataset and settings remembered

metrics.box.map # map50-95(B)

metrics.box.map50 # map50(B)

metrics.box.map75 # map75(B)

metrics.box.maps # a list containing mAP50-95(B) for each category

metrics.seg.map # map50-95(M)

metrics.seg.map50 # map50(M)

metrics.seg.map75 # map75(M)

metrics.seg.maps # a list containing mAP50-95(M) for each category

yolo segment val model=yolo26n-seg.pt # val official model

yolo segment val model=path/to/best.pt # val custom model
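The 50-95 in metric names such as map50-95(M) refers to COCO-style averaging over IoU thresholds from 0.50 to 0.95 in steps of 0.05; for the mask metrics, IoU is measured between predicted and ground-truth masks. The sketch below illustrates only that thresholding idea on toy binary masks. It is not the validator's implementation, which also ranks predictions by confidence and integrates precision over recall, and `mask_iou` is a hypothetical helper.

```python
def mask_iou(mask_a, mask_b):
    """IoU of two equally sized binary masks given as nested lists of 0/1."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            intersection += 1 if (a and b) else 0
            union += 1 if (a or b) else 0
    return intersection / union if union else 0.0


# IoU thresholds 0.50, 0.55, ..., 0.95 used by the "50-95" average.
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]

predicted = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
]
ground_truth = [
    [1, 1, 1, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]

iou = mask_iou(predicted, ground_truth)  # 4 shared pixels / 6 pixels in the union
matched_at = [t for t in IOU_THRESHOLDS if iou >= t]
print(round(iou, 3), matched_at)  # 0.667 [0.5, 0.55, 0.6, 0.65]
```

A prediction like this one counts as a match at the looser thresholds but not at 0.70 and above, which is why map50 is typically higher than map50-95.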

Predict

Use a trained YOLO26n-seg model to run predictions on images.

Example (Python, then the equivalent CLI commands)

from ultralytics import YOLO

# Load a model

model = YOLO("yolo26n-seg.pt") # load an official model

model = YOLO("path/to/best.pt") # load a custom model

# Predict with the model

results = model("https://ultralytics.com/images/bus.jpg") # predict on an image

# Access the results

for result in results:

    xy = result.masks.xy # mask in polygon format

    xyn = result.masks.xyn # normalized

    masks = result.masks.data # mask in matrix format (num_objects x H x W)

yolo segment predict model=yolo26n-seg.pt source='https://ultralytics.com/images/bus.jpg' # predict with official model

yolo segment predict model=path/to/best.pt source='https://ultralytics.com/images/bus.jpg' # predict with custom model

See the Predict page for full details on predict mode.
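Once you have a mask in polygon format, a common next step is measuring the object. As a minimal sketch, the shoelace formula below computes the area enclosed by a list of (x, y) points in pixel coordinates, which is the shape of one entry of result.masks.xy. The `polygon_area` helper and the sample triangle are hypothetical, and the example uses plain tuples so it runs without a model.

```python
def polygon_area(points):
    """Area enclosed by a simple polygon given as [(x, y), ...] (shoelace formula)."""
    twice_area = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


# Hypothetical polygon in pixel coordinates (base 320 px, height 240 px).
triangle = [(160.0, 120.0), (480.0, 120.0), (320.0, 360.0)]
print(polygon_area(triangle))  # 38400.0
```

The formula assumes a simple, non-self-intersecting outline, so treat the result as an approximation for very irregular masks.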

Export

Export a YOLO26n-seg model to a different format such as ONNX or CoreML.

Example (Python, then the equivalent CLI commands)

from ultralytics import YOLO

# Load a model

model = YOLO("yolo26n-seg.pt") # load an official model

model = YOLO("path/to/best.pt") # load a custom-trained model

# Export the model

model.export(format="onnx")

yolo export model=yolo26n-seg.pt format=onnx # export official model

yolo export model=path/to/best.pt format=onnx # export custom-trained model

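The available YOLO26-seg export formats are listed in the table below. You can export to any format with the format argument, e.g. format='onnx' or format='engine'. You can predict or validate directly on exported models, e.g. yolo predict model=yolo26n-seg.onnx. Usage examples for your model are shown after the export completes.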

| Format | format argument | Model | Metadata | Arguments |
|---|---|---|---|---|
| PyTorch | - | yolo26n-seg.pt | ✅ | - |
| TorchScript | torchscript | yolo26n-seg.torchscript | ✅ | imgsz, half, dynamic, optimize, nms, batch, device |
| ONNX | onnx | yolo26n-seg.onnx | ✅ | imgsz, half, dynamic, simplify, opset, nms, batch, device |
| OpenVINO | openvino | yolo26n-seg_openvino_model/ | ✅ | imgsz, half, dynamic, int8, nms, batch, data, fraction, device |
| TensorRT | engine | yolo26n-seg.engine | ✅ | imgsz, half, dynamic, simplify, workspace, int8, nms, batch, data, fraction, device |
| CoreML | coreml | yolo26n-seg.mlpackage | ✅ | imgsz, dynamic, half, int8, nms, batch, device |
| TF SavedModel | saved_model | yolo26n-seg_saved_model/ | ✅ | imgsz, keras, int8, nms, batch, device |
| TF GraphDef | pb | yolo26n-seg.pb | ❌ | imgsz, batch, device |
| TF Lite | tflite | yolo26n-seg.tflite | ✅ | imgsz, half, int8, nms, batch, data, fraction, device |
| TF Edge TPU | edgetpu | yolo26n-seg_edgetpu.tflite | ✅ | imgsz, device |
| TF.js | tfjs | yolo26n-seg_web_model/ | ✅ | imgsz, half, int8, nms, batch, device |
| PaddlePaddle | paddle | yolo26n-seg_paddle_model/ | ✅ | imgsz, batch, device |
| MNN | mnn | yolo26n-seg.mnn | ✅ | imgsz, batch, int8, half, device |
| NCNN | ncnn | yolo26n-seg_ncnn_model/ | ✅ | imgsz, half, batch, device |
| IMX500 | imx | yolo26n-seg_imx_model/ | ✅ | imgsz, int8, data, fraction, device |
| RKNN | rknn | yolo26n-seg_rknn_model/ | ✅ | imgsz, batch, name, device |
| ExecuTorch | executorch | yolo26n-seg_executorch_model/ | ✅ | imgsz, device |
| Axelera | axelera | yolo26n-seg_axelera_model/ | ✅ | imgsz, int8, data, fraction, device |

See the Export page for full details on export mode.
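Scripts that export a model and then run it need to know what the exported artifact is called. The sketch below encodes the naming pattern from the Model column of the table for a subset of formats and builds the matching predict command. `ARTIFACT_SUFFIX`, `exported_name`, and `predict_command` are hypothetical helpers for illustration, and the sketch returns only the file name, not the directory where the export is written.

```python
from pathlib import Path

# Suffix patterns copied from the "Model" column of the export table (subset only).
ARTIFACT_SUFFIX = {
    "torchscript": ".torchscript",
    "onnx": ".onnx",
    "openvino": "_openvino_model/",
    "engine": ".engine",
    "coreml": ".mlpackage",
    "saved_model": "_saved_model/",
    "tflite": ".tflite",
    "ncnn": "_ncnn_model/",
}


def exported_name(weights, fmt):
    """Return the artifact name the table lists for the given weights and format."""
    return Path(weights).stem + ARTIFACT_SUFFIX[fmt]


def predict_command(weights, fmt, source):
    """Build a CLI predict command that runs the exported model on a source."""
    return f"yolo predict model={exported_name(weights, fmt)} source='{source}'"


print(exported_name("yolo26n-seg.pt", "onnx"))  # yolo26n-seg.onnx
print(predict_command("yolo26n-seg.pt", "onnx", "https://ultralytics.com/images/bus.jpg"))
```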

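FAQ

How do I train a YOLO26 segmentation model on a custom dataset?

To train a YOLO26 segmentation model on a custom dataset, first prepare the dataset in the YOLO segmentation format. Tools such as JSON2YOLO can convert datasets from other formats. Once the dataset is ready, train the model with Python or CLI commands.

Example (Python, then the equivalent CLI command)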

from ultralytics import YOLO

# Load a pretrained YOLO26 segment model

model = YOLO("yolo26n-seg.pt")

# Train the model

results = model.train(data="path/to/your_dataset.yaml", epochs=100, imgsz=640)

yolo segment train data=path/to/your_dataset.yaml model=yolo26n-seg.pt epochs=100 imgsz=640

See the Configuration page for other available arguments.
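The data argument in the example above points at a dataset YAML file. As a rough sketch of what such a file usually contains (a dataset root under path, image directories under train and val, and an index-to-name mapping under names), the helper below generates the text. `build_dataset_yaml`, the directory layout, and the class names are assumptions for illustration; check the Dataset Guide for the exact requirements.

```python
def build_dataset_yaml(root, class_names, train_dir="images/train", val_dir="images/val"):
    """Return dataset YAML text with path, train, val, and names entries."""
    lines = [
        f"path: {root}",       # dataset root directory
        f"train: {train_dir}",  # training images, relative to path
        f"val: {val_dir}",      # validation images, relative to path
        "names:",
    ]
    # Class indices must match the class index used in the label files.
    lines += [f"  {index}: {name}" for index, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


yaml_text = build_dataset_yaml("path/to/your_dataset", ["cat", "dog"])
print(yaml_text)
```

Saving this text as path/to/your_dataset.yaml gives a file that the training call can reference through data.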

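What is the difference between object detection and instance segmentation in YOLO26?

Object detection identifies and localizes objects in an image by drawing bounding boxes around them. Instance segmentation goes further: in addition to the bounding box, it delineates the exact shape of each object. YOLO26 instance segmentation models provide masks or contours that outline each detected object, which is particularly useful for tasks where the precise shape matters, such as medical imaging or autonomous driving.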
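One way to see the difference: a bounding box can always be derived from a segmentation polygon, but the polygon cannot be recovered from the box. The sketch below uses a hypothetical `polygon_to_box` helper and a toy L-shaped outline to show how much of a box can be empty space.

```python
def polygon_to_box(points):
    """Return the tight axis-aligned box (x_min, y_min, x_max, y_max) around a polygon."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)


# Toy L-shaped object: a 4x1 horizontal bar joined to a 1x3 vertical bar (7 units of area).
l_shape = [(0, 0), (4, 0), (4, 1), (1, 1), (1, 4), (0, 4)]

x_min, y_min, x_max, y_max = polygon_to_box(l_shape)
box_area = (x_max - x_min) * (y_max - y_min)
print((x_min, y_min, x_max, y_max), box_area)  # (0, 0, 4, 4) 16
```

Here the box covers 16 units while the object itself covers only 7, so a detector's box alone would overstate the object's extent by more than a factor of two.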

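Why use YOLO26 for instance segmentation?

Ultralytics YOLO26 is a state-of-the-art model recognized for high accuracy and real-time performance, which makes it well suited to instance segmentation tasks. YOLO26 Segment models are pretrained on the COCO dataset, which supports robust performance across a wide variety of objects. YOLO also integrates training, validation, prediction, and export in one workflow, making it versatile for both research and industry applications.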

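How do I load and validate a pretrained YOLO segmentation model?

Loading and validating a pretrained YOLO segmentation model is straightforward. Here is how to do it with both Python and the CLI:

Example (Python, then the equivalent CLI command)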

from ultralytics import YOLO

# Load a pretrained model

model = YOLO("yolo26n-seg.pt")

# Validate the model

metrics = model.val()

print("Mean Average Precision for boxes:", metrics.box.map)

print("Mean Average Precision for masks:", metrics.seg.map)

yolo segment val model=yolo26n-seg.pt

These steps produce validation metrics such as mean Average Precision (mAP), which are essential for assessing model performance.

How do I export a YOLO segmentation model to ONNX format?

Exporting a YOLO segmentation model to ONNX format is simple and can be done with Python or CLI commands.

Example (Python, then the equivalent CLI command)

from ultralytics import YOLO

# Load a pretrained model

model = YOLO("yolo26n-seg.pt")

# Export the model to ONNX format

model.export(format="onnx")

yolo export model=yolo26n-seg.pt format=onnx

For more details on exporting to various formats, see the Export page.