Model Export with Ultralytics YOLO

Introduction

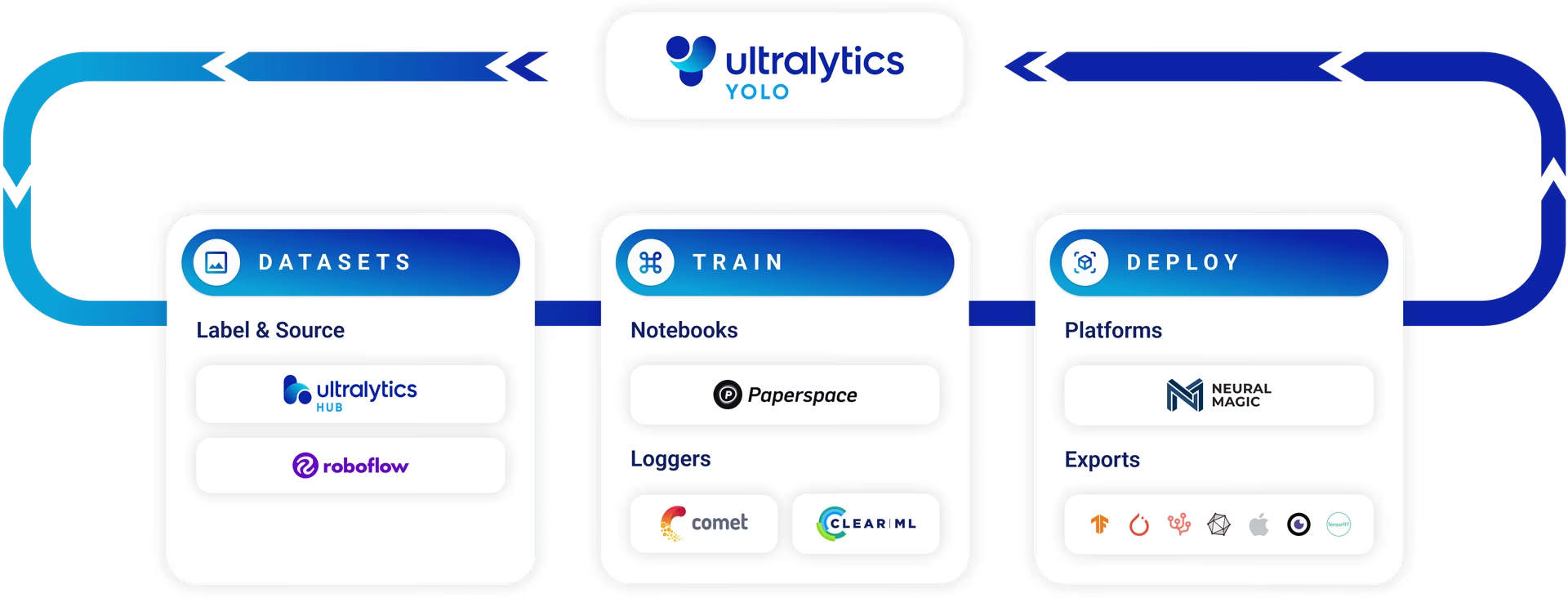

The ultimate goal of training a model is to deploy it for real-world applications. Export mode in Ultralytics YOLO26 offers a versatile range of options for exporting your trained model to different formats, making it deployable across various platforms and devices. This guide walks you through model export and shows how to get the best compatibility and performance from the exported model.


Why Choose YOLO26's Export Mode?

- Versatility: Export to multiple formats including ONNX, TensorRT, CoreML, and more.

- Performance: Gain up to 5x GPU speedup with TensorRT and 3x CPU speedup with ONNX or OpenVINO.

- Compatibility: Make your model universally deployable across numerous hardware and software environments.

- Ease of Use: Simple CLI and Python API for quick and straightforward model exporting.

Key Features of Export Mode

Here are some of the standout functionalities:

- One-Click Export: Simple commands for exporting to different formats.

- Batch Export: Export batched-inference capable models.

- Optimized Inference: Exported models are optimized for quicker inference times.

- Tutorial Videos: In-depth guides and tutorials for a smooth exporting experience.

Usage Examples

Export a YOLO26n model to a different format like ONNX or TensorRT. See the Arguments section below for a full list of export arguments.

Example

Python:

from ultralytics import YOLO

# Load a model
model = YOLO("yolo26n.pt")  # load an official model
model = YOLO("path/to/best.pt")  # load a custom-trained model

# Export the model
model.export(format="onnx")

CLI:

yolo export model=yolo26n.pt format=onnx  # export official model
yolo export model=path/to/best.pt format=onnx  # export custom-trained model
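The Python and CLI forms above take the same argument names, with the CLI writing each one as key=value. As a minimal sketch of that correspondence, the hypothetical helper below (build_export_command is not part of Ultralytics) renders keyword arguments as a yolo export command line. It only covers simple scalar and boolean values; tuple-valued arguments such as a non-square imgsz are outside its scope.

```python
def build_export_command(model: str, **kwargs) -> str:
    """Render Python-style export arguments as a `yolo export` command line.

    Hypothetical helper for illustration only; it does not call Ultralytics.
    """
    parts = ["yolo", "export", f"model={model}"]
    for key, value in kwargs.items():
        # Each keyword argument becomes a key=value token on the command line.
        parts.append(f"{key}={value}")
    return " ".join(parts)


if __name__ == "__main__":
    print(build_export_command("yolo26n.pt", format="onnx"))
    print(build_export_command("yolo26n.pt", format="engine", int8=True))
```

Keeping the command as a plain string makes it easy to log or paste into a shell script next to the equivalent Python call.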

Arguments

This table details the configurations and options available for exporting YOLO models to different formats. These settings are critical for optimizing the exported model's performance, size, and compatibility across various platforms and environments. Proper configuration ensures that the model is ready for deployment in the intended application with optimal efficiency.

| Argument | Type | Default | Description |
|---|---|---|---|
| format | str | 'torchscript' | Target format for the exported model, such as 'onnx', 'torchscript', 'engine' (TensorRT), or others. Each format enables compatibility with different deployment environments. |
| imgsz | int or tuple | 640 | Desired image size for the model input. Can be an integer for square images (e.g., 640 for 640×640) or a tuple (height, width) for specific dimensions. |
| keras | bool | False | Enables export to Keras format for TensorFlow SavedModel, providing compatibility with TensorFlow serving and APIs. |
| optimize | bool | False | Applies optimization for mobile devices when exporting to TorchScript, potentially reducing model size and improving inference performance. Not compatible with NCNN format or CUDA devices. |
| half | bool | False | Enables FP16 (half-precision) quantization, reducing model size and potentially speeding up inference on supported hardware. Not compatible with INT8 quantization or CPU-only exports. Only available for certain formats, e.g. ONNX (see below). |
| int8 | bool | False | Activates INT8 quantization, further compressing the model and speeding up inference with minimal accuracy loss, primarily for edge devices. When used with TensorRT, performs post-training quantization (PTQ). |
| dynamic | bool | False | Allows dynamic input sizes for TorchScript, ONNX, OpenVINO, TensorRT, and CoreML exports, enhancing flexibility in handling varying image dimensions. Automatically set to True when using TensorRT with INT8. |
| simplify | bool | True | Simplifies the model graph for ONNX exports with onnxslim, potentially improving performance and compatibility with inference engines. |
| opset | int | None | Specifies the ONNX opset version for compatibility with different ONNX parsers and runtimes. If not set, uses the latest supported version. |
| workspace | float or None | None | Sets the maximum workspace size in GiB for TensorRT optimizations, balancing memory usage and performance. Use None for auto-allocation by TensorRT up to device maximum. |
| nms | bool | False | Adds Non-Maximum Suppression (NMS) to the exported model when supported (see Export Formats), improving detection post-processing efficiency. Not available for end2end models. |
| batch | int | 1 | Specifies export model batch inference size or the maximum number of images the exported model will process concurrently in predict mode. For Edge TPU exports, this is automatically set to 1. |
| device | str | None | Specifies the device for exporting: GPU (device=0), CPU (device=cpu), MPS for Apple silicon (device=mps) or DLA for NVIDIA Jetson (device=dla:0 or device=dla:1). TensorRT exports automatically use GPU. |
| data | str | 'coco8.yaml' | Path to the dataset configuration file, essential for INT8 quantization calibration. If not specified with INT8 enabled, coco8.yaml will be used as a fallback for calibration. |
| fraction | float | 1.0 | Specifies the fraction of the dataset to use for INT8 quantization calibration. Allows for calibrating on a subset of the full dataset, useful for experiments or when resources are limited. If not specified with INT8 enabled, the full dataset will be used. |
| end2end | bool | None | Overrides the end-to-end mode in YOLO models that support NMS-free inference (YOLO26, YOLOv10). Setting it to False lets you export these models to be compatible with the traditional NMS-based postprocessing pipeline. |

Adjusting these parameters allows for customization of the export process to fit specific requirements, such as deployment environment, hardware constraints, and performance targets. Selecting the appropriate format and settings is essential for achieving the best balance between model size, speed, and accuracy.
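Several rows of the arguments table describe interactions between settings: half and int8 cannot be combined, optimize is not compatible with NCNN, TensorRT with INT8 sets dynamic to True, Edge TPU exports use a batch of 1, and INT8 falls back to coco8.yaml for calibration. The sketch below collects those rules in one place so you can sanity-check a configuration before exporting. normalize_export_args is a hypothetical helper written for this guide, not an Ultralytics function, and the library applies its own checks internally.

```python
def normalize_export_args(**args):
    """Apply the interactions described in the arguments table to a set of export arguments.

    Hypothetical helper for illustration; it only encodes rules stated in the table.
    """
    args.setdefault("format", "torchscript")  # documented default format
    fmt = args["format"]

    if args.get("half") and args.get("int8"):
        raise ValueError("half=True is not compatible with int8=True")
    if args.get("optimize") and fmt == "ncnn":
        raise ValueError("optimize=True is not compatible with the NCNN format")

    if fmt == "engine" and args.get("int8"):
        args["dynamic"] = True  # TensorRT with INT8 sets dynamic automatically
    if fmt == "edgetpu":
        args["batch"] = 1  # Edge TPU exports always use a batch of 1
    if args.get("int8"):
        args.setdefault("data", "coco8.yaml")  # documented calibration fallback
        args.setdefault("fraction", 1.0)  # full dataset unless told otherwise
    return args


if __name__ == "__main__":
    print(normalize_export_args(format="engine", int8=True))
```

Returning a plain dict means the result can be passed straight to model.export(**args) once you are happy with it.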

Export Formats

Available YOLO26 export formats are in the table below. You can export to any format using the format argument, e.g., format='onnx' or format='engine'. You can predict or validate directly on exported models, e.g., yolo predict model=yolo26n.onnx. Usage examples are shown for your model after export completes.

| Format | format Argument | Model | Metadata | Arguments |
|---|---|---|---|---|
| PyTorch | - | yolo26n.pt | ✅ | - |
| TorchScript | torchscript | yolo26n.torchscript | ✅ | imgsz, half, dynamic, optimize, nms, batch, device |
| ONNX | onnx | yolo26n.onnx | ✅ | imgsz, half, dynamic, simplify, opset, nms, batch, device |
| OpenVINO | openvino | yolo26n_openvino_model/ | ✅ | imgsz, half, dynamic, int8, nms, batch, data, fraction, device |
| TensorRT | engine | yolo26n.engine | ✅ | imgsz, half, dynamic, simplify, workspace, int8, nms, batch, data, fraction, device |
| CoreML | coreml | yolo26n.mlpackage | ✅ | imgsz, dynamic, half, int8, nms, batch, device |
| TF SavedModel | saved_model | yolo26n_saved_model/ | ✅ | imgsz, keras, int8, nms, batch, device |
| TF GraphDef | pb | yolo26n.pb | ❌ | imgsz, batch, device |
| TF Lite | tflite | yolo26n.tflite | ✅ | imgsz, half, int8, nms, batch, data, fraction, device |
| TF Edge TPU | edgetpu | yolo26n_edgetpu.tflite | ✅ | imgsz, device |
| TF.js | tfjs | yolo26n_web_model/ | ✅ | imgsz, half, int8, nms, batch, device |
| PaddlePaddle | paddle | yolo26n_paddle_model/ | ✅ | imgsz, batch, device |
| MNN | mnn | yolo26n.mnn | ✅ | imgsz, batch, int8, half, device |
| NCNN | ncnn | yolo26n_ncnn_model/ | ✅ | imgsz, half, batch, device |
| IMX500 | imx | yolo26n_imx_model/ | ✅ | imgsz, int8, data, fraction, device |
| RKNN | rknn | yolo26n_rknn_model/ | ✅ | imgsz, batch, name, device |
| ExecuTorch | executorch | yolo26n_executorch_model/ | ✅ | imgsz, device |
| Axelera | axelera | yolo26n_axelera_model/ | ✅ | imgsz, int8, data, fraction, device |
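Because each format accepts a different subset of arguments, it can help to check a configuration against the table before starting a long export. The following sketch transcribes a few rows of the table into a dictionary and uses it to flag unsupported arguments and to predict the artifact name. EXPORT_FORMATS, unsupported_args, and exported_name are hypothetical names introduced here, and the mapping covers only five formats; extend it from the table as needed.

```python
from pathlib import Path

# Transcribed from the table above: format -> (artifact suffix, supported arguments).
EXPORT_FORMATS = {
    "torchscript": (".torchscript", {"imgsz", "half", "dynamic", "optimize", "nms", "batch", "device"}),
    "onnx": (".onnx", {"imgsz", "half", "dynamic", "simplify", "opset", "nms", "batch", "device"}),
    "openvino": ("_openvino_model", {"imgsz", "half", "dynamic", "int8", "nms", "batch", "data", "fraction", "device"}),
    "engine": (".engine", {"imgsz", "half", "dynamic", "simplify", "workspace", "int8", "nms", "batch", "data", "fraction", "device"}),
    "ncnn": ("_ncnn_model", {"imgsz", "half", "batch", "device"}),
}


def unsupported_args(fmt, **kwargs):
    """Return the argument names that the table does not list for this format."""
    _, supported = EXPORT_FORMATS[fmt]
    return sorted(set(kwargs) - supported)


def exported_name(weights, fmt):
    """Return the artifact name the table shows for these weights and format."""
    suffix, _ = EXPORT_FORMATS[fmt]
    return Path(weights).stem + suffix


if __name__ == "__main__":
    print(unsupported_args("ncnn", imgsz=640, int8=True))
    print(exported_name("yolo26n.pt", "onnx"))
```

A non-empty result from unsupported_args is a hint to revisit the configuration, for example by choosing a format that lists the argument you need.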

FAQ

How do I export a YOLO26 model to ONNX format?

Exporting a YOLO26 model to ONNX format is straightforward with Ultralytics. It provides both Python and CLI methods for exporting models.

Example

Python:

from ultralytics import YOLO

# Load a model
model = YOLO("yolo26n.pt")  # load an official model
model = YOLO("path/to/best.pt")  # load a custom-trained model

# Export the model
model.export(format="onnx")

CLI:

yolo export model=yolo26n.pt format=onnx  # export official model
yolo export model=path/to/best.pt format=onnx  # export custom-trained model

For more details on the process, including advanced options like handling different input sizes, refer to the ONNX integration guide.

What are the benefits of using TensorRT for model export?

Exporting to TensorRT can significantly improve inference performance on NVIDIA GPUs. YOLO26 models exported to TensorRT can achieve up to a 5x GPU speedup, which makes the format well suited to real-time inference applications.

- Versatility: Optimize models for a specific hardware setup.

- Speed: Achieve faster inference through advanced optimizations.

- Compatibility: Integrate smoothly with NVIDIA hardware.

To learn more about integrating TensorRT, see the TensorRT integration guide.

How do I enable INT8 quantization when exporting my YOLO26 model?

INT8 quantization is an excellent way to compress the model and speed up inference, especially on edge devices. Here's how you can enable INT8 quantization:

Example

Python:

from ultralytics import YOLO

model = YOLO("yolo26n.pt")  # Load a model
model.export(format="engine", int8=True)

CLI:

yolo export model=yolo26n.pt format=engine int8=True  # export TensorRT model with INT8 quantization

INT8 quantization can be applied to various formats, such as TensorRT, OpenVINO, and CoreML. For optimal quantization results, provide a representative dataset using the data parameter.
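To make the role of the data and fraction arguments concrete, here is a minimal sketch that assembles an INT8 export configuration with an explicit calibration dataset. int8_export_kwargs and export_int8 are hypothetical helpers, and my_dataset.yaml is a placeholder path, not a real file. The Ultralytics import is deferred so the configuration helper can be used and tested without the library installed.

```python
def int8_export_kwargs(fmt="engine", data="coco8.yaml", fraction=1.0):
    """Build export arguments for INT8 quantization with a calibration dataset.

    Hypothetical helper; defaults mirror the arguments table (coco8.yaml, full dataset).
    """
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be greater than 0 and at most 1")
    return {"format": fmt, "int8": True, "data": data, "fraction": fraction}


def export_int8(weights, **options):
    """Export the given weights with INT8 enabled (requires ultralytics)."""
    from ultralytics import YOLO  # imported lazily; only needed for the real export

    model = YOLO(weights)
    return model.export(**int8_export_kwargs(**options))


if __name__ == "__main__":
    # Calibrate on half of a placeholder dataset; replace the path with your own YAML.
    print(int8_export_kwargs(data="my_dataset.yaml", fraction=0.5))
```

Calling export_int8("yolo26n.pt", data="my_dataset.yaml", fraction=0.5) would then run the actual export, assuming the dataset file exists and the target format supports INT8.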

Why is dynamic input size important when exporting models?

Dynamic input size lets the exported model accept images of varying dimensions rather than one fixed shape. For formats such as ONNX or TensorRT, this means a single exported model can serve inputs of different shapes.

To enable this feature, use the dynamic=True flag during export:

Example

Python:

from ultralytics import YOLO

model = YOLO("yolo26n.pt")
model.export(format="onnx", dynamic=True)

CLI:

yolo export model=yolo26n.pt format=onnx dynamic=True

Dynamic input sizing is particularly useful for applications where input dimensions may vary, such as video processing or when handling images from different sources.

What are the key export arguments to consider for optimizing model performance?

Understanding and configuring export arguments is crucial for optimizing model performance:

- format: The target format for the exported model (e.g., onnx, torchscript, engine).

- imgsz: Desired image size for the model input (e.g., 640 or (height, width)).

- half: Enables FP16 quantization, reducing model size and potentially speeding up inference.

- optimize: Applies specific optimizations for mobile or constrained environments.

- int8: Enables INT8 quantization, highly beneficial for edge AI deployments.

For deployment on specific hardware platforms, consider using specialized export formats like TensorRT for NVIDIA GPUs, CoreML for Apple devices, or Edge TPU for Google Coral devices.
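The hardware recommendations above can be written down as a small lookup. This is only a sketch: the target labels (nvidia_gpu, apple, google_coral) and the ONNX fallback are assumptions made for illustration, not Ultralytics identifiers or defaults.

```python
# Hypothetical target labels mapped to the format values recommended above.
HARDWARE_TO_FORMAT = {
    "nvidia_gpu": "engine",  # TensorRT
    "apple": "coreml",  # CoreML
    "google_coral": "edgetpu",  # Edge TPU
}


def pick_format(target, default="onnx"):
    """Return the suggested export format for a target, or a fallback if unknown."""
    return HARDWARE_TO_FORMAT.get(target, default)


if __name__ == "__main__":
    for target in ("nvidia_gpu", "apple", "google_coral", "generic_cpu"):
        print(target, "->", pick_format(target))
```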

What do the output tensors represent in exported YOLO models?

When you export a YOLO model to formats like ONNX or TensorRT, the output tensor structure depends on the model task. Understanding these outputs is important for custom inference implementations.

For detection models (e.g., yolo26n.pt), the output is typically a single tensor shaped like (batch_size, 4 + num_classes, num_predictions) where the channels represent box coordinates plus per-class scores, and num_predictions depends on the export input resolution (and can be dynamic).
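As a rough illustration of reading that layout, the sketch below walks one image's output of shape (4 + num_classes, num_predictions) and keeps the best-scoring class for each prediction above a confidence threshold. It uses plain Python lists so it runs without dependencies; in practice you would likely use NumPy. decode_detections and the 0.25 threshold are assumptions for this example, the box values are passed through without interpreting their coordinate format, and no NMS is applied. Models exported with a different post-processing setup may not match this layout.

```python
def decode_detections(pred, conf_thres=0.25):
    """Pick the top class per prediction from a (4 + num_classes, num_predictions) output.

    pred is a list of rows: the first 4 rows are box values, the rest are class scores.
    Returns a list of (box, class_id, score) tuples above the threshold.
    """
    num_predictions = len(pred[0])
    detections = []
    for i in range(num_predictions):
        box = [pred[c][i] for c in range(4)]  # box values for prediction i
        scores = [row[i] for row in pred[4:]]  # per-class scores for prediction i
        class_id = max(range(len(scores)), key=scores.__getitem__)
        if scores[class_id] >= conf_thres:
            detections.append((box, class_id, scores[class_id]))
    return detections


if __name__ == "__main__":
    # Toy output with 2 classes and 3 predictions; all values are made up.
    pred = [
        [10.0, 50.0, 90.0],  # box value 0
        [20.0, 60.0, 95.0],  # box value 1
        [5.0, 8.0, 4.0],  # box value 2
        [6.0, 9.0, 3.0],  # box value 3
        [0.9, 0.1, 0.05],  # class 0 scores
        [0.05, 0.7, 0.1],  # class 1 scores
    ]
    for detection in decode_detections(pred):
        print(detection)
```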

For segmentation models (e.g., yolo26n-seg.pt), you'll typically get two outputs: the first tensor shaped like (batch_size, 4 + num_classes + mask_dim, num_predictions) (boxes, class scores, and mask coefficients), and the second tensor shaped like (batch_size, mask_dim, proto_h, proto_w) containing mask prototypes used with the coefficients to generate instance masks. Sizes depend on the export input resolution (and can be dynamic).

For pose models (e.g., yolo26n-pose.pt), the output tensor is typically shaped like (batch_size, 4 + num_classes + keypoint_dims, num_predictions), where keypoint_dims depends on the pose specification (e.g., number of keypoints and whether confidence is included), and num_predictions depends on the export input resolution (and can be dynamic).
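The channel counts in the shapes above follow simple arithmetic, sketched here for the three tasks. output_channels is a hypothetical helper, and it assumes keypoint_dims equals the number of keypoints times the values stored per keypoint, which is one reading of the description above. The example numbers (80 classes, 32 mask coefficients, 17 keypoints with 3 values each) are illustrative, not guaranteed properties of any particular model.

```python
def output_channels(task, num_classes, mask_dim=0, num_keypoints=0, dims_per_keypoint=0):
    """Return the size of the channel axis for a detect, segment, or pose output tensor."""
    channels = 4 + num_classes  # box coordinates plus per-class scores
    if task == "segment":
        channels += mask_dim  # mask coefficients
    elif task == "pose":
        channels += num_keypoints * dims_per_keypoint  # assumed keypoint_dims
    elif task != "detect":
        raise ValueError(f"unknown task: {task}")
    return channels


if __name__ == "__main__":
    print(output_channels("detect", num_classes=80))
    print(output_channels("segment", num_classes=80, mask_dim=32))
    print(output_channels("pose", num_classes=1, num_keypoints=17, dims_per_keypoint=3))
```

Comparing the computed value with the channel axis of your exported model's output is a quick way to confirm which layout you are dealing with before writing post-processing code.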

The ONNX inference examples demonstrate how to process these outputs for each model type.