CoreML Export for YOLO26 Models

Deploying computer vision models on Apple devices such as iPhones and Macs requires a model format that runs efficiently on Apple hardware.

The CoreML export format lets you optimize your Ultralytics YOLO26 models for efficient object detection in iOS and macOS applications. This guide walks through converting your models to the CoreML format so that they run well on Apple devices.

CoreML

CoreML is Apple's foundational machine learning framework that builds upon Accelerate, BNNS, and Metal Performance Shaders. It provides a machine-learning model format that seamlessly integrates into iOS applications and supports tasks such as image analysis, natural language processing, audio-to-text conversion, and sound analysis.

Because the Core ML framework runs on-device, applications can use it without a network connection or API calls: model inference is performed locally on the user's device.

Key Features of CoreML Models

Apple's CoreML framework offers robust features for on-device machine learning. Here are the key features that make CoreML a powerful tool for developers:

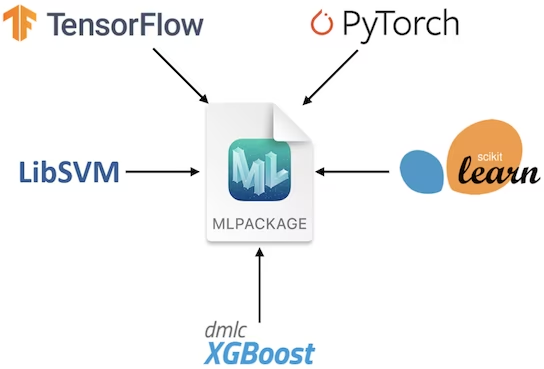

- Comprehensive Model Support: Converts and runs models from popular frameworks like TensorFlow, PyTorch, scikit-learn, XGBoost, and LibSVM.

- On-device Machine Learning: Ensures data privacy and swift processing by executing models directly on the user's device, eliminating the need for network connectivity.

- Performance and Optimization: Uses the device's CPU, GPU, and Neural Engine for optimal performance with minimal power and memory usage. Offers tools for model compression and optimization while maintaining accuracy.

- Ease of Integration: Provides a unified format for various model types and a user-friendly API for seamless integration into apps. Supports domain-specific tasks through frameworks like Vision and Natural Language.

- Advanced Features: Includes on-device training capabilities for personalized experiences, asynchronous predictions for interactive ML experiences, and model inspection and validation tools.
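
The CPU, GPU, and Neural Engine point above can be made concrete with coremltools, Apple's Python package for working with Core ML models. The following is a minimal sketch rather than part of the Ultralytics workflow: `compute_unit_name` and `load_mlpackage` are hypothetical helper names, the `yolo26n.mlpackage` path assumes a model exported as described later in this guide, and running predictions through coremltools requires macOS.

```python
def compute_unit_name(use_gpu: bool = True, use_neural_engine: bool = True) -> str:
    """Map two hardware preferences to a coremltools ComputeUnit member name."""
    if use_gpu and use_neural_engine:
        return "ALL"  # CPU, GPU, and Neural Engine
    if use_gpu:
        return "CPU_AND_GPU"
    if use_neural_engine:
        return "CPU_AND_NE"  # available in recent coremltools releases
    return "CPU_ONLY"


def load_mlpackage(path: str, use_gpu: bool = True, use_neural_engine: bool = True):
    """Load a Core ML model restricted to the requested compute units."""
    import coremltools as ct  # imported lazily; assumes `pip install coremltools`

    unit = getattr(ct.ComputeUnit, compute_unit_name(use_gpu, use_neural_engine))
    return ct.models.MLModel(path, compute_units=unit)
```

For example, `load_mlpackage("yolo26n.mlpackage", use_gpu=False)` would ask Core ML to use only the CPU and Neural Engine, which can be handy when comparing latency across compute units.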

CoreML Deployment Options

Before we look at the code for exporting YOLO26 models to the CoreML format, let's understand where CoreML models are usually used.

CoreML offers various deployment options for machine learning models, including:

- On-Device Deployment: This method directly integrates CoreML models into your iOS app. It's particularly advantageous for ensuring low latency, enhanced privacy (since data remains on the device), and offline functionality. This approach, however, may be limited by the device's hardware capabilities, especially for larger and more complex models. It can be executed in the following two ways:

    - Embedded Models: These models are included in the app bundle and are immediately accessible. They are ideal for small models that do not require frequent updates.

    - Downloaded Models: These models are fetched from a server as needed. This approach is suitable for larger models or those needing regular updates. It helps keep the app bundle size smaller.

- Cloud-Based Deployment: CoreML models are hosted on servers and accessed by the iOS app through API requests. This scalable and flexible option enables easy model updates without app revisions. It's ideal for complex models or large-scale apps requiring regular updates. However, it does require an internet connection and may pose latency and security issues.

Exporting YOLO26 Models to CoreML

Exporting YOLO26 to CoreML enables optimized, on-device machine learning performance within Apple's ecosystem, offering benefits in terms of efficiency, security, and seamless integration with iOS, macOS, watchOS, and tvOS platforms.

Installation

To install the required package, run:

# Install the required package for YOLO26

pip install ultralytics

For detailed instructions and best practices, see our YOLO26 Installation guide. If you run into difficulties while installing the required packages for YOLO26, consult our Common Issues guide for solutions and tips.
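
To confirm that the installation succeeded before attempting an export, a quick check along these lines may help. This is a sketch using only the Python standard library; `installed_version` is a hypothetical helper name, not an Ultralytics function.

```python
from importlib import metadata


def installed_version(package: str):
    """Return the installed version string of a package, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


version = installed_version("ultralytics")
if version:
    print(f"ultralytics {version} is installed")
else:
    print("ultralytics is not installed; run: pip install ultralytics")
```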

Usage

Before diving into the usage instructions, be sure to check out the range of YOLO26 models offered by Ultralytics. This will help you choose the most appropriate model for your project requirements.

from ultralytics import YOLO

# Load the YOLO26 model

model = YOLO("yolo26n.pt")

# Export the model to CoreML format

model.export(format="coreml") # creates 'yolo26n.mlpackage'

# Load the exported CoreML model

coreml_model = YOLO("yolo26n.mlpackage")

# Run inference

results = coreml_model("https://ultralytics.com/images/bus.jpg")

# CLI equivalent: export a YOLO26n PyTorch model to CoreML format

yolo export model=yolo26n.pt format=coreml # creates 'yolo26n.mlpackage'

# Run inference with the exported model

yolo predict model=yolo26n.mlpackage source='https://ultralytics.com/images/bus.jpg'
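
The inference call above returns a list of result objects. As a rough sketch of how the detections might be read out, the hypothetical helper `summarize_detections` below pairs each class name with its confidence. It assumes the standard Ultralytics detection result attributes `names`, `boxes.cls`, and `boxes.conf`; `run_coreml_demo` is likewise an illustrative name and is not called automatically.

```python
def summarize_detections(result):
    """Return (class_name, confidence) pairs for one detection result."""
    names = result.names  # mapping of class index -> class name
    boxes = result.boxes  # detected boxes exposing .cls and .conf
    return [
        (names[int(cls_id)], round(float(conf), 3))
        for cls_id, conf in zip(boxes.cls, boxes.conf)
    ]


def run_coreml_demo(model_path="yolo26n.mlpackage"):
    """Run the exported model on the sample image and print its detections."""
    from ultralytics import YOLO  # imported lazily so the helper above stays standalone

    coreml_model = YOLO(model_path)
    results = coreml_model("https://ultralytics.com/images/bus.jpg")
    for name, conf in summarize_detections(results[0]):
        print(f"{name}: {conf}")
```

Calling `run_coreml_demo()` after the export step prints one line per detected object.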

Export Arguments

| Argument | Type | Default | Description |
|---|---|---|---|
| format | str | 'coreml' | Target format for the exported model, defining compatibility with various deployment environments. |
| imgsz | int or tuple | 640 | Desired image size for the model input. Can be an integer for square images or a tuple (height, width) for specific dimensions. |
| half | bool | False | Enables FP16 (half-precision) quantization, reducing model size and potentially speeding up inference on supported hardware. |
| int8 | bool | False | Activates INT8 quantization, further compressing the model and speeding up inference with minimal accuracy loss, primarily for edge devices. |
| nms | bool | False | Adds Non-Maximum Suppression (NMS), essential for accurate and efficient detection post-processing. |
| batch | int | 1 | Specifies export model batch inference size or the max number of images the exported model will process concurrently in predict mode. |
| device | str | None | Specifies the device for exporting: GPU (device=0), CPU (device=cpu), MPS for Apple silicon (device=mps). |
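
As an illustration of how the arguments in the table combine, the sketch below collects them into a single dictionary and passes it to `model.export`. The helper names `build_export_kwargs` and `export_coreml` are hypothetical conveniences, not part of the Ultralytics API; only the argument names and defaults come from the table.

```python
def build_export_kwargs(imgsz=640, half=False, int8=False, nms=False, batch=1, device=None):
    """Collect the CoreML export arguments from the table into one dict."""
    if isinstance(imgsz, (tuple, list)):
        if len(imgsz) != 2:
            raise ValueError("imgsz must be an int or a (height, width) pair")
        imgsz = tuple(int(v) for v in imgsz)
    else:
        imgsz = int(imgsz)
    kwargs = {
        "format": "coreml",
        "imgsz": imgsz,
        "half": half,
        "int8": int8,
        "nms": nms,
        "batch": batch,
    }
    if device is not None:  # leave the device unset to use the default
        kwargs["device"] = device
    return kwargs


def export_coreml(weights="yolo26n.pt", **options):
    """Export the given weights to CoreML with the chosen options."""
    from ultralytics import YOLO  # imported lazily; requires `pip install ultralytics`

    model = YOLO(weights)
    return model.export(**build_export_kwargs(**options))
```

For instance, `export_coreml(half=True, nms=True)` would request an FP16 model with NMS included, while `export_coreml(imgsz=(480, 640))` would request a non-square input.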

Tip

Please make sure to use a macOS or x86 Linux machine when exporting to CoreML.

For more details about the export process, visit the Ultralytics documentation page on exporting.
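
The platform requirement in the tip above can be checked up front in a script. The following sketch encodes only what the tip states (macOS, or Linux on x86); `can_export_coreml` is a hypothetical helper, and the accepted machine strings `x86_64` and `amd64` are an assumption about how x86 Linux hosts typically report themselves.

```python
import platform


def can_export_coreml(system=None, machine=None) -> bool:
    """Return True on macOS or x86 Linux, the platforms named in the tip."""
    system = system or platform.system()
    machine = (machine or platform.machine()).lower()
    if system == "Darwin":  # macOS, Intel or Apple silicon
        return True
    return system == "Linux" and machine in {"x86_64", "amd64"}


if not can_export_coreml():
    print("Warning: CoreML export is expected to need macOS or x86 Linux.")
```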

Deploying Exported YOLO26 CoreML Models

Having successfully exported your Ultralytics YOLO26 models to CoreML, the next critical phase is deploying these models effectively. For detailed guidance on deploying CoreML models in various environments, check out these resources:

- CoreML Tools: This guide includes instructions and examples to convert models from TensorFlow, PyTorch, and other libraries to Core ML.

- ML and Vision: A collection of comprehensive videos that cover various aspects of using and implementing CoreML models.

- Integrating a Core ML Model into Your App: A comprehensive guide on integrating a CoreML model into an iOS application, detailing steps from preparing the model to implementing it in the app for various functionalities.

Summary

In this guide, we covered how to export Ultralytics YOLO26 models to CoreML format. By following the steps above, you can export YOLO26 models to CoreML and run them on Apple devices.

For further details on usage, visit the CoreML official documentation.

Also, if you'd like to know more about other Ultralytics YOLO26 integrations, visit our integration guide page. You'll find plenty of valuable resources and insights there.

FAQ

How do I export YOLO26 models to CoreML format?

To export your Ultralytics YOLO26 models to CoreML format, you'll first need to ensure you have the ultralytics package installed. You can install it using:

Installation

pip install ultralytics

Next, you can export the model using the following Python or CLI commands:

Usage

from ultralytics import YOLO

model = YOLO("yolo26n.pt")

model.export(format="coreml")

yolo export model=yolo26n.pt format=coreml

For further details, refer to the Exporting YOLO26 Models to CoreML section of our documentation.

What are the benefits of using CoreML for deploying YOLO26 models?

CoreML provides numerous advantages for deploying Ultralytics YOLO26 models on Apple devices:

- On-device Processing: Enables local model inference on devices, ensuring data privacy and minimizing latency.

- Performance Optimization: Leverages the full potential of the device's CPU, GPU, and Neural Engine, optimizing both speed and efficiency.

- Ease of Integration: Offers a seamless integration experience with Apple's ecosystems, including iOS, macOS, watchOS, and tvOS.

- Versatility: Supports a wide range of machine learning tasks such as image analysis, audio processing, and natural language processing using the CoreML framework.

For more details on integrating your CoreML model into an iOS app, check out the guide on Integrating a Core ML Model into Your App.

What are the deployment options for YOLO26 models exported to CoreML?

Once you export your YOLO26 model to CoreML format, you have multiple deployment options:

- On-Device Deployment: Directly integrate CoreML models into your app for enhanced privacy and offline functionality. This can be done as:

    - Embedded Models: Included in the app bundle, accessible immediately.

    - Downloaded Models: Fetched from a server as needed, keeping the app bundle size smaller.

- Cloud-Based Deployment: Host CoreML models on servers and access them via API requests. This approach supports easier updates and can handle more complex models.

For detailed guidance on deploying CoreML models, refer to CoreML Deployment Options.

How does CoreML ensure optimized performance for YOLO26 models?

CoreML ensures optimized performance for Ultralytics YOLO26 models by utilizing various optimization techniques:

- Hardware Acceleration: Uses the device's CPU, GPU, and Neural Engine for efficient computation.

- Model Compression: Provides tools for compressing models to reduce their footprint without compromising accuracy.

- Adaptive Inference: Adapts execution to the device's capabilities to balance speed against power and memory usage.

For more information on performance optimization, visit the CoreML official documentation.

Can I run inference directly with the exported CoreML model?

Yes, you can run inference directly using the exported CoreML model. Below are the commands for Python and CLI:

Running Inference

from ultralytics import YOLO

coreml_model = YOLO("yolo26n.mlpackage")

results = coreml_model("https://ultralytics.com/images/bus.jpg")

yolo predict model=yolo26n.mlpackage source='https://ultralytics.com/images/bus.jpg'

For additional information, refer to the Usage section of the CoreML export guide.