YOLO26 Model Training Made Simple with Paperspace Gradient

Training computer vision models like YOLO26 can be complicated. It involves managing large datasets, using different types of computer hardware like GPUs, TPUs, and CPUs, and making sure data flows smoothly during the training process. Typically, developers end up spending a lot of time managing their computer systems and environments. It can be frustrating when you just want to focus on building the best model.

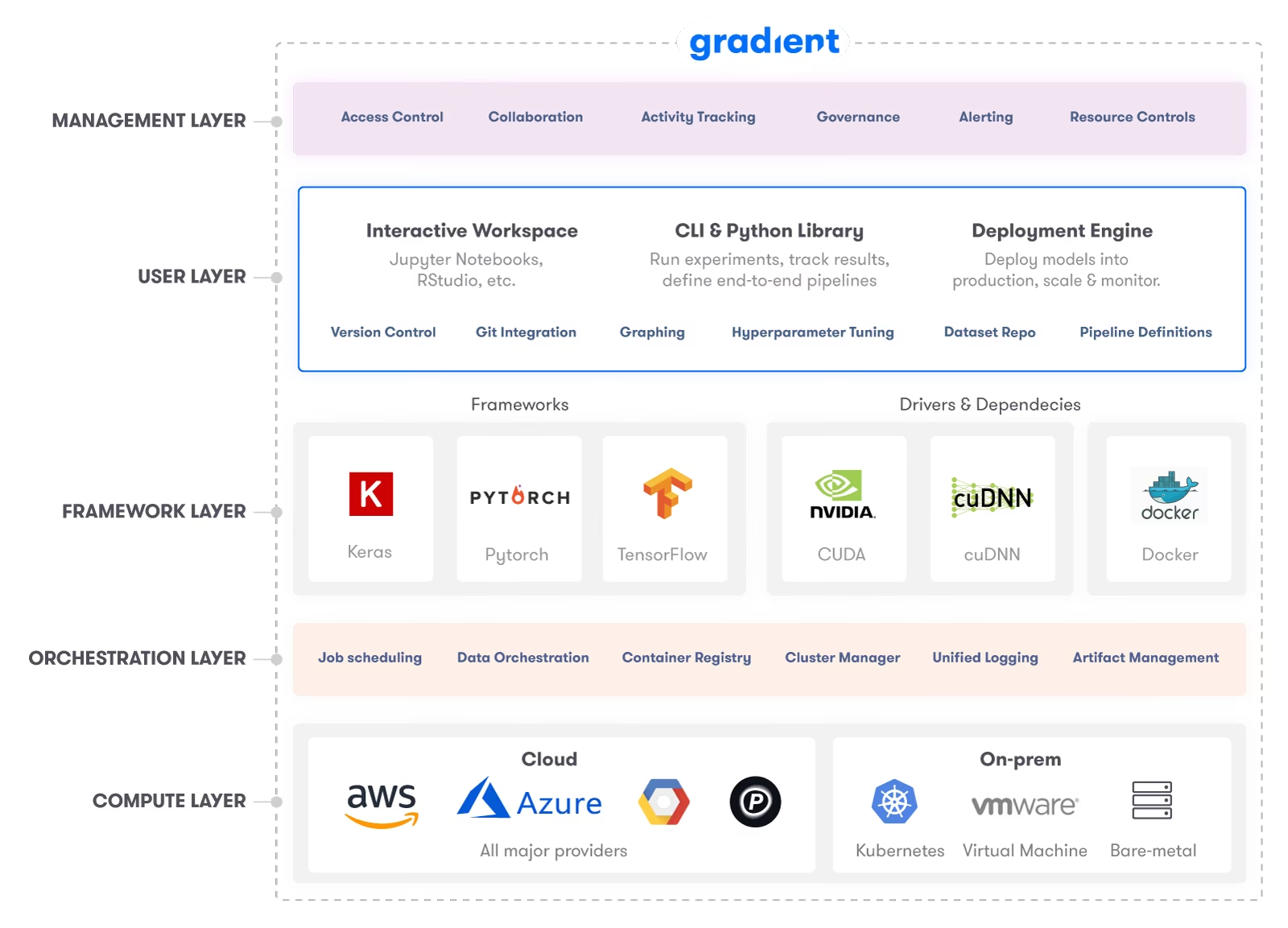

This is where a platform like Paperspace Gradient can make things simpler. Paperspace Gradient is an MLOps platform that lets you build, train, and deploy machine learning models all in one place. With Gradient, developers can focus on training their YOLO26 models without the hassle of managing infrastructure and environments.

Paperspace

Paperspace, launched in 2014 by University of Michigan graduates and acquired by DigitalOcean in 2023, is a cloud platform specifically designed for machine learning. It provides users with powerful GPUs, collaborative Jupyter notebooks, a container service for deployments, automated workflows for machine learning tasks, and high-performance virtual machines. These features aim to streamline the entire machine learning development process, from coding to deployment.

Paperspace Gradient

Paperspace Gradient is a suite of tools designed to make working with AI and machine learning in the cloud much faster and easier. Gradient addresses the entire machine learning lifecycle, from building and training models to deploying them.

Its toolkit includes support for Google's TPUs through a job runner, comprehensive support for Jupyter notebooks and containers, and new programming language integrations. The language integrations stand out in particular, letting users adapt their existing Python projects to run on high-end GPU infrastructure with little effort.

Training YOLO26 Using Paperspace Gradient

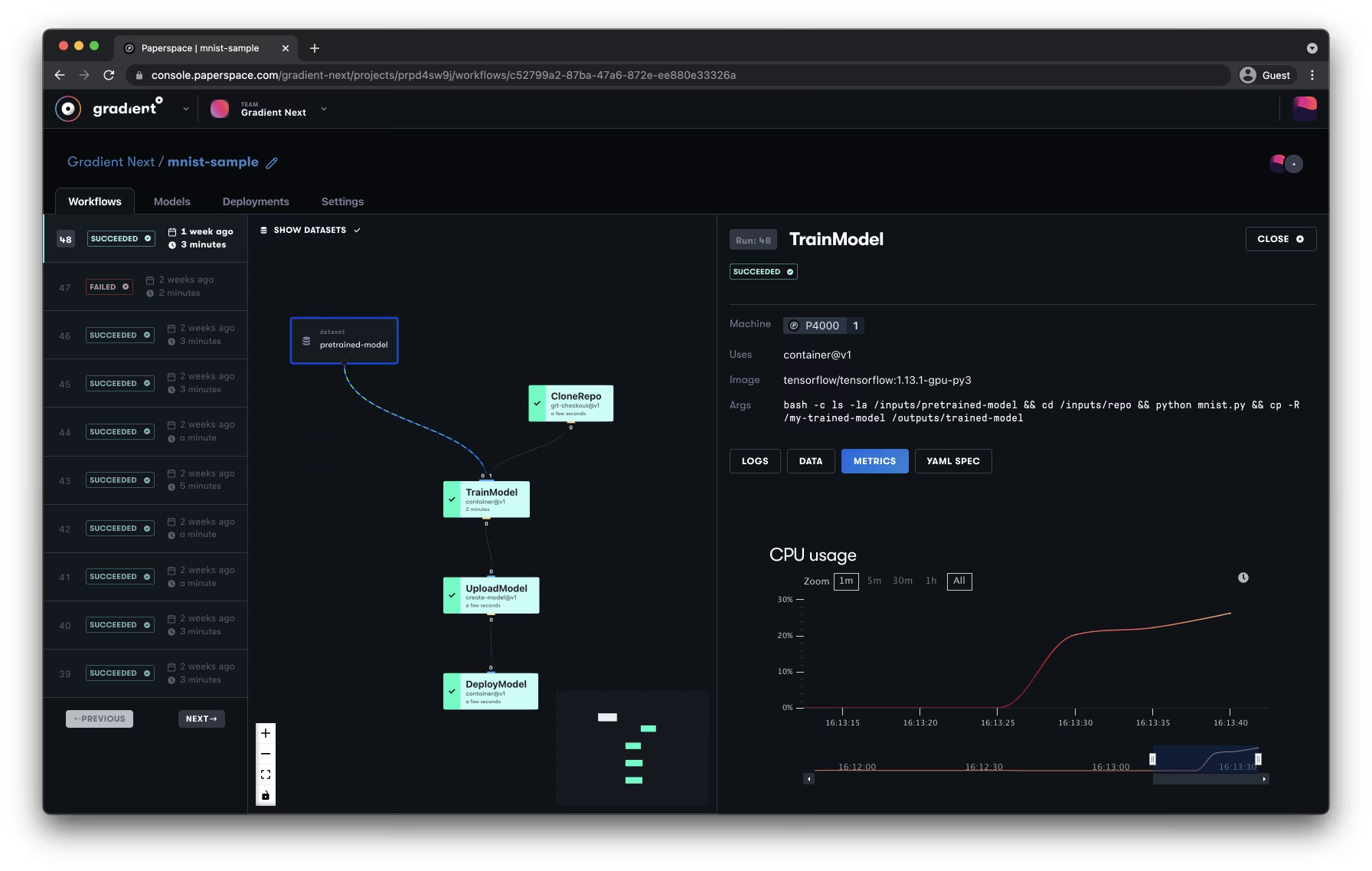

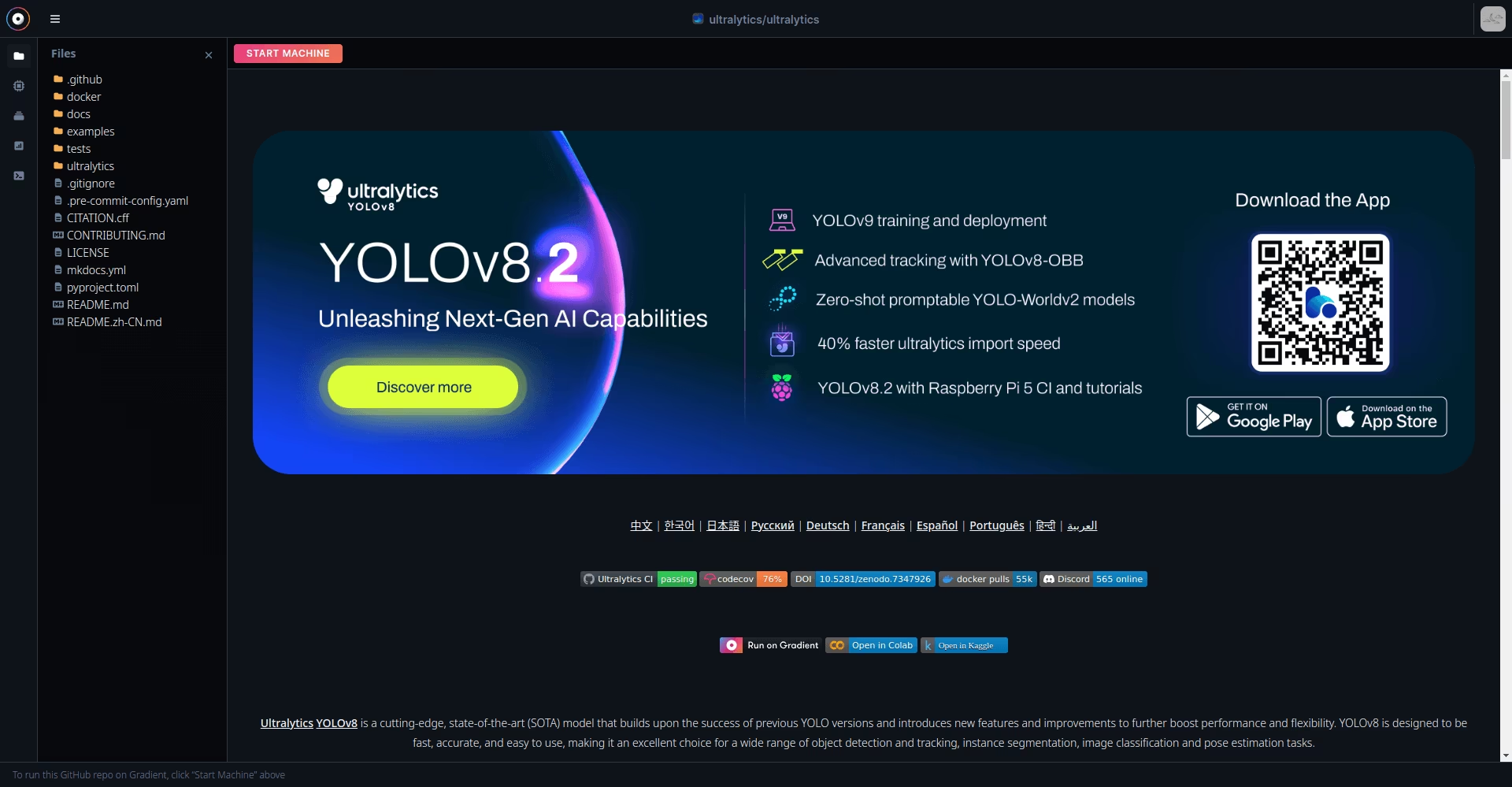

Paperspace Gradient makes training a YOLO26 model possible with a few clicks. Thanks to the integration, you can access the Paperspace console and start training your model immediately. For a detailed understanding of the model training process and best practices, refer to our YOLO26 Model Training guide.

Sign in and then click the "Start Machine" button shown in the image above. In a few seconds, a managed GPU environment will start up, and you can then run the notebook's cells.
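For reference, the training cell in such a notebook usually comes down to a few lines of the Ultralytics Python API. The sketch below is an assumption, not the notebook's exact contents: the `yolo26n.pt` weights name, the `coco8.yaml` sample dataset, and the helper names `pick_device`, `build_train_args`, and `train_yolo26` are illustrative choices. It also assumes the notebook has the `ultralytics` package installed; the import is deferred so the helpers can be defined even where it is not.

```python
def pick_device():
    """Return 0 (the first CUDA GPU) if PyTorch sees a GPU, otherwise "cpu"."""
    try:
        import torch
    except ImportError:
        # Without PyTorch there is no GPU to use, so fall back to CPU.
        return "cpu"
    return 0 if torch.cuda.is_available() else "cpu"


def build_train_args(data="coco8.yaml", epochs=100, imgsz=640, device=None):
    """Collect keyword arguments for YOLO.train(); the defaults are illustrative."""
    return {
        "data": data,
        "epochs": epochs,
        "imgsz": imgsz,
        "device": pick_device() if device is None else device,
    }


def train_yolo26(weights="yolo26n.pt", **overrides):
    """Hypothetical helper: load pretrained weights and train on the chosen dataset."""
    # Imported here so this module loads even where ultralytics is not installed.
    from ultralytics import YOLO

    model = YOLO(weights)  # assumed weights name for the nano YOLO26 model
    return model.train(**build_train_args(**overrides))
```

In a notebook cell, `train_yolo26(epochs=3)` would run a short smoke test on the small COCO8 sample before you point `data` at your own dataset YAML.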

Explore more capabilities of YOLO26 and Paperspace Gradient in a discussion with Glenn Jocher, Ultralytics founder, and James Skelton from Paperspace. Watch the discussion below.

Watch: Ultralytics Live Session 7: It's All About the Environment: Optimizing YOLO26 Training With Gradient

Key Features of Paperspace Gradient

As you explore the Paperspace console, you'll see how each step of the machine-learning workflow is supported and enhanced. Here are some things to look out for:

One-Click Notebooks: Gradient provides pre-configured Jupyter Notebooks specifically tailored for YOLO26, eliminating the need for environment setup and dependency management. Simply choose the desired notebook and start experimenting immediately.

Hardware Flexibility: Choose from a range of machine types with varying CPU, GPU, and TPU configurations to suit your training needs and budget. Gradient handles all the backend setup, allowing you to focus on model development.

Experiment Tracking: Gradient automatically tracks your experiments, including hyperparameters, metrics, and code changes. This allows you to easily compare different training runs, identify optimal configurations, and reproduce successful results.

Dataset Management: Efficiently manage your datasets directly within Gradient. Upload, version, and pre-process data with ease, streamlining the data preparation phase of your project.

Model Serving: Deploy your trained YOLO26 models as REST APIs with just a few clicks. Gradient handles the infrastructure, allowing you to easily integrate your object detection models into your applications.

Real-time Monitoring: Monitor the performance and health of your deployed models through Gradient's intuitive dashboard. Gain insights into inference speed, resource utilization, and potential errors.
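Whichever way a dataset is managed in Gradient, Ultralytics training still needs a dataset YAML that points at the images and lists the class names. The sketch below renders one using only the standard library. The mount path `/datasets/my-dataset`, the `images/train` and `images/val` split folders, and the helper names `make_data_yaml` and `write_data_yaml` are assumptions for illustration; adjust them to wherever your data is actually available in the notebook.

```python
from pathlib import Path


def make_data_yaml(root, class_names, train="images/train", val="images/val"):
    """Render an Ultralytics-style dataset YAML as text.

    Assumes plain class names with no YAML special characters (nothing is quoted).
    """
    lines = [f"path: {root}", f"train: {train}", f"val: {val}", "names:"]
    # Ultralytics maps integer class indices to names under the `names` key.
    lines += [f"  {i}: {name}" for i, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


def write_data_yaml(out_file, root, class_names, **splits):
    """Write the YAML to disk and return its path, ready to pass as `data=`."""
    out = Path(out_file)
    out.write_text(make_data_yaml(root, class_names, **splits))
    return out
```

For example, `write_data_yaml("my-dataset.yaml", "/datasets/my-dataset", ["person", "car"])` produces a file whose path can be passed as `data` to `model.train()`.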

Why Should You Use Gradient for Your YOLO26 Projects?

While many options are available for training, deploying, and evaluating YOLO26 models, the Paperspace Gradient integration offers a set of advantages that set it apart from other solutions:

Enhanced Collaboration: Shared workspaces and version control facilitate seamless teamwork and ensure reproducibility, allowing your team to work together effectively and maintain a clear history of your project.

Low-Cost GPUs: Gradient provides access to high-performance GPUs at significantly lower costs than major cloud providers or on-premise solutions. With per-second billing, you only pay for the resources you actually use, optimizing your budget.

Predictable Costs: Gradient's on-demand pricing ensures cost transparency and predictability. You can scale your resources up or down as needed and only pay for the time you use, avoiding unnecessary expenses.

No Commitments: You can adjust your instance types anytime to adapt to changing project requirements and optimize the cost-performance balance. There are no lock-in periods or commitments, providing maximum flexibility.
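To see why per-second billing matters, compare it with billing that rounds every run up to whole hours. The hourly rate below is a made-up number, not a Paperspace price, and the function names are hypothetical; the point is only the arithmetic.

```python
import math


def per_second_cost(hourly_rate, seconds):
    """Cost when usage is billed by the second (the hourly rate is prorated)."""
    return hourly_rate * seconds / 3600


def rounded_hourly_cost(hourly_rate, seconds):
    """Cost when every started hour is billed in full, for comparison."""
    return hourly_rate * math.ceil(seconds / 3600)


# Hypothetical example: a 90-minute training run on a GPU priced at 2.00 per hour.
run_seconds = 90 * 60
print(per_second_cost(2.00, run_seconds))      # 3.0
print(rounded_hourly_cost(2.00, run_seconds))  # 4.0
```

The gap grows with many short experiments, since each one would otherwise be rounded up to a full hour.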

Summary

This guide explored the Paperspace Gradient integration for training YOLO26 models. Gradient provides the tools and infrastructure to accelerate your AI development journey, from effortless model training and evaluation to streamlined deployment options.

For further exploration, visit Paperspace's official documentation.

Also, visit the Ultralytics integration guide page to learn more about different YOLO26 integrations. It's full of insights and tips to take your computer vision projects to the next level.

FAQ

How do I train a YOLO26 model using Paperspace Gradient?

Training a YOLO26 model with Paperspace Gradient is straightforward and efficient. First, sign in to the Paperspace console. Next, click the "Start Machine" button to initiate a managed GPU environment. Once the environment is ready, you can run the notebook's cells to start training your YOLO26 model. For detailed instructions, refer to our YOLO26 Model Training guide.

What are the advantages of using Paperspace Gradient for YOLO26 projects?

Paperspace Gradient offers several unique advantages for training and deploying YOLO26 models:

- Hardware Flexibility: Choose from various CPU, GPU, and TPU configurations.

- One-Click Notebooks: Use pre-configured Jupyter Notebooks for YOLO26 without worrying about environment setup.

- Experiment Tracking: Automatic tracking of hyperparameters, metrics, and code changes.

- Dataset Management: Efficiently manage your datasets within Gradient.

- Model Serving: Deploy models as REST APIs easily.

- Real-time Monitoring: Monitor model performance and resource utilization through a dashboard.

Why should I choose Ultralytics YOLO26 over other object detection models?

Ultralytics YOLO26 stands out for its real-time object detection capabilities and high accuracy. Its seamless integration with platforms like Paperspace Gradient enhances productivity by simplifying the training and deployment process. YOLO26 supports various use cases, from security systems to retail inventory management. Discover the full range of YOLO26's capabilities and benefits in our YOLO26 overview.

Can I deploy my YOLO26 model on edge devices using Paperspace Gradient?

Yes. After training your YOLO26 model on Gradient, you can export it to formats optimized for edge devices, such as TFLite and Edge TPU, and deploy the exported model on your target hardware. Refer to our export guide for instructions on converting your model to the desired format.
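Converting a trained model for the edge is a single Ultralytics `export()` call, where `"tflite"` and `"edgetpu"` are the format names for TFLite and Edge TPU. The sketch below assumes the trained weights sit at `runs/detect/train/weights/best.pt`, the usual location after a first detection training run, and that `ultralytics` is installed; `export_for_edge` and `EDGE_FORMATS` are hypothetical names.

```python
# Ultralytics export format names for the edge targets mentioned above.
EDGE_FORMATS = {"tflite": "tflite", "edge_tpu": "edgetpu"}


def export_for_edge(weights="runs/detect/train/weights/best.pt", target="tflite"):
    """Hypothetical helper: export trained weights to an edge-friendly format."""
    if target not in EDGE_FORMATS:
        raise ValueError(f"unknown target {target!r}; expected one of {sorted(EDGE_FORMATS)}")
    # Imported lazily so the target check above works without ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(weights)
    # export() writes the converted model to disk and returns its path.
    return model.export(format=EDGE_FORMATS[target])
```

Validating the target before loading the model means a typo fails fast instead of after the weights have been loaded.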

How does experiment tracking in Paperspace Gradient help improve YOLO26 training?

Experiment tracking in Paperspace Gradient streamlines the model development process by automatically logging hyperparameters, metrics, and code changes. This allows you to easily compare different training runs, identify optimal configurations, and reproduce successful experiments. Similar functionality can be found in other experiment tracking tools that integrate with Ultralytics YOLO26.
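Whatever tool records the runs, the comparison step comes down to ranking runs by a validation metric and checking which settings changed. The sketch below uses a hypothetical in-memory `Run` record; it is not Gradient's tracking API, and the field names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """One training run: its hyperparameters and a validation score."""
    name: str
    hyperparams: dict
    map50_95: float  # validation mAP50-95; higher is better


def best_run(runs):
    """Return the run with the highest validation mAP50-95."""
    if not runs:
        raise ValueError("no runs to compare")
    return max(runs, key=lambda r: r.map50_95)


def diff_hyperparams(a, b):
    """Map each hyperparameter that differs between two runs to its (a, b) values."""
    keys = sorted(set(a.hyperparams) | set(b.hyperparams))
    return {
        k: (a.hyperparams.get(k), b.hyperparams.get(k))
        for k in keys
        if a.hyperparams.get(k) != b.hyperparams.get(k)
    }
```

With two placeholder runs that differ only in `epochs`, `diff_hyperparams` returns just that key, which is the kind of side-by-side view that helps pin down why one configuration scored higher.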