MLflow Integration for Ultralytics YOLO

Introduction

Experiment logging is a crucial aspect of machine learning workflows that enables tracking of various metrics, parameters, and artifacts. It helps to enhance model reproducibility, debug issues, and improve model performance. Ultralytics YOLO, known for its real-time object detection capabilities, now offers integration with MLflow, an open-source platform for complete machine learning lifecycle management.

This documentation page is a comprehensive guide to setting up and utilizing the MLflow logging capabilities for your Ultralytics YOLO project.

What is MLflow?

MLflow is an open-source platform developed by Databricks for managing the end-to-end machine learning lifecycle. It includes tools for tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow is designed to work with any machine learning library and programming language.

Features

- Metrics Logging: Logs metrics at the end of each epoch and at the end of training.

- Parameter Logging: Logs all the parameters used in training.

- Artifacts Logging: Logs model artifacts, including weights and configuration files, at the end of training.

Setup and Prerequisites

Ensure MLflow is installed. If not, install it using pip:

pip install mlflow

Make sure that MLflow logging is enabled in the Ultralytics settings. This is controlled by the mlflow key in the settings. See the settings page for more info.

Update Ultralytics MLflow Settings

Within the Python environment, call the update method on the settings object to change your settings:

from ultralytics import settings

# Update a setting

settings.update({"mlflow": True})

# Reset settings to default values

settings.reset()

If you prefer using the command-line interface, the following commands will allow you to modify your settings:

# Update a setting

yolo settings mlflow=True

# Reset settings to default values

yolo settings reset

How to Use

Commands

Set a Project Name: You can set the project name via an environment variable:

export MLFLOW_EXPERIMENT_NAME=YOUR_EXPERIMENT_NAME

Or use the project=<project> argument when training a YOLO model, e.g. yolo train project=my_project.

Set a Run Name: Similar to setting a project name, you can set the run name via an environment variable:

export MLFLOW_RUN=YOUR_RUN_NAME

Or use the name=<name> argument when training a YOLO model, e.g. yolo train project=my_project name=my_name.

Start Local MLflow Server: To start tracking, use:

mlflow server --backend-store-uri runs/mlflow

This will start a local server at http://127.0.0.1:5000 by default and save all MLflow logs to the 'runs/mlflow' directory. To specify a different URI, set the MLFLOW_TRACKING_URI environment variable.

Kill MLflow Server Instances: To stop all running MLflow instances, run:

ps aux | grep 'mlflow' | grep -v 'grep' | awk '{print $2}' | xargs kill -9
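The environment variables above can also be set from Python before training starts. The following is a minimal sketch rather than part of the Ultralytics API: the helper name configure_mlflow_env and the example values are hypothetical, and it assumes the variables are read when training begins, so it should be called before any training call.

```python
import os


def configure_mlflow_env(experiment=None, run=None, tracking_uri=None):
    """Set the MLflow-related environment variables described above.

    Hypothetical helper for illustration. Only arguments that are provided
    are written; the mapping of variables that were set is returned.
    """
    candidates = {
        "MLFLOW_EXPERIMENT_NAME": experiment,
        "MLFLOW_RUN": run,
        "MLFLOW_TRACKING_URI": tracking_uri,
    }
    # Skip anything left as None so existing values are not overwritten.
    applied = {key: str(value) for key, value in candidates.items() if value is not None}
    os.environ.update(applied)
    return applied
```

For example, configure_mlflow_env(experiment="my_project", run="my_name") would mirror the two export commands shown above; the names are placeholders.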

Logging

The logging is taken care of by the on_pretrain_routine_end, on_fit_epoch_end, and on_train_end callback functions. These functions are automatically called during the respective stages of the training process, and they handle the logging of parameters, metrics, and artifacts.

Examples

Logging Custom Metrics: You can add custom metrics to be logged by modifying the

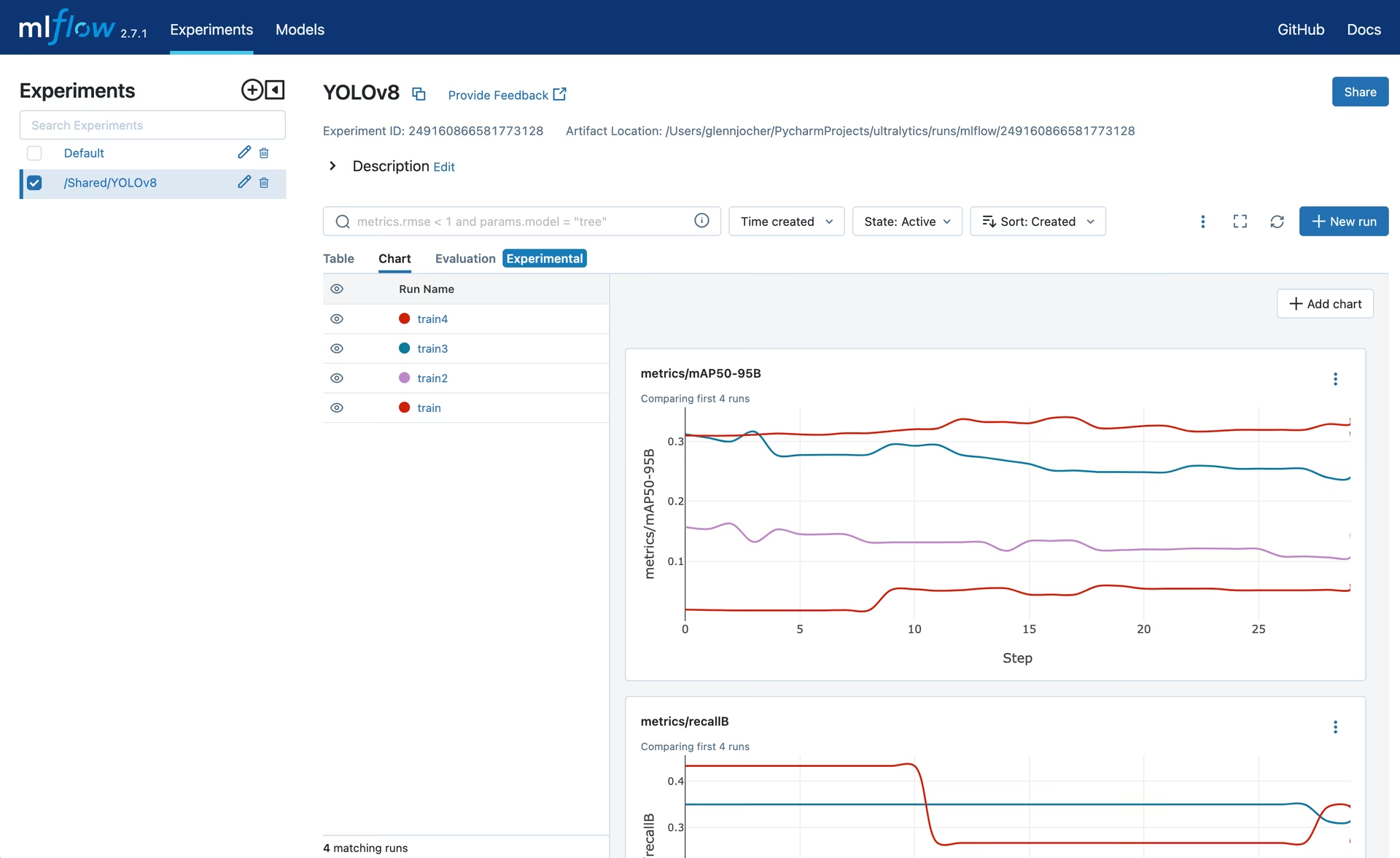

trainer.metricsdictionary beforeon_fit_epoch_endis called.View Experiment: To view your logs, navigate to your MLflow server (usually

http://127.0.0.1:5000) and select your experiment and run.

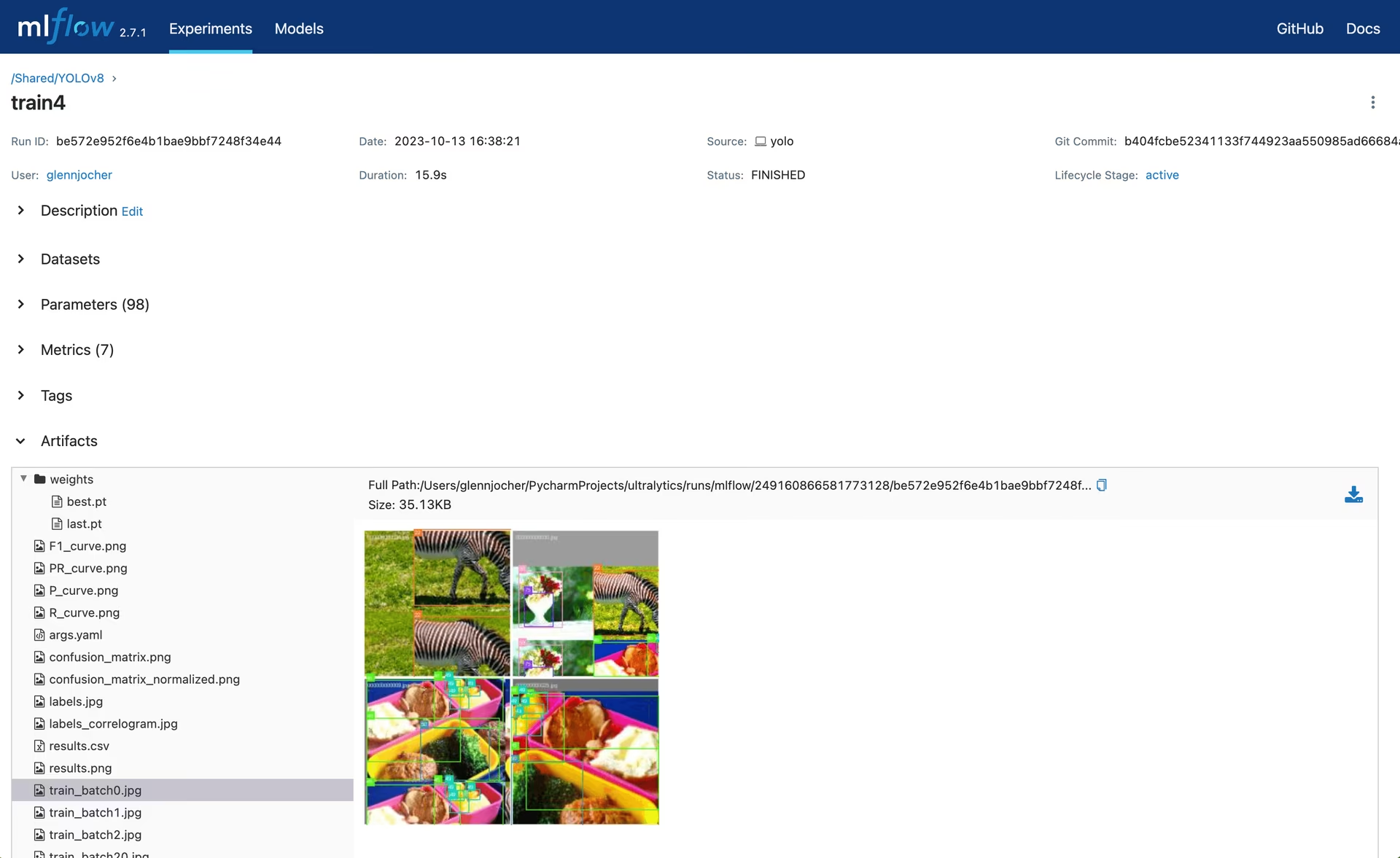

View Run: Runs are individual models inside an experiment. Click on a Run and see the Run details, including uploaded artifacts and model weights.
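As a sketch of the custom-metrics idea from the first example, a callback can write an extra entry into trainer.metrics. The function name add_f1_metric and the key custom/f1 are hypothetical, and the keys metrics/precision(B) and metrics/recall(B) are assumed to be present in trainer.metrics; the function does nothing if they are missing. Registration is shown only in a comment, because which event fires early enough for the value to reach the MLflow callback is not confirmed here.

```python
from types import SimpleNamespace


def add_f1_metric(trainer):
    """Add a derived F1 score to trainer.metrics (hypothetical custom metric)."""
    metrics = trainer.metrics or {}
    precision = metrics.get("metrics/precision(B)")  # assumed key name
    recall = metrics.get("metrics/recall(B)")  # assumed key name
    if precision is None or recall is None:
        return  # Nothing to derive from; leave the metrics untouched.
    total = precision + recall
    trainer.metrics["custom/f1"] = 2 * precision * recall / total if total else 0.0


# Stand-in object so the sketch runs without a training job.
fake_trainer = SimpleNamespace(
    metrics={"metrics/precision(B)": 0.8, "metrics/recall(B)": 0.6}
)
add_f1_metric(fake_trainer)
print(fake_trainer.metrics["custom/f1"])

# With Ultralytics installed, registration would look roughly like this
# (the weights file is an example; pick an event that fires before
# on_fit_epoch_end):
#   from ultralytics import YOLO
#   model = YOLO("yolov8n.pt")
#   model.add_callback("<event>", add_f1_metric)
```

The callback takes the trainer as its only argument, which matches how the logging callbacks named above receive it.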

Disabling MLflow

To turn off MLflow logging:

yolo settings mlflow=False

Conclusion

MLflow logging integration with Ultralytics YOLO offers a streamlined way to keep track of your machine learning experiments. It lets you monitor performance metrics and manage artifacts effectively, which supports robust model development and deployment. For further details, please visit the official MLflow documentation.

FAQ

How do I set up MLflow logging with Ultralytics YOLO?

To set up MLflow logging with Ultralytics YOLO, you first need to ensure MLflow is installed. You can install it using pip:

pip install mlflow

Next, enable MLflow logging in Ultralytics settings. This can be controlled using the mlflow key. For more information, see the settings guide.

Update Ultralytics MLflow Settings

In Python:

from ultralytics import settings

# Update a setting

settings.update({"mlflow": True})

# Reset settings to default values

settings.reset()

From the command line:

# Update a setting

yolo settings mlflow=True

# Reset settings to default values

yolo settings reset

Finally, start a local MLflow server for tracking:

mlflow server --backend-store-uri runs/mlflow

What metrics and parameters can I log using MLflow with Ultralytics YOLO?

Ultralytics YOLO with MLflow supports logging various metrics, parameters, and artifacts throughout the training process:

- Metrics Logging: Tracks metrics at the end of each epoch and upon training completion.

- Parameter Logging: Logs all parameters used in the training process.

- Artifacts Logging: Saves model artifacts like weights and configuration files after training.

For more detailed information, visit the Ultralytics YOLO tracking documentation.

Can I disable MLflow logging once it is enabled?

Yes, you can disable MLflow logging for Ultralytics YOLO by updating the settings. Here's how you can do it using the CLI:

yolo settings mlflow=False

For further customization and resetting settings, refer to the settings guide.

How can I start and stop an MLflow server for Ultralytics YOLO tracking?

To start an MLflow server for tracking your experiments in Ultralytics YOLO, use the following command:

mlflow server --backend-store-uri runs/mlflow

This command starts a local server at http://127.0.0.1:5000 by default. If you need to stop running MLflow server instances, use the following bash command:

ps aux | grep 'mlflow' | grep -v 'grep' | awk '{print $2}' | xargs kill -9

Refer to the commands section for more command options.
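After starting the server, it can be useful to confirm that something is answering at the tracking address before launching a training run. The sketch below uses only the Python standard library; the function name server_is_reachable is hypothetical, and the check only confirms that an HTTP server responds at the URL, not that it is an MLflow server.

```python
import http.client
import urllib.error
import urllib.request


def server_is_reachable(url="http://127.0.0.1:5000", timeout=2.0):
    """Return True if an HTTP server answers at `url`, False otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a success status.
        return True
    except (urllib.error.URLError, http.client.HTTPException, OSError, ValueError):
        # Connection refused, timeout, malformed URL, or a broken response.
        return False
```

Calling server_is_reachable() with no arguments checks the default local address mentioned above; pass a different URL if MLFLOW_TRACKING_URI points elsewhere.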

What are the benefits of integrating MLflow with Ultralytics YOLO for experiment tracking?

Integrating MLflow with Ultralytics YOLO offers several benefits for managing your machine learning experiments:

- Enhanced Experiment Tracking: Easily track and compare different runs and their outcomes.

- Improved Model Reproducibility: Ensure that your experiments are reproducible by logging all parameters and artifacts.

- Performance Monitoring: Visualize performance metrics over time to make data-driven decisions for model improvements.

- Streamlined Workflow: Automate the logging process to focus more on model development rather than manual tracking.

- Collaborative Development: Share experiment results with team members for better collaboration and knowledge sharing.

For an in-depth look at setting up and leveraging MLflow with Ultralytics YOLO, explore the MLflow Integration for Ultralytics YOLO documentation.