YOLO26モデルのTensorRTエクスポート

高性能環境にコンピュータビジョンモデルをデプロイするには、速度と効率を最大化する形式が必要になる場合があります。これは、NVIDIA GPUにモデルをデプロイする場合に特に当てはまります。

TensorRTエクスポート形式を使用することで、Ultralytics YOLO26モデルをNVIDIAハードウェア上での迅速かつ効率的な推論のために強化できます。このガイドでは、変換プロセスに関するわかりやすい手順を提供し、ディープラーニングプロジェクトでNVIDIAの高度なテクノロジーを最大限に活用するのに役立ちます。

TensorRT

TensorRTは、NVIDIAが開発した、高速な深層学習推論のために設計された高度なソフトウェア開発キット(SDK)です。物体検出のようなリアルタイムアプリケーションに最適です。

このツールキットは、NVIDIA GPUの深層学習モデルを最適化し、より高速で効率的なオペレーションを実現します。TensorRTモデルは、レイヤーの融合、精度キャリブレーション(INT8およびFP16)、動的テンソルメモリ管理、カーネルの自動チューニングなどの手法を含むTensorRT最適化を受けます。深層学習モデルをTensorRT形式に変換することで、開発者はNVIDIA GPUの可能性を最大限に引き出すことができます。

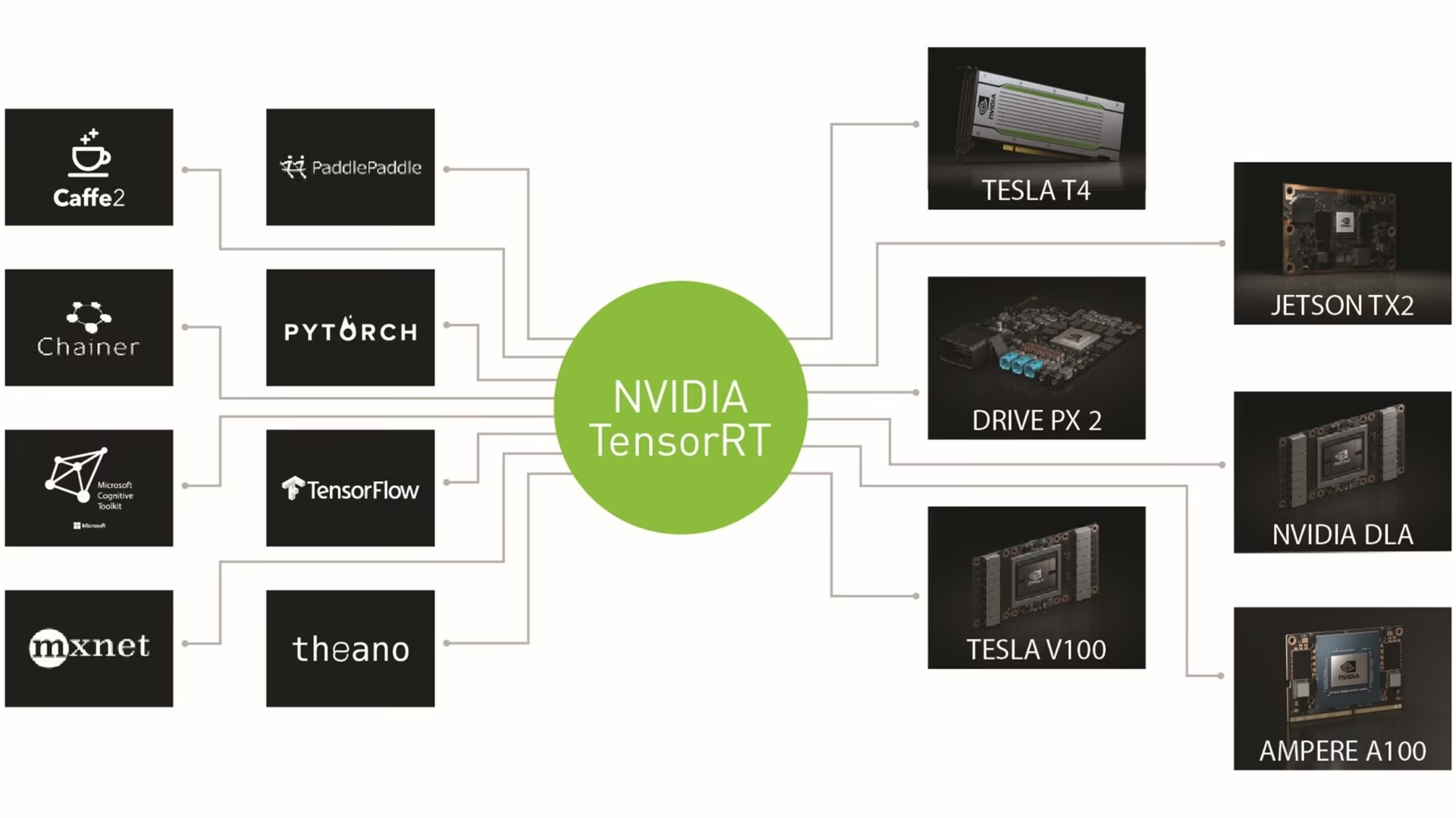

TensorRTは、TensorFlow、PyTorch、ONNXなど、さまざまなモデル形式との互換性があることで知られており、開発者はさまざまなフレームワークからのモデルを統合および最適化するための柔軟なソリューションを利用できます。この多様性により、多様なハードウェアおよびソフトウェア環境全体で効率的なモデルのデプロイメントが可能になります。

TensorRTモデルの主な特徴

TensorRTモデルは、高速深層学習推論における効率と有効性に貢献する、次のような主要な機能を提供します。

精度キャリブレーション: TensorRTは精度キャリブレーションをサポートしており、特定の精度要件に合わせてモデルを微調整できます。これには、INT8やFP16のような低精度形式のサポートが含まれており、許容可能な精度レベルを維持しながら、推論速度をさらに向上させることができます。

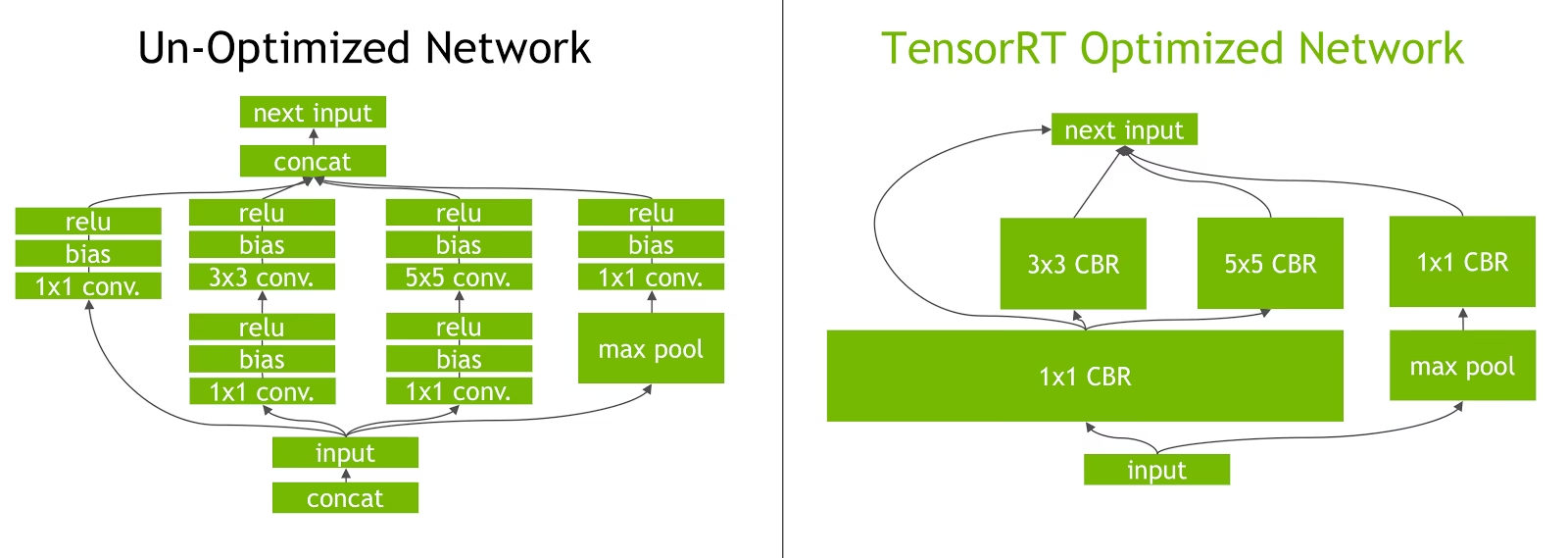

レイヤー融合: TensorRTの最適化プロセスには、レイヤー融合が含まれており、ニューラルネットワークの複数のレイヤーが単一の操作に結合されます。これにより、メモリへのアクセスと計算を最小限に抑えることで、計算のオーバーヘッドが削減され、推論速度が向上します。

動的Tensorメモリ管理: TensorRTは、推論中のtensorメモリの使用量を効率的に管理し、メモリオーバーヘッドを削減し、メモリアロケーションを最適化します。これにより、GPUメモリの使用効率が向上します。

自動カーネルチューニング: TensorRTは、モデルの各層に対して最も最適化されたGPUカーネルを選択するために自動カーネルチューニングを適用します。この適応的なアプローチにより、モデルはGPUの計算能力を最大限に活用できます。

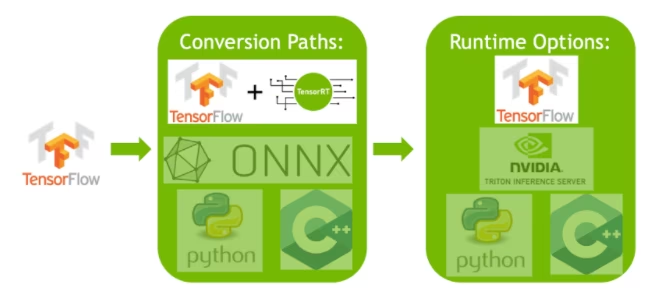

TensorRTでのデプロイメントオプション

YOLO26モデルをTensorRT形式にエクスポートするためのコードを見る前に、TensorRTモデルが通常どこで使用されるかを理解しましょう。

TensorRTはいくつかのデプロイメントオプションを提供しており、各オプションは統合の容易さ、パフォーマンスの最適化、柔軟性のバランスが異なっています。

- TensorFlow内でのデプロイ: このメソッドは、TensorRTをTensorFlowに統合し、最適化されたモデルを使い慣れたTensorFlow環境で実行できるようにします。TF-TRTはサポートされているレイヤーとサポートされていないレイヤーの混在を効率的に処理できるため、このようなモデルに役立ちます。

スタンドアロンTensorRT Runtime API: きめ細かい制御を提供し、パフォーマンスが重要なアプリケーションに最適です。より複雑ですが、サポートされていないオペレーターのカスタム実装が可能です。

NVIDIA Triton Inference Server: さまざまなフレームワークのモデルをサポートするオプションです。特にクラウドまたはエッジ推論に適しており、同時モデル実行やモデル分析などの機能を提供します。

YOLO26モデルのTensorRTへのエクスポート

YOLO26モデルをTensorRT形式に変換することで、実行効率を向上させ、パフォーマンスを最適化できます。

インストール

必要なパッケージをインストールするには、以下を実行します:

インストール

# Install the required package for YOLO26

pip install ultralytics

インストールプロセスに関する詳細な手順とベストプラクティスについては、当社のYOLO26インストールガイドを確認してください。YOLO26に必要なパッケージをインストールする際に何らかの問題に遭遇した場合は、解決策とヒントについては、当社の一般的な問題ガイドを参照してください。

使用法

使用方法の説明に入る前に、Ultralyticsが提供するYOLO26モデルのラインナップを必ずご確認ください。これにより、プロジェクトの要件に最も適したモデルを選択できます。

使用法

from ultralytics import YOLO

# Load the YOLO26 model

model = YOLO("yolo26n.pt")

# Export the model to TensorRT format

model.export(format="engine") # creates 'yolo26n.engine'

# Load the exported TensorRT model

tensorrt_model = YOLO("yolo26n.engine")

# Run inference

results = tensorrt_model("https://ultralytics.com/images/bus.jpg")

# Export a YOLO26n PyTorch model to TensorRT format

yolo export model=yolo26n.pt format=engine # creates 'yolo26n.engine'

# Run inference with the exported model

yolo predict model=yolo26n.engine source='https://ultralytics.com/images/bus.jpg'

エクスポート引数

| 引数 | 種類 | デフォルト | 説明 |

|---|---|---|---|

format | str | 'engine' | エクスポートされたモデルのターゲット形式。さまざまなデプロイメント環境との互換性を定義します。 |

imgsz | int または tuple | 640 | モデル入力に必要な画像サイズ。正方形の画像の場合は整数、タプルの場合は (height, width) 特定の寸法の場合。 |

half | bool | False | FP16(半精度)量子化を有効にし、モデルサイズを縮小し、サポートされているハードウェアでの推論を高速化する可能性があります。 |

int8 | bool | False | INT8量子化を有効にすると、モデルがさらに圧縮され、精度の低下を最小限に抑えながら推論が高速化されます。主にエッジデバイス向けです。 |

dynamic | bool | False | 動的な入力サイズを許可し、さまざまな画像寸法を処理する際の柔軟性を高めます。 |

simplify | bool | True | でモデルグラフを簡素化します onnxslimことで、パフォーマンスと互換性が向上する可能性があります。 |

workspace | float または None | None | TensorRT の最適化のための最大ワークスペースサイズを GiB 単位で設定し、メモリ使用量とパフォーマンスのバランスを取ります。使用方法: None TensorRTによる自動割り当てで、デバイスの最大値まで対応します。 |

nms | bool | False | 正確かつ効率的なdetect後処理に不可欠なNon-Maximum Suppression (NMS)を追加します。 |

batch | int | 1 | エクスポートされたモデルのバッチ推論サイズ、またはエクスポートされたモデルが同時に処理する画像の最大数を指定します。 predict モードを参照してください。 |

data | str | 'coco8.yaml' | へのパス データセット 構成ファイル(デフォルト: coco8.yaml)は、量子化に不可欠です。 |

fraction | float | 1.0 | INT8量子化のキャリブレーションに使用するデータセットの割合を指定します。リソースが限られている場合や実験を行う場合に役立つ、データセットのサブセットでのキャリブレーションが可能です。INT8を有効にして指定しない場合、データセット全体が使用されます。 |

device | str | None | エクスポート先のデバイス(GPU(device=0)、NVIDIA Jetson 用 DLA (device=dla:0 または device=dla:1)。 |

ヒント

TensorRTにエクスポートする際は、CUDAをサポートするGPUを使用してください。

エクスポートプロセスの詳細については、エクスポートに関するUltralyticsドキュメントページをご覧ください。

INT8 量子化による TensorRT のエクスポート

TensorRT を使用して Ultralytics YOLO モデルを INT8 精度でエクスポートすると、トレーニング後の量子化(PTQ)が実行されます。TensorRT は PTQ のキャリブレーションを使用します。これは、YOLO モデルが代表的な入力データで推論を処理するときに、各アクティベーションテンソル内のアクティベーションの分布を測定し、その分布を使用して各テンソルのスケール値を推定します。量子化の候補となる各アクティベーションテンソルには、キャリブレーションプロセスによって推測される関連付けられたスケールがあります。

暗黙的に量子化されたネットワークを処理する場合、TensorRTはレイヤーの実行時間を最適化するために、INT8を機会的に使用します。レイヤーがINT8でより高速に実行され、そのデータ入力および出力に量子化スケールが割り当てられている場合、INT8精度を持つカーネルがそのレイヤーに割り当てられます。それ以外の場合、TensorRTは、そのレイヤーの実行時間がより速くなるFP32またはFP16のいずれかの精度をカーネルに選択します。

ヒント

TensorRTモデルの重みをデプロイに使用するのと同じデバイスでINT8精度でエクスポートすることが重要です。これは、キャリブレーションの結果がデバイスによって異なる可能性があるためです。

INT8エクスポートの設定

使用時に指定された引数 エクスポート Ultralytics YOLOモデルの場合 大幅に エクスポートされたモデルのパフォーマンスに影響を与えます。デフォルトの引数と同様に、利用可能なデバイスリソースに基づいて選択する必要があります。 する必要があります ほとんどの場合に有効です Ampere(またはそれ以降)のNVIDIAディスクリートGPU。使用されるキャリブレーションアルゴリズムは "MINMAX_CALIBRATION" そして、利用可能なオプションに関する詳細を読むことができます。 TensorRT Developer Guideに記載されています。Ultralyticsのテストでは、以下が判明しました。 "MINMAX_CALIBRATION" が最良の選択であり、エクスポートはこのアルゴリズムを使用するように修正されています。

workspace: モデルの重みを変換する際に、デバイスのメモリー割り当てのサイズ(GiB単位)を制御します。調整:

workspace値は、キャリブレーションのニーズとリソースの利用可能性に応じて調整してください。workspaceキャリブレーション時間が長くなる可能性がありますが、TensorRTがより広範囲な最適化戦術を検討できるようになり、モデルのパフォーマンスが向上する可能性があります。 精度。逆に、より小さいworkspaceキャリブレーション時間を短縮できますが、最適化戦略が制限され、量子化されたモデルの品質に影響を与える可能性があります。デフォルトは

workspace=Noneこれにより、TensorRTが自動的にメモリを割り当てることができます。手動で構成する場合、キャリブレーションがクラッシュした場合(警告なしに終了)、この値を大きくする必要がある場合があります。TensorRTはレポートします

UNSUPPORTED_STATEエクスポート中に、値がworkspaceはデバイスで利用可能なメモリよりも大きいため、の値はworkspace下げるか、に設定する必要がありますNone.もし

workspaceが最大値に設定され、キャリブレーションが失敗またはクラッシュする場合は、以下を使用することを検討してください。None自動割り当ての場合、または以下の値を減らすことで対応します。imgszおよびbatchメモリ要件を削減します。INT8のキャリブレーションはデバイスごとに固有であることを覚えておいてください。キャリブレーションのために「ハイエンド」GPUを借りると、別のデバイスで推論を実行した場合にパフォーマンスが低下する可能性があります。

batch:推論に使用される最大バッチサイズ。推論中に、より小さいバッチを使用できますが、指定されたサイズを超えるバッチは受け入れられません。

注

キャリブレーション中、 batch 指定されていない場合は、提供されたサイズが使用されます。小さなバッチを使用すると、キャリブレーション中に不正確なスケーリングが発生する可能性があります。これは、プロセスが見るデータに基づいて調整されるためです。小さなバッチでは、値の全範囲を捉えきれない可能性があり、最終的なキャリブレーションに問題が生じる可能性があります。したがって、 batch サイズは自動的に2倍になります。サイズが バッチサイズ が指定されています batch=1、キャリブレーションは以下で実行されます。 batch=1 * 2 キャリブレーションのスケーリングエラーを減らすため。

NVIDIAによる実験の結果、INT8量子化キャリブレーションでは、モデルのデータを代表する500枚以上のキャリブレーション画像を使用することを推奨しています。これはガイドラインであり、 難しい 要件、そして データセットで良好な結果を得るために何が必要かを実験する必要があります。 TensorRTでINT8キャリブレーションを行うにはキャリブレーションデータが必要なため、必ず data 引数は、のとき int8=True TensorRTを使用する場合 data="my_dataset.yaml"、の画像を使用します。 検証 キャリブレーションに使用します。値が渡されない場合 data INT8量子化によるTensorRTへのエクスポートでは、デフォルトでは次のいずれかが使用されます。 モデルタスクに基づいた「small」なサンプルデータセット エラーをスローする代わりに。

例

from ultralytics import YOLO

model = YOLO("yolo26n.pt")

model.export(

format="engine",

dynamic=True, # (1)!

batch=8, # (2)!

workspace=4, # (3)!

int8=True,

data="coco.yaml", # (4)!

)

# Load the exported TensorRT INT8 model

model = YOLO("yolo26n.engine", task="detect")

# Run inference

result = model.predict("https://ultralytics.com/images/bus.jpg")

- 動的軸でのエクスポート。これは、エクスポート時にデフォルトで有効になります。

int8=True明示的に設定されていなくても有効になります。詳しくは エクスポート引数 詳細については。 - エクスポートされたモデルの最大バッチサイズを8に設定し、キャリブレーションします

batch = 2 * 8キャリブレーション中のスケーリングエラーを回避するため。 - 変換プロセスでデバイス全体を割り当てる代わりに、4 GiBのメモリを割り当てます。

- キャリブレーションにはCOCOデータセットを使用します。具体的には、検証に使用される画像(合計5,000枚)です。

# Export a YOLO26n PyTorch model to TensorRT format with INT8 quantization

yolo export model=yolo26n.pt format=engine batch=8 workspace=4 int8=True data=coco.yaml # creates 'yolo26n.engine'

# Run inference with the exported TensorRT quantized model

yolo predict model=yolo26n.engine source='https://ultralytics.com/images/bus.jpg'

キャリブレーションキャッシュ

TensorRTはキャリブレーションを生成します .cache これは、同じデータを使用して将来のモデルウェイトのエクスポートを高速化するために再利用できますが、データが大幅に異なる場合、または batch 値が大幅に変更された場合。このような状況では、既存の .cache 名前を変更して別のディレクトリに移動するか、完全に削除する必要があります。

TensorRT INT8 で YOLO を使用する利点

モデルサイズの削減: FP32からINT8への量子化により、モデルサイズを4倍削減でき(ディスク上またはメモリ内)、ダウンロード時間の短縮につながります。ストレージ要件が低くなり、モデル展開時のメモリフットプリントが削減されます。

低消費電力: INT8でエクスポートされたYOLOモデルの精度を下げた演算は、特にバッテリー駆動デバイスの場合、FP32モデルと比較して消費電力を抑えることができます。

推論速度の向上: TensorRTは、ターゲットハードウェア向けにモデルを最適化するため、GPU、組み込みデバイス、およびアクセラレータでの推論速度が向上する可能性があります。

推論速度に関する注意

TensorRT INT8にエクスポートされたモデルを使用した最初の数回の推論呼び出しでは、通常よりも長い前処理、推論、および/または後処理時間がかかることが予想されます。これは、以下を変更する場合にも発生する可能性があります。 imgsz 推論中、特に imgsz は、エクスポート時に指定されたもの(エクスポート)と同じではありません。 imgsz は、TensorRTの「最適」プロファイルとして設定されています。

TensorRT INT8でYOLOを使用する場合の欠点

評価指標の低下: より低い精度を使用するということは

mAP,Precision,Recallまたは任意の モデルのパフォーマンスを評価するために使用されるその他のメトリック はやや悪くなる可能性があります。以下を参照してください。 パフォーマンス結果のセクション の違いを比較するため。mAP50およびmAP50-95さまざまなデバイスの小さなサンプルでINT8を使用してエクスポートする場合。開発時間の増加: データセットとデバイスに対するINT8キャリブレーションの「最適な」設定を見つけるには、かなりの量のテストが必要になる場合があります。

ハードウェア依存性: キャリブレーションとパフォーマンスの向上はハードウェアに大きく依存する可能性があり、モデルの重みは移植性が低くなります。

Ultralytics YOLO TensorRTエクスポートのパフォーマンス

NVIDIA A100

パフォーマンス

Ubuntu 22.04.3 LTSでテスト済み。 python 3.10.12, ultralytics==8.2.4, tensorrt==8.6.1.post1

検出ドキュメントで、80の事前学習済みクラスを含むCOCOでトレーニングされたこれらのモデルの使用例をご覧ください。

注

推論時間は以下に対して表示されます mean, min (最速)と max 事前学習済み重みを使用した各テストにおいて (最も遅い) yolov8n.engine

| 適合率 | Evalテスト | 平均 (ミリ秒) | 最小 | 最大 (ms) | mAPval 50(B) | mAPval 50-95(B) | batch | サイズ (ピクセル) |

|---|---|---|---|---|---|---|---|

| FP32 | 予測 | 0.52 | 0.51 | 0.56 | 8 | 640 | ||

| FP32 | COCOval | 0.52 | 0.52 | 0.37 | 1 | 640 | |

| FP16 | 予測 | 0.34 | 0.34 | 0.41 | 8 | 640 | ||

| FP16 | COCOval | 0.33 | 0.52 | 0.37 | 1 | 640 | |

| INT8 | 予測 | 0.28 | 0.27 | 0.31 | 8 | 640 | ||

| INT8 | COCOval | 0.29 | 0.47 | 0.33 | 1 | 640 |

セグメンテーションドキュメントで、80の事前学習済みクラスを含むCOCOでトレーニングされたこれらのモデルの使用例をご覧ください。

注

推論時間は以下に対して表示されます mean, min (最速)と max 事前学習済み重みを使用した各テストにおいて (最も遅い) yolov8n-seg.engine

| 適合率 | Evalテスト | 平均 (ミリ秒) | 最小 | 最大 (ms) | mAPval 50(B) | mAPval 50-95(B) | mAPval 50(M) | mAPval 50-95(M) | batch | サイズ (ピクセル) |

|---|---|---|---|---|---|---|---|---|---|

| FP32 | 予測 | 0.62 | 0.61 | 0.68 | 8 | 640 | ||||

| FP32 | COCOval | 0.63 | 0.52 | 0.36 | 0.49 | 0.31 | 1 | 640 | |

| FP16 | 予測 | 0.40 | 0.39 | 0.44 | 8 | 640 | ||||

| FP16 | COCOval | 0.43 | 0.52 | 0.36 | 0.49 | 0.30 | 1 | 640 | |

| INT8 | 予測 | 0.34 | 0.33 | 0.37 | 8 | 640 | ||||

| INT8 | COCOval | 0.36 | 0.46 | 0.32 | 0.43 | 0.27 | 1 | 640 |

分類ドキュメントで、1000の事前学習済みクラスを含むImageNetでトレーニングされたこれらのモデルの使用例をご覧ください。

注

推論時間は以下に対して表示されます mean, min (最速)と max 事前学習済み重みを使用した各テストにおいて (最も遅い) yolov8n-cls.engine

| 適合率 | Evalテスト | 平均 (ミリ秒) | 最小 | 最大 (ms) | top-1 | top-5 | batch | サイズ (ピクセル) |

|---|---|---|---|---|---|---|---|

| FP32 | 予測 | 0.26 | 0.25 | 0.28 | 8 | 640 | ||

| FP32 | ImageNetval | 0.26 | 0.35 | 0.61 | 1 | 640 | |

| FP16 | 予測 | 0.18 | 0.17 | 0.19 | 8 | 640 | ||

| FP16 | ImageNetval | 0.18 | 0.35 | 0.61 | 1 | 640 | |

| INT8 | 予測 | 0.16 | 0.15 | 0.57 | 8 | 640 | ||

| INT8 | ImageNetval | 0.15 | 0.32 | 0.59 | 1 | 640 |

COCOで学習されたこれらのモデルの使用例については、ポーズ推定ドキュメントを参照してください。COCOには「人物」という1つの事前学習済みクラスが含まれています。

注

推論時間は以下に対して表示されます mean, min (最速)と max 事前学習済み重みを使用した各テストにおいて (最も遅い) yolov8n-pose.engine

| 適合率 | Evalテスト | 平均 (ミリ秒) | 最小 | 最大 (ms) | mAPval 50(B) | mAPval 50-95(B) | mAPval 50(P) | mAPval 50-95(P) | batch | サイズ (ピクセル) |

|---|---|---|---|---|---|---|---|---|---|

| FP32 | 予測 | 0.54 | 0.53 | 0.58 | 8 | 640 | ||||

| FP32 | COCOval | 0.55 | 0.91 | 0.69 | 0.80 | 0.51 | 1 | 640 | |

| FP16 | 予測 | 0.37 | 0.35 | 0.41 | 8 | 640 | ||||

| FP16 | COCOval | 0.36 | 0.91 | 0.69 | 0.80 | 0.51 | 1 | 640 | |

| INT8 | 予測 | 0.29 | 0.28 | 0.33 | 8 | 640 | ||||

| INT8 | COCOval | 0.30 | 0.90 | 0.68 | 0.78 | 0.47 | 1 | 640 |

指向性検出ドキュメントで、15の事前学習済みクラスを含むDOTAv1でトレーニングされたこれらのモデルの使用例をご覧ください。

注

推論時間は以下に対して表示されます mean, min (最速)と max 事前学習済み重みを使用した各テストにおいて (最も遅い) yolov8n-obb.engine

| 適合率 | Evalテスト | 平均 (ミリ秒) | 最小 | 最大 (ms) | mAPval 50(B) | mAPval 50-95(B) | batch | サイズ (ピクセル) |

|---|---|---|---|---|---|---|---|

| FP32 | 予測 | 0.52 | 0.51 | 0.59 | 8 | 640 | ||

| FP32 | DOTAv1val | 0.76 | 0.50 | 0.36 | 1 | 640 | |

| FP16 | 予測 | 0.34 | 0.33 | 0.42 | 8 | 640 | ||

| FP16 | DOTAv1val | 0.59 | 0.50 | 0.36 | 1 | 640 | |

| INT8 | 予測 | 0.29 | 0.28 | 0.33 | 8 | 640 | ||

| INT8 | DOTAv1val | 0.32 | 0.45 | 0.32 | 1 | 640 |

コンシューマーGPU

検出性能 (COCO)

Windows 10.0.19045でテスト済み。 python 3.10.9, ultralytics==8.2.4, tensorrt==10.0.0b6

注

推論時間は以下に対して表示されます mean, min (最速)と max 事前学習済み重みを使用した各テストにおいて (最も遅い) yolov8n.engine

| 適合率 | Evalテスト | 平均 (ミリ秒) | 最小 | 最大 (ms) | mAPval 50(B) | mAPval 50-95(B) | batch | サイズ (ピクセル) |

|---|---|---|---|---|---|---|---|

| FP32 | 予測 | 1.06 | 0.75 | 1.88 | 8 | 640 | ||

| FP32 | COCOval | 1.37 | 0.52 | 0.37 | 1 | 640 | |

| FP16 | 予測 | 0.62 | 0.75 | 1.13 | 8 | 640 | ||

| FP16 | COCOval | 0.85 | 0.52 | 0.37 | 1 | 640 | |

| INT8 | 予測 | 0.52 | 0.38 | 1.00 | 8 | 640 | ||

| INT8 | COCOval | 0.74 | 0.47 | 0.33 | 1 | 640 |

Windows 10.0.22631でテスト済み。 python 3.11.9, ultralytics==8.2.4, tensorrt==10.0.1

注

推論時間は以下に対して表示されます mean, min (最速)と max 事前学習済み重みを使用した各テストにおいて (最も遅い) yolov8n.engine

| 適合率 | Evalテスト | 平均 (ミリ秒) | 最小 | 最大 (ms) | mAPval 50(B) | mAPval 50-95(B) | batch | サイズ (ピクセル) |

|---|---|---|---|---|---|---|---|

| FP32 | 予測 | 1.76 | 1.69 | 1.87 | 8 | 640 | ||

| FP32 | COCOval | 1.94 | 0.52 | 0.37 | 1 | 640 | |

| FP16 | 予測 | 0.86 | 0.75 | 1.00 | 8 | 640 | ||

| FP16 | COCOval | 1.43 | 0.52 | 0.37 | 1 | 640 | |

| INT8 | 予測 | 0.80 | 0.75 | 1.00 | 8 | 640 | ||

| INT8 | COCOval | 1.35 | 0.47 | 0.33 | 1 | 640 |

Pop!_OS 22.04 LTSでテスト済み。 python 3.10.12, ultralytics==8.2.4, tensorrt==8.6.1.post1

注

推論時間は以下に対して表示されます mean, min (最速)と max 事前学習済み重みを使用した各テストにおいて (最も遅い) yolov8n.engine

| 適合率 | Evalテスト | 平均 (ミリ秒) | 最小 | 最大 (ms) | mAPval 50(B) | mAPval 50-95(B) | batch | サイズ (ピクセル) |

|---|---|---|---|---|---|---|---|

| FP32 | 予測 | 2.84 | 2.84 | 2.85 | 8 | 640 | ||

| FP32 | COCOval | 2.94 | 0.52 | 0.37 | 1 | 640 | |

| FP16 | 予測 | 1.09 | 1.09 | 1.10 | 8 | 640 | ||

| FP16 | COCOval | 1.20 | 0.52 | 0.37 | 1 | 640 | |

| INT8 | 予測 | 0.75 | 0.74 | 0.75 | 8 | 640 | ||

| INT8 | COCOval | 0.76 | 0.47 | 0.33 | 1 | 640 |

組み込みデバイス

検出性能 (COCO)

JetPack 6.0(L4T 36.3)Ubuntu 22.04.4 LTSでテスト済み。 python 3.10.12, ultralytics==8.2.16, tensorrt==10.0.1

注

推論時間は以下に対して表示されます mean, min (最速)と max 事前学習済み重みを使用した各テストにおいて (最も遅い) yolov8n.engine

| 適合率 | Evalテスト | 平均 (ミリ秒) | 最小 | 最大 (ms) | mAPval 50(B) | mAPval 50-95(B) | batch | サイズ (ピクセル) |

|---|---|---|---|---|---|---|---|

| FP32 | 予測 | 6.11 | 6.10 | 6.29 | 8 | 640 | ||

| FP32 | COCOval | 6.17 | 0.52 | 0.37 | 1 | 640 | |

| FP16 | 予測 | 3.18 | 3.18 | 3.20 | 8 | 640 | ||

| FP16 | COCOval | 3.19 | 0.52 | 0.37 | 1 | 640 | |

| INT8 | 予測 | 2.30 | 2.29 | 2.35 | 8 | 640 | ||

| INT8 | COCOval | 2.32 | 0.46 | 0.32 | 1 | 640 |

情報

セットアップと構成の詳細については、Ultralytics YOLOを使用したNVIDIA Jetsonのクイックスタートガイドをご覧ください。

情報

セットアップと構成の詳細については、NVIDIA DGX SparkとUltralytics YOLOに関するクイックスタートガイドを参照してください。

評価方法

これらのモデルのエクスポート方法とテスト方法については、以下のセクションを展開して情報を参照してください。

エクスポート設定

エクスポート設定引数の詳細については、エクスポートモードを参照してください。

from ultralytics import YOLO

model = YOLO("yolo26n.pt")

# TensorRT FP32

out = model.export(format="engine", imgsz=640, dynamic=True, verbose=False, batch=8, workspace=2)

# TensorRT FP16

out = model.export(format="engine", imgsz=640, dynamic=True, verbose=False, batch=8, workspace=2, half=True)

# TensorRT INT8 with calibration `data` (i.e. COCO, ImageNet, or DOTAv1 for appropriate model task)

out = model.export(

format="engine", imgsz=640, dynamic=True, verbose=False, batch=8, workspace=2, int8=True, data="coco8.yaml"

)

Predict ループ

詳細については、predictモードを参照してください。

import cv2

from ultralytics import YOLO

model = YOLO("yolo26n.engine")

img = cv2.imread("path/to/image.jpg")

for _ in range(100):

result = model.predict(

[img] * 8, # batch=8 of the same image

verbose=False,

device="cuda",

)

検証構成

参照 val mode 検証構成引数についてさらに詳しく。

from ultralytics import YOLO

model = YOLO("yolo26n.engine")

results = model.val(

data="data.yaml", # COCO, ImageNet, or DOTAv1 for appropriate model task

batch=1,

imgsz=640,

verbose=False,

device="cuda",

)

エクスポートされたYOLO26 TensorRTモデルのデプロイ

Ultralytics YOLO26モデルをTensorRT形式にエクスポートが完了したら、デプロイの準備が整いました。さまざまな環境でTensorRTモデルをデプロイするための詳細な手順については、以下のリソースをご覧ください。

Tritonサーバーを使用したUltralyticsのデプロイ: NVIDIAのTriton Inference(旧TensorRT Inference)サーバーをUltralytics YOLOモデルで使用する方法に関するガイドです。

NVIDIA TensorRTを使用したディープニューラルネットワークのデプロイ: この記事では、NVIDIA TensorRTを使用して、GPUベースのデプロイメントプラットフォームにディープニューラルネットワークを効率的にデプロイする方法について解説します。

NVIDIAベースPC向けのエンドツーエンドAI:NVIDIA TensorRTデプロイメント: このブログ記事では、NVIDIAベースのPCでAIモデルを最適化およびデプロイするためのNVIDIA TensorRTの使用について解説します。

NVIDIA TensorRTのGitHubリポジトリ:: これは、NVIDIA TensorRTのソースコードとドキュメントを含む公式GitHubリポジトリです。

概要

このガイドでは、Ultralytics YOLO26モデルをNVIDIAのTensorRTモデル形式に変換することに焦点を当てました。この変換ステップは、YOLO26モデルの効率と速度を向上させる上で不可欠であり、さまざまなデプロイ環境により効果的で適したものにします。

使用方法の詳細については、TensorRTの公式ドキュメントをご覧ください。

Ultralytics YOLO26の追加の統合についてご興味がある場合は、当社の統合ガイドページに、豊富な情報リソースと洞察が掲載されています。

よくある質問

YOLO26モデルをTensorRT形式に変換するにはどうすればよいですか?

最適化されたNVIDIA GPU推論のためにUltralytics YOLO26モデルをTensorRT形式に変換するには、以下の手順に従ってください。

必要なパッケージをインストールする:

pip install ultralyticsYOLO26モデルをエクスポート:

from ultralytics import YOLO model = YOLO("yolo26n.pt") model.export(format="engine") # creates 'yolo26n.engine' # Run inference model = YOLO("yolo26n.engine") results = model("https://ultralytics.com/images/bus.jpg")

詳細については、YOLO26インストールガイドとエクスポートドキュメントをご覧ください。

YOLO26モデルにTensorRTを使用する利点は何ですか?

TensorRTを使用してYOLO26モデルを最適化することには、いくつかの利点があります。

- より高速な推論速度: TensorRTは、モデルレイヤーを最適化し、精度キャリブレーション(INT8およびFP16)を使用して、精度を大幅に犠牲にすることなく推論を高速化します。

- メモリ効率:TensorRTはテンソルメモリを動的に管理し、オーバーヘッドを削減し、GPUメモリの使用率を向上させます。

- レイヤー融合: 複数のレイヤーを単一の操作に結合し、計算の複雑さを軽減します。

- カーネル自動チューニング:各モデルレイヤーに対して最適化されたGPUカーネルを自動的に選択し、最大のパフォーマンスを保証します。

詳細については、NVIDIA の公式 TensorRT ドキュメント と、詳細な TensorRT の概要 をご覧ください。

YOLO26モデルにTensorRTと組み合わせてINT8量子化を使用できますか?

はい、INT8量子化を使用してTensorRTでYOLO26モデルをエクスポートできます。このプロセスには、トレーニング後量子化(PTQ)とキャリブレーションが含まれます。

INT8でエクスポート:

from ultralytics import YOLO model = YOLO("yolo26n.pt") model.export(format="engine", batch=8, workspace=4, int8=True, data="coco.yaml")推論の実行:

from ultralytics import YOLO model = YOLO("yolo26n.engine", task="detect") result = model.predict("https://ultralytics.com/images/bus.jpg")

詳細については、INT8量子化によるTensorRTのエクスポートに関するセクションを参照してください。

NVIDIA Triton Inference ServerにYOLO26 TensorRTモデルをデプロイするにはどうすればよいですか?

NVIDIA Triton Inference ServerにYOLO26 TensorRTモデルをデプロイするには、以下のリソースを使用できます。

- Triton ServerでUltralytics YOLO26をデプロイ:Triton Inference Serverのセットアップと使用に関するステップバイステップガイド。

- NVIDIA Triton Inference Serverドキュメント: 詳細なデプロイメントオプションと構成に関するNVIDIAの公式ドキュメントです。

これらのガイドは、さまざまなデプロイ環境でYOLO26モデルを効率的に統合するのに役立ちます。

TensorRTにエクスポートされたYOLO26モデルで観測されるパフォーマンスの改善点は何ですか?

TensorRTによるパフォーマンスの向上は、使用するハードウェアによって異なります。一般的なベンチマークを以下に示します。

NVIDIA A100:

- FP32 推論: ~0.52 ms / 画像

- FP16 推論: ~0.34 ms / 画像

- INT8 推論: ~0.28 ms / 画像

- INT8精度ではmAPがわずかに低下しますが、速度は大幅に向上します。

コンシューマーGPU(例:RTX 3080):

- FP32 推論: ~1.06 ms / 画像

- FP16 推論: ~0.62 ms / 画像

- INT8 推論: ~0.52 ms / 画像

異なるハードウェア構成での詳細なパフォーマンスベンチマークは、パフォーマンスセクションをご覧ください。

TensorRTのパフォーマンスに関するより包括的な洞察については、Ultralyticsドキュメントおよび当社のパフォーマンス分析レポートを参照してください。