Ultralytics YOLO26 Modes

Introduction

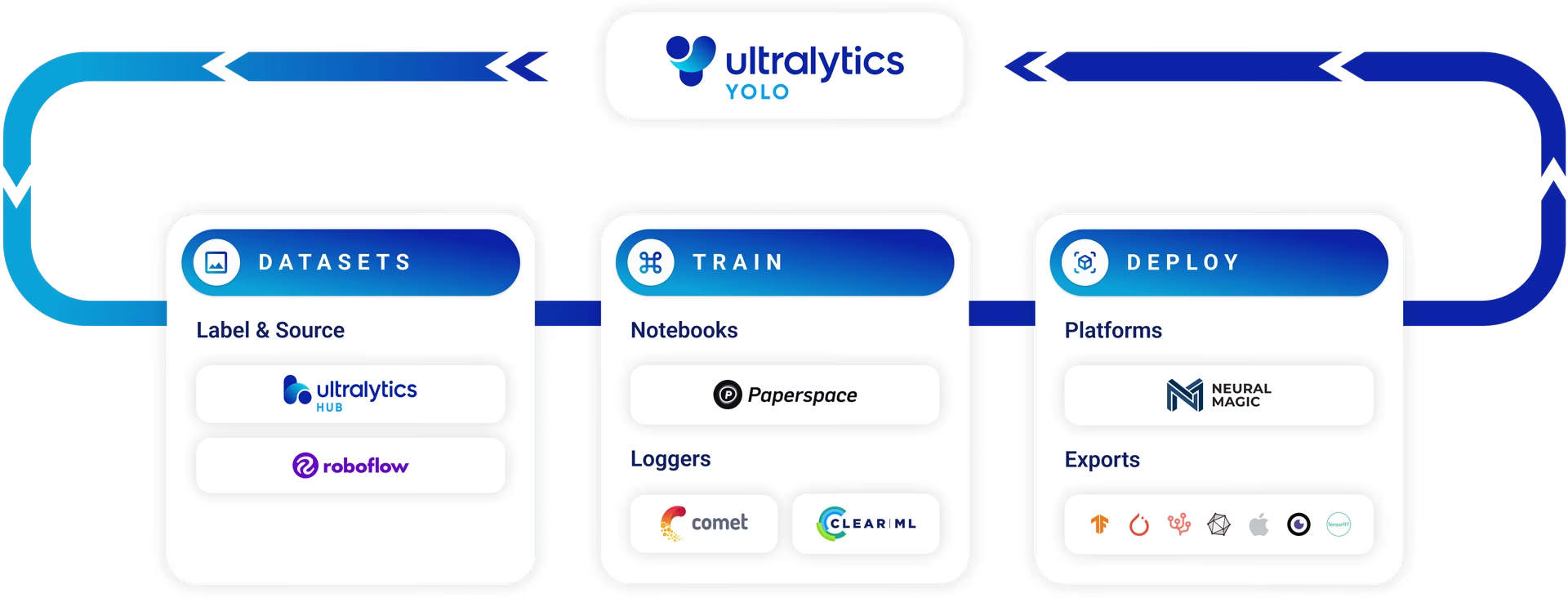

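Ultralytics YOLO26 is more than an object detection model. It is a versatile framework designed to cover the entire lifecycle of a machine learning model, from data ingestion and model training to validation, deployment, and real-world tracking. Each mode serves a specific purpose and is built to provide the flexibility and efficiency that different tasks and use cases require.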

Reference: Ultralytics Modes Tutorial: Train, Validate, Predict, Export, and Benchmark.

Modes at a Glance

Understanding the modes that Ultralytics YOLO26 supports is key to getting the most out of your models:

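- Train mode: fine-tune your model on custom or preloaded datasets.

- Val mode: a post-training check that validates model performance.

- Predict mode: apply your model's predictive power to real-world data.

- Export mode: make your model deployment-ready in a variety of formats.

- Track mode: extend your object detection model into real-time tracking applications.

- Benchmark mode: analyze the speed and accuracy of your model across deployment environments.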

This guide gives an overview of each mode, along with practical insights, to help you make full use of YOLO26.

Train

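Train mode is used to train a YOLO26 model on a custom dataset. In this mode, the model is trained with the specified dataset and hyperparameters. Training optimizes the model's parameters so that it can accurately predict the classes and locations of objects in an image. It is essential for building a model that recognizes the specific objects relevant to your application.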

Val

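Val mode is used to validate a YOLO26 model after it has been trained. In this mode, the model is evaluated on a validation set to measure its accuracy and generalization performance. Validation helps identify potential problems such as overfitting and reports metrics such as mean Average Precision (mAP) to quantify model performance. This mode is important for hyperparameter tuning and for improving overall model performance.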
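To make the mAP metric mentioned above more concrete, here is a minimal sketch of how average precision (AP) for a single class can be computed from ranked detections. It uses simple all-point interpolation and is an illustration only, not the Ultralytics implementation; the function name `average_precision` and its arguments are hypothetical. mAP is then the mean of AP over classes, and mAP50-95 additionally averages over IoU thresholds from 0.50 to 0.95.

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """Area under the precision-recall curve for one class (simplified sketch)."""
    if num_ground_truth == 0 or not scores:
        return 0.0

    # Rank detections from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)

    tp = fp = 0
    precisions, recalls = [], []
    for i in order:
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)

    # Replace each precision with the best precision at any higher recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])

    # Sum precision over each step in recall.
    ap, previous_recall = 0.0, 0.0
    for precision, recall in zip(precisions, recalls):
        ap += (recall - previous_recall) * precision
        previous_recall = recall
    return ap


# Three detections, two ground-truth objects; the second detection is a false positive.
print(round(average_precision([0.9, 0.8, 0.7], [True, False, True], 2), 4))  # 0.8333
```

Whether a detection counts as a true positive depends on the IoU threshold chosen for matching, which is why the same model reports different values for mAP50 and mAP50-95.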

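Predict

Predict mode is used to run a trained YOLO26 model on new images or videos. In this mode, the model is loaded from a checkpoint file, and you supply the images or videos to run inference on. The model identifies and localizes objects in the input media, which makes its output ready to use in real-world applications. Predict mode is the gateway to applying a trained model to practical problems.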

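Export

Export mode is used to convert a YOLO26 model into a format suitable for deployment on different platforms and devices. It converts the PyTorch model into an optimized format such as ONNX, TensorRT, or CoreML so that it can be deployed in production. Exporting is essential for integrating a model with other software applications or hardware devices, and it often brings significant performance improvements.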

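Track

Track mode extends YOLO26's object detection capabilities to track objects across video frames or live streams. It is particularly useful for applications that need persistent object identification, such as surveillance systems or self-driving cars. Track mode implements sophisticated algorithms such as ByteTrack to maintain object IDs across frames, even when an object temporarily disappears from view.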
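The idea of keeping an object's ID stable across frames can be sketched with a much simpler scheme than ByteTrack: greedily match each new detection to the existing track it overlaps most, and keep unmatched tracks alive for a few frames. The class `SimpleIouTracker` and its parameters `iou_threshold` and `max_missed` are hypothetical names used only for this illustration; this is not the algorithm Ultralytics ships.

```python
from itertools import count


def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class SimpleIouTracker:
    """Simplified greedy IoU tracker (illustrative sketch, not ByteTrack)."""

    def __init__(self, iou_threshold=0.3, max_missed=5):
        self.iou_threshold = iou_threshold
        self.max_missed = max_missed  # frames a track may go unseen before removal
        self.tracks = {}  # track_id -> {"box": box, "missed": frames unseen}
        self._ids = count(1)

    def update(self, boxes):
        """Assign a track ID to each box of the current frame; return {id: box}."""
        unmatched = set(self.tracks)
        assigned = {}
        for box in boxes:
            best_id, best_iou = None, self.iou_threshold
            for track_id in unmatched:
                overlap = iou(self.tracks[track_id]["box"], box)
                if overlap > best_iou:
                    best_id, best_iou = track_id, overlap
            if best_id is None:
                best_id = next(self._ids)  # no match: start a new track
            else:
                unmatched.remove(best_id)  # each track matches at most once
            self.tracks[best_id] = {"box": box, "missed": 0}
            assigned[best_id] = box

        # Tracks without a detection this frame survive until max_missed is exceeded.
        for track_id in unmatched:
            self.tracks[track_id]["missed"] += 1
            if self.tracks[track_id]["missed"] > self.max_missed:
                del self.tracks[track_id]
        return assigned


tracker = SimpleIouTracker()
tracker.update([(0, 0, 10, 10), (50, 50, 60, 60)])  # two new tracks: IDs 1 and 2
tracker.update([(1, 1, 11, 11)])  # object 2 is not detected in this frame
print(tracker.update([(2, 2, 12, 12), (51, 51, 61, 61)]))  # object 2 keeps ID 2
```

Production trackers add motion prediction and make use of low-confidence detections, which this sketch leaves out.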

Benchmark

Benchmark mode profiles the speed and accuracy of YOLO26's export formats. It reports model size, accuracy (mAP50-95 for detection tasks or accuracy_top5 for classification), and inference time across formats such as ONNX, OpenVINO, and TensorRT. Benchmarking helps you choose the best export format for the speed and accuracy requirements of your deployment environment.
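Inference time, one of the metrics listed above, is usually measured by discarding a few warm-up runs and averaging the rest. The sketch below shows that pattern with the standard library only; `measure_latency_ms` and the `infer` callable are hypothetical stand-ins for whatever runs your exported model, and this is not how the Ultralytics benchmark is implemented.

```python
import time
from statistics import mean


def measure_latency_ms(infer, sample, warmup=3, runs=10):
    """Return the mean wall-clock time of infer(sample) in milliseconds."""
    # Warm-up calls are not timed, so one-time setup costs are excluded.
    for _ in range(warmup):
        infer(sample)

    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    return mean(timings)


# Stand-in workload; replace the lambda with a call into your own model.
latency = measure_latency_ms(lambda data: sum(data), list(range(100_000)))
print(f"mean latency: {latency:.3f} ms")
```

Comparing formats fairly means using the same input, image size, and device for every run.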

FAQ

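How do I train a custom object detection model with Ultralytics YOLO26?

To train a custom object detection model with Ultralytics YOLO26, use train mode. You need a dataset in YOLO format that contains the images and their corresponding annotation files. Start training with the following Python code or CLI command:

Example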

from ultralytics import YOLO

# Load a pretrained YOLO model (you can choose n, s, m, l, or x versions)

model = YOLO("yolo26n.pt")

# Start training on your custom dataset

model.train(data="path/to/dataset.yaml", epochs=100, imgsz=640)

# Train a YOLO model from the command line

yolo detect train data=path/to/dataset.yaml model=yolo26n.pt epochs=100 imgsz=640

For more details, see the Ultralytics Train Guide.
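The YOLO-format annotations mentioned in this answer store one object per line as `class x_center y_center width height`, with coordinates normalized to the range 0 to 1 by the image size. If your labels are pixel-space corner boxes, a conversion could look like the sketch below; the helper name `to_yolo_label` is hypothetical and not part of the Ultralytics package.

```python
def to_yolo_label(class_id, box_xyxy, image_width, image_height):
    """Convert a pixel-space (x1, y1, x2, y2) box to one YOLO detection label line."""
    x1, y1, x2, y2 = box_xyxy
    x_center = (x1 + x2) / 2 / image_width
    y_center = (y1 + y2) / 2 / image_height
    width = (x2 - x1) / image_width
    height = (y2 - y1) / image_height
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# A 200x200 px box of class 0 in a 640x480 image.
print(to_yolo_label(0, (100, 200, 300, 400), 640, 480))
# 0 0.312500 0.625000 0.312500 0.416667
```

Each image typically gets one text file of such lines, with the same base name as the image.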

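What metrics does Ultralytics YOLO26 use to validate model performance?

Ultralytics YOLO26 uses several metrics to evaluate model performance during validation. These include:

- mAP (mean Average Precision): evaluates the accuracy of object detection.

- IoU (Intersection over Union): measures the overlap between the predicted and ground-truth bounding boxes.

- Precision and recall: precision is the ratio of true positive detections to all predicted positives, and recall is the ratio of true positive detections to all actual positives.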
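The IoU, precision, and recall definitions above translate directly into a few lines of code. This is a minimal sketch for illustration, assuming boxes given as `(x1, y1, x2, y2)` corners; the function names are hypothetical and this is not the code Ultralytics uses internally.

```python
def box_iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def precision_recall(true_positives, false_positives, false_negatives):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall


# Two 2x2 boxes overlapping in a 1x1 square: IoU = 1 / (4 + 4 - 1).
print(round(box_iou((0, 0, 2, 2), (1, 1, 3, 3)), 4))  # 0.1429

# 8 correct detections, 2 spurious detections, 4 missed objects.
print(precision_recall(8, 2, 4))
```

In the second call, precision is 8/10 and recall is 8/12.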

You can start validation with the following Python code or CLI command:

Example

from ultralytics import YOLO

# Load a pretrained or custom YOLO model

model = YOLO("yolo26n.pt")

# Run validation on your dataset

model.val(data="path/to/validation.yaml")

# Validate a YOLO model from the command line

yolo val model=yolo26n.pt data=path/to/validation.yaml

For more details, see the Validation Guide.

How can I export my YOLO26 model for deployment?

Ultralytics YOLO26 provides export functionality that converts a trained model into deployment formats such as ONNX, TensorRT, and CoreML. Use the following example to export your model:

Example

from ultralytics import YOLO

# Load your trained YOLO model

model = YOLO("yolo26n.pt")

# Export the model to ONNX format (you can specify other formats as needed)

model.export(format="onnx")

# Export a YOLO model to ONNX format from the command line

yolo export model=yolo26n.pt format=onnx

Detailed steps for each export format are available in the Export Guide.

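What is the purpose of benchmark mode in Ultralytics YOLO26?

Benchmark mode in Ultralytics YOLO26 analyzes the speed and accuracy of export formats such as ONNX, TensorRT, and OpenVINO. It reports metrics such as model size, mAP50-95 for object detection, and inference time across different hardware setups, which helps you choose the format that best fits your deployment requirements.

Example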

from ultralytics.utils.benchmarks import benchmark

# Run benchmark on GPU (device 0)

# You can adjust parameters like model, dataset, image size, and precision as needed

benchmark(model="yolo26n.pt", data="coco8.yaml", imgsz=640, half=False, device=0)

# Benchmark a YOLO model from the command line

# Adjust parameters as needed for your specific use case

yolo benchmark model=yolo26n.pt data='coco8.yaml' imgsz=640 half=False device=0

For more details, see the Benchmark Guide.

How can I perform real-time object tracking with Ultralytics YOLO26?

You can perform real-time object tracking with the track mode in Ultralytics YOLO26. This mode extends object detection to track objects across video frames or live feeds. Use the following example to enable tracking:

Example

from ultralytics import YOLO

# Load a pretrained YOLO model

model = YOLO("yolo26n.pt")

# Start tracking objects in a video

# You can also use live video streams or webcam input

model.track(source="path/to/video.mp4")

# Perform object tracking on a video from the command line

# You can specify different sources like webcam (0) or RTSP streams

yolo track model=yolo26n.pt source=path/to/video.mp4

For detailed instructions, see the Track Guide.