Hand Keypoints Dataset

Introduction

The Hand Keypoints dataset contains 26,768 images of hands annotated with keypoints, which makes it suitable for training models such as Ultralytics YOLO for pose estimation tasks. The annotations were generated with the Google MediaPipe library, ensuring high accuracy and consistency, and the dataset is compatible with the Ultralytics YOLO26 formats.

Watch: Hand Keypoints Estimation with Ultralytics YOLO26 | Human Hand Pose Estimation Tutorial

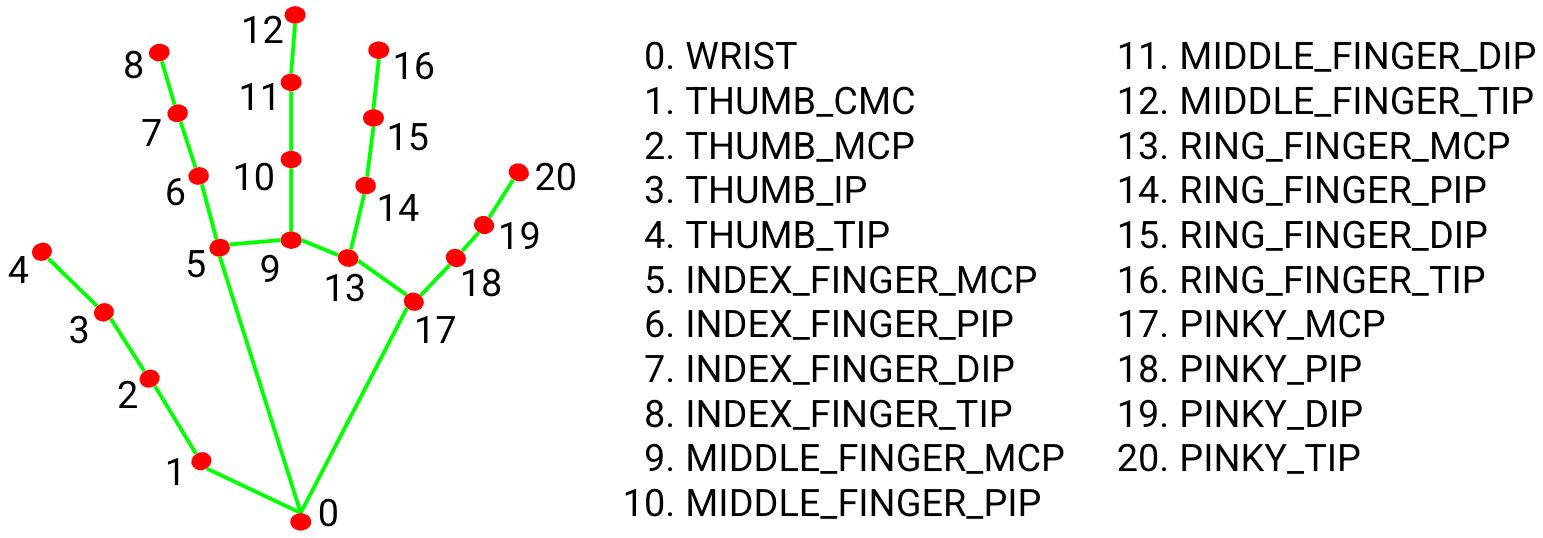

Hand Landmarks

Keypoints

The dataset annotates each hand with the following keypoints:

- Wrist

- Thumb (4 points)

- Index finger (4 points)

- Middle finger (4 points)

- Ring finger (4 points)

- Little finger, or pinky (4 points)

In total, each hand has 21 keypoints (1 wrist point + 5 fingers × 4 points).
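The 21 keypoints are stored in a fixed order, given by the `kpt_names` list in the dataset YAML. The following minimal sketch, assuming that order, builds a name-to-index map and groups the indices by finger. The names `HAND_KPT_NAMES`, `KPT_INDEX`, and `FINGER_INDICES` are illustrative helpers, not part of the Ultralytics API.

```python
# Keypoint order copied from the kpt_names list in hand-keypoints.yaml.
HAND_KPT_NAMES = [
    "wrist",
    "thumb_cmc", "thumb_mcp", "thumb_ip", "thumb_tip",
    "index_mcp", "index_pip", "index_dip", "index_tip",
    "middle_mcp", "middle_pip", "middle_dip", "middle_tip",
    "ring_mcp", "ring_pip", "ring_dip", "ring_tip",
    "pinky_mcp", "pinky_pip", "pinky_dip", "pinky_tip",
]

# Hypothetical helper: keypoint name -> position in the 21-point array.
KPT_INDEX = {name: i for i, name in enumerate(HAND_KPT_NAMES)}

# Hypothetical helper: indices grouped per finger (5 fingers x 4 points, plus the wrist at 0).
FINGER_INDICES = {
    finger: [KPT_INDEX[n] for n in HAND_KPT_NAMES if n.startswith(finger + "_")]
    for finger in ("thumb", "index", "middle", "ring", "pinky")
}

if __name__ == "__main__":
    print(len(HAND_KPT_NAMES))      # 21
    print(FINGER_INDICES["index"])  # [5, 6, 7, 8]
```

Deriving the groups from the names, rather than hard-coding index ranges, keeps the map in sync if the list is edited.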

Key Features

- Large Dataset: 26,768 images with hand keypoint annotations.

- YOLO26 Compatibility: Labels are provided in the YOLO keypoint format and are ready to use with YOLO26 models.

- 21 Keypoints: A detailed hand pose representation covering the wrist and four points per finger.
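In the Ultralytics YOLO pose label format, each line of a label file describes one object: the class index, the normalized bounding box (`x_center y_center width height`), and then the keypoints as `x y visibility` triples, matching `kpt_shape: [21, 3]` in the dataset YAML. The sketch below parses one such line with the standard library only. `parse_pose_label` and `HandLabel` are hypothetical helpers, and the sketch assumes normalized coordinates and exactly 21 × 3 keypoint values per line.

```python
from dataclasses import dataclass

NUM_KPTS, KPT_DIMS = 21, 3  # from kpt_shape: [21, 3]


@dataclass
class HandLabel:
    cls: int
    box: tuple  # (x_center, y_center, width, height), normalized
    keypoints: list  # one (x, y, visibility) tuple per keypoint


def parse_pose_label(line: str) -> HandLabel:
    """Parse one line of a YOLO pose label file (hypothetical helper)."""
    values = line.split()
    expected = 1 + 4 + NUM_KPTS * KPT_DIMS  # class + box + keypoints = 68 values
    if len(values) != expected:
        raise ValueError(f"expected {expected} values, got {len(values)}")
    cls = int(values[0])
    box = tuple(float(v) for v in values[1:5])
    flat = [float(v) for v in values[5:]]
    # Regroup the flat list into (x, y, visibility) triples.
    keypoints = [tuple(flat[i:i + KPT_DIMS]) for i in range(0, len(flat), KPT_DIMS)]
    return HandLabel(cls, box, keypoints)


if __name__ == "__main__":
    # Synthetic line: one hand, every keypoint at the box center with visibility flag 1.
    line = "0 0.5 0.5 0.2 0.3 " + " ".join(["0.5 0.5 1"] * NUM_KPTS)
    label = parse_pose_label(line)
    print(label.cls, len(label.keypoints))  # 0 21
```

Checking the value count up front catches labels written with a different `kpt_shape` before they silently produce misaligned keypoints.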

Dataset Structure

The Hand Keypoints dataset is split into two subsets:

- Train: This subset contains 18,776 images from the Hand Keypoints dataset, annotated for training pose estimation models.

- Val: This subset contains 7,992 images that can be used for validation during model training.
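The two subsets add up to the full dataset: 18,776 + 7,992 = 26,768 images, roughly a 70/30 train/val split. After downloading, one way to confirm these counts locally is to count image files per split. The sketch below assumes the `images/train` and `images/val` layout from the dataset YAML, a set of common image extensions, and a `datasets/hand-keypoints` root relative to the working directory; the root path and the helper names are assumptions.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp", ".webp"}  # assumed image extensions


def count_images(split_dir: Path) -> int:
    """Count image files directly inside one split directory (hypothetical helper)."""
    return sum(1 for p in split_dir.iterdir() if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES)


def split_fractions(counts: dict[str, int]) -> dict[str, float]:
    """Return each split's share of the total number of images."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


if __name__ == "__main__":
    # Counts published on this page.
    published = {"train": 18776, "val": 7992}
    print(sum(published.values()))  # 26768
    print({k: round(v, 3) for k, v in split_fractions(published).items()})  # {'train': 0.701, 'val': 0.299}

    # Local check; the root below is an assumed download location.
    root = Path("datasets/hand-keypoints/images")
    if root.is_dir():
        for split in ("train", "val"):
            print(split, count_images(root / split))
```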

Applications

Hand keypoints can be used for gesture recognition, AR/VR controls, robot control, and hand movement analysis in healthcare. They can also be applied in animation for motion capture and in biometric authentication systems for security. Detailed tracking of finger positions enables precise interaction with virtual objects and touchless control interfaces.
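As a toy illustration of how the 21 keypoints can feed gesture recognition, the sketch below counts extended fingers with a simple distance heuristic: a finger is treated as extended when its tip is farther from the wrist than its middle joint (PIP, or IP for the thumb). This heuristic and the `extended_fingers` helper are illustrative assumptions, not part of the dataset or of Ultralytics; the sketch uses the keypoint order from the dataset YAML and ignores visibility and hand orientation.

```python
import math

WRIST = 0
# (middle joint, tip) indices per finger, following the kpt_names order in hand-keypoints.yaml.
FINGER_JOINTS = {
    "thumb": (3, 4),     # thumb_ip, thumb_tip
    "index": (6, 8),     # index_pip, index_tip
    "middle": (10, 12),  # middle_pip, middle_tip
    "ring": (14, 16),    # ring_pip, ring_tip
    "pinky": (18, 20),   # pinky_pip, pinky_tip
}


def extended_fingers(kpts: list) -> list:
    """Return fingers whose tip is farther from the wrist than their middle joint (toy heuristic)."""
    if len(kpts) != 21:
        raise ValueError("expected 21 (x, y) keypoints")
    wrist = kpts[WRIST]
    return [
        finger
        for finger, (joint, tip) in FINGER_JOINTS.items()
        if math.dist(wrist, kpts[tip]) > math.dist(wrist, kpts[joint])
    ]


if __name__ == "__main__":
    # Synthetic open hand: tips (indices 4, 8, 12, 16, 20) at distance 2, other points at distance 1.
    open_hand = [(0.0, 0.0)] + [(0.0, 2.0 if i % 4 == 0 else 1.0) for i in range(1, 21)]
    print(len(extended_fingers(open_hand)))  # 5
```

A trained classifier on keypoint features would be more robust; the heuristic only shows that finger states fall out of simple geometry on the keypoints.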

Dataset YAML

A YAML (YAML Ain't Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant details. For the Hand Keypoints dataset, the hand-keypoints.yaml file is maintained at https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/hand-keypoints.yaml.

ultralytics/cfg/datasets/hand-keypoints.yaml

```yaml
# Ultralytics 🚀 AGPL-3.0 License - https://ultralytics.com/license

# Hand Keypoints dataset by Ultralytics
# Documentation: https://docs.ultralytics.com/datasets/pose/hand-keypoints/
# Example usage: yolo train data=hand-keypoints.yaml
# parent
# ├── ultralytics
# └── datasets
#     └── hand-keypoints ← downloads here (369 MB)

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]
path: hand-keypoints # dataset root dir
train: images/train # train images (relative to 'path') 18776 images
val: images/val # val images (relative to 'path') 7992 images

# Keypoints
kpt_shape: [21, 3] # number of keypoints, number of dims (2 for x,y or 3 for x,y,visible)
flip_idx: [0, 1, 2, 4, 3, 10, 11, 12, 13, 14, 5, 6, 7, 8, 9, 15, 16, 17, 18, 19, 20]

# Classes
names:
  0: hand

# Keypoint names per class
kpt_names:
  0:
    - wrist
    - thumb_cmc
    - thumb_mcp
    - thumb_ip
    - thumb_tip
    - index_mcp
    - index_pip
    - index_dip
    - index_tip
    - middle_mcp
    - middle_pip
    - middle_dip
    - middle_tip
    - ring_mcp
    - ring_pip
    - ring_dip
    - ring_tip
    - pinky_mcp
    - pinky_pip
    - pinky_dip
    - pinky_tip

# Download script/URL (optional)
download: https://github.com/ultralytics/assets/releases/download/v0.0.0/hand-keypoints.zip
```
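The `flip_idx` list tells Ultralytics how to reorder keypoints when an image is flipped horizontally during augmentation: after the x coordinates are mirrored, keypoint `i` takes the values of keypoint `flip_idx[i]`. The sketch below, assuming normalized coordinates and the values copied from the YAML above, checks that `flip_idx` is a permutation whose length matches `kpt_shape[0]` and then applies a horizontal flip to one hand. It illustrates the idea only and is not the Ultralytics implementation; `check_flip_idx` and `hflip_keypoints` are hypothetical helpers.

```python
KPT_SHAPE = (21, 3)  # from kpt_shape in hand-keypoints.yaml
FLIP_IDX = [0, 1, 2, 4, 3, 10, 11, 12, 13, 14, 5, 6, 7, 8, 9, 15, 16, 17, 18, 19, 20]


def check_flip_idx(flip_idx: list, num_kpts: int) -> None:
    """Raise ValueError unless flip_idx is a permutation of range(num_kpts)."""
    if len(flip_idx) != num_kpts:
        raise ValueError(f"flip_idx has {len(flip_idx)} entries, kpt_shape expects {num_kpts}")
    if sorted(flip_idx) != list(range(num_kpts)):
        raise ValueError("flip_idx must contain each keypoint index exactly once")


def hflip_keypoints(kpts: list, flip_idx: list) -> list:
    """Mirror normalized (x, y, v) keypoints horizontally, then reorder them by flip_idx."""
    mirrored = [(1.0 - x, y, v) for x, y, v in kpts]
    return [mirrored[j] for j in flip_idx]


if __name__ == "__main__":
    check_flip_idx(FLIP_IDX, KPT_SHAPE[0])
    # Synthetic hand: keypoint i at x = i / 32 (exactly representable in binary floating point).
    hand = [(i / 32, 0.5, 1.0) for i in range(KPT_SHAPE[0])]
    flipped = hflip_keypoints(hand, FLIP_IDX)
    print(flipped[3])  # (0.875, 0.5, 1.0): the mirrored values of keypoint 4
    print(hflip_keypoints(flipped, FLIP_IDX) == hand)  # True: flipping twice restores the hand
```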

Usage

To train a YOLO26n-pose model on the Hand Keypoints dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model Training page.

Train Example

Python

```python
from ultralytics import YOLO

# Load a model
model = YOLO("yolo26n-pose.pt")  # load a pretrained model (recommended for training)

# Train the model
results = model.train(data="hand-keypoints.yaml", epochs=100, imgsz=640)
```

CLI

```bash
# Start training from a pretrained *.pt model
yolo pose train data=hand-keypoints.yaml model=yolo26n-pose.pt epochs=100 imgsz=640
```

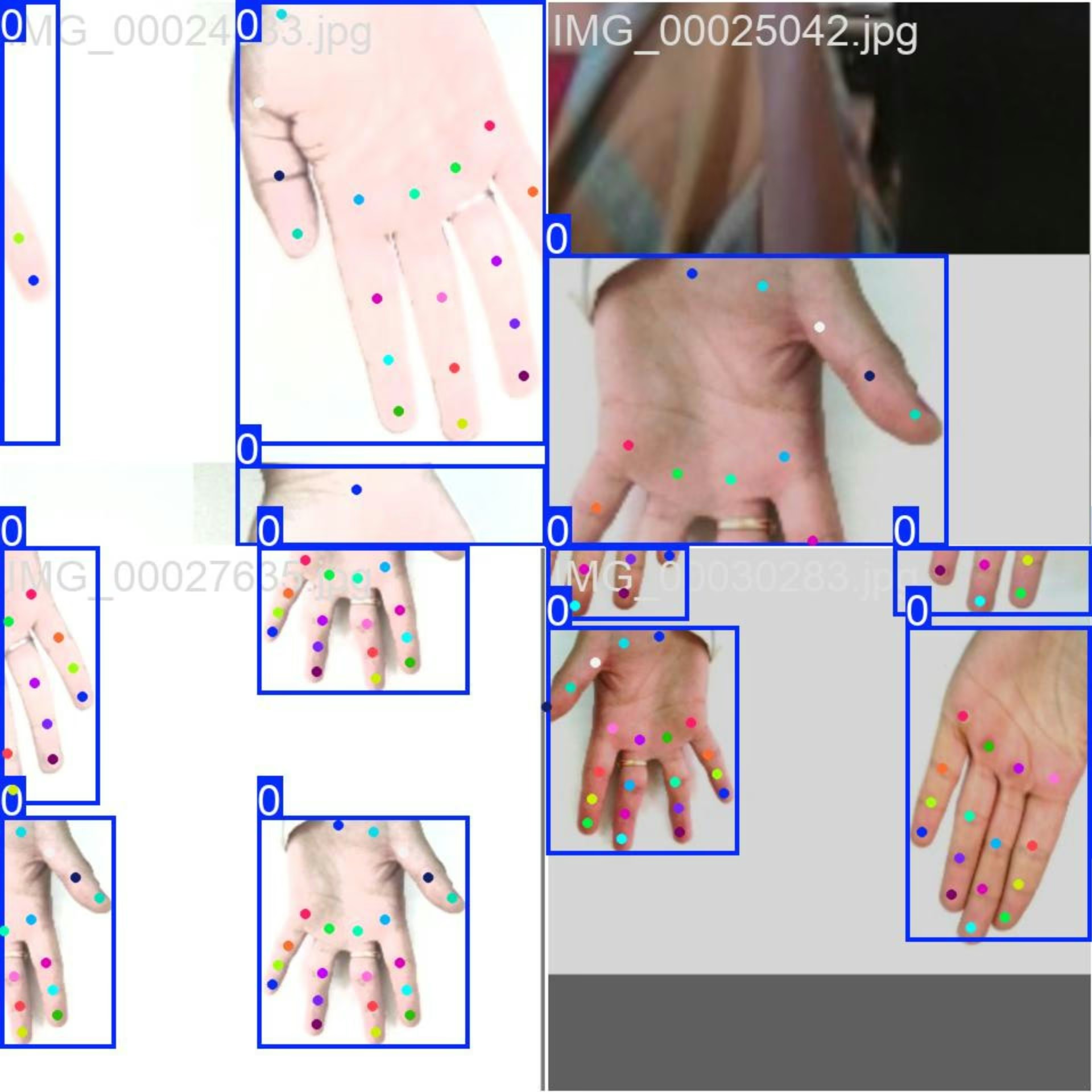

Sample Images and Annotations

The Hand Keypoints dataset contains a diverse set of images of human hands annotated with keypoints. Here are some examples of images from the dataset, along with their corresponding annotations:

- Mosaiced Image: This image shows a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps the model generalize to different object sizes, aspect ratios, and contexts.

This example showcases the variety and complexity of the images in the Hand Keypoints dataset and the benefits of using mosaicing during training.
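To make the mosaic idea concrete, the sketch below covers only the keypoint bookkeeping for a simplified 2 × 2 mosaic: four equally sized tiles are placed in a fixed grid, so a normalized keypoint `(x, y)` from the tile in column `c` and row `r` moves to `((x + c) / 2, (y + r) / 2)` in the combined image. The fixed grid and equal tile sizes are assumptions for illustration; Ultralytics' own mosaic augmentation is more involved (for example, it picks a random mosaic center), and `mosaic_keypoints` is a hypothetical helper.

```python
# Tile positions in the 2 x 2 grid as (column, row):
# top-left, top-right, bottom-left, bottom-right.
TILE_OFFSETS = [(0, 0), (1, 0), (0, 1), (1, 1)]


def mosaic_keypoints(tiles: list) -> list:
    """Map per-tile normalized (x, y, v) keypoints into the 2 x 2 mosaic's normalized coordinates."""
    if len(tiles) != 4:
        raise ValueError("a 2 x 2 mosaic needs exactly 4 tiles")
    out = []
    for (col, row), kpts in zip(TILE_OFFSETS, tiles):
        # Shift by the tile offset, then halve because the mosaic is twice as wide and tall.
        out.append([((x + col) / 2, (y + row) / 2, v) for x, y, v in kpts])
    return out


if __name__ == "__main__":
    center = [(0.5, 0.5, 1.0)]  # one keypoint at the center of each tile
    print(mosaic_keypoints([center] * 4))
    # [[(0.25, 0.25, 1.0)], [(0.75, 0.25, 1.0)], [(0.25, 0.75, 1.0)], [(0.75, 0.75, 1.0)]]
```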

Citations and Acknowledgments

If you use the hand-keypoints dataset in your research or development work, please acknowledge the following sources:

We would like to thank the following sources for providing the images used in this dataset:

The images were collected and used under the respective licenses provided by each platform and are distributed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

We would also like to acknowledge the creator of this dataset, Rion Dsilva, for his great contribution to Vision AI research.

FAQ

How do I train a YOLO26 model on the Hand Keypoints dataset?

To train a YOLO26 model on the Hand Keypoints dataset, you can use either Python or the command-line interface (CLI). Here is an example of training a YOLO26n-pose model for 100 epochs with an image size of 640:

Example

Python

```python
from ultralytics import YOLO

# Load a model
model = YOLO("yolo26n-pose.pt")  # load a pretrained model (recommended for training)

# Train the model
results = model.train(data="hand-keypoints.yaml", epochs=100, imgsz=640)
```

CLI

```bash
# Start training from a pretrained *.pt model
yolo pose train data=hand-keypoints.yaml model=yolo26n-pose.pt epochs=100 imgsz=640
```

For a comprehensive list of available arguments, refer to the model Training page.

What are the key features of the Hand Keypoints dataset?

The Hand Keypoints dataset is designed for advanced pose estimation tasks and includes several key features:

- Large Dataset: Contains 26,768 images with hand keypoint annotations.

- YOLO26 Compatibility: Ready to use with YOLO26 models.

- 21 Keypoints: A detailed hand pose representation, including the wrist and finger joints.

For more details, see the Hand Keypoints Dataset section.

Which applications can benefit from the Hand Keypoints dataset?

The Hand Keypoints dataset can be applied in various fields, including:

- Gesture Recognition: Enhancing human-computer interaction.

- AR/VR Controls: Improving the user experience in augmented and virtual reality.

- Robotic Control: Enabling precise control of robotic hands.

- Healthcare: Analyzing hand movements for medical diagnostics.

- Animation: Capturing motion for realistic animation.

- Biometric Authentication: Enhancing security systems.

For more information, refer to the Applications section.

How is the Hand Keypoints dataset structured?

The Hand Keypoints dataset is split into two subsets:

- Train: Contains 18,776 images for training pose estimation models.

- Val: Contains 7,992 images for validation during model training.

This split keeps separate images for training and for evaluating the model during training. For more details, see the Dataset Structure section.

How do I use the dataset YAML file for training?

The dataset configuration is defined in a YAML file that includes paths, classes, and other relevant information. The hand-keypoints.yaml file can be found at https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/hand-keypoints.yaml.

To use this YAML file for training, pass it to your training script or CLI command, as shown in the training example above. For more details, refer to the Dataset YAML section.