Workouts Monitoring Using Ultralytics YOLO26

Monitoring workouts with pose estimation in Ultralytics YOLO26 improves exercise assessment by accurately tracking key body landmarks and joints in real time. The technology gives instant feedback on exercise form, tracks workout routines, and measures performance metrics, which helps both users and trainers get more out of each training session.
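Underneath this kind of feedback is a simple geometric quantity: the angle at a joint formed by three keypoints, for example shoulder, elbow, and wrist. The following is a minimal sketch of one common way to compute that angle from 2D pixel coordinates with atan2. The function name joint_angle is hypothetical, and Ultralytics' internal computation may differ in detail.

```python
import math


def joint_angle(a, b, c):
    """Return the angle ABC in degrees (0-180) at the middle point b.

    a, b, c are (x, y) pixel coordinates, e.g. shoulder, elbow, wrist.
    Hypothetical helper for illustration, not the Ultralytics API.
    """
    # Direction of each limb segment, measured from the middle joint b
    ang_ba = math.atan2(a[1] - b[1], a[0] - b[0])
    ang_bc = math.atan2(c[1] - b[1], c[0] - b[0])
    angle = abs(math.degrees(ang_bc - ang_ba))
    # The raw difference can exceed 180 degrees; fold it back into 0-180
    return 360.0 - angle if angle > 180.0 else angle


# Straight arm: shoulder, elbow and wrist on one line (about 180 degrees)
print(joint_angle((0, 0), (1, 0), (2, 0)))
# Elbow bent at a right angle (about 90 degrees)
print(joint_angle((0, 0), (1, 0), (1, 1)))
```

Working on the two segment directions rather than on raw distances keeps the result independent of limb length and of how far the person stands from the camera.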

Watch: How to Monitor Workout Exercises Using Ultralytics YOLO | Squats, Leg Extension, Push-Ups and More

Advantages of Workouts Monitoring

- Optimized performance: Tailor workouts based on monitoring data for better results.

- Goal achievement: Track and adjust fitness goals for measurable progress.

- Personalization: Build customized workout plans from individual data for greater effectiveness.

- Health awareness: Detect early patterns that indicate health issues or overtraining.

- Informed decisions: Make data-driven decisions to adjust routines and set realistic goals.

Real World Applications

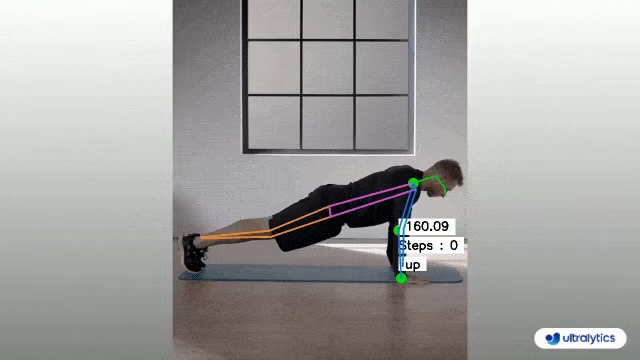

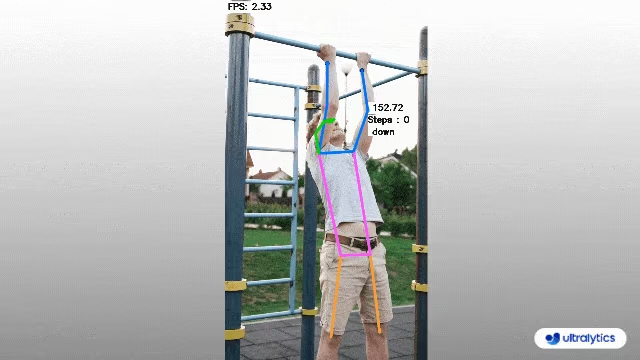

| Мониторинг тренировок | Мониторинг тренировок |

|---|---|

|  |

| Подсчет отжиманий | Подсчет подтягиваний |

Workouts Monitoring Using Ultralytics YOLO

```bash
# Run a workout example
yolo solutions workout show=True

# Pass a source video
yolo solutions workout source="path/to/video.mp4"

# Use keypoints for pushups
yolo solutions workout kpts="[6, 8, 10]"
```

```python
import cv2

from ultralytics import solutions

cap = cv2.VideoCapture("path/to/video.mp4")
assert cap.isOpened(), "Error reading video file"

# Video writer
w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
video_writer = cv2.VideoWriter("workouts_output.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

# Init AIGym
gym = solutions.AIGym(
    show=True,  # display the frame
    kpts=[6, 8, 10],  # keypoints for monitoring specific exercise, by default it's for pushup
    model="yolo26n-pose.pt",  # path to the YOLO26 pose estimation model file
    # line_width=2,  # adjust the line width for bounding boxes and text display
)

# Process video
while cap.isOpened():
    success, im0 = cap.read()
    if not success:
        print("Video frame is empty or processing is complete.")
        break

    results = gym(im0)

    # print(results)  # access the output

    video_writer.write(results.plot_im)  # write the processed frame.

cap.release()
video_writer.release()
cv2.destroyAllWindows()  # destroy all opened windows
```

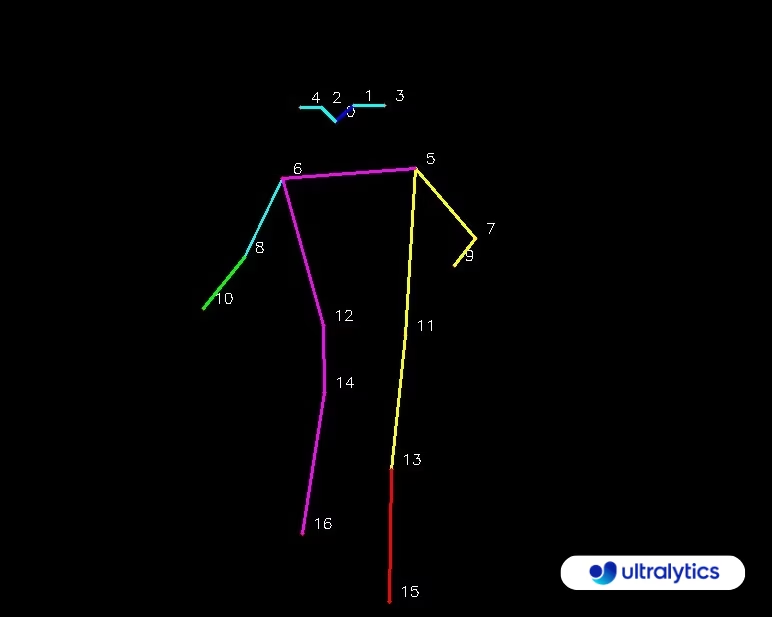

KeyPoints Map
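The indices passed in kpts refer to positions in the pose model's keypoint list. Assuming the default YOLO pose models follow the 17-point COCO-Pose order shown in the keypoint map above, the hypothetical helper below translates a kpts triplet into joint names. This makes it easy to check which joints a given configuration actually measures.

```python
# 17-point COCO-Pose keypoint order, assumed to match the default YOLO pose models
COCO_KEYPOINTS = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


def describe_kpts(kpts):
    """Translate a kpts triplet such as [6, 8, 10] into joint names (hypothetical helper)."""
    if len(kpts) != 3:
        raise ValueError("AIGym monitoring uses exactly three keypoint indices")
    return [COCO_KEYPOINTS[i] for i in kpts]


print(describe_kpts([6, 8, 10]))  # default push-up triplet
```

With this ordering, the default [6, 8, 10] resolves to the right shoulder, right elbow, and right wrist, so the angle measured is the one at the right elbow.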

AIGym Arguments

Here's a table with the AIGym arguments:

| Argument | Type | Default | Description |
|---|---|---|---|
| model | str | None | Path to an Ultralytics YOLO model file. |
| up_angle | float | 145.0 | Angle threshold for the 'up' pose. |
| down_angle | float | 90.0 | Angle threshold for the 'down' pose. |
| kpts | list[int] | [6, 8, 10] | List of three keypoint indices used for monitoring workouts. These keypoints correspond to body joints or parts, such as shoulders, elbows, and wrists, for exercises like push-ups, pull-ups, squats, and ab workouts. |
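The up_angle and down_angle thresholds suggest a simple two-state counter. The sketch below is a hypothetical RepCounter class, not the AIGym source. It marks the stage as "up" when the joint angle rises above up_angle, and counts a repetition when the angle then falls below down_angle. The gap between the two thresholds acts as hysteresis, so jitter around a single value does not produce extra counts.

```python
class RepCounter:
    """Hypothetical up/down state machine for counting repetitions.

    Defaults mirror the up_angle and down_angle values in the table above.
    """

    def __init__(self, up_angle=145.0, down_angle=90.0):
        self.up_angle = up_angle
        self.down_angle = down_angle
        self.stage = None  # "up", "down", or None until a threshold is crossed
        self.count = 0

    def update(self, angle):
        """Feed one joint angle (degrees) per frame; return the running count."""
        if angle > self.up_angle:
            self.stage = "up"
        elif angle < self.down_angle:
            if self.stage == "up":
                self.count += 1  # completed an up -> down transition
            self.stage = "down"
        # Angles between the two thresholds keep the previous stage
        return self.count


counter = RepCounter()
for angle in [170, 120, 80, 100, 160, 85, 150]:
    counter.update(angle)
print(counter.count, counter.stage)
```

Whether a rep is counted at the bottom of the movement or on the return to the top is a design choice; this sketch counts at the bottom.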

The AIGym solution also supports a range of object tracking arguments:

| Argument | Type | Default | Description |
|---|---|---|---|
| tracker | str | 'botsort.yaml' | Specifies the tracking algorithm to use, e.g., bytetrack.yaml or botsort.yaml. |
| conf | float | 0.1 | Sets the confidence threshold for detections; lower values allow more objects to be tracked but may include false positives. |
| iou | float | 0.7 | Sets the Intersection over Union (IoU) threshold for filtering overlapping detections. |
| classes | list | None | Filters results by class index. For example, classes=[0, 2, 3] tracks only the specified classes. |
| verbose | bool | True | Controls the display of tracking results, providing a visual output of tracked objects. |
| device | str | None | Specifies the device for inference (e.g., cpu, cuda:0 or 0). Lets users choose between the CPU, a specific GPU, or other compute devices for model execution. |
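To make the conf and iou thresholds concrete, here is a plain-Python sketch of confidence filtering followed by greedy non-maximum suppression (NMS). It is a generic illustration that assumes axis-aligned (x1, y1, x2, y2) boxes; it is not the Ultralytics implementation, which operates on batched tensors. The function names iou and filter_detections are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes in (x1, y1, x2, y2) format."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets, conf=0.1, iou_thr=0.7):
    """Drop boxes below conf, then greedily suppress boxes overlapping a kept one.

    dets is a list of (box, score) pairs; returns the kept pairs, best first.
    """
    candidates = sorted((d for d in dets if d[1] >= conf), key=lambda d: d[1], reverse=True)
    kept = []
    for box, score in candidates:
        if all(iou(box, k_box) <= iou_thr for k_box, _ in kept):
            kept.append((box, score))
    return kept


dets = [
    ((0, 0, 10, 10), 0.9),  # a person
    ((1, 1, 10, 10), 0.8),  # near-duplicate of the first box (IoU 0.81)
    ((20, 20, 30, 30), 0.6),  # a second person
    ((50, 50, 60, 60), 0.05),  # below conf, dropped
]
print(filter_detections(dets))
```

Raising conf removes weak detections before suppression; lowering iou_thr suppresses more aggressively, which can merge people who stand close together.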

Additionally, the following visualization arguments can be applied:

| Argument | Type | Default | Description |
|---|---|---|---|
| show | bool | False | If True, displays the annotated images or videos in a window. Useful for immediate visual feedback during development or testing. |
| line_width | int or None | None | Specifies the line width of bounding boxes. If None, the line width is adjusted automatically based on the image size. Provides visual customization for clarity. |
| show_conf | bool | True | Displays the confidence score for each detection next to its label. Gives insight into the model's certainty for each detection. |
| show_labels | bool | True | Displays labels for each detection in the visual output. Provides an immediate understanding of the detected objects. |
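When line_width is None, the width is derived from the image size. The exact rule is not stated in this guide, so the hypothetical auto_line_width function below only illustrates the idea under an assumed rule: a width proportional to the image dimensions, with a 2-pixel floor.

```python
def auto_line_width(image_shape, line_width=None):
    """Return line_width if given, otherwise scale it with the image size.

    image_shape is (height, width, channels), as in numpy's ndarray.shape.
    The 0.003 factor and the 2 px minimum are assumptions for illustration.
    """
    if line_width is not None:
        return line_width
    return max(round(sum(image_shape) / 2 * 0.003), 2)


print(auto_line_width((640, 640, 3)))  # small frame, thin lines
print(auto_line_width((1080, 1920, 3)))  # Full HD frame, thicker lines
print(auto_line_width((1080, 1920, 3), line_width=2))  # explicit value wins
```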

FAQ

How do I monitor my workouts using Ultralytics YOLO26?

To monitor your workouts with Ultralytics YOLO26, you can use its pose estimation capabilities to track and analyze key body landmarks and joints in real time. This gives you instant feedback on exercise form, counts repetitions, and measures performance metrics. You can start from the example code provided for push-ups, pull-ups, or ab workouts, as shown:

```python
import cv2

from ultralytics import solutions

cap = cv2.VideoCapture("path/to/video.mp4")
assert cap.isOpened(), "Error reading video file"

gym = solutions.AIGym(
    line_width=2,
    show=True,
    kpts=[6, 8, 10],
)

while cap.isOpened():
    success, im0 = cap.read()
    if not success:
        print("Video frame is empty or processing is complete.")
        break
    results = gym(im0)  # the annotated frame is available as results.plot_im

cap.release()  # free the video source
cv2.destroyAllWindows()
```

For further customization and settings, refer to the AIGym section of the documentation.

What are the benefits of using Ultralytics YOLO26 for workout monitoring?

Using Ultralytics YOLO26 for workout monitoring offers several key benefits:

- Optimized performance: By tailoring workouts to monitoring data, you can achieve better results.

- Goal achievement: Easily track and adjust fitness goals for measurable progress.

- Personalization: Get customized workout plans based on your individual data for optimal effectiveness.

- Health awareness: Detect early patterns that indicate potential health issues or overtraining.

- Informed decisions: Make data-driven decisions to adjust routines and set realistic goals.

You can watch a video demonstration on YouTube to see these benefits in action.

How accurate is Ultralytics YOLO26 in detecting and tracking exercises?

Ultralytics YOLO26 is highly accurate at detecting and tracking exercises thanks to its advanced pose estimation capabilities. It can precisely track key body landmarks and joints, providing real-time feedback on exercise form and performance metrics. The model's pretrained weights and robust architecture contribute to high accuracy and reliability, although results in practice also depend on factors such as camera angle, lighting, and how clearly the tracked joints are visible. For real-world examples, see the Real World Applications section of the documentation, which demonstrates push-up and pull-up counting.

Can I use Ultralytics YOLO26 for custom workout routines?

Yes, Ultralytics YOLO26 can be adapted to custom workout routines. The AIGym class can be configured for different exercises, such as push-ups, pull-ups, and ab workouts, by specifying the keypoints and angle thresholds that define each exercise. Here is an example setup:

```python
from ultralytics import solutions

gym = solutions.AIGym(
    line_width=2,
    show=True,
    kpts=[6, 8, 10],  # For pushups - can be customized for other exercises
)
```

For more details on configuring these arguments, refer to the AIGym Arguments section. This flexibility lets you monitor a variety of exercises and tailor routines to your fitness goals.
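Because an exercise is defined entirely by kpts, up_angle, and down_angle, one way to manage several routines is a small table of presets. Everything in the sketch below is hypothetical: the preset names, the squat triplet (right hip, right knee, right ankle, assuming COCO-Pose keypoint order), and all threshold values are illustrative starting points to tune on your own footage, not recommended settings.

```python
import copy

# Hypothetical presets; indices assume COCO-Pose order, angles are illustrative only
EXERCISE_PRESETS = {
    "pushup": {"kpts": [6, 8, 10], "up_angle": 145.0, "down_angle": 90.0},  # shoulder-elbow-wrist
    "squat": {"kpts": [12, 14, 16], "up_angle": 160.0, "down_angle": 100.0},  # hip-knee-ankle
}


def aigym_kwargs(exercise, model="yolo26n-pose.pt", **overrides):
    """Build keyword arguments for solutions.AIGym from a named preset."""
    if exercise not in EXERCISE_PRESETS:
        raise KeyError(f"unknown exercise {exercise!r}; known: {sorted(EXERCISE_PRESETS)}")
    # Deep-copy so callers cannot mutate the shared preset lists
    kwargs = {"model": model, **copy.deepcopy(EXERCISE_PRESETS[exercise])}
    kwargs.update(overrides)  # e.g. show=True or line_width=2
    return kwargs


print(aigym_kwargs("squat", show=True))
```

With Ultralytics installed, the result can be unpacked straight into the constructor, for example gym = solutions.AIGym(**aigym_kwargs("squat", show=True)), since model, kpts, up_angle, down_angle, and show are all documented AIGym arguments.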

How can I save the workout monitoring output with Ultralytics YOLO26?

To save the workout monitoring output, you can extend the code with a video writer that saves the processed frames. Here's an example:

```python
import cv2

from ultralytics import solutions

cap = cv2.VideoCapture("path/to/video.mp4")
assert cap.isOpened(), "Error reading video file"

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
video_writer = cv2.VideoWriter("workouts.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

gym = solutions.AIGym(
    line_width=2,
    show=True,
    kpts=[6, 8, 10],
)

while cap.isOpened():
    success, im0 = cap.read()
    if not success:
        print("Video frame is empty or processing is complete.")
        break
    results = gym(im0)
    video_writer.write(results.plot_im)

cap.release()
video_writer.release()
cv2.destroyAllWindows()
```

This setup writes the annotated video to an output file, so you can review your workout later or share it with trainers for additional feedback.
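The examples in this guide write .avi files with the mp4v codec. Which FourCC and container combinations work depends on how OpenCV was built on your system. If the output file is empty or will not play, choosing a codec that matches the file extension is a common first fix. The hypothetical helper below sketches that idea; the pairings are assumptions, not guarantees.

```python
import os

# Commonly used extension -> FourCC pairings for cv2.VideoWriter (assumed, build-dependent)
FOURCC_BY_EXT = {".mp4": "mp4v", ".avi": "XVID"}


def fourcc_for(path, default="mp4v"):
    """Return a four-character codec code that usually suits the file extension."""
    ext = os.path.splitext(path)[1].lower()
    return FOURCC_BY_EXT.get(ext, default)


print(fourcc_for("workouts.avi"))
print(fourcc_for("workouts_output.MP4"))
```

The string is meant to be unpacked into OpenCV, e.g. cv2.VideoWriter(path, cv2.VideoWriter_fourcc(*fourcc_for(path)), fps, (w, h)).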