Quick Start Guide: NVIDIA Jetson with Ultralytics YOLO26

This comprehensive guide provides a detailed walkthrough for deploying Ultralytics YOLO26 on NVIDIA Jetson devices. It also presents performance benchmarks that demonstrate the capabilities of YOLO26 on these small but powerful devices.

New product support

We have updated this guide with the latest NVIDIA Jetson AGX Thor Developer Kit, which delivers up to 2070 FP4 TFLOPS of AI compute and 128 GB of memory, with power configurable between 40 W and 130 W. It offers 7.5x higher AI compute and 3.5x better energy efficiency than NVIDIA Jetson AGX Orin, so that the most popular AI models run smoothly.

Watch: How to Use Ultralytics YOLO26 on NVIDIA Jetson Devices

Note

This guide has been tested with the NVIDIA Jetson AGX Thor Developer Kit (Jetson T5000) running the latest stable JetPack release, JP7.0; the NVIDIA Jetson AGX Orin Developer Kit (64GB) running JP6.2; the NVIDIA Jetson Orin Nano Super Developer Kit running JP6.1; the Seeed Studio reComputer J4012, based on the NVIDIA Jetson Orin NX 16GB, running JP6.0/JP5.1.3; and the Seeed Studio reComputer J1020 v2, based on the NVIDIA Jetson Nano 4GB, running JP4.6.1. It is expected to work across the entire NVIDIA Jetson hardware lineup, including the latest and legacy devices.

What is NVIDIA Jetson?

NVIDIA Jetson is a series of embedded computing boards designed to bring accelerated AI (artificial intelligence) computing to edge devices. These compact and powerful devices are built around NVIDIA's GPU architecture and can run complex AI algorithms and deep learning models directly on the device, without relying on cloud computing resources. Jetson boards are often used in robotics, autonomous vehicles, industrial automation, and other applications where AI inference must be performed locally with low latency and high efficiency. These boards are also based on the ARM64 architecture and consume less power than traditional GPU computing devices.

NVIDIA Jetson Series Comparison

NVIDIA Jetson AGX Thor is the latest iteration of the NVIDIA Jetson family. It is based on the NVIDIA Blackwell architecture and brings drastically improved AI performance compared to previous generations. The table below compares several Jetson devices in the ecosystem.

| | Jetson AGX Thor (T5000) | Jetson AGX Orin 64GB | Jetson Orin NX 16GB | Jetson Orin Nano Super | Jetson AGX Xavier | Jetson Xavier NX | Jetson Nano |
|---|---|---|---|---|---|---|---|
| AI Performance | 2070 TFLOPS | 275 TOPS | 100 TOPS | 67 TOPS | 32 TOPS | 21 TOPS | 472 GFLOPS |
| GPU | 2560-core NVIDIA Blackwell architecture GPU with 96 Tensor Cores | 2048-core NVIDIA Ampere architecture GPU with 64 Tensor Cores | 1024-core NVIDIA Ampere architecture GPU with 32 Tensor Cores | 1024-core NVIDIA Ampere architecture GPU with 32 Tensor Cores | 512-core NVIDIA Volta architecture GPU with 64 Tensor Cores | 384-core NVIDIA Volta™ architecture GPU with 48 Tensor Cores | 128-core NVIDIA Maxwell™ architecture GPU |
| GPU Max Frequency | 1.57 GHz | 1.3 GHz | 918 MHz | 1020 MHz | 1377 MHz | 1100 MHz | 921 MHz |
| CPU | 14-core Arm® Neoverse®-V3AE 64-bit CPU 1MB L2 + 16MB L3 | 12-core NVIDIA Arm® Cortex A78AE v8.2 64-bit CPU 3MB L2 + 6MB L3 | 8-core NVIDIA Arm® Cortex A78AE v8.2 64-bit CPU 2MB L2 + 4MB L3 | 6-core Arm® Cortex®-A78AE v8.2 64-bit CPU 1.5MB L2 + 4MB L3 | 8-core NVIDIA Carmel Arm®v8.2 64-bit CPU 8MB L2 + 4MB L3 | 6-core NVIDIA Carmel Arm®v8.2 64-bit CPU 6MB L2 + 4MB L3 | Quad-Core Arm® Cortex®-A57 MPCore processor |
| CPU Max Frequency | 2.6 GHz | 2.2 GHz | 2.0 GHz | 1.7 GHz | 2.2 GHz | 1.9 GHz | 1.43 GHz |
| Memory | 128GB 256-bit LPDDR5X 273GB/s | 64GB 256-bit LPDDR5 204.8GB/s | 16GB 128-bit LPDDR5 102.4GB/s | 8GB 128-bit LPDDR5 102GB/s | 32GB 256-bit LPDDR4x 136.5GB/s | 8GB 128-bit LPDDR4x 59.7GB/s | 4GB 64-bit LPDDR4 25.6GB/s |

For a more detailed comparison table, please visit the Compare Specifications section of the official NVIDIA Jetson page.

What is NVIDIA JetPack?

The NVIDIA JetPack SDK, which powers the Jetson modules, is the most comprehensive solution for building end-to-end accelerated AI applications and shortening time to market, and it provides a full development environment. JetPack includes Jetson Linux with the bootloader, Linux kernel, Ubuntu desktop environment, and a complete set of libraries for accelerating GPU computing, multimedia, graphics, and computer vision. It also includes samples, documentation, and developer tools for both the host computer and the developer kit, and it supports higher-level SDKs such as DeepStream for streaming video analytics, Isaac for robotics, and Riva for conversational AI.

Flash JetPack to NVIDIA Jetson

The first step after getting an NVIDIA Jetson device is to flash NVIDIA JetPack to it. There are several different ways to flash NVIDIA Jetson devices.

1. If you own an official NVIDIA Development Kit such as the Jetson AGX Thor Developer Kit, you can download an image and prepare a bootable USB stick to flash JetPack to the included SSD.

2. If you own an official NVIDIA Development Kit such as the Jetson Orin Nano Developer Kit, you can download an image and prepare an SD card with JetPack for booting the device.

3. If you own any other NVIDIA Development Kit, you can flash JetPack to the device using SDK Manager.

4. If you own a Seeed Studio reComputer J4012 device, you can flash JetPack to the included SSD, and if you own a Seeed Studio reComputer J1020 v2 device, you can flash JetPack to the eMMC/SSD.

5. If you own any other third-party device powered by an NVIDIA Jetson module, it is recommended to follow the command-line flashing method.

Note

For methods 1, 4, and 5 above, after flashing the system and booting the device, please enter "sudo apt update && sudo apt install nvidia-jetpack -y" in the device terminal to install all the remaining JetPack components.

JetPack Support Based on Jetson Device

The table below highlights the NVIDIA JetPack versions supported by different NVIDIA Jetson devices.

| | JetPack 4 | JetPack 5 | JetPack 6 | JetPack 7 |
|---|---|---|---|---|
| Jetson Nano | ✅ | ❌ | ❌ | ❌ |
| Jetson TX2 | ✅ | ❌ | ❌ | ❌ |
| Jetson Xavier NX | ✅ | ✅ | ❌ | ❌ |
| Jetson AGX Xavier | ✅ | ✅ | ❌ | ❌ |
| Jetson AGX Orin | ❌ | ✅ | ✅ | ❌ |
| Jetson Orin NX | ❌ | ✅ | ✅ | ❌ |
| Jetson Orin Nano | ❌ | ✅ | ✅ | ❌ |
| Jetson AGX Thor | ❌ | ❌ | ❌ | ✅ |
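The support matrix above can also be written as a small lookup, which may be convenient in setup scripts. This is only a minimal sketch: the dictionary transcribes the table, and the names JETPACK_SUPPORT, supported_jetpacks, and is_supported are illustrative and not part of any NVIDIA or Ultralytics API.

```python
# JetPack major versions supported per device, transcribed from the table above.
JETPACK_SUPPORT = {
    "Jetson Nano": {4},
    "Jetson TX2": {4},
    "Jetson Xavier NX": {4, 5},
    "Jetson AGX Xavier": {4, 5},
    "Jetson AGX Orin": {5, 6},
    "Jetson Orin NX": {5, 6},
    "Jetson Orin Nano": {5, 6},
    "Jetson AGX Thor": {7},
}


def supported_jetpacks(device):
    """Return the sorted JetPack major versions listed for a device."""
    if device not in JETPACK_SUPPORT:
        raise KeyError(f"Unknown device: {device!r}")
    return sorted(JETPACK_SUPPORT[device])


def is_supported(device, jetpack_major):
    """Check whether the table marks this device/JetPack pair as supported."""
    return jetpack_major in JETPACK_SUPPORT.get(device, set())
```

For example, supported_jetpacks("Jetson AGX Orin") lists JetPack 5 and 6, while is_supported("Jetson Nano", 6) is false.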

Quick Start with Docker

The fastest way to get started with Ultralytics YOLO26 on NVIDIA Jetson is to run it with the pre-built Docker images for Jetson. Refer to the table above and choose the JetPack version that matches your Jetson device.

t=ultralytics/ultralytics:latest-jetson-jetpack4

sudo docker pull $t && sudo docker run -it --ipc=host --runtime=nvidia $t

t=ultralytics/ultralytics:latest-jetson-jetpack5

sudo docker pull $t && sudo docker run -it --ipc=host --runtime=nvidia $t

t=ultralytics/ultralytics:latest-jetson-jetpack6

sudo docker pull $t && sudo docker run -it --ipc=host --runtime=nvidia $t

t=ultralytics/ultralytics:latest-nvidia-arm64

sudo docker pull $t && sudo docker run -it --ipc=host --runtime=nvidia $t

After this is done, skip to the Use TensorRT on NVIDIA Jetson section.
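If you script the Docker setup, the choice of image can be derived from the JetPack major version. The sketch below is a hypothetical helper, not an Ultralytics tool: the tags are copied from the commands above, and pairing the latest-nvidia-arm64 tag with JetPack 7 is an assumption based on the order in which the commands are listed.

```python
import shlex

# Image tags taken from the commands above. Mapping the last tag to JetPack 7
# is an assumption based on the order of those commands.
DOCKER_TAGS = {
    4: "ultralytics/ultralytics:latest-jetson-jetpack4",
    5: "ultralytics/ultralytics:latest-jetson-jetpack5",
    6: "ultralytics/ultralytics:latest-jetson-jetpack6",
    7: "ultralytics/ultralytics:latest-nvidia-arm64",
}


def docker_commands(jetpack_major):
    """Build the pull and run commands for a JetPack major version."""
    if jetpack_major not in DOCKER_TAGS:
        raise ValueError(f"No image listed for JetPack {jetpack_major}")
    tag = DOCKER_TAGS[jetpack_major]
    pull = ["sudo", "docker", "pull", tag]
    run = ["sudo", "docker", "run", "-it", "--ipc=host", "--runtime=nvidia", tag]
    return pull, run


if __name__ == "__main__":
    # Print the commands instead of running them, so they can be reviewed first.
    for cmd in docker_commands(6):
        print(shlex.join(cmd))
```

Printing rather than executing keeps the helper safe to run on a development machine that has no Docker or NVIDIA runtime installed.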

Start with Native Installation

For a native installation without Docker, please refer to the steps below.

Run on JetPack 7.0

Install Ultralytics Package

Here we will install the Ultralytics package on the Jetson with optional dependencies so that we can export PyTorch models to other formats. We will mainly focus on NVIDIA TensorRT exports, because TensorRT lets us get the maximum performance out of Jetson devices.

Update the package list, install pip, and upgrade it to the latest version

sudo apt update

sudo apt install python3-pip -y

pip install -U pip

Install the ultralytics pip package with optional dependencies

pip install ultralytics[export]

Reboot the device

sudo reboot

Install PyTorch and Torchvision

The ultralytics installation above installs Torch and Torchvision. However, these two packages installed via pip are not suitable for the Jetson AGX Thor, which ships with JetPack 7.0 and CUDA 13. We therefore need to install them manually.

Install torch and torchvision for JP7.0

pip install torch torchvision --index-url https://download.pytorch.org/whl/cu130

Install onnxruntime-gpu

The onnxruntime-gpu package hosted on PyPI does not have aarch64 binaries for the Jetson, so we need to install this package manually. It is required for some of the exports.

Here we will download and install onnxruntime-gpu 1.24.0 with Python 3.12 support.

pip install https://github.com/ultralytics/assets/releases/download/v0.0.0/onnxruntime_gpu-1.24.0-cp312-cp312-linux_aarch64.whl
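After the manual installs above, a quick sanity check can confirm that the packages are importable and that PyTorch can see the GPU. The following is a minimal sketch using only the standard library plus torch.cuda.is_available(); the helper names installed and cuda_status are illustrative. Note that the onnxruntime-gpu wheel is imported under the module name onnxruntime.

```python
import importlib.util


def installed(packages):
    """Map each top-level module name to True if it can be found for import."""
    return {name: importlib.util.find_spec(name) is not None for name in packages}


def cuda_status():
    """Return (torch_version, cuda_available), or None if torch is missing."""
    if importlib.util.find_spec("torch") is None:
        return None
    import torch  # imported lazily so the script also runs without torch

    return torch.__version__, torch.cuda.is_available()


if __name__ == "__main__":
    print(installed(["torch", "torchvision", "onnxruntime", "ultralytics"]))
    print(cuda_status())
```

If cuda_status() reports that CUDA is unavailable on the Jetson, the most likely cause is that a CPU-only wheel from PyPI is still installed instead of the Jetson-specific build.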

Run on JetPack 6.1

Install Ultralytics Package

Here we will install the Ultralytics package on the Jetson with optional dependencies so that we can export PyTorch models to other formats. We will mainly focus on NVIDIA TensorRT exports, because TensorRT lets us get the maximum performance out of Jetson devices.

Update the package list, install pip, and upgrade it to the latest version

sudo apt update

sudo apt install python3-pip -y

pip install -U pip

Install the ultralytics pip package with optional dependencies

pip install ultralytics[export]

Reboot the device

sudo reboot

Install PyTorch and Torchvision

The ultralytics installation above installs Torch and Torchvision. However, these two packages installed via pip are not compatible with the Jetson platform, which is based on the ARM64 architecture. We therefore need to manually install a pre-built PyTorch pip wheel and compile or install Torchvision from source.

Install torch 2.5.0 and torchvision 0.20 for JP6.1

pip install https://github.com/ultralytics/assets/releases/download/v0.0.0/torch-2.5.0a0+872d972e41.nv24.08-cp310-cp310-linux_aarch64.whl

pip install https://github.com/ultralytics/assets/releases/download/v0.0.0/torchvision-0.20.0a0+afc54f7-cp310-cp310-linux_aarch64.whl

Note

Visit the PyTorch for Jetson page to access all the different versions of PyTorch for different JetPack versions. For a more detailed list of PyTorch and Torchvision compatibility, visit the PyTorch and Torchvision compatibility page.

Install cuSPARSELt to fix a dependency issue with torch 2.5.0

wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2204/arm64/cuda-keyring_1.1-1_all.deb

sudo dpkg -i cuda-keyring_1.1-1_all.deb

sudo apt-get update

sudo apt-get -y install libcusparselt0 libcusparselt-dev

Install onnxruntime-gpu

The onnxruntime-gpu package hosted on PyPI does not have aarch64 binaries for the Jetson, so we need to install this package manually. It is required for some of the exports.

All available onnxruntime-gpu packages, organized by JetPack version, Python version, and other compatibility details, are listed in the Jetson Zoo ONNX Runtime compatibility matrix.

For JetPack 6 with Python 3.10 support, you can install onnxruntime-gpu 1.23.0:

pip install https://github.com/ultralytics/assets/releases/download/v0.0.0/onnxruntime_gpu-1.23.0-cp310-cp310-linux_aarch64.whl

Alternatively, for onnxruntime-gpu 1.20.0:

pip install https://github.com/ultralytics/assets/releases/download/v0.0.0/onnxruntime_gpu-1.20.0-cp310-cp310-linux_aarch64.whl
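The wheel filenames used in these steps encode the Python version they were built for (cp310 for Python 3.10, cp312 for Python 3.12, cp38 for Python 3.8), and a mismatch with the interpreter on the device is a common reason for pip refusing a wheel. The sketch below splits a filename according to the standard wheel naming scheme and compares the CPython tag with the running interpreter. The helper names are illustrative, and the check is deliberately simplified: it only handles exact cpXY tags, not abi3 or pure-Python wheels.

```python
import sys


def wheel_tags(filename):
    """Split a wheel filename into (name, version, python, abi, platform)."""
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel filename")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) not in (5, 6):  # 6 when an optional build tag is present
        raise ValueError(f"unexpected wheel filename: {filename}")
    name, version = parts[0], parts[1]
    python_tag, abi_tag, platform_tag = parts[-3:]
    return name, version, python_tag, abi_tag, platform_tag


def matches_interpreter(filename, version_info=sys.version_info):
    """Check whether the wheel's CPython tag matches the given interpreter."""
    python_tag = wheel_tags(filename)[2]
    return python_tag == f"cp{version_info[0]}{version_info[1]}"


if __name__ == "__main__":
    wheel = "onnxruntime_gpu-1.23.0-cp310-cp310-linux_aarch64.whl"
    print(wheel_tags(wheel))
    print("matches this interpreter:", matches_interpreter(wheel))
```

Running this on the device before downloading a large wheel shows quickly whether the wheel targets the Python version that the installed JetPack release provides.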

Run on JetPack 5.1.2

Install Ultralytics Package

Here we will install the Ultralytics package on the Jetson with optional dependencies so that we can export PyTorch models to other formats. We will mainly focus on NVIDIA TensorRT exports, because TensorRT lets us get the maximum performance out of Jetson devices.

Update the package list, install pip, and upgrade it to the latest version

sudo apt update

sudo apt install python3-pip -y

pip install -U pip

Install the ultralytics pip package with optional dependencies

pip install ultralytics[export]

Reboot the device

sudo reboot

Install PyTorch and Torchvision

The ultralytics installation above installs Torch and Torchvision. However, these two packages installed via pip are not compatible with the Jetson platform, which is based on the ARM64 architecture. We therefore need to manually install a pre-built PyTorch pip wheel and compile or install Torchvision from source.

Uninstall the currently installed PyTorch and Torchvision

pip uninstall torch torchvision

Install torch 2.2.0 and torchvision 0.17.2 for JP5.1.2

pip install https://github.com/ultralytics/assets/releases/download/v0.0.0/torch-2.2.0-cp38-cp38-linux_aarch64.whl

pip install https://github.com/ultralytics/assets/releases/download/v0.0.0/torchvision-0.17.2+c1d70fe-cp38-cp38-linux_aarch64.whl

Note

Visit the PyTorch for Jetson page to access all the different versions of PyTorch for different JetPack versions. For a more detailed list of PyTorch and Torchvision compatibility, visit the PyTorch and Torchvision compatibility page.

Install onnxruntime-gpu

The onnxruntime-gpu package hosted on PyPI does not have aarch64 binaries for the Jetson, so we need to install this package manually. It is required for some of the exports.

All available onnxruntime-gpu packages, organized by JetPack version, Python version, and other compatibility details, are listed in the Jetson Zoo ONNX Runtime compatibility matrix. Here we will download and install onnxruntime-gpu 1.17.0 with Python 3.8 support.

wget https://nvidia.box.com/shared/static/zostg6agm00fb6t5uisw51qi6kpcuwzd.whl -O onnxruntime_gpu-1.17.0-cp38-cp38-linux_aarch64.whl

pip install onnxruntime_gpu-1.17.0-cp38-cp38-linux_aarch64.whl

Note

onnxruntime-gpu automatically changes the installed numpy version to the latest one. We therefore need to reinstall numpy 1.23.5 to fix an issue, by running:

pip install numpy==1.23.5

Use TensorRT on NVIDIA Jetson

Among all the model export formats supported by Ultralytics, TensorRT offers the highest inference performance on NVIDIA Jetson devices, which makes it our top recommendation for Jetson deployments. For setup instructions and advanced usage, see our dedicated TensorRT integration guide.

Convert Model to TensorRT and Run Inference

The YOLO26n model in PyTorch format is converted to TensorRT so that inference can be run with the exported model.

Example

from ultralytics import YOLO

# Load a YOLO26n PyTorch model

model = YOLO("yolo26n.pt")

# Export the model to TensorRT

model.export(format="engine") # creates 'yolo26n.engine'

# Load the exported TensorRT model

trt_model = YOLO("yolo26n.engine")

# Run inference

results = trt_model("https://ultralytics.com/images/bus.jpg")

# Export a YOLO26n PyTorch model to TensorRT format

yolo export model=yolo26n.pt format=engine # creates 'yolo26n.engine'

# Run inference with the exported model

yolo predict model=yolo26n.engine source='https://ultralytics.com/images/bus.jpg'
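The benchmark tables later in this guide report TensorRT at FP32, FP16, and INT8 precision. The sketch below shows one way to select the precision at export time by building the keyword arguments for model.export(). It assumes the half, int8, and data export arguments behave as described on the Ultralytics Export page; the helper name trt_export_kwargs is hypothetical, and the arguments should be verified against the Export page for your installed version.

```python
def trt_export_kwargs(precision="fp32", data=None):
    """Build keyword arguments for model.export() for a TensorRT precision."""
    kwargs = {"format": "engine"}
    if precision == "fp16":
        kwargs["half"] = True  # FP16 engine
    elif precision == "int8":
        kwargs["int8"] = True  # INT8 engine (assumed to need calibration data)
        if data is not None:
            kwargs["data"] = data  # dataset YAML used for calibration
    elif precision != "fp32":
        raise ValueError(f"Unsupported precision: {precision!r}")
    return kwargs


# Usage on the Jetson (requires ultralytics and TensorRT to be installed):
#   from ultralytics import YOLO
#   YOLO("yolo26n.pt").export(**trt_export_kwargs("fp16"))

if __name__ == "__main__":
    for precision in ("fp32", "fp16", "int8"):
        print(precision, trt_export_kwargs(precision, data="coco128.yaml"))
```

Keeping the precision choice in one function makes it easier to export the same model several times and compare the resulting engines.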

Note

Visit the Export page to access additional arguments when exporting models to different model formats.

Use NVIDIA Deep Learning Accelerator (DLA)

NVIDIA Deep Learning Accelerator (DLA) is a specialized hardware component built into NVIDIA Jetson devices that optimizes deep learning inference for energy efficiency and performance. By offloading tasks from the GPU (freeing it up for more intensive processes), DLA enables models to run with lower power consumption while maintaining high throughput, which makes it well suited to embedded systems and real-time AI applications.

The following Jetson devices are equipped with DLA hardware:

| Jetson Device | DLA Cores | DLA Max Frequency |
|---|---|---|
| Jetson AGX Orin Series | 2 | 1.6 GHz |
| Jetson Orin NX 16GB | 2 | 614 MHz |
| Jetson Orin NX 8GB | 1 | 614 MHz |
| Jetson AGX Xavier Series | 2 | 1.4 GHz |
| Jetson Xavier NX Series | 2 | 1.1 GHz |

Example

from ultralytics import YOLO

# Load a YOLO26n PyTorch model

model = YOLO("yolo26n.pt")

# Export the model to TensorRT with DLA enabled (only works with FP16 or INT8)

model.export(format="engine", device="dla:0", half=True) # dla:0 or dla:1 corresponds to the DLA cores

# Load the exported TensorRT model

trt_model = YOLO("yolo26n.engine")

# Run inference

results = trt_model("https://ultralytics.com/images/bus.jpg")

# Export a YOLO26n PyTorch model to TensorRT format with DLA enabled (only works with FP16 or INT8)

# Once DLA core number is specified at export, it will use the same core at inference

yolo export model=yolo26n.pt format=engine device="dla:0" half=True # dla:0 or dla:1 corresponds to the DLA cores

# Run inference with the exported model on the DLA

yolo predict model=yolo26n.engine source='https://ultralytics.com/images/bus.jpg'

Note

When using DLA exports, some layers may not be supported on the DLA and will fall back to the GPU for execution. This fallback can introduce additional latency and affect overall inference performance. DLA is therefore not primarily designed to reduce inference latency compared to TensorRT running entirely on the GPU. Instead, its primary purpose is to increase throughput and improve energy efficiency.
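Because the number of DLA cores differs between devices, it can help to validate the core index before passing a device string such as dla:0 or dla:1 to the export call. The following is a minimal sketch: the core counts are transcribed from the DLA table above, and the names DLA_CORES and dla_device are illustrative rather than part of the Ultralytics API.

```python
# DLA core counts transcribed from the DLA table above.
DLA_CORES = {
    "Jetson AGX Orin Series": 2,
    "Jetson Orin NX 16GB": 2,
    "Jetson Orin NX 8GB": 1,
    "Jetson AGX Xavier Series": 2,
    "Jetson Xavier NX Series": 2,
}


def dla_device(device_name, core=0):
    """Return the 'dla:N' device string after validating the core index."""
    cores = DLA_CORES.get(device_name, 0)
    if cores == 0:
        raise ValueError(f"{device_name!r} is not listed as having DLA hardware")
    if not 0 <= core < cores:
        raise ValueError(f"{device_name!r} has {cores} DLA core(s); got core={core}")
    return f"dla:{core}"


if __name__ == "__main__":
    print(dla_device("Jetson Orin NX 16GB", core=1))
```

The returned string can then be used as the device argument of the export call shown in the example above, together with half=True.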

NVIDIA Jetson YOLO11/YOLO26 Benchmarks

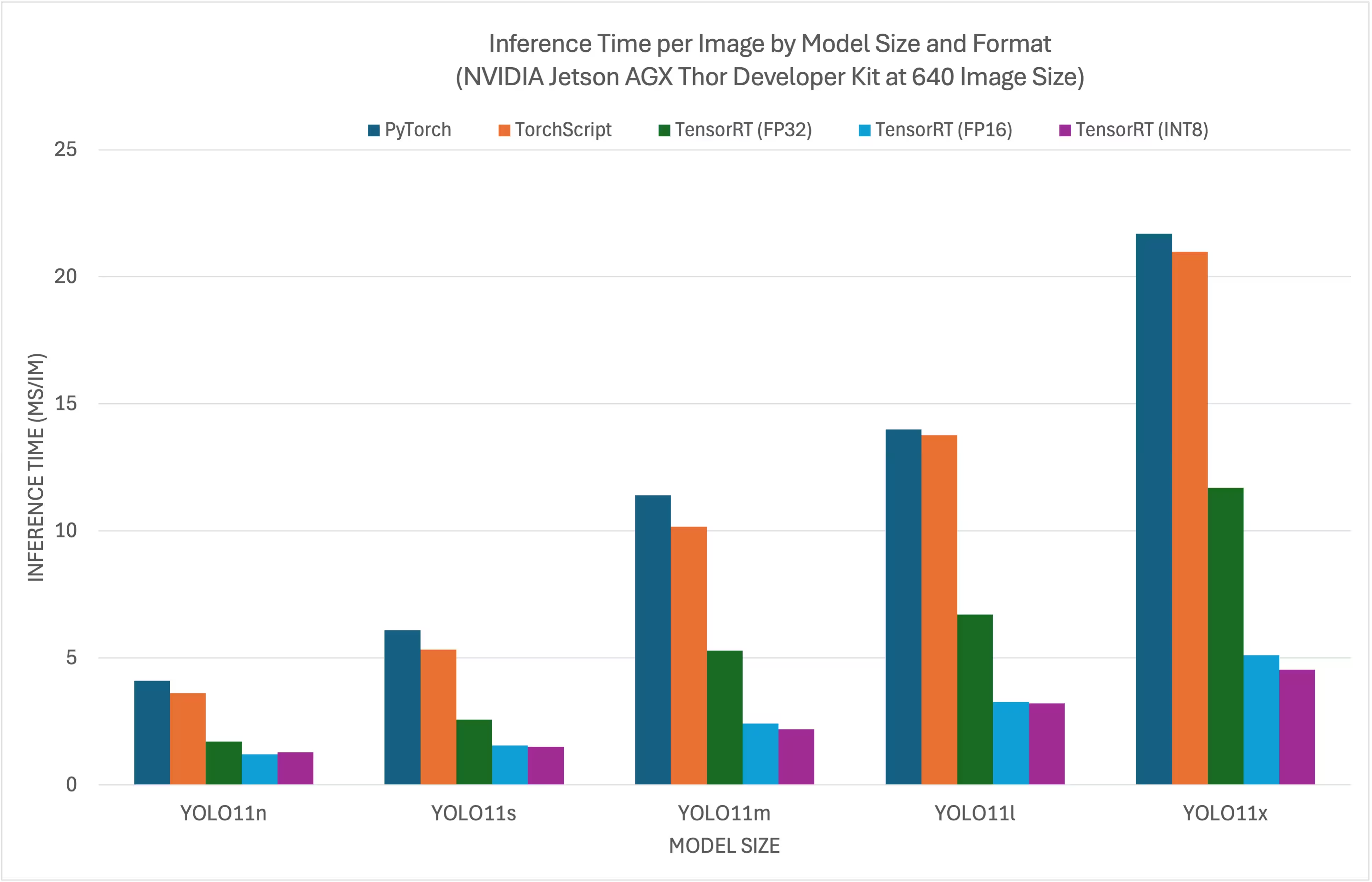

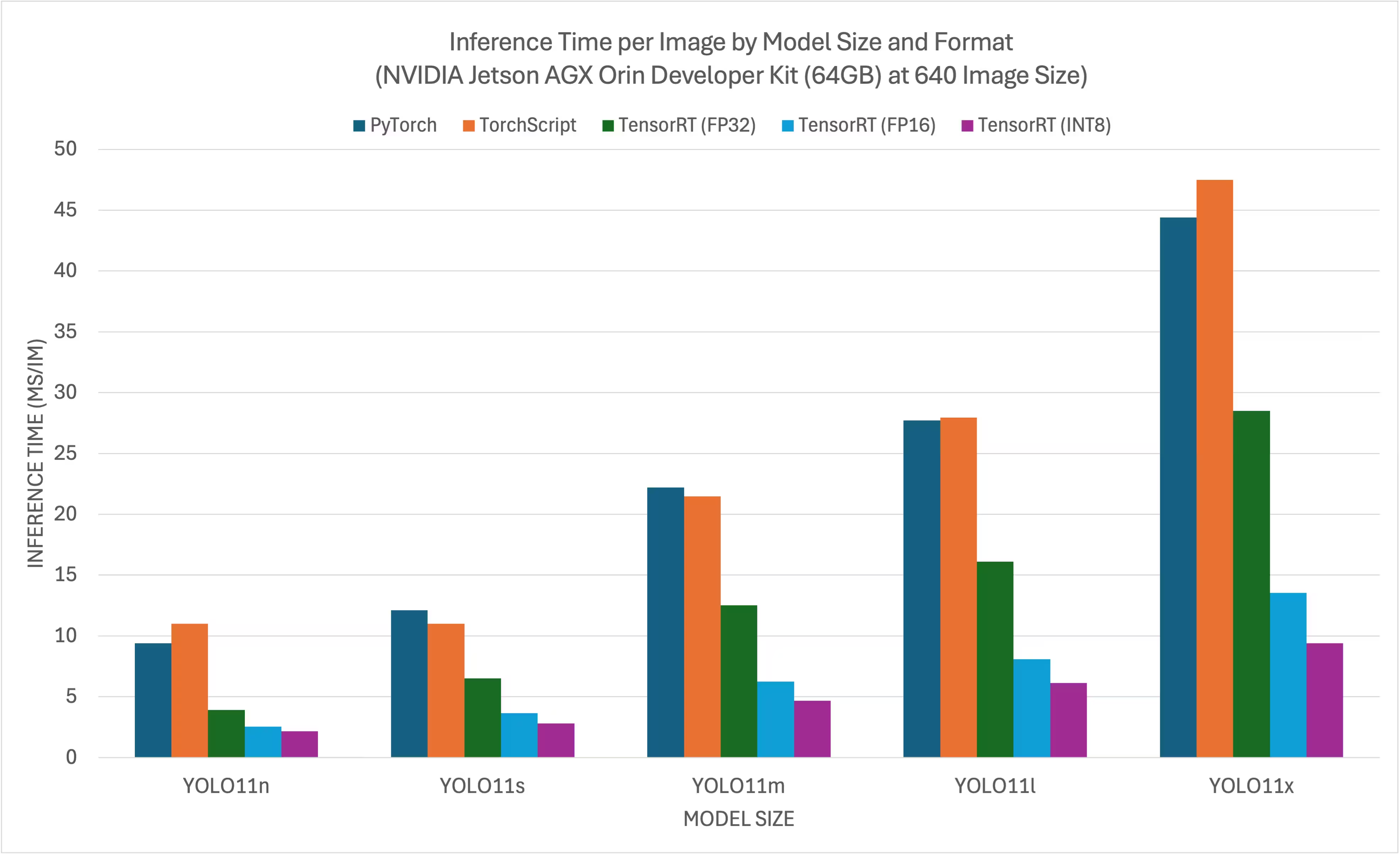

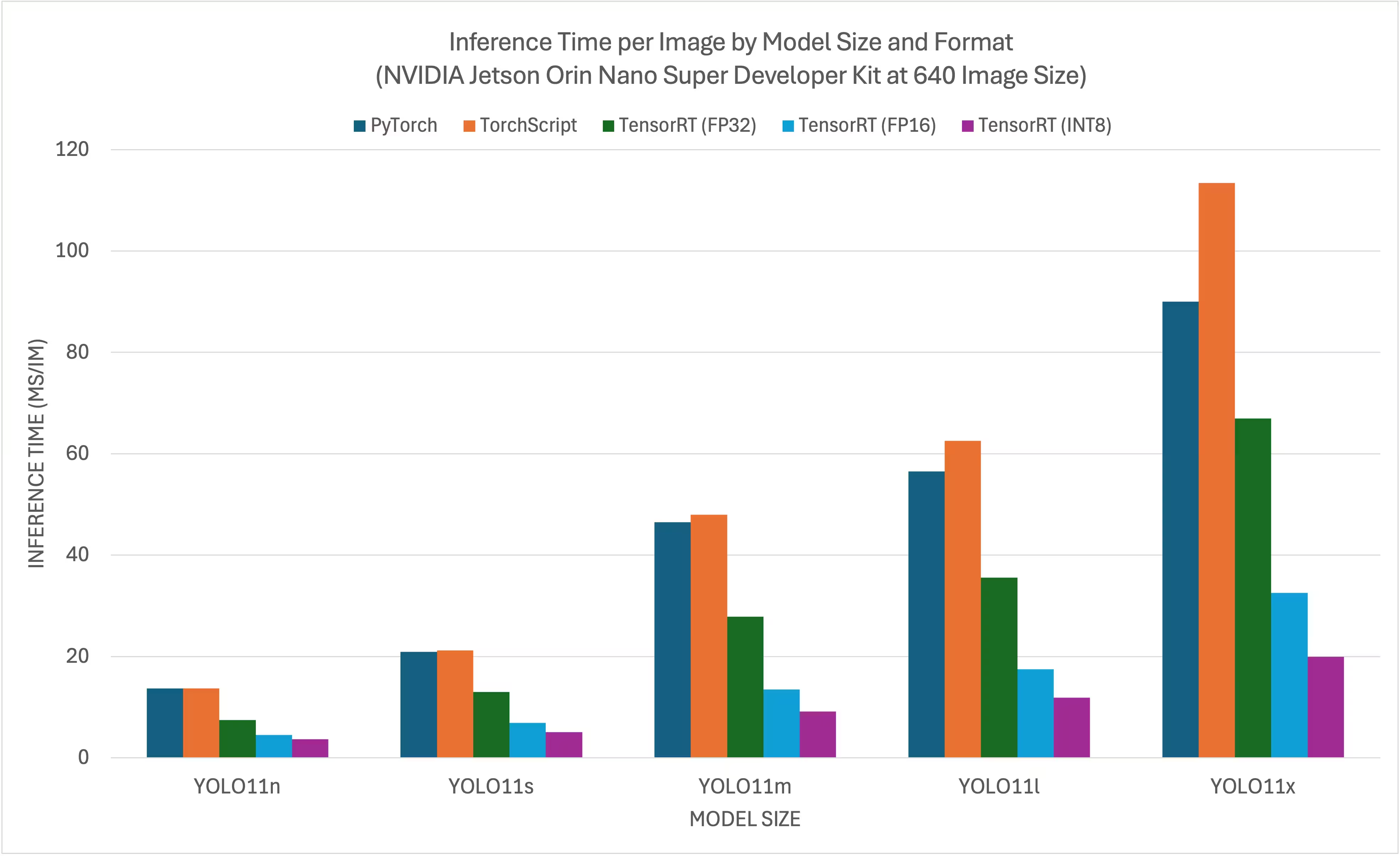

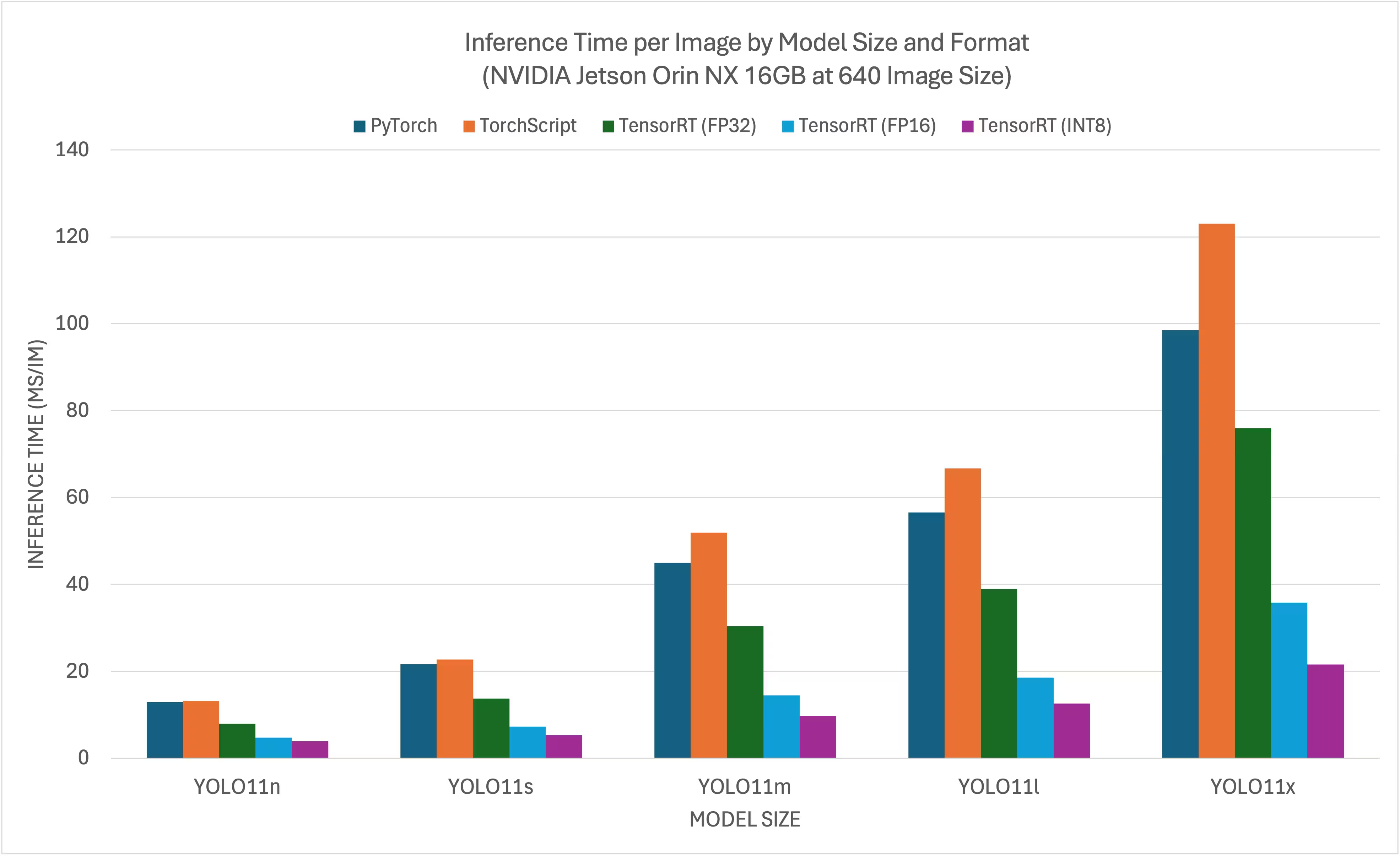

YOLO11/YOLO26 benchmarks were run by the Ultralytics team on 11 different model formats, measuring speed and accuracy: PyTorch, TorchScript, ONNX, OpenVINO, TensorRT, TF SavedModel, TF GraphDef, TF Lite, MNN, NCNN, ExecuTorch. Benchmarks were run on the NVIDIA Jetson AGX Thor Developer Kit, NVIDIA Jetson AGX Orin Developer Kit (64GB), NVIDIA Jetson Orin Nano Super Developer Kit, and Seeed Studio reComputer J4012 powered by the Jetson Orin NX 16GB, at FP32 precision with the default input image size of 640.

Comparison Charts

Even though all model exports work on NVIDIA Jetson, we have only included PyTorch, TorchScript, and TensorRT in the comparison charts below, because they make use of the GPU on the Jetson and are expected to produce the best results. All the other exports only use the CPU, and their performance is not as good as that of the three above. You can find benchmarks for all exports in the section after these charts.

NVIDIA Jetson AGX Thor Developer Kit

NVIDIA Jetson AGX Orin Developer Kit (64GB)

NVIDIA Jetson Orin Nano Super Developer Kit

NVIDIA Jetson Orin NX 16GB

Detailed Comparison Tables

The tables below show the benchmark results for five different models (YOLO11n, YOLO11s, YOLO11m, YOLO11l, YOLO11x) across 11 different formats (PyTorch, TorchScript, ONNX, OpenVINO, TensorRT, TF SavedModel, TF GraphDef, TF Lite, MNN, NCNN, ExecuTorch), giving the status, size, mAP50-95(B) metric, and inference time for each combination.

NVIDIA Jetson AGX Thor Developer Kit

Performance

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 5.3 | 0.4798 | 7.39 |

| TorchScript | ✅ | 9.8 | 0.4789 | 4.21 |

| ONNX | ✅ | 9.5 | 0.4767 | 6.58 |

| OpenVINO | ✅ | 10.1 | 0.4794 | 17.50 |

| TensorRT (FP32) | ✅ | 13.9 | 0.4791 | 1.90 |

| TensorRT (FP16) | ✅ | 7.6 | 0.4797 | 1.39 |

| TensorRT (INT8) | ✅ | 6.5 | 0.4273 | 1.52 |

| TF SavedModel | ✅ | 25.7 | 0.4764 | 47.24 |

| TF GraphDef | ✅ | 9.5 | 0.4764 | 45.98 |

| TF Lite | ✅ | 9.9 | 0.4764 | 182.04 |

| MNN | ✅ | 9.4 | 0.4784 | 21.83 |

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 19.5 | 0.5738 | 7.99 |

| TorchScript | ✅ | 36.8 | 0.5664 | 6.01 |

| ONNX | ✅ | 36.5 | 0.5666 | 9.31 |

| OpenVINO | ✅ | 38.5 | 0.5656 | 35.56 |

| TensorRT (FP32) | ✅ | 38.9 | 0.5664 | 2.95 |

| TensorRT (FP16) | ✅ | 21.0 | 0.5650 | 1.77 |

| TensorRT (INT8) | ✅ | 13.5 | 0.5010 | 1.75 |

| TF SavedModel | ✅ | 96.6 | 0.5665 | 88.87 |

| TF GraphDef | ✅ | 36.5 | 0.5665 | 89.20 |

| TF Lite | ✅ | 36.9 | 0.5665 | 604.25 |

| MNN | ✅ | 36.4 | 0.5651 | 53.75 |

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 42.2 | 0.6237 | 10.76 |

| TorchScript | ✅ | 78.5 | 0.6217 | 10.57 |

| ONNX | ✅ | 78.2 | 0.6211 | 14.91 |

| OpenVINO | ✅ | 82.2 | 0.6204 | 86.27 |

| TensorRT (FP32) | ✅ | 82.2 | 0.6230 | 5.56 |

| TensorRT (FP16) | ✅ | 41.6 | 0.6209 | 2.58 |

| TensorRT (INT8) | ✅ | 24.3 | 0.5595 | 2.49 |

| TF SavedModel | ✅ | 205.8 | 0.6229 | 200.96 |

| TF GraphDef | ✅ | 78.2 | 0.6229 | 203.00 |

| TF Lite | ✅ | 78.6 | 0.6229 | 1867.12 |

| MNN | ✅ | 78.0 | 0.6176 | 142.00 |

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 50.7 | 0.6258 | 13.34 |

| TorchScript | ✅ | 95.5 | 0.6248 | 13.86 |

| ONNX | ✅ | 95.0 | 0.6247 | 18.44 |

| OpenVINO | ✅ | 99.9 | 0.6238 | 106.67 |

| TensorRT (FP32) | ✅ | 99.0 | 0.6249 | 6.74 |

| TensorRT (FP16) | ✅ | 50.3 | 0.6243 | 3.34 |

| TensorRT (INT8) | ✅ | 29.0 | 0.5708 | 3.24 |

| TF SavedModel | ✅ | 250.0 | 0.6245 | 259.74 |

| TF GraphDef | ✅ | 95.0 | 0.6245 | 263.42 |

| TF Lite | ✅ | 95.4 | 0.6245 | 2367.83 |

| MNN | ✅ | 94.8 | 0.6272 | 174.39 |

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 113.2 | 0.6565 | 20.92 |

| TorchScript | ✅ | 213.5 | 0.6595 | 21.76 |

| ONNX | ✅ | 212.9 | 0.6590 | 26.72 |

| OpenVINO | ✅ | 223.6 | 0.6620 | 205.27 |

| TensorRT (FP32) | ✅ | 217.2 | 0.6593 | 12.29 |

| TensorRT (FP16) | ✅ | 112.1 | 0.6611 | 5.16 |

| TensorRT (INT8) | ✅ | 58.9 | 0.5222 | 4.72 |

| TF SavedModel | ✅ | 559.2 | 0.6593 | 498.85 |

| TF GraphDef | ✅ | 213.0 | 0.6593 | 507.43 |

| TF Lite | ✅ | 213.3 | 0.6593 | 5134.22 |

| MNN | ✅ | 212.8 | 0.6625 | 347.84 |

Benchmarked with Ultralytics 8.4.7

Note

Inference time does not include pre/post-processing.
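To make the latency figures easier to compare, they can be converted into throughput (images per second) and into a speedup relative to the PyTorch baseline. The sketch below uses a few inference times copied from the first table of the Jetson AGX Thor section above; the function names fps and speedup are illustrative. Since the measured times exclude pre/post-processing, the derived throughput is an upper bound for a full pipeline.

```python
# Inference times (ms/im) copied from the first Jetson AGX Thor table above.
LATENCY_MS = {
    "PyTorch": 7.39,
    "TorchScript": 4.21,
    "ONNX": 6.58,
    "TensorRT (FP32)": 1.90,
    "TensorRT (FP16)": 1.39,
    "TensorRT (INT8)": 1.52,
}


def fps(latency_ms):
    """Images per second for a given per-image latency in milliseconds."""
    return 1000.0 / latency_ms


def speedup(baseline_ms, candidate_ms):
    """How many times faster the candidate is than the baseline."""
    return baseline_ms / candidate_ms


if __name__ == "__main__":
    base = LATENCY_MS["PyTorch"]
    # Sort from fastest to slowest format.
    for name, ms in sorted(LATENCY_MS.items(), key=lambda kv: kv[1]):
        print(f"{name:16s} {ms:6.2f} ms  {fps(ms):7.1f} FPS  {speedup(base, ms):5.2f}x")
```

The same two functions apply unchanged to the tables for the other devices; only the dictionary of latencies needs to be replaced.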

NVIDIA Jetson AGX Orin Developer Kit (64GB)

Performance

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 5.4 | 0.5101 | 9.40 |

| TorchScript | ✅ | 10.5 | 0.5083 | 11.00 |

| ONNX | ✅ | 10.2 | 0.5077 | 48.32 |

| OpenVINO | ✅ | 10.4 | 0.5058 | 27.24 |

| TensorRT (FP32) | ✅ | 12.1 | 0.5085 | 3.93 |

| TensorRT (FP16) | ✅ | 8.3 | 0.5063 | 2.55 |

| TensorRT (INT8) | ✅ | 5.4 | 0.4719 | 2.18 |

| TF SavedModel | ✅ | 25.9 | 0.5077 | 66.87 |

| TF GraphDef | ✅ | 10.3 | 0.5077 | 65.68 |

| TF Lite | ✅ | 10.3 | 0.5077 | 272.92 |

| MNN | ✅ | 10.1 | 0.5059 | 36.33 |

| NCNN | ✅ | 10.2 | 0.5031 | 28.51 |

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 18.4 | 0.5783 | 12.10 |

| TorchScript | ✅ | 36.5 | 0.5782 | 11.01 |

| ONNX | ✅ | 36.3 | 0.5782 | 107.54 |

| OpenVINO | ✅ | 36.4 | 0.5810 | 55.03 |

| TensorRT (FP32) | ✅ | 38.1 | 0.5781 | 6.52 |

| TensorRT (FP16) | ✅ | 21.4 | 0.5803 | 3.65 |

| TensorRT (INT8) | ✅ | 12.1 | 0.5735 | 2.81 |

| TF SavedModel | ✅ | 91.0 | 0.5782 | 132.73 |

| TF GraphDef | ✅ | 36.4 | 0.5782 | 134.96 |

| TF Lite | ✅ | 36.3 | 0.5782 | 798.21 |

| MNN | ✅ | 36.2 | 0.5777 | 82.35 |

| NCNN | ✅ | 36.2 | 0.5784 | 56.07 |

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 38.8 | 0.6265 | 22.20 |

| TorchScript | ✅ | 77.3 | 0.6307 | 21.47 |

| ONNX | ✅ | 76.9 | 0.6307 | 270.89 |

| OpenVINO | ✅ | 77.1 | 0.6284 | 129.10 |

| TensorRT (FP32) | ✅ | 78.8 | 0.6306 | 12.53 |

| TensorRT (FP16) | ✅ | 41.9 | 0.6305 | 6.25 |

| TensorRT (INT8) | ✅ | 23.2 | 0.6291 | 4.69 |

| TF SavedModel | ✅ | 192.7 | 0.6307 | 299.95 |

| TF GraphDef | ✅ | 77.1 | 0.6307 | 310.58 |

| TF Lite | ✅ | 77.0 | 0.6307 | 2400.54 |

| MNN | ✅ | 76.8 | 0.6308 | 213.56 |

| NCNN | ✅ | 76.8 | 0.6284 | 141.18 |

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 49.0 | 0.6364 | 27.70 |

| TorchScript | ✅ | 97.6 | 0.6399 | 27.94 |

| ONNX | ✅ | 97.0 | 0.6409 | 345.47 |

| OpenVINO | ✅ | 97.3 | 0.6378 | 161.93 |

| TensorRT (FP32) | ✅ | 99.1 | 0.6406 | 16.11 |

| TensorRT (FP16) | ✅ | 52.6 | 0.6376 | 8.08 |

| TensorRT (INT8) | ✅ | 30.8 | 0.6208 | 6.12 |

| TF SavedModel | ✅ | 243.1 | 0.6409 | 390.78 |

| TF GraphDef | ✅ | 97.2 | 0.6409 | 398.76 |

| TF Lite | ✅ | 97.1 | 0.6409 | 3037.05 |

| MNN | ✅ | 96.9 | 0.6372 | 265.46 |

| NCNN | ✅ | 96.9 | 0.6364 | 179.68 |

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 109.3 | 0.7005 | 44.40 |

| TorchScript | ✅ | 218.1 | 0.6898 | 47.49 |

| ONNX | ✅ | 217.5 | 0.6900 | 682.98 |

| OpenVINO | ✅ | 217.8 | 0.6876 | 298.15 |

| TensorRT (FP32) | ✅ | 219.6 | 0.6904 | 28.50 |

| TensorRT (FP16) | ✅ | 112.2 | 0.6887 | 13.55 |

| TensorRT (INT8) | ✅ | 60.0 | 0.6574 | 9.40 |

| TF SavedModel | ✅ | 544.3 | 0.6900 | 749.85 |

| TF GraphDef | ✅ | 217.7 | 0.6900 | 753.86 |

| TF Lite | ✅ | 217.6 | 0.6900 | 6603.27 |

| MNN | ✅ | 217.3 | 0.6868 | 519.77 |

| NCNN | ✅ | 217.3 | 0.6849 | 298.58 |

Benchmarked with Ultralytics 8.3.157

Note

Inference time does not include pre/post-processing.

NVIDIA Jetson Orin Nano Super Developer Kit

Performance

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 5.4 | 0.5101 | 13.70 |

| TorchScript | ✅ | 10.5 | 0.5082 | 13.69 |

| ONNX | ✅ | 10.2 | 0.5081 | 14.47 |

| OpenVINO | ✅ | 10.4 | 0.5058 | 56.66 |

| TensorRT (FP32) | ✅ | 12.0 | 0.5081 | 7.44 |

| TensorRT (FP16) | ✅ | 8.2 | 0.5061 | 4.53 |

| TensorRT (INT8) | ✅ | 5.4 | 0.4825 | 3.70 |

| TF SavedModel | ✅ | 25.9 | 0.5077 | 116.23 |

| TF GraphDef | ✅ | 10.3 | 0.5077 | 114.92 |

| TF Lite | ✅ | 10.3 | 0.5077 | 340.75 |

| MNN | ✅ | 10.1 | 0.5059 | 76.26 |

| NCNN | ✅ | 10.2 | 0.5031 | 45.03 |

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 18.4 | 0.5790 | 20.90 |

| TorchScript | ✅ | 36.5 | 0.5781 | 21.22 |

| ONNX | ✅ | 36.3 | 0.5781 | 25.07 |

| OpenVINO | ✅ | 36.4 | 0.5810 | 122.98 |

| TensorRT (FP32) | ✅ | 37.9 | 0.5783 | 13.02 |

| TensorRT (FP16) | ✅ | 21.8 | 0.5779 | 6.93 |

| TensorRT (INT8) | ✅ | 12.2 | 0.5735 | 5.08 |

| TF SavedModel | ✅ | 91.0 | 0.5782 | 250.65 |

| TF GraphDef | ✅ | 36.4 | 0.5782 | 252.69 |

| TF Lite | ✅ | 36.3 | 0.5782 | 998.68 |

| MNN | ✅ | 36.2 | 0.5781 | 188.01 |

| NCNN | ✅ | 36.2 | 0.5784 | 101.37 |

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 38.8 | 0.6266 | 46.50 |

| TorchScript | ✅ | 77.3 | 0.6307 | 47.95 |

| ONNX | ✅ | 76.9 | 0.6307 | 53.06 |

| OpenVINO | ✅ | 77.1 | 0.6284 | 301.63 |

| TensorRT (FP32) | ✅ | 78.8 | 0.6305 | 27.86 |

| TensorRT (FP16) | ✅ | 41.7 | 0.6309 | 13.50 |

| TensorRT (INT8) | ✅ | 23.2 | 0.6291 | 9.12 |

| TF SavedModel | ✅ | 192.7 | 0.6307 | 622.24 |

| TF GraphDef | ✅ | 77.1 | 0.6307 | 628.74 |

| TF Lite | ✅ | 77.0 | 0.6307 | 2997.93 |

| MNN | ✅ | 76.8 | 0.6299 | 509.96 |

| NCNN | ✅ | 76.8 | 0.6284 | 292.99 |

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 49.0 | 0.6364 | 56.50 |

| TorchScript | ✅ | 97.6 | 0.6409 | 62.51 |

| ONNX | ✅ | 97.0 | 0.6399 | 68.35 |

| OpenVINO | ✅ | 97.3 | 0.6378 | 376.03 |

| TensorRT (FP32) | ✅ | 99.2 | 0.6396 | 35.59 |

| TensorRT (FP16) | ✅ | 52.1 | 0.6361 | 17.48 |

| TensorRT (INT8) | ✅ | 30.9 | 0.6207 | 11.87 |

| TF SavedModel | ✅ | 243.1 | 0.6409 | 807.47 |

| TF GraphDef | ✅ | 97.2 | 0.6409 | 822.88 |

| TF Lite | ✅ | 97.1 | 0.6409 | 3792.23 |

| MNN | ✅ | 96.9 | 0.6372 | 631.16 |

| NCNN | ✅ | 96.9 | 0.6364 | 350.46 |

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 109.3 | 0.7005 | 90.00 |

| TorchScript | ✅ | 218.1 | 0.6901 | 113.40 |

| ONNX | ✅ | 217.5 | 0.6901 | 122.94 |

| OpenVINO | ✅ | 217.8 | 0.6876 | 713.1 |

| TensorRT (FP32) | ✅ | 219.5 | 0.6904 | 66.93 |

| TensorRT (FP16) | ✅ | 112.2 | 0.6892 | 32.58 |

| TensorRT (INT8) | ✅ | 61.5 | 0.6612 | 19.90 |

| TF SavedModel | ✅ | 544.3 | 0.6900 | 1605.4 |

| TF GraphDef | ✅ | 217.8 | 0.6900 | 2961.8 |

| TF Lite | ✅ | 217.6 | 0.6900 | 8234.86 |

| MNN | ✅ | 217.3 | 0.6893 | 1254.18 |

| NCNN | ✅ | 217.3 | 0.6849 | 725.50 |

Benchmarked with Ultralytics 8.3.157

Note

Inference time does not include pre/post-processing.

NVIDIA Jetson Orin NX 16GB

Performance

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 5.4 | 0.5101 | 12.90 |

| TorchScript | ✅ | 10.5 | 0.5082 | 13.17 |

| ONNX | ✅ | 10.2 | 0.5081 | 15.43 |

| OpenVINO | ✅ | 10.4 | 0.5058 | 39.80 |

| TensorRT (FP32) | ✅ | 11.8 | 0.5081 | 7.94 |

| TensorRT (FP16) | ✅ | 8.1 | 0.5085 | 4.73 |

| TensorRT (INT8) | ✅ | 5.4 | 0.4786 | 3.90 |

| TF SavedModel | ✅ | 25.9 | 0.5077 | 88.48 |

| TF GraphDef | ✅ | 10.3 | 0.5077 | 86.67 |

| TF Lite | ✅ | 10.3 | 0.5077 | 302.55 |

| MNN | ✅ | 10.1 | 0.5059 | 52.73 |

| NCNN | ✅ | 10.2 | 0.5031 | 32.04 |

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 18.4 | 0.5790 | 21.70 |

| TorchScript | ✅ | 36.5 | 0.5781 | 22.71 |

| ONNX | ✅ | 36.3 | 0.5781 | 26.49 |

| OpenVINO | ✅ | 36.4 | 0.5810 | 84.73 |

| TensorRT (FP32) | ✅ | 37.8 | 0.5783 | 13.77 |

| TensorRT (FP16) | ✅ | 21.2 | 0.5796 | 7.31 |

| TensorRT (INT8) | ✅ | 12.0 | 0.5735 | 5.33 |

| TF SavedModel | ✅ | 91.0 | 0.5782 | 185.06 |

| TF GraphDef | ✅ | 36.4 | 0.5782 | 186.45 |

| TF Lite | ✅ | 36.3 | 0.5782 | 882.58 |

| MNN | ✅ | 36.2 | 0.5775 | 126.36 |

| NCNN | ✅ | 36.2 | 0.5784 | 66.73 |

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 38.8 | 0.6266 | 45.00 |

| TorchScript | ✅ | 77.3 | 0.6307 | 51.87 |

| ONNX | ✅ | 76.9 | 0.6307 | 56.00 |

| OpenVINO | ✅ | 77.1 | 0.6284 | 202.69 |

| TensorRT (FP32) | ✅ | 78.7 | 0.6305 | 30.38 |

| TensorRT (FP16) | ✅ | 41.8 | 0.6302 | 14.48 |

| TensorRT (INT8) | ✅ | 23.2 | 0.6291 | 9.74 |

| TF SavedModel | ✅ | 192.7 | 0.6307 | 445.58 |

| TF GraphDef | ✅ | 77.1 | 0.6307 | 460.94 |

| TF Lite | ✅ | 77.0 | 0.6307 | 2653.65 |

| MNN | ✅ | 76.8 | 0.6308 | 339.38 |

| NCNN | ✅ | 76.8 | 0.6284 | 187.64 |

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 49.0 | 0.6364 | 56.60 |

| TorchScript | ✅ | 97.6 | 0.6409 | 66.72 |

| ONNX | ✅ | 97.0 | 0.6399 | 71.92 |

| OpenVINO | ✅ | 97.3 | 0.6378 | 254.17 |

| TensorRT (FP32) | ✅ | 99.2 | 0.6406 | 38.89 |

| TensorRT (FP16) | ✅ | 51.9 | 0.6363 | 18.59 |

| TensorRT (INT8) | ✅ | 30.9 | 0.6207 | 12.60 |

| TF SavedModel | ✅ | 243.1 | 0.6409 | 575.98 |

| TF GraphDef | ✅ | 97.2 | 0.6409 | 583.79 |

| TF Lite | ✅ | 97.1 | 0.6409 | 3353.41 |

| MNN | ✅ | 96.9 | 0.6367 | 421.33 |

| NCNN | ✅ | 96.9 | 0.6364 | 228.26 |

| Format | Status | Size on disk (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|

| PyTorch | ✅ | 109.3 | 0.7005 | 98.50 |

| TorchScript | ✅ | 218.1 | 0.6901 | 123.03 |

| ONNX | ✅ | 217.5 | 0.6901 | 129.55 |

| OpenVINO | ✅ | 217.8 | 0.6876 | 483.44 |

| TensorRT (FP32) | ✅ | 219.6 | 0.6904 | 75.92 |

| TensorRT (FP16) | ✅ | 112.1 | 0.6885 | 35.78 |

| TensorRT (INT8) | ✅ | 61.6 | 0.6592 | 21.60 |

| TF SavedModel | ✅ | 544.3 | 0.6900 | 1120.43 |

| TF GraphDef | ✅ | 217.7 | 0.6900 | 1172.35 |

| TF Lite | ✅ | 217.6 | 0.6900 | 7283.63 |

| MNN | ✅ | 217.3 | 0.6877 | 840.16 |

| NCNN | ✅ | 217.3 | 0.6849 | 474.41 |

Benchmarked with Ultralytics 8.3.157

Note

Inference time does not include pre/post-processing.

Explore more benchmarking efforts by Seeed Studio running on different versions of NVIDIA Jetson hardware.
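To put the latency column in perspective, the figures can be converted into speed-up factors and approximate throughput. The sketch below hard-codes three rows copied from the last table above (PyTorch, TensorRT FP16, TensorRT INT8); the `summarize` helper and its field names are illustrative assumptions and not part of Ultralytics. Because the listed times exclude pre/post-processing, the images-per-second values are an upper bound rather than end-to-end throughput.

```python
# Rows copied from the last benchmark table above:
# format -> (mAP50-95, inference time in ms per image)
rows = {
    "PyTorch": (0.7005, 98.50),
    "TensorRT (FP16)": (0.6885, 35.78),
    "TensorRT (INT8)": (0.6592, 21.60),
}


def summarize(rows, baseline="PyTorch"):
    """Compare every format against the baseline row (hypothetical helper)."""
    base_map, base_ms = rows[baseline]
    summary = {}
    for name, (map_score, ms) in rows.items():
        summary[name] = {
            "speedup": base_ms / ms,          # how many times faster than the baseline
            "fps": 1000.0 / ms,               # images per second, inference only
            "map_delta": map_score - base_map,  # accuracy change vs. the baseline
        }
    return summary


summary = summarize(rows)
for name, s in summary.items():
    print(
        f"{name:16s} {s['speedup']:.2f}x  "
        f"{s['fps']:.1f} im/s  mAP delta {s['map_delta']:+.4f}"
    )
```

Reading the output side by side with the accuracy column makes the trade-off explicit: lower precision buys latency at the cost of some mAP.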

Reproduce Our Results

To reproduce the above Ultralytics benchmarks on all export formats, run this code:

Example

from ultralytics import YOLO

# Load a YOLO11n PyTorch model

model = YOLO("yolo11n.pt")

# Benchmark YOLO11n speed and accuracy on the COCO128 dataset for all export formats

results = model.benchmark(data="coco128.yaml", imgsz=640)

# CLI equivalent: benchmark YOLO11n speed and accuracy on the COCO128 dataset for all export formats

yolo benchmark model=yolo11n.pt data=coco128.yaml imgsz=640

Note that benchmark results can vary with the exact hardware and software configuration of a system, as well as with the system's workload at the time the benchmarks are run. For the most reliable results, use a dataset with a large number of images, for example data='coco.yaml' (5000 validation images).
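One way to see how much a measurement fluctuates with system load is to repeat it and look at the spread. The following is a minimal sketch, not the method Ultralytics uses internally: `time_inference` and `dummy_inference` are hypothetical names, and the dummy workload stands in for a real model call so the snippet runs anywhere.

```python
import statistics
import time


def time_inference(run_once, warmup=3, runs=10):
    """Time a zero-argument callable and return per-call latencies in ms."""
    # Warm-up calls are discarded so one-time setup cost does not skew results.
    for _ in range(warmup):
        run_once()
    latencies_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        run_once()
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    return latencies_ms


def dummy_inference():
    # Placeholder workload (assumption); replace with a real call such as model(image).
    sum(i * i for i in range(10_000))


latencies = time_inference(dummy_inference)
print(
    f"mean {statistics.mean(latencies):.3f} ms, "
    f"stdev {statistics.stdev(latencies):.3f} ms, "
    f"min {min(latencies):.3f} ms, max {max(latencies):.3f} ms"
)
```

A large standard deviation relative to the mean suggests that background load or clock scaling is affecting the run, in which case a single reported number should be treated with caution.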

Best Practices when using NVIDIA Jetson

There are a few best practices to follow to get maximum performance from an NVIDIA Jetson running YOLO26.

Enable MAX Power Mode

Enabling MAX Power Mode on the Jetson makes sure all CPU and GPU cores are turned on.

sudo nvpmodel -m 0

Enable Jetson Clocks

Enabling Jetson Clocks makes sure all CPU and GPU cores are clocked at their maximum frequency.

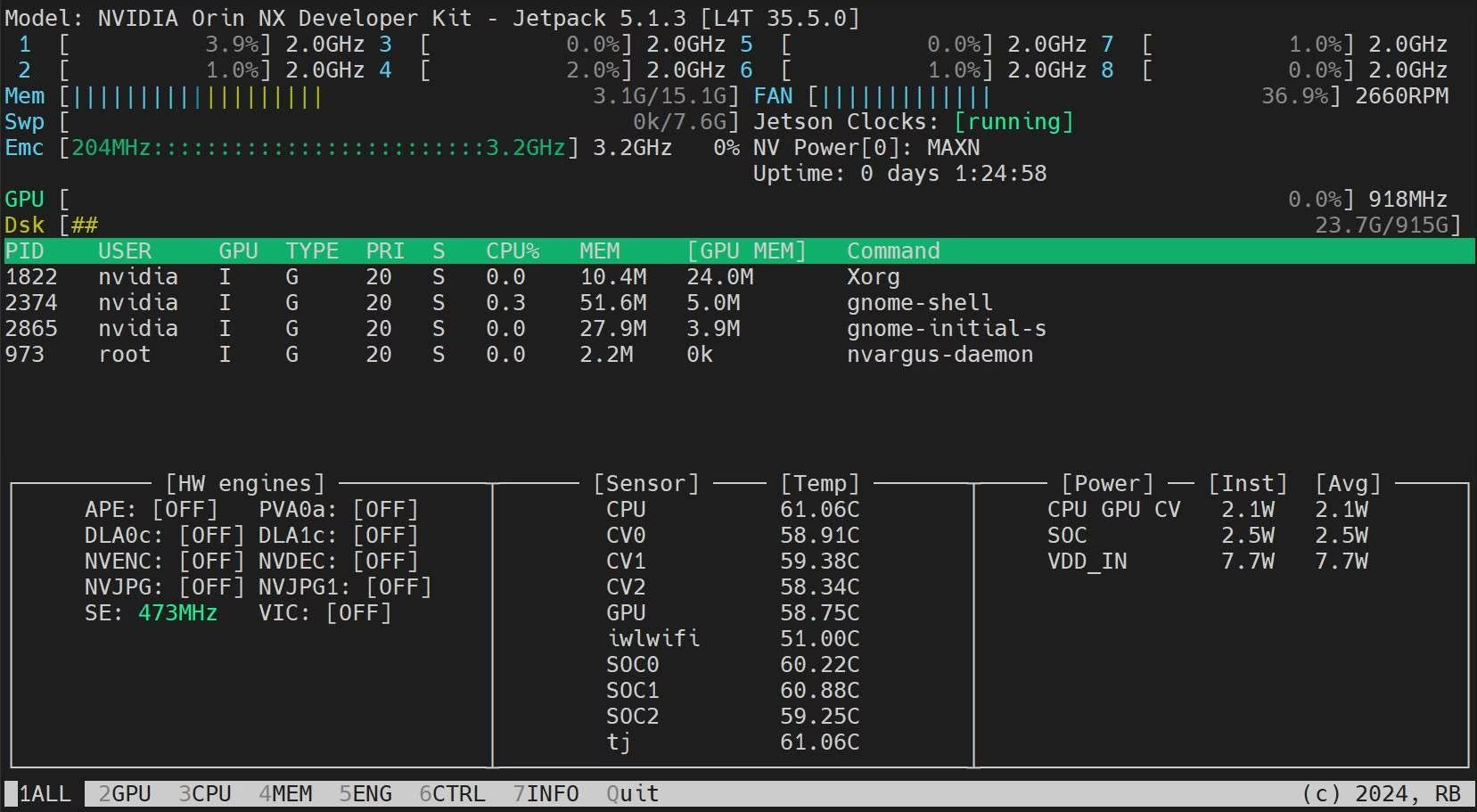

sudo jetson_clocks

Install Jetson Stats Application

The jetson-stats application can be used to monitor the temperatures of system components and to check other system details, such as viewing CPU, GPU, and RAM utilization, changing power modes, setting clocks to maximum, and checking JetPack information.

sudo apt update
sudo pip install jetson-stats
sudo reboot
jtop
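Besides the interactive `jtop` terminal UI, the jetson-stats package also ships a Python module, which can be handy for logging system metrics while a benchmark runs. The sketch below assumes the `jtop` module is importable and its background service is running; `read_jetson_stats` is a hypothetical helper name, and the exact keys in the returned dictionary depend on the jetson-stats version and the board. On a machine without jetson-stats it simply returns `None`.

```python
def read_jetson_stats():
    """Return one snapshot of jtop statistics as a dict, or None if unavailable."""
    try:
        # Provided by the jetson-stats package; only present on a Jetson setup.
        from jtop import jtop
    except ImportError:
        return None

    with jtop() as jetson:
        # jetson.ok() waits for the next update from the jtop service.
        if jetson.ok():
            return dict(jetson.stats)
    return None


stats = read_jetson_stats()
if stats is None:
    print("jetson-stats is not available on this machine")
else:
    # Key names vary by version and board, so print whatever is reported.
    for key, value in stats.items():
        print(f"{key}: {value}")
```

Calling the helper before and after enabling MAX Power Mode and Jetson Clocks is one way to confirm that the settings took effect.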

Next Steps

For further learning and support, see the Ultralytics YOLO26 Docs.

FAQ

How do I deploy Ultralytics YOLO26 on NVIDIA Jetson devices?

Deploying Ultralytics YOLO26 on NVIDIA Jetson devices is a straightforward process. First, flash your Jetson device with the NVIDIA JetPack SDK. Then, either use a pre-built Docker image for quick setup or install the required packages manually. Detailed steps for each approach can be found in the Quick Start with Docker and Start with Native Installation sections.

What performance benchmarks can I expect from YOLO11 models on NVIDIA Jetson devices?

YOLO11 models have been benchmarked on several NVIDIA Jetson devices. Among the export formats, TensorRT delivers the best inference performance. The tables in the Detailed Comparison Tables section give a comprehensive view of performance metrics such as mAP50-95 and inference time across the different model formats.

Why should I use TensorRT for deploying YOLO26 on NVIDIA Jetson?

TensorRT is highly recommended for deploying YOLO26 models on NVIDIA Jetson because of its optimal performance. It accelerates inference by leveraging the Jetson's GPU capabilities, providing maximum efficiency and speed. Learn more about how to convert to TensorRT and run inference in the Use TensorRT on NVIDIA Jetson section.

How can I install PyTorch and Torchvision on NVIDIA Jetson?

To install PyTorch and Torchvision on NVIDIA Jetson, first uninstall any existing versions that may have been installed via pip. Then, manually install the PyTorch and Torchvision versions that are compatible with the Jetson's ARM64 architecture. Detailed instructions for this process are provided in the Install PyTorch and Torchvision section.

What are the best practices for maximizing performance on NVIDIA Jetson when using YOLO26?

To maximize performance on NVIDIA Jetson with YOLO26, follow these best practices:

- Enable MAX Power Mode to use all CPU and GPU cores.

- Enable Jetson Clocks to run all cores at their maximum frequency.

- Install the Jetson Stats application to monitor system metrics.

For commands and additional details, see the Best Practices when using NVIDIA Jetson section.