使用 Neural Magic 的 DeepSparse Engine 优化 YOLO26 推理

在各种硬件上部署像 Ultralytics YOLO26 这样的目标检测模型时,可能会遇到优化等独特问题。YOLO26 与 Neural Magic 的 DeepSparse Engine 的集成正是在此发挥作用。它改变了 YOLO26 模型的执行方式,并直接在 CPU 上实现了 GPU 级别的性能。

本指南将向您展示如何使用 Neural Magic 的 DeepSparse 部署 YOLO26、如何运行推理,以及如何对性能进行基准测试以确保其得到优化。

SparseML EOL

Neural Magic 是 于 2025 年 1 月被 Red Hat 收购,并且正在弃用他们的社区版本 deepsparse, sparseml, sparsezoo和 sparsify 库。更多信息,请参见发布的通知 在 Readme 文件中 sparseml GitHub 仓库.

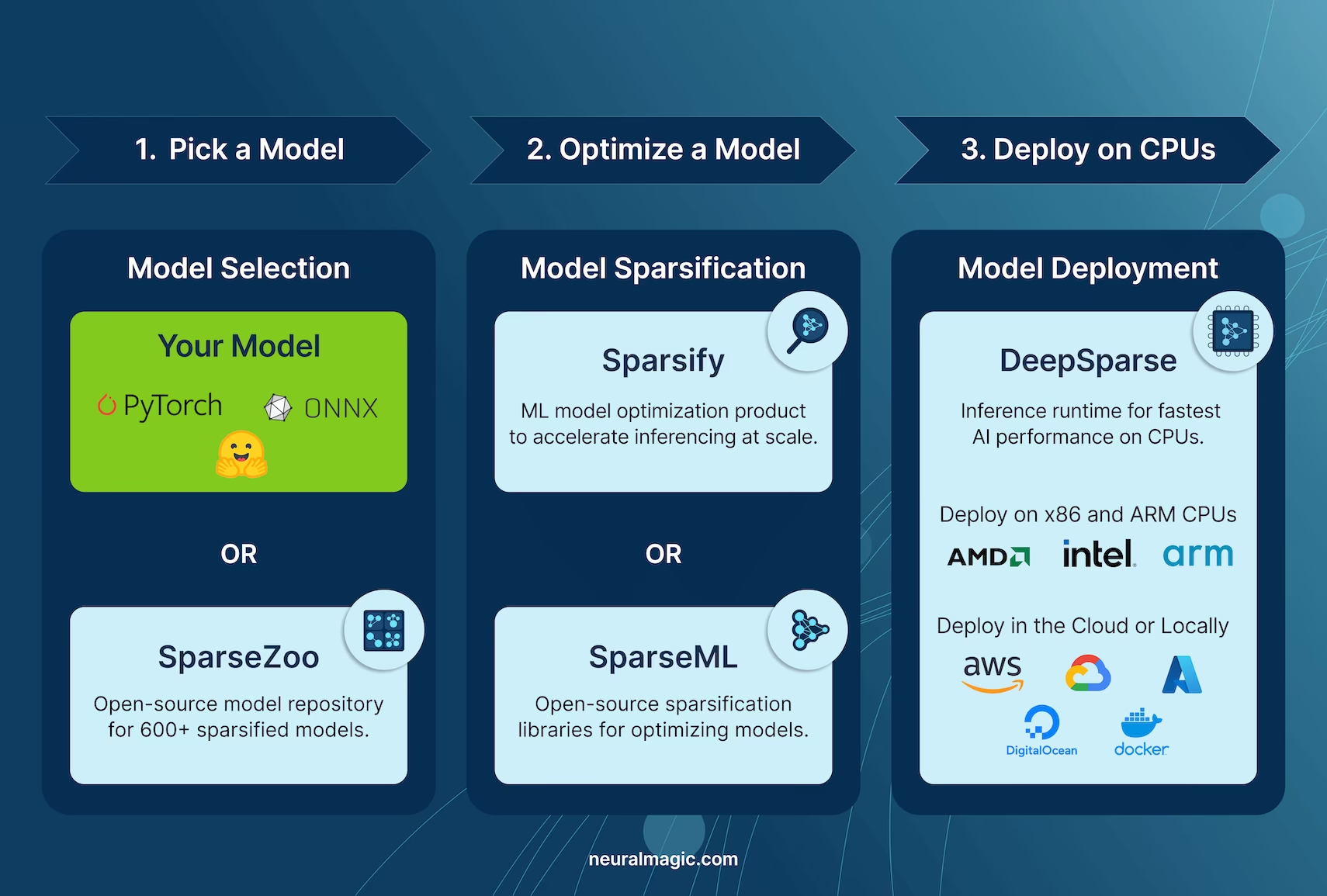

Neural Magic 的 DeepSparse

Neural Magic's DeepSparse 是一种推理运行时,旨在优化神经网络在 CPU 上的执行。它应用稀疏性、剪枝和量化等先进技术,以显著降低计算需求,同时保持准确性。DeepSparse 为跨各种设备的高效且可扩展的 神经网络 执行提供了一种敏捷的解决方案。

将 Neural Magic 的 DeepSparse 与 YOLO26 集成的优势

在深入了解如何使用 DeepSparse 部署 YOLO26 之前,让我们先了解使用 DeepSparse 的好处。主要优势包括:

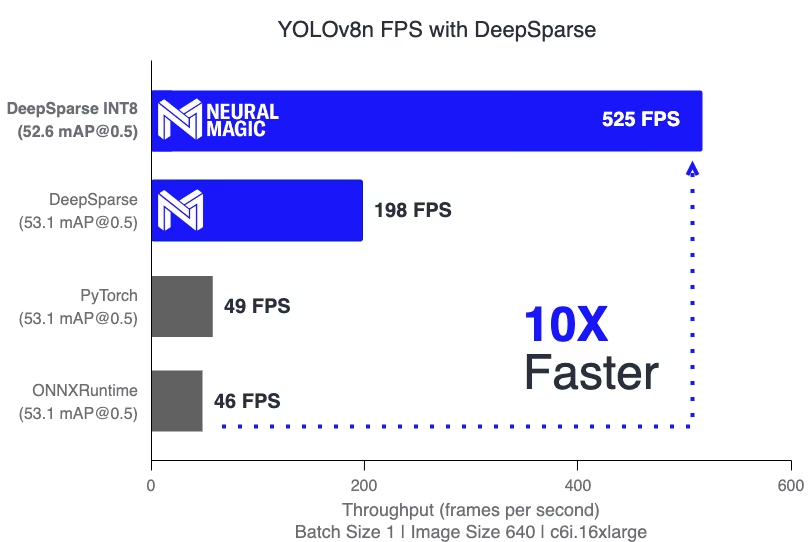

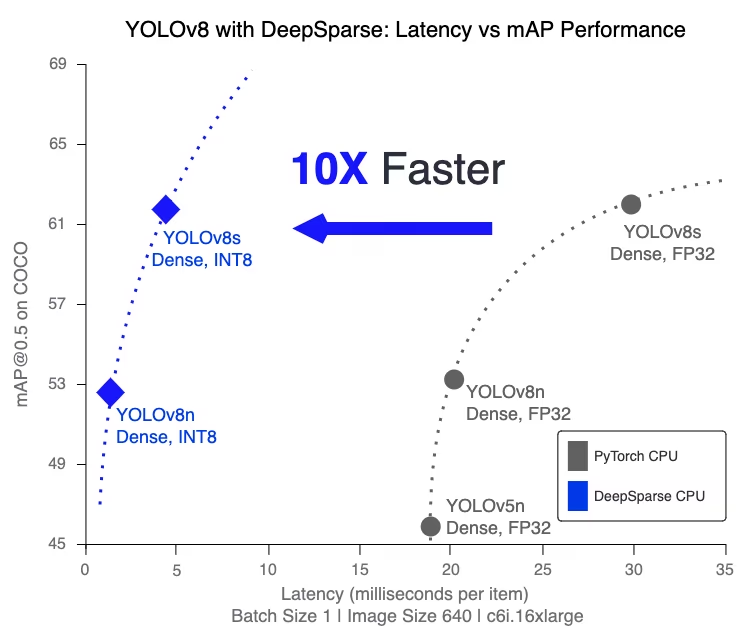

- 增强的推理速度:在 YOLO11n 上实现高达 525 FPS,与传统方法相比,显著加快了 YOLO 的推理能力。

- 优化的模型效率:利用剪枝和量化技术提升 YOLO26 的效率,在保持准确性的同时,减小模型大小并降低计算要求。

标准 CPU 上的高性能:在 CPU 上提供类似 GPU 的性能,为各种应用提供更易于访问且经济高效的选择。

简化的集成与部署:提供用户友好的工具,便于将 YOLO26 集成到应用程序中,包括图像和视频标注功能。

支持多种模型类型:兼容标准和稀疏优化后的 YOLO26 模型,增加了部署的灵活性。

经济高效且可扩展的解决方案: 降低运营费用,并提供高级对象检测模型的可扩展部署。

Neural Magic 的 DeepSparse 技术如何工作?

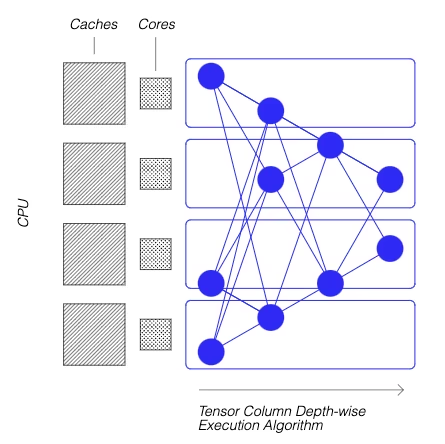

Neural Magic 的 DeepSparse 技术受人脑在神经网络计算效率方面的启发。它借鉴了大脑的两个关键原则,具体如下:

稀疏性: 稀疏化过程包括从深度学习网络中修剪冗余信息,从而在不影响准确性的前提下,生成更小、更快的模型。 这项技术显著降低了网络的规模和计算需求。

引用局部性:DeepSparse 使用独特的执行方法,将网络分解为 Tensor Columns。这些列按深度方向执行,完全适合 CPU 的缓存。这种方法模仿了大脑的效率,最大限度地减少了数据移动,并最大限度地利用了 CPU 的缓存。

创建在自定义数据集上训练的 YOLO26 稀疏版本

Neural Magic 的开源模型库 SparseZoo 提供了一系列预稀疏化的 YOLO26 模型检查点。通过与 Ultralytics 无缝集成的 SparseML,用户可以使用简单的命令行界面,轻松地在特定数据集上微调这些稀疏检查点。

查阅Neural Magic 的 SparseML YOLO26 文档了解更多详情。

用法:使用 DeepSparse 部署 YOLO26

使用 Neural Magic 的 DeepSparse 部署 YOLO26 涉及几个简单的步骤。在深入了解使用说明之前,请务必查看 Ultralytics 提供的 YOLO26 模型系列。这将帮助您根据项目需求选择最合适的模型。以下是入门方法。

步骤 1:安装

要安装所需的软件包,请运行:

安装

# Install the required packages

pip install deepsparse[yolov8]

步骤 2:将 YOLO26 导出为 ONNX 格式

DeepSparse Engine 要求 YOLO26 模型采用 ONNX 格式。将模型导出为这种格式对于与 DeepSparse 的兼容性至关重要。使用以下命令导出 YOLO26 模型:

模型导出

# Export YOLO26 model to ONNX format

yolo task=detect mode=export model=yolo26n.pt format=onnx opset=13

此命令将保存 yolo26n.onnx 模型到您的磁盘。

步骤 3:部署和运行推理

将 YOLO26 模型转换为 ONNX 格式后,您可以使用 DeepSparse 进行部署和运行推理。这可以通过其直观的 Python API 轻松完成:

部署和运行推理

from deepsparse import Pipeline

# Specify the path to your YOLO26 ONNX model

model_path = "path/to/yolo26n.onnx"

# Set up the DeepSparse Pipeline

yolo_pipeline = Pipeline.create(task="yolov8", model_path=model_path)

# Run the model on your images

images = ["path/to/image.jpg"]

pipeline_outputs = yolo_pipeline(images=images)

第四步:性能基准测试

检查您的 YOLO26 模型在 DeepSparse 上是否表现最佳至关重要。您可以对模型的性能进行基准测试,以分析吞吐量和延迟:

基准测试

# Benchmark performance

deepsparse.benchmark model_path="path/to/yolo26n.onnx" --scenario=sync --input_shapes="[1,3,640,640]"

第五步:附加功能

DeepSparse 为 YOLO26 在应用程序中的实际集成提供了额外功能,例如图像标注和数据集评估。

附加功能

# For image annotation

deepsparse.yolov8.annotate --source "path/to/image.jpg" --model_filepath "path/to/yolo26n.onnx"

# For evaluating model performance on a dataset

deepsparse.yolov8.eval --model_path "path/to/yolo26n.onnx"

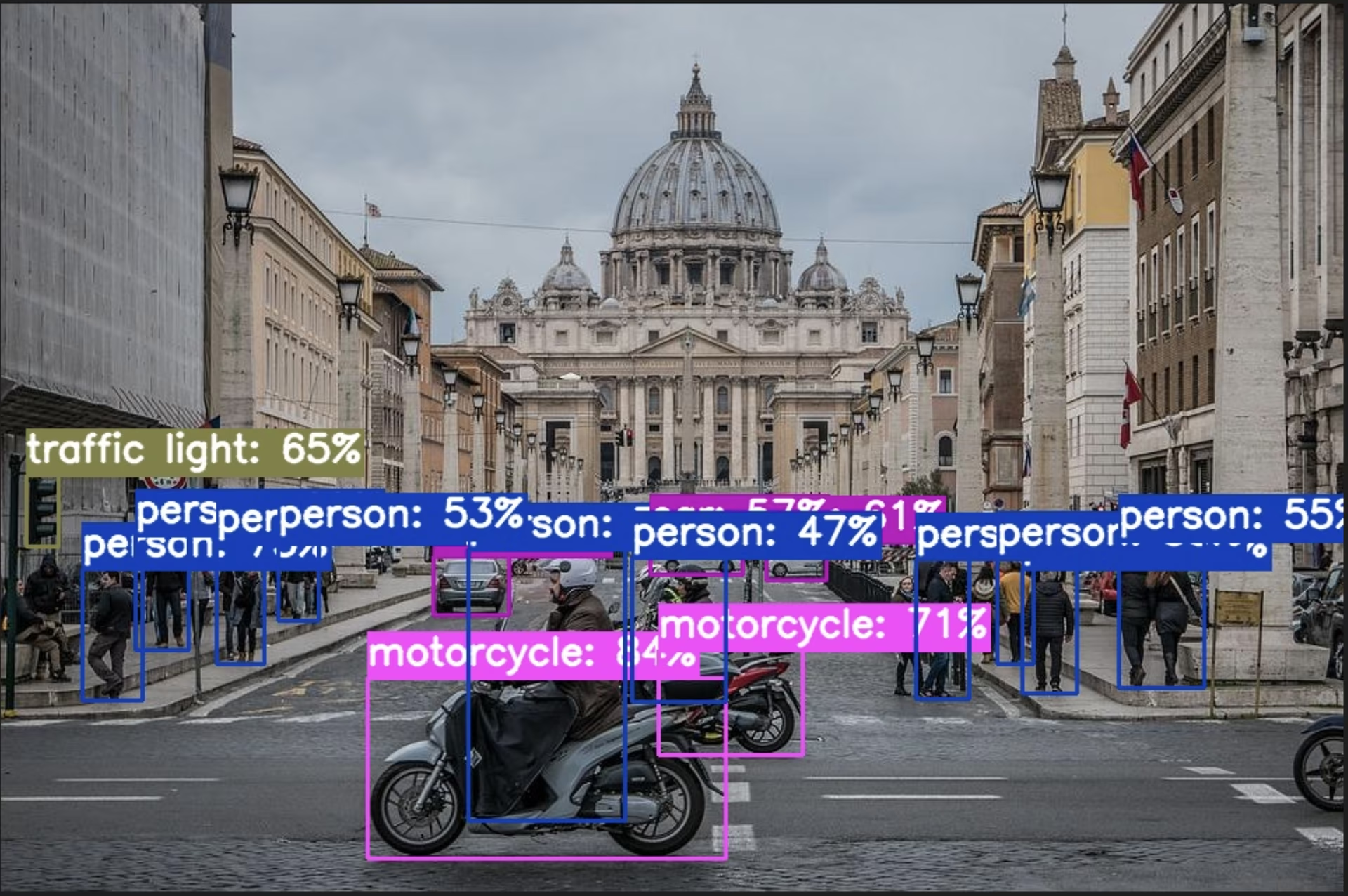

运行 annotate 命令会处理您指定的图像,检测对象,并保存带有边界框和分类的注释图像。注释图像将存储在 annotation-results 文件夹中。这有助于提供模型检测能力的可视化表示。

运行 eval 命令后,您将收到详细的输出指标,例如精确率、召回率和 mAP(平均精度均值)。这提供了模型在数据集上性能的全面视图,对于针对特定用例微调和优化 YOLO26 模型,确保高准确性和效率特别有用。

总结

本指南探讨了将 Ultralytics 的 YOLO26 与 Neural Magic 的 DeepSparse Engine 集成。它强调了这种集成如何增强 YOLO26 在 CPU 平台上的性能,提供 GPU 级别的效率和先进的神经网络稀疏化技术。

有关更详细的信息和高级用法,请访问Neural Magic 的 DeepSparse 文档。您还可以查阅 YOLO26 集成指南并观看 YouTube 上的演练会话。

此外,为了更全面地了解各种 YOLO26 集成,请访问Ultralytics 集成指南页面,在那里您可以发现一系列其他令人兴奋的集成可能性。

常见问题

Neural Magic 的 DeepSparse Engine 是什么,它如何优化 YOLO26 性能?

Neural Magic 的 DeepSparse Engine 是一种推理运行时,旨在通过稀疏性、剪枝和量化等先进技术优化神经网络在 CPU 上的执行。通过将 DeepSparse 与 YOLO26 集成,您可以在标准 CPU 上实现类似 GPU 的性能,显著提高推理速度、模型效率和整体性能,同时保持准确性。有关更多详细信息,请查阅Neural Magic 的 DeepSparse 部分。

如何安装所需的软件包以使用 Neural Magic 的 DeepSparse 部署 YOLO26?

使用 Neural Magic 的 DeepSparse 部署 YOLO26 所需的软件包安装过程非常简单。您可以使用 CLI 轻松安装它们。以下是您需要运行的命令:

pip install deepsparse[yolov8]

安装完成后,请按照安装部分中提供的步骤设置您的环境,并开始将 DeepSparse 与 YOLO26 结合使用。

如何将 YOLO26 模型转换为 ONNX 格式以用于 DeepSparse?

要将 YOLO26 模型转换为 ONNX 格式(DeepSparse 兼容性所需),您可以使用以下 CLI 命令:

yolo task=detect mode=export model=yolo26n.pt format=onnx opset=13

此命令将导出您的 YOLO26 模型(yolo26n.pt) 转换为 (yolo26n.onnx),可以被 DeepSparse 引擎利用。有关模型导出的更多信息,请参见 模型导出章节.

如何对 DeepSparse Engine 上的 YOLO26 性能进行基准测试?

在 DeepSparse 上对 YOLO26 性能进行基准测试有助于您分析吞吐量和延迟,以确保您的模型得到优化。您可以使用以下 CLI 命令运行基准测试:

deepsparse.benchmark model_path="path/to/yolo26n.onnx" --scenario=sync --input_shapes="[1,3,640,640]"

此命令将为您提供重要的性能指标。 有关更多详细信息,请参见基准测试性能部分。

为什么我应该将 Neural Magic 的 DeepSparse 与 YOLO26 结合用于目标检测任务?

将 Neural Magic 的 DeepSparse 与 YOLO26 集成可带来以下优势:

- 增强的推理速度: 实现高达 525 FPS(在 YOLO11n 上),展示了 DeepSparse 的优化能力。

- 优化的模型效率: 使用稀疏性、剪枝和量化技术来减少模型大小和计算需求,同时保持准确性。

- 标准 CPU 上的高性能: 在经济高效的 CPU 硬件上提供类似 GPU 的性能。

- 简化的集成: 用于轻松部署和集成的用户友好工具。

- 灵活性: 支持标准和稀疏性优化的 YOLO26 模型。

- 经济高效: 通过高效的资源利用来降低运营费用。

要深入了解这些优势,请访问将 Neural Magic 的 DeepSparse 与 YOLO26 集成的优势部分。