Learn How to Export YOLO26 Models to TF SavedModel Format

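Deploying machine learning models can be challenging. However, an efficient and flexible model format can make the job easier. TF SavedModel is the serialization format TensorFlow uses to save and load machine learning models in a consistent way. It works like a suitcase for TensorFlow models, making them easy to carry and use on different devices and systems.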

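Learning how to export Ultralytics YOLO26 models to the TF SavedModel format helps you deploy them easily across different platforms and environments. In this guide, we walk step by step through converting your model to TF SavedModel, which simplifies running inference on different devices.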

Why Export to TF SavedModel?

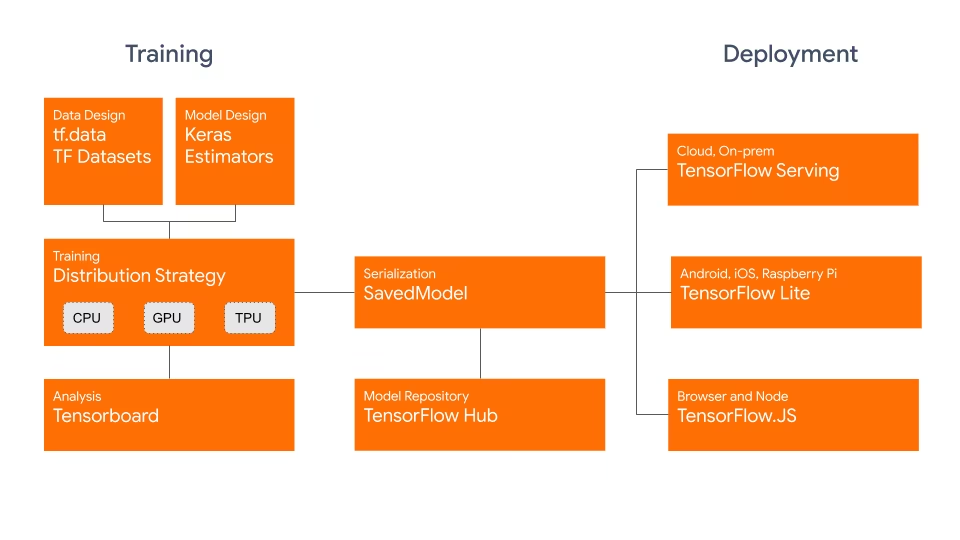

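The TensorFlow SavedModel format is part of the TensorFlow ecosystem developed by Google, as illustrated above. It is designed to save and serialize TensorFlow models seamlessly. A SavedModel captures the complete details of a model, including its architecture, its weights, and even its compilation information. This makes it straightforward to share the model, deploy it, and continue training it in different environments.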

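TF SavedModel has one key advantage: compatibility. It works well with TensorFlow Serving, TensorFlow Lite, and TensorFlow.js, which makes it easier to share and deploy models across platforms, including web and mobile applications. The format suits both research and production, because it gives you a single, unified way to manage your models regardless of where they will run.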

Key Features of TF SavedModels

Here are the key features that make TF SavedModel a great choice for AI developers:

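- Portability: TF SavedModel provides a language-neutral, recoverable, hermetic serialization format. It lets higher-level systems and tools produce, consume, and transform TensorFlow models, so SavedModels can be shared and deployed easily across different platforms and environments.

- Ease of Deployment: TF SavedModel bundles the computational graph, the trained parameters, and the necessary metadata into a single directory. You can load it and run inference without the original code that built the model, which makes deploying TensorFlow models to production environments simple and efficient.

- Asset Management: TF SavedModel supports external assets such as vocabularies, embeddings, or lookup tables. These assets are stored alongside the graph definition and variables, so they are available whenever the model is loaded. This simplifies managing and distributing models that depend on external resources.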
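The sketch below makes the "single directory" idea concrete. It inspects the standard layout of a SavedModel directory. That layout has three parts: a saved_model.pb (or saved_model.pbtxt) graph file, a variables/ folder holding the trained weights, and an optional assets/ folder for external files. The helper name describe_saved_model_dir is hypothetical. The sketch only checks whether files are present and does not parse the protobuf contents. An Ultralytics export directory may also contain extra files beyond these.

```python
from pathlib import Path


def describe_saved_model_dir(path):
    """Summarize which standard SavedModel components exist in a directory.

    Hypothetical helper: only checks file presence, it does not parse the graph.
    """
    root = Path(path)
    graph_files = [
        name for name in ("saved_model.pb", "saved_model.pbtxt") if (root / name).is_file()
    ]
    assets_dir = root / "assets"
    return {
        # Serialized graph and signatures
        "graph": graph_files[0] if graph_files else None,
        # Trained weights are stored as a checkpoint under variables/
        "has_variables": (root / "variables" / "variables.index").is_file(),
        # Optional external files such as vocabularies or lookup tables
        "assets": sorted(p.name for p in assets_dir.iterdir()) if assets_dir.is_dir() else [],
    }
```

For example, calling describe_saved_model_dir("yolo26n_saved_model") on an export directory reports which of these components it contains.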

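Deployment Options with TF SavedModel

Before we dive into exporting YOLO26 models to the TF SavedModel format, let's look at some typical deployment scenarios for this format.

TF SavedModel offers a range of options for deploying machine learning models: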

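- TensorFlow Serving: TensorFlow Serving is a flexible, high-performance serving system designed for production environments. It natively supports TF SavedModels, so you can easily deploy and serve your models on cloud platforms, on-premises servers, or edge devices.

- Cloud Platforms: Major cloud providers such as Google Cloud Platform (GCP), Amazon Web Services (AWS), and Microsoft Azure offer services for deploying and running TensorFlow models, including TF SavedModels. These services provide scalable, managed infrastructure that makes it easy to deploy and scale your models.

- Mobile and Embedded Devices: TensorFlow Lite is a lightweight solution for running machine learning models on mobile, embedded, and IoT devices, and it supports converting TF SavedModels to the TensorFlow Lite format. This lets you deploy your models on a wide range of devices, from smartphones and tablets to microcontrollers and edge devices.

- TensorFlow Runtime: TensorFlow Runtime (tfrt) is a high-performance runtime for executing TensorFlow graphs. It provides lower-level APIs for loading and running TF SavedModels in C++ environments and is designed to deliver better performance than the standard TensorFlow runtime. It suits deployment scenarios that need low-latency inference and tight integration with existing C++ codebases.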
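For the TensorFlow Serving option above, the server loads a model from a base directory. Each numeric subdirectory of that base directory is one model version (for example models/yolo26n/1/). Under its default version policy, TF Serving serves the latest, highest-numbered version. The sketch below uses hypothetical helper names. It copies an exported SavedModel into this versioned layout and picks the version TF Serving would load by default. It only manipulates directories and does not start a server.

```python
import shutil
from pathlib import Path


def stage_for_serving(export_dir, model_base_path, version):
    """Copy a SavedModel directory to <model_base_path>/<version>/ (hypothetical helper)."""
    target = Path(model_base_path) / str(int(version))
    # copytree creates missing parent folders and, by default, refuses to
    # overwrite an existing version directory (raises FileExistsError)
    shutil.copytree(export_dir, target)
    return target


def latest_version(model_base_path):
    """Return the highest numeric version subdirectory, or None if there is none."""
    versions = [
        int(p.name)
        for p in Path(model_base_path).iterdir()
        if p.is_dir() and p.name.isdigit()
    ]
    # Compare numerically so that version 10 ranks above version 2
    return max(versions) if versions else None
```

Comparing versions as integers rather than strings matters here: a plain string sort would rank "2" above "10".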

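Exporting YOLO26 Models to TF SavedModel

Exporting YOLO26 models to the TF SavedModel format makes them easier to adapt and deploy across a wide range of platforms.

Installation

To install the required package, run: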


```bash
# Install the required package for YOLO26
pip install ultralytics
```

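For detailed instructions and best practices for the installation process, see our Ultralytics Installation guide. If you run into any difficulties while installing the required packages for YOLO26, consult our Common Issues guide for solutions and tips.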

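Usage

All Ultralytics YOLO26 models support export out of the box, so you can easily integrate them into your preferred deployment workflow. You can view the full list of supported export formats and configuration options to choose the best setup for your application.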


```python
from ultralytics import YOLO

# Load the YOLO26 model
model = YOLO("yolo26n.pt")

# Export the model to TF SavedModel format
model.export(format="saved_model")  # creates 'yolo26n_saved_model/'

# Load the exported TF SavedModel model
tf_savedmodel_model = YOLO("./yolo26n_saved_model")

# Run inference
results = tf_savedmodel_model("https://ultralytics.com/images/bus.jpg")
```

```bash
# Export a YOLO26n PyTorch model to TF SavedModel format
yolo export model=yolo26n.pt format=saved_model  # creates 'yolo26n_saved_model/'

# Run inference with the exported model
yolo predict model='./yolo26n_saved_model' source='https://ultralytics.com/images/bus.jpg'
```

Export Arguments

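| Argument | Type | Default | Description |
|---|---|---|---|
| format | str | 'saved_model' | Target format for the exported model, defining compatibility with various deployment environments. |
| imgsz | int or tuple | 640 | Desired image size for the model input. Can be an integer for square images or a tuple (height, width) for specific dimensions. |
| keras | bool | False | Enables export to Keras format, providing compatibility with TensorFlow Serving and APIs. |
| int8 | bool | False | Activates INT8 quantization, further compressing the model and speeding up inference with minimal accuracy loss, primarily for edge devices. |
| nms | bool | False | Adds Non-Maximum Suppression (NMS), essential for accurate and efficient detection post-processing. |
| batch | int | 1 | Specifies the export model's batch inference size, that is, the maximum number of images the exported model will process concurrently in predict mode. |
| device | str | None | Specifies the device for exporting: CPU (device=cpu) or MPS for Apple silicon (device=mps). |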
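The int8 option relies on affine (scale and zero-point) quantization. In this scheme, a real value r is stored as an 8-bit integer q, with r ≈ scale × (q − zero_point). The pure-Python sketch below derives per-tensor parameters from an observed value range and round-trips a few values. It illustrates the general scheme that 8-bit TensorFlow Lite models use for activations. It is not Ultralytics' actual calibration code, and the function names are hypothetical.

```python
QMIN, QMAX = -128, 127  # signed 8-bit integer range


def int8_params(rmin, rmax):
    """Per-tensor scale and zero point for the real range [rmin, rmax]."""
    # The range must include 0.0 so that zero maps exactly to an integer code
    rmin, rmax = min(rmin, 0.0), max(rmax, 0.0)
    scale = (rmax - rmin) / (QMAX - QMIN)
    if scale == 0.0:
        scale = 1.0  # degenerate all-zero range: any positive scale works
    zero_point = int(round(QMIN - rmin / scale))
    zero_point = max(QMIN, min(QMAX, zero_point))
    return scale, zero_point


def quantize(x, scale, zero_point):
    """Map a real value to an int8 code, saturating at the ends of the range."""
    q = int(round(x / scale)) + zero_point
    return max(QMIN, min(QMAX, q))


def dequantize(q, scale, zero_point):
    """Recover the approximate real value from its int8 code."""
    return scale * (q - zero_point)
```

For values inside the calibrated range, the round-trip error is at most scale / 2. Values outside the range saturate to -128 or 127. This is why the accuracy loss depends on how representative the calibration data is.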

For more details about the export process, visit the Ultralytics documentation page on exporting.
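The nms argument adds non-maximum suppression to the exported model. The sketch below shows what that post-processing step does, as a minimal, class-agnostic greedy NMS in pure Python. Boxes are sorted by confidence, and a box is kept only if its intersection-over-union (IoU) with every already-kept box stays at or below a threshold. Several details are illustrative assumptions rather than the exported model's internals: the (x1, y1, x2, y2) box format, the function names, and the 0.5 threshold.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with any higher-scoring kept box
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep
```

This version is O(n²) and ignores class labels. Production implementations typically vectorize the IoU computation and run NMS per class, but the selection logic is the same.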

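Deploying Exported YOLO26 TF SavedModel Models

Now that you have exported your YOLO26 model to the TF SavedModel format, the next step is to deploy it. The primary and recommended first step for running a TF SavedModel model is to load it with YOLO("yolo26n_saved_model/"), as shown earlier in the usage code snippet.

For in-depth instructions on deploying your TF SavedModel models, see the following resources:

- TensorFlow Serving: The developer documentation on deploying your TF SavedModel models with TensorFlow Serving.

- Run a TensorFlow SavedModel in Node.js: A TensorFlow blog post on running a TensorFlow SavedModel directly in Node.js without conversion.

- Deploying on Cloud: A TensorFlow blog post on deploying a TensorFlow SavedModel model on Cloud AI Platform.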
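Once TensorFlow Serving is running, clients typically call its REST predict endpoint at /v1/models/<model_name>:predict. In the official Docker setup, this endpoint listens on port 8501, and it accepts a JSON body with an "instances" list. The sketch below only builds such a request with the standard library and does not send it. Several details are assumptions: the model name yolo26n, the serving_default signature, and the NHWC input layout. Check the real signature of your export first, for example with saved_model_cli show --dir yolo26n_saved_model --all.

```python
import json
import urllib.request


def build_predict_request(instances, model_name="yolo26n", host="localhost",
                          port=8501, signature_name="serving_default"):
    """Build (but do not send) a TensorFlow Serving REST predict request."""
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    payload = {"signature_name": signature_name, "instances": instances}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical input: one tiny 2x2 RGB image in NHWC order with values in [0, 1]
request = build_predict_request([[[[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]],
                                  [[0.5, 0.5, 0.5], [0.2, 0.4, 0.6]]]])
# To send it to a running server: urllib.request.urlopen(request)
```

In practice, the image would be resized to the export imgsz (640 by default) before building the request.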

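Summary

In this guide, we explored how to export Ultralytics YOLO26 models to the TF SavedModel format. Exporting to TF SavedModel gives you the flexibility to optimize, deploy, and scale your YOLO26 models across a wide range of platforms.

For further details on usage, visit the official TF SavedModel documentation.

For more information on integrating Ultralytics YOLO26 with other platforms and frameworks, be sure to check out our integration guide page. It is packed with resources to help you make the most of YOLO26 in your projects.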

FAQ

How do I export an Ultralytics YOLO model to TensorFlow SavedModel format?

Exporting an Ultralytics YOLO model to the TensorFlow SavedModel format is straightforward. You can use either Python or the CLI:


```python
from ultralytics import YOLO

# Load the YOLO26 model
model = YOLO("yolo26n.pt")

# Export the model to TF SavedModel format
model.export(format="saved_model")  # creates 'yolo26n_saved_model/'

# Load the exported TF SavedModel for inference
tf_savedmodel_model = YOLO("./yolo26n_saved_model")
results = tf_savedmodel_model("https://ultralytics.com/images/bus.jpg")
```

```bash
# Export the YOLO26 model to TF SavedModel format
yolo export model=yolo26n.pt format=saved_model  # creates 'yolo26n_saved_model/'

# Run inference with the exported model
yolo predict model='./yolo26n_saved_model' source='https://ultralytics.com/images/bus.jpg'
```

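For more details, refer to the Ultralytics Export documentation.

Why should I use the TensorFlow SavedModel format?

The TensorFlow SavedModel format offers several advantages for model deployment:

- Portability: It provides a language-neutral format that makes it easy to share and deploy models across different environments.

- Compatibility: It integrates seamlessly with tools such as TensorFlow Serving, TensorFlow Lite, and TensorFlow.js, which is essential for deploying models on a variety of platforms, including web and mobile applications.

- Complete encapsulation: It encodes the model architecture, weights, and compilation information, so models can be shared directly and training can be resumed.

For more benefits and deployment options, check out the Ultralytics YOLO model deployment options guide.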

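What are the typical deployment scenarios for TF SavedModel?

TF SavedModel can be deployed in a variety of environments, including:

- TensorFlow Serving: Ideal for production environments that need scalable, high-performance model serving.

- Cloud Platforms: Supported by major cloud services such as Google Cloud Platform (GCP), Amazon Web Services (AWS), and Microsoft Azure for scalable model deployment.

- Mobile and Embedded Devices: Converting TF SavedModels with TensorFlow Lite enables deployment on mobile devices, IoT devices, and microcontrollers.

- TensorFlow Runtime: Suited to C++ environments that need low-latency inference and better performance.

For detailed deployment options, visit the official guides on deploying TensorFlow models.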

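How do I install the necessary packages to export YOLO26 models?

To export YOLO26 models, you need to install the ultralytics package. Run the following command in your terminal: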

```bash
pip install ultralytics
```

For more detailed installation instructions and best practices, see our Ultralytics Installation guide. If you run into any issues, consult our Common Issues guide.

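What are the key features of the TensorFlow SavedModel format?

The TF SavedModel format is useful to AI developers because of the following features:

- Portability: Models can be shared and deployed easily across a variety of environments.

- Ease of Deployment: The computational graph, trained parameters, and metadata are packaged together in a single directory, which simplifies loading and inference.

- Asset Management: External assets such as vocabularies are supported and are guaranteed to be available when the model is loaded.

For more details, explore the official TensorFlow documentation.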