Ultralytics YOLO NCNN Export

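Deploying computer vision models on devices with limited compute, such as mobile or embedded systems, requires careful choice of model format. An optimized format lets even resource-constrained devices handle advanced computer vision tasks efficiently.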

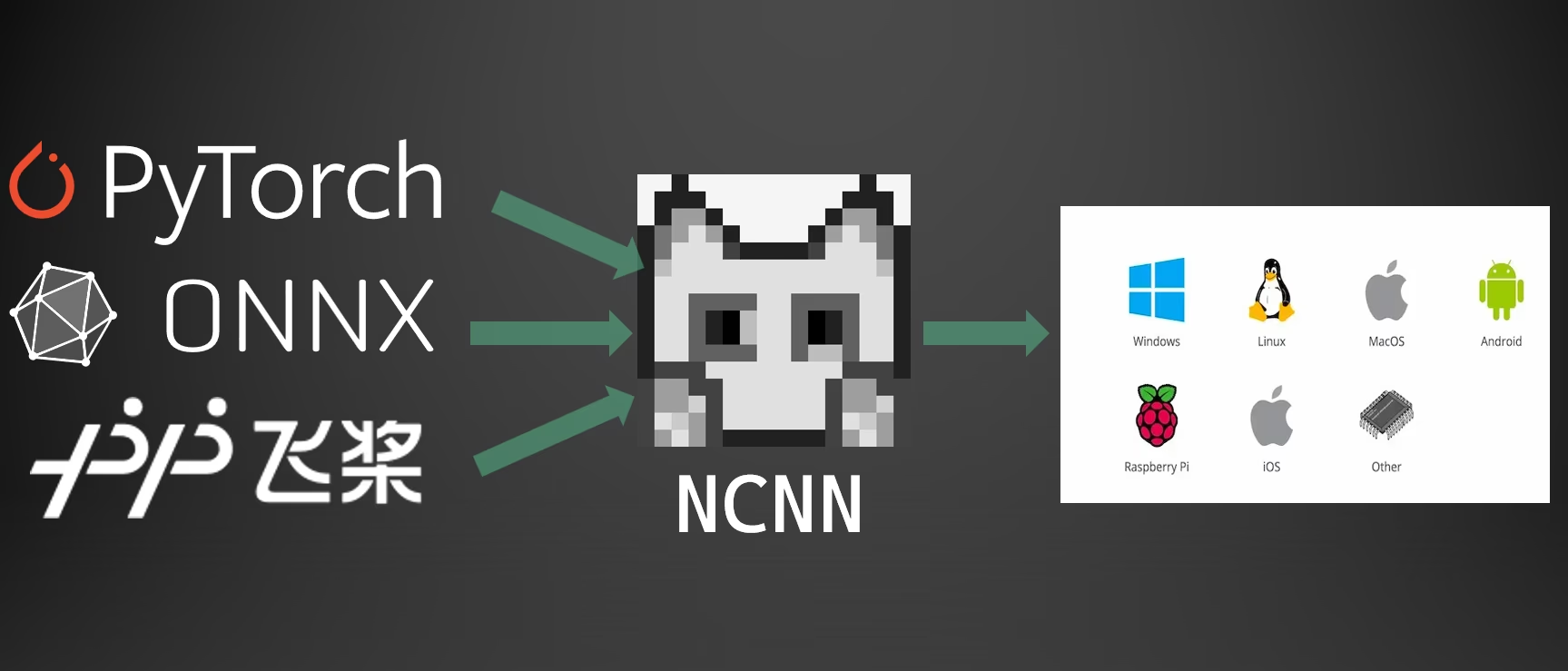

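Exporting to NCNN format optimizes your Ultralytics YOLO26 models for lightweight, device-based applications. This guide explains how to convert a model to NCNN format to improve its performance on mobile and embedded devices.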

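Why Export to NCNN?

The NCNN framework, developed by Tencent, is a high-performance neural network inference framework optimized specifically for mobile platforms, including phones, embedded devices, and IoT devices. NCNN runs on a wide range of platforms, including Linux, Android, iOS, and macOS.

NCNN is known for its fast processing speed on mobile CPUs and allows deep learning models to be deployed quickly to mobile platforms, which makes it a strong choice for building AI-powered applications.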

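Key Features of NCNN Models

NCNN models provide several key features that enable on-device machine learning and help developers deploy models on mobile, embedded, and edge devices:

- Efficient and high-performance: NCNN models are lightweight and optimized for mobile and embedded devices with limited resources, such as the Raspberry Pi, while maintaining high accuracy on computer vision tasks.

- Quantization: NCNN supports quantization, a technique that lowers the precision of model weights and activations to improve performance and reduce memory usage.

- Compatibility: NCNN models are compatible with popular deep learning frameworks, including TensorFlow, Caffe, and ONNX, so developers can reuse existing models and workflows.

- Ease of use: NCNN provides user-friendly tools for converting models between formats, which helps interoperability across development environments.

- Vulkan GPU acceleration: NCNN supports Vulkan for accelerated inference on GPUs from multiple vendors, including AMD, Intel, and other non-NVIDIA GPUs, enabling high-performance deployment on a wider range of hardware.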
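To make the quantization feature above more concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It only illustrates the general idea of trading precision for size; the function names are hypothetical, and it does not reproduce how NCNN itself calibrates or stores quantized weights.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to signed 8-bit integers."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale maps the largest magnitude onto 127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map 8-bit integers back to approximate float values."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is bounded by half of one quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now fits in one byte instead of four, at the cost of a small rounding error. Very small weights, such as 0.003 here, collapse to zero.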

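Deployment Options with NCNN

NCNN models are compatible with a variety of deployment platforms:

- Mobile deployment: Optimized for Android and iOS, allowing seamless integration into mobile applications for efficient on-device inference.

- Embedded systems and IoT devices: Well suited to resource-constrained devices such as the Raspberry Pi and NVIDIA Jetson. If standard inference on a Raspberry Pi, as described in the Ultralytics guide, is not fast enough, NCNN can provide a significant performance improvement.

- Desktop and server deployment: Supported on Linux, Windows, and macOS for development, training, and evaluation workflows.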

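Vulkan GPU Acceleration

NCNN supports GPU acceleration through Vulkan, enabling high-performance inference on a wide range of GPUs, including AMD, Intel, and other non-NVIDIA graphics cards. This is especially useful for:

- Cross-vendor GPU support: Unlike CUDA, which is limited to NVIDIA GPUs, Vulkan works across multiple GPU vendors.

- Multi-GPU systems: Select a specific Vulkan device on systems with several GPUs, for example device="vulkan:0", device="vulkan:1", and so on.

- Edge and desktop deployment: Use GPU acceleration on devices that do not support CUDA.

To use Vulkan acceleration, specify the Vulkan device when running inference:

Vulkan Inference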

from ultralytics import YOLO

# Load the exported NCNN model

ncnn_model = YOLO("./yolo26n_ncnn_model")

# Run inference with Vulkan GPU acceleration (first Vulkan device)

results = ncnn_model("https://ultralytics.com/images/bus.jpg", device="vulkan:0")

# Use second Vulkan device in multi-GPU systems

results = ncnn_model("https://ultralytics.com/images/bus.jpg", device="vulkan:1")

# Run inference with Vulkan GPU acceleration

yolo predict model='./yolo26n_ncnn_model' source='https://ultralytics.com/images/bus.jpg' device=vulkan:0
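Whether Vulkan actually beats the CPU depends on the hardware, so it can be worth measuring. The sketch below times repeated calls and reports the median latency. The helper names median_latency_ms and compare_devices are hypothetical, and a fresh model object is loaded for each device on the assumption that this is the safest way to make the device setting take effect.

```python
import statistics
import time


def median_latency_ms(fn, repeats=10, warmup=2):
    """Call fn repeatedly and return the median wall-clock latency in milliseconds."""
    for _ in range(warmup):  # warm-up runs are not timed
        fn()
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def compare_devices(model_dir, source, devices=("cpu", "vulkan:0")):
    """Return {device: median latency in ms} for an exported NCNN model."""
    from ultralytics import YOLO  # imported lazily so the timing helper works on its own

    timings = {}
    for device in devices:
        model = YOLO(model_dir)  # fresh model per device (assumption, see above)
        timings[device] = median_latency_ms(
            lambda: model(source, device=device, verbose=False)
        )
    return timings
```

For example, compare_devices("./yolo26n_ncnn_model", "https://ultralytics.com/images/bus.jpg") would return one latency figure per device, provided the Vulkan device exists on the machine.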

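Vulkan Requirements

Make sure Vulkan drivers are installed for your GPU. Most modern GPU drivers include Vulkan support by default. You can verify that Vulkan is available with tools such as vulkaninfo on Linux or the Vulkan SDK on Windows.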
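The check described above can also be scripted. The following sketch looks for the vulkaninfo tool on the PATH, runs it, and falls back to the CPU if that fails. The function names are hypothetical, and a successful vulkaninfo run is only a rough indicator: it does not guarantee that NCNN can use the device.

```python
import shutil
import subprocess


def vulkan_available(which=shutil.which, run=subprocess.run):
    """Return True if the vulkaninfo tool is on PATH and exits successfully."""
    exe = which("vulkaninfo")
    if exe is None:
        return False
    try:
        return run([exe], capture_output=True, timeout=15).returncode == 0
    except (OSError, subprocess.SubprocessError):
        return False


def pick_device(index=0, **probe_kwargs):
    """Choose a device string for inference: 'vulkan:<index>' or 'cpu'."""
    return f"vulkan:{index}" if vulkan_available(**probe_kwargs) else "cpu"


print(pick_device())
```

The result can be passed straight to the device argument used in the Vulkan inference example. The which and run parameters exist only so the probe can be replaced in tests.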

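Exporting to NCNN: Converting Your YOLO26 Model

Converting your YOLO26 model to NCNN format broadens model compatibility and deployment flexibility.

Installation

To install the required package, run: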

# Install the required package for YOLO26

pip install ultralytics

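For detailed instructions and best practices, see the Ultralytics Installation guide. If you run into any difficulties, consult our Common Issues guide for solutions.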

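Usage

All Ultralytics YOLO26 models support export out of the box, which makes it easy to integrate them into your preferred deployment workflow. You can review the full list of supported export formats and configuration options to choose the best setup for your application.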

from ultralytics import YOLO

# Load the YOLO26 model

model = YOLO("yolo26n.pt")

# Export the model to NCNN format

model.export(format="ncnn") # creates './yolo26n_ncnn_model'

# Load the exported NCNN model

ncnn_model = YOLO("./yolo26n_ncnn_model")

# Run inference

results = ncnn_model("https://ultralytics.com/images/bus.jpg")

# Export a YOLO26n PyTorch model to NCNN format

yolo export model=yolo26n.pt format=ncnn # creates './yolo26n_ncnn_model'

# Run inference with the exported model

yolo predict model='./yolo26n_ncnn_model' source='https://ultralytics.com/images/bus.jpg'
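Before shipping an exported model to a device, it can help to confirm that the export directory is complete. The sketch below checks for the two files an NCNN model normally consists of. The file names model.ncnn.param and model.ncnn.bin are an assumption about what the exporter writes and may differ between versions; the helper name is hypothetical.

```python
from pathlib import Path

# Assumed file names inside the export directory; adjust if your version differs.
EXPECTED_FILES = ("model.ncnn.param", "model.ncnn.bin")


def missing_ncnn_files(model_dir, expected=EXPECTED_FILES):
    """Return the expected file names that are not present in model_dir."""
    root = Path(model_dir)
    return [name for name in expected if not (root / name).is_file()]


missing = missing_ncnn_files("./yolo26n_ncnn_model")
if missing:
    print("Export directory is incomplete, missing:", missing)
else:
    print("Export directory looks complete.")
```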

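Export Arguments

| Argument | Type | Default | Description |
|---|---|---|---|
| format | str | 'ncnn' | Target format for the exported model, which determines compatibility with different deployment environments. |
| imgsz | int or tuple | 640 | Desired image size for the model input. Can be an integer for square images or a tuple (height, width) for specific dimensions. |
| half | bool | False | Enables FP16 (half-precision) quantization, which reduces model size and may speed up inference on supported hardware. |
| batch | int | 1 | Batch inference size of the exported model, or the maximum number of images the exported model will process concurrently in predict mode. |
| device | str | None | Device to use for export: GPU (device=0), CPU (device=cpu), or MPS for Apple silicon (device=mps). |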
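As an illustration of how the arguments in this table combine, the sketch below merges overrides with the defaults listed above and rejects names that are not in the table. The helpers build_export_args and export_ncnn are hypothetical wrappers, not part of the Ultralytics API; only the final model.export(...) call comes from the usage example.

```python
# Defaults copied from the export arguments table.
DEFAULTS = {"format": "ncnn", "imgsz": 640, "half": False, "batch": 1, "device": None}


def build_export_args(**overrides):
    """Merge overrides with the table defaults and reject unknown argument names."""
    unknown = sorted(set(overrides) - set(DEFAULTS))
    if unknown:
        raise ValueError(f"Unsupported export arguments: {unknown}")
    args = {**DEFAULTS, **overrides}
    # Leave out unset values so the library applies its own defaults.
    return {key: value for key, value in args.items() if value is not None}


def export_ncnn(weights="yolo26n.pt", **overrides):
    """Export the given weights to NCNN with the validated arguments."""
    from ultralytics import YOLO  # imported lazily; needs `pip install ultralytics`

    return YOLO(weights).export(**build_export_args(**overrides))


# Example: FP16 export with a non-square (height, width) input size.
print(build_export_args(half=True, imgsz=(320, 640)))
```

Calling export_ncnn(half=True, imgsz=(320, 640)) would then run the export with those settings.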

For more details about the export process, visit the Export page in the Ultralytics documentation.

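Deploying Exported YOLO26 NCNN Models

After exporting your Ultralytics YOLO26 model to NCNN format, you can load it with YOLO("yolo26n_ncnn_model/") and run inference, as shown in the usage example above. For platform-specific deployment instructions, see the following resources:

- Android: Build and integrate NCNN models into Android applications for object detection.

- macOS: Deploy NCNN models on macOS systems.

- Linux: Deploy NCNN models on Linux devices, including the Raspberry Pi and similar embedded systems.

- Windows x64: Deploy NCNN models on Windows x64 using Visual Studio.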

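Summary

This guide covered exporting Ultralytics YOLO26 models to NCNN format to improve efficiency and speed on resource-constrained devices.

For more details, see the official NCNN documentation. For other export options, visit our integration guide page.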

FAQ

How do I export an Ultralytics YOLO26 model to NCNN format?

To export your Ultralytics YOLO26 model to NCNN format:

Python: Use the export method of the YOLO class.

from ultralytics import YOLO

# Load the YOLO26 model

model = YOLO("yolo26n.pt")

# Export to NCNN format

model.export(format="ncnn") # creates './yolo26n_ncnn_model'

CLI: Use the yolo export command.

yolo export model=yolo26n.pt format=ncnn # creates './yolo26n_ncnn_model'

For detailed export options, see the Export documentation.

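What are the advantages of exporting YOLO26 models to NCNN?

Exporting your Ultralytics YOLO26 model to NCNN offers several advantages:

- Efficiency: NCNN models are optimized for mobile and embedded devices and deliver high performance even with limited computational resources.

- Quantization: NCNN supports techniques such as quantization that improve model speed and reduce memory usage.

- Broad compatibility: You can deploy NCNN models on multiple platforms, including Android, iOS, Linux, and macOS.

- Vulkan GPU acceleration: Use GPU acceleration through Vulkan on AMD, Intel, and other non-NVIDIA GPUs for faster inference.

For more details, see the Why Export to NCNN? section.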

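Why should I use NCNN for my mobile AI applications?

NCNN is developed by Tencent and optimized specifically for mobile platforms. The main reasons to use it include:

- High performance: Designed for efficient, fast processing on mobile CPUs.

- Cross-platform: Compatible with popular frameworks such as TensorFlow and ONNX, which makes it easier to convert and deploy models across different platforms.

- Community support: An active community provides ongoing improvements and updates.

For more information, see the Key Features of NCNN Models section.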

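Which platforms are supported for NCNN model deployment?

NCNN is versatile and supports a variety of platforms:

- Mobile: Android and iOS.

- Embedded systems and IoT devices: Devices such as the Raspberry Pi and NVIDIA Jetson.

- Desktop and server: Linux, Windows, and macOS.

To improve performance on a Raspberry Pi, consider using NCNN format, as detailed in our Raspberry Pi guide.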

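How can I deploy Ultralytics YOLO26 NCNN models on Android?

To deploy your YOLO26 model on Android:

- Build for Android: Follow the NCNN Build for Android guide.

- Integrate with your app: Use the NCNN Android SDK to integrate the exported model into your application for efficient on-device inference.

For detailed instructions, see Deploying Exported YOLO26 NCNN Models.

For more advanced guides and use cases, visit the Ultralytics deployment guide.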

How do I use Vulkan GPU acceleration with NCNN models?

NCNN supports Vulkan for GPU acceleration on AMD, Intel, and other non-NVIDIA GPUs. To use Vulkan:

from ultralytics import YOLO

# Load NCNN model and run with Vulkan GPU

model = YOLO("yolo26n_ncnn_model")

results = model("image.jpg", device="vulkan:0") # Use first Vulkan device

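On multi-GPU systems, specify the device index (for example, vulkan:1 for the second GPU). Make sure Vulkan drivers are installed for your GPU. See the Vulkan GPU Acceleration section for more details.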