Explore Ultralytics YOLOv8

Overview

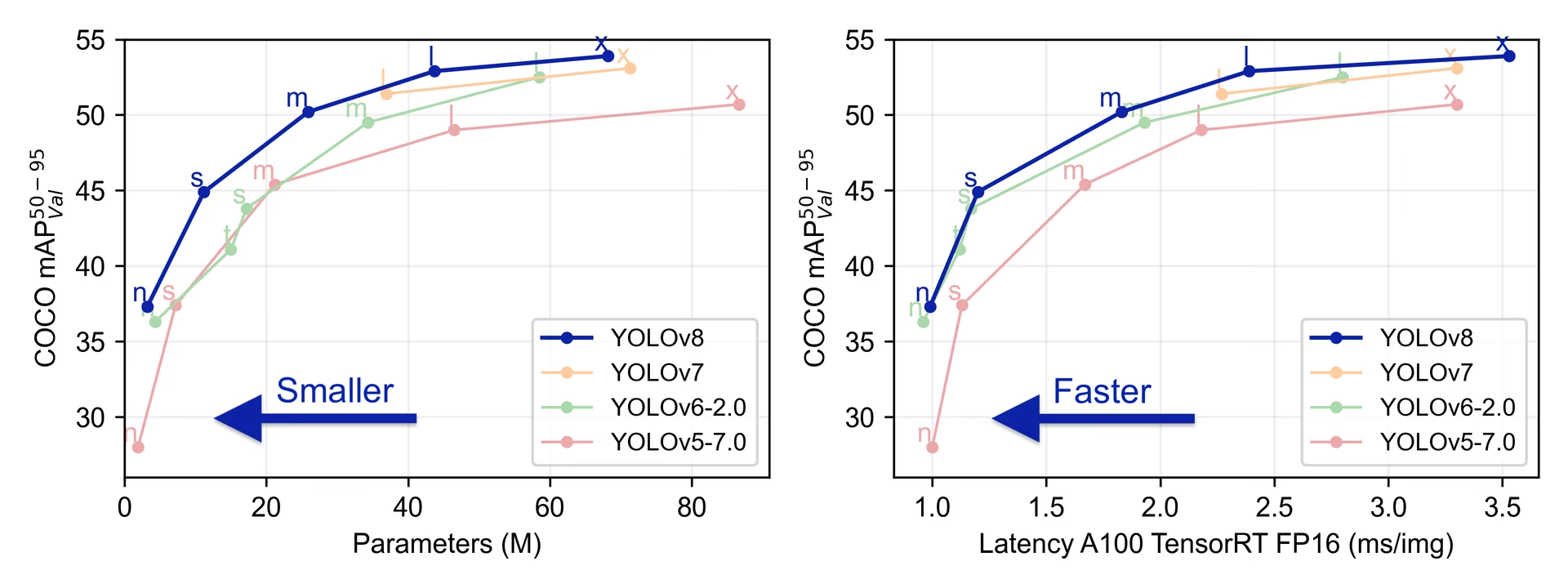

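YOLOv8 was released by Ultralytics on January 10, 2023, offering cutting-edge performance in terms of accuracy and speed. Building on the advancements of previous YOLO versions, YOLOv8 introduced new features and optimizations that make it an ideal choice for object detection tasks in a wide range of applications.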


Try on Ultralytics

Explore and run YOLOv8 directly on Ultralytics.

Key Features of YOLOv8

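- Advanced Backbone and Neck Architectures: YOLOv8 employs state-of-the-art backbone and neck architectures, resulting in improved feature extraction and object detection performance.

- Anchor-free Split Ultralytics Head: YOLOv8 adopts an anchor-free split Ultralytics head, which contributes to better accuracy and a more efficient detection process compared to anchor-based approaches.

- Optimized Accuracy-Speed Tradeoff: With a focus on maintaining an optimal balance between accuracy and speed, YOLOv8 is suitable for real-time object detection tasks in diverse application areas.

- Variety of Pre-trained Models: YOLOv8 offers a range of pre-trained models to cater to various tasks and performance requirements, making it easier to find the right model for your specific use case.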
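To make the anchor-free idea concrete: instead of regressing offsets relative to predefined anchor boxes, an anchor-free head predicts, for each grid point of a feature map, the distances from that point to the four sides of the box. The sketch below decodes such (left, top, right, bottom) predictions into corner boxes. It is a simplified illustration under stated assumptions (a single feature map with a fixed stride, plain distances in grid units); the function names are hypothetical, and the real YOLOv8 head additionally predicts a distribution over each distance (DFL), which is omitted here.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def grid_points(width: int, height: int, stride: int) -> List[Tuple[float, float]]:
    """Return the center of each feature-map cell, in input-image pixels."""
    return [
        ((x + 0.5) * stride, (y + 0.5) * stride)
        for y in range(height)
        for x in range(width)
    ]


def decode_ltrb(point: Tuple[float, float], dist: Box, stride: int) -> Box:
    """Convert (left, top, right, bottom) distances, given in grid units,
    into an (x1, y1, x2, y2) box in pixels around the grid point."""
    cx, cy = point
    left, top, right, bottom = (d * stride for d in dist)
    return (cx - left, cy - top, cx + right, cy + bottom)


# A 2x2 feature map with stride 32 (i.e. a 64x64 input image).
points = grid_points(width=2, height=2, stride=32)
# Hypothetical prediction for the first cell: 0.5 cells left/up, 1 cell right/down.
box = decode_ltrb(points[0], (0.5, 0.5, 1.0, 1.0), stride=32)
print(points)  # [(16.0, 16.0), (48.0, 16.0), (16.0, 48.0), (48.0, 48.0)]
print(box)     # (0.0, 0.0, 48.0, 48.0)
```

Because every grid point can emit a box directly, there are no anchor sizes or aspect ratios to tune per dataset.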

Supported Tasks and Modes

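The YOLOv8 series offers a diverse range of models, each specialized for a specific computer vision task. These models cover requirements ranging from object detection to more complex tasks such as instance segmentation, pose/keypoint detection, oriented bounding box (OBB) detection, and classification.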

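Each variant of the YOLOv8 series is optimized for its respective task, ensuring high performance and accuracy. In addition, these models are compatible with various operational modes, including Inference, Validation, Training, and Export, which makes them easy to use at different stages of deployment and development.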

| Model | Filenames | Task | Inference | Validation | Training | Export |
|---|---|---|---|---|---|---|
| YOLOv8 | `yolov8n.pt`, `yolov8s.pt`, `yolov8m.pt`, `yolov8l.pt`, `yolov8x.pt` | Detection | ✅ | ✅ | ✅ | ✅ |
| YOLOv8-seg | `yolov8n-seg.pt`, `yolov8s-seg.pt`, `yolov8m-seg.pt`, `yolov8l-seg.pt`, `yolov8x-seg.pt` | Instance Segmentation | ✅ | ✅ | ✅ | ✅ |
| YOLOv8-pose | `yolov8n-pose.pt`, `yolov8s-pose.pt`, `yolov8m-pose.pt`, `yolov8l-pose.pt`, `yolov8x-pose.pt`, `yolov8x-pose-p6.pt` | Pose/Keypoints | ✅ | ✅ | ✅ | ✅ |
| YOLOv8-obb | `yolov8n-obb.pt`, `yolov8s-obb.pt`, `yolov8m-obb.pt`, `yolov8l-obb.pt`, `yolov8x-obb.pt` | Oriented Detection | ✅ | ✅ | ✅ | ✅ |
| YOLOv8-cls | `yolov8n-cls.pt`, `yolov8s-cls.pt`, `yolov8m-cls.pt`, `yolov8l-cls.pt`, `yolov8x-cls.pt` | Classification | ✅ | ✅ | ✅ | ✅ |

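This table provides an overview of the YOLOv8 model variants, highlighting their applicability to specific tasks and their compatibility with operational modes such as Inference, Validation, Training, and Export. It shows the versatility and robustness of the YOLOv8 series, which makes it suitable for a wide variety of computer vision applications.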
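The filenames in the table follow a regular pattern: `yolov8`, a scale letter (`n`, `s`, `m`, `l`, `x`), and a task suffix (none for detection, then `-seg`, `-pose`, `-obb`, `-cls`). The hypothetical helper below builds a weights filename from those two choices. It is only a convenience derived from the table, not part of the Ultralytics API, and it does not cover the extra `yolov8x-pose-p6.pt` variant.

```python
# Task suffixes as they appear in the filenames listed in the table.
TASK_SUFFIXES = {
    "detect": "",
    "segment": "-seg",
    "pose": "-pose",
    "obb": "-obb",
    "classify": "-cls",
}
SCALES = ("n", "s", "m", "l", "x")


def weights_filename(task: str, scale: str) -> str:
    """Hypothetical helper: build a YOLOv8 weights filename such as 'yolov8s-seg.pt'."""
    if task not in TASK_SUFFIXES:
        raise ValueError(f"unknown task {task!r}; expected one of {sorted(TASK_SUFFIXES)}")
    if scale not in SCALES:
        raise ValueError(f"unknown scale {scale!r}; expected one of {SCALES}")
    return f"yolov8{scale}{TASK_SUFFIXES[task]}.pt"


print(weights_filename("segment", "s"))  # yolov8s-seg.pt
print(weights_filename("detect", "n"))   # yolov8n.pt
```

The returned name is the kind of string that is passed to `YOLO()` to load a pretrained model.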

Performance Metrics


See the Detection docs for usage examples with these models trained on COCO, which include 80 pre-trained classes.

| Model | Size (pixels) | mAP val 50-95 | Speed CPU ONNX (ms) | Speed A100 TensorRT (ms) | Params (M) | FLOPs (B) |
|---|---|---|---|---|---|---|
| YOLOv8n | 640 | 37.3 | 80.4 | 0.99 | 3.2 | 8.7 |
| YOLOv8s | 640 | 44.9 | 128.4 | 1.20 | 11.2 | 28.6 |
| YOLOv8m | 640 | 50.2 | 234.7 | 1.83 | 25.9 | 78.9 |
| YOLOv8l | 640 | 52.9 | 375.2 | 2.39 | 43.7 | 165.2 |
| YOLOv8x | 640 | 53.9 | 479.1 | 3.53 | 68.2 | 257.8 |
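One practical use of this table is picking the most accurate detection model that fits a latency budget. The sketch below hard-codes the COCO mAP val 50-95 and A100 TensorRT latencies from the table and selects accordingly. Latency on your own hardware will differ, so treat these numbers as placeholders to replace with your own measurements; the function name is hypothetical.

```python
from typing import Optional

# (model, mAP val 50-95, A100 TensorRT latency in ms), copied from the COCO table.
COCO_DETECT = [
    ("YOLOv8n", 37.3, 0.99),
    ("YOLOv8s", 44.9, 1.20),
    ("YOLOv8m", 50.2, 1.83),
    ("YOLOv8l", 52.9, 2.39),
    ("YOLOv8x", 53.9, 3.53),
]


def best_within_budget(budget_ms: float) -> Optional[str]:
    """Return the highest-mAP model whose latency fits the budget, or None."""
    candidates = [row for row in COCO_DETECT if row[2] <= budget_ms]
    if not candidates:
        return None
    return max(candidates, key=lambda row: row[1])[0]


print(best_within_budget(2.0))  # YOLOv8m
print(best_within_budget(0.5))  # None
```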

See the Detection docs for usage examples with these models trained on Open Images V7, which include 600 pre-trained classes.

| Model | Size (pixels) | mAP val 50-95 | Speed CPU ONNX (ms) | Speed A100 TensorRT (ms) | Params (M) | FLOPs (B) |
|---|---|---|---|---|---|---|
| YOLOv8n | 640 | 18.4 | 142.4 | 1.21 | 3.5 | 10.5 |
| YOLOv8s | 640 | 27.7 | 183.1 | 1.40 | 11.4 | 29.7 |
| YOLOv8m | 640 | 33.6 | 408.5 | 2.26 | 26.2 | 80.6 |
| YOLOv8l | 640 | 34.9 | 596.9 | 2.43 | 44.1 | 167.4 |
| YOLOv8x | 640 | 36.3 | 860.6 | 3.56 | 68.7 | 260.6 |

See the Segmentation docs for usage examples with these models trained on COCO, which include 80 pre-trained classes.

| Model | Size (pixels) | mAP box 50-95 | mAP mask 50-95 | Speed CPU ONNX (ms) | Speed A100 TensorRT (ms) | Params (M) | FLOPs (B) |
|---|---|---|---|---|---|---|---|
| YOLOv8n-seg | 640 | 36.7 | 30.5 | 96.1 | 1.21 | 3.4 | 12.6 |
| YOLOv8s-seg | 640 | 44.6 | 36.8 | 155.7 | 1.47 | 11.8 | 42.6 |
| YOLOv8m-seg | 640 | 49.9 | 40.8 | 317.0 | 2.18 | 27.3 | 110.2 |
| YOLOv8l-seg | 640 | 52.3 | 42.6 | 572.4 | 2.79 | 46.0 | 220.5 |
| YOLOv8x-seg | 640 | 53.4 | 43.4 | 712.1 | 4.02 | 71.8 | 344.1 |
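The "50-95" in these mAP columns follows the COCO convention: mean Average Precision is averaged over ten IoU (intersection over union) thresholds, 0.50 to 0.95 in steps of 0.05. For the box column the IoU is computed between boxes, for the mask column between pixel masks. The sketch below shows box IoU and the threshold grid, plus a toy check of the thresholds at which a single prediction would count as a match. It is illustrative only and omits the precision-recall integration a full evaluator performs.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The ten COCO IoU thresholds 0.50, 0.55, ..., 0.95 averaged by mAP 50-95.
IOU_THRESHOLDS: List[float] = [round(0.5 + 0.05 * i, 2) for i in range(10)]

pred, gt = (0.0, 0.0, 10.0, 10.0), (0.0, 0.0, 10.0, 8.0)
iou = box_iou(pred, gt)  # intersection 80 / union 100 = 0.8
matched_at = [t for t in IOU_THRESHOLDS if iou >= t]
print(iou, matched_at)  # 0.8 [0.5, 0.55, 0.6, 0.65, 0.7, 0.75, 0.8]
```

A prediction that is only roughly aligned still scores at mAP 50 but fails the stricter thresholds, which is why mAP 50-95 rewards precise localization.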

See the Classification docs for usage examples with these models trained on ImageNet, which include 1000 pre-trained classes.

| Model | Size (pixels) | acc top1 | acc top5 | Speed CPU ONNX (ms) | Speed A100 TensorRT (ms) | Params (M) | FLOPs (B) at 224 |
|---|---|---|---|---|---|---|---|
| YOLOv8n-cls | 224 | 69.0 | 88.3 | 12.9 | 0.31 | 2.7 | 0.5 |
| YOLOv8s-cls | 224 | 73.8 | 91.7 | 23.4 | 0.35 | 6.4 | 1.7 |
| YOLOv8m-cls | 224 | 76.8 | 93.5 | 85.4 | 0.62 | 17.0 | 5.3 |
| YOLOv8l-cls | 224 | 76.8 | 93.5 | 163.0 | 0.87 | 37.5 | 12.3 |
| YOLOv8x-cls | 224 | 79.0 | 94.6 | 232.0 | 1.01 | 57.4 | 19.0 |
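The acc top1 and acc top5 columns report the share of validation images whose true class is, respectively, the model's highest-scoring prediction or among its five highest-scoring predictions. A minimal sketch of both metrics, assuming per-image score lists and integer labels (the function name and toy data are illustrative):

```python
from typing import Sequence


def topk_accuracy(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int) -> float:
    """Fraction of samples whose true label is among the k highest scores."""
    hits = 0
    for row, label in zip(scores, labels):
        # Indices of the k largest scores for this sample.
        top = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        hits += label in top
    return hits / len(labels)


# Toy example with 3 samples and 6 classes.
scores = [
    [0.10, 0.60, 0.05, 0.10, 0.10, 0.05],  # true class 1 is ranked 1st
    [0.30, 0.25, 0.20, 0.15, 0.06, 0.04],  # true class 3 is ranked 4th
    [0.50, 0.20, 0.12, 0.08, 0.06, 0.04],  # true class 5 is ranked 6th
]
labels = [1, 3, 5]
top1 = topk_accuracy(scores, labels, k=1)  # 1 of 3 samples
top5 = topk_accuracy(scores, labels, k=5)  # 2 of 3 samples
print(top1, top5)
```

Top-5 accuracy is always at least as high as top-1, which matches the pattern in the table.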

See the Pose Estimation docs for usage examples with these models trained on COCO, which include 1 pre-trained class, "person".

| Model | Size (pixels) | mAP pose 50-95 | mAP pose 50 | Speed CPU ONNX (ms) | Speed A100 TensorRT (ms) | Params (M) | FLOPs (B) |
|---|---|---|---|---|---|---|---|
| YOLOv8n-pose | 640 | 50.4 | 80.1 | 131.8 | 1.18 | 3.3 | 9.2 |
| YOLOv8s-pose | 640 | 60.0 | 86.2 | 233.2 | 1.42 | 11.6 | 30.2 |
| YOLOv8m-pose | 640 | 65.0 | 88.8 | 456.3 | 2.00 | 26.4 | 81.0 |
| YOLOv8l-pose | 640 | 67.6 | 90.0 | 784.5 | 2.59 | 44.4 | 168.6 |
| YOLOv8x-pose | 640 | 69.2 | 90.2 | 1607.1 | 3.73 | 69.4 | 263.2 |
| YOLOv8x-pose-p6 | 1280 | 71.6 | 91.2 | 4088.7 | 10.04 | 99.1 | 1066.4 |
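For pose models, the mAP pose columns follow the COCO keypoint protocol, which replaces box IoU with Object Keypoint Similarity (OKS). Each ground-truth-visible keypoint contributes exp(-d² / (2·s²·k²)), where d is the distance between the predicted and ground-truth keypoint, s² is the object's area, and k is a per-keypoint constant. OKS is the mean of these terms over the visible keypoints. The sketch below implements that formula; the sample coordinates and the k values are made up for illustration (COCO defines its own per-keypoint constants).

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def oks(pred: Sequence[Point], gt: Sequence[Point], visible: Sequence[bool],
        area: float, k: Sequence[float]) -> float:
    """Object Keypoint Similarity between one predicted and one ground-truth pose.

    area is the ground-truth object area (s**2); k holds per-keypoint constants.
    Only keypoints marked visible in the ground truth are scored.
    """
    total, count = 0.0, 0
    for (px, py), (gx, gy), vis, ki in zip(pred, gt, visible, k):
        if not vis:
            continue
        d2 = (px - gx) ** 2 + (py - gy) ** 2
        total += math.exp(-d2 / (2 * area * ki ** 2))
        count += 1
    return total / count if count else 0.0


gt = [(10.0, 10.0), (20.0, 10.0), (15.0, 25.0)]
pred = [(10.0, 10.0), (21.0, 10.0), (0.0, 0.0)]  # third keypoint is not visible in gt
score = oks(pred, gt, visible=[True, True, False], area=100.0, k=[0.1, 0.1, 0.1])
print(round(score, 4))  # mean of exp(0) and exp(-0.5)
```

OKS plays the role of IoU here: mAP pose 50 counts a prediction as correct at OKS ≥ 0.5, and mAP pose 50-95 averages over the same ten thresholds as box mAP.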

See the Oriented Detection docs for usage examples with these models trained on DOTAv1, which include 15 pre-trained classes.

| Model | Size (pixels) | mAP test 50 | Speed CPU ONNX (ms) | Speed A100 TensorRT (ms) | Params (M) | FLOPs (B) |
|---|---|---|---|---|---|---|
| YOLOv8n-obb | 1024 | 78.0 | 204.77 | 3.57 | 3.1 | 23.3 |
| YOLOv8s-obb | 1024 | 79.5 | 424.88 | 4.07 | 11.4 | 76.3 |
| YOLOv8m-obb | 1024 | 80.5 | 763.48 | 7.61 | 26.4 | 208.6 |
| YOLOv8l-obb | 1024 | 80.7 | 1278.42 | 11.83 | 44.5 | 433.8 |
| YOLOv8x-obb | 1024 | 81.36 | 1759.10 | 13.23 | 69.5 | 676.7 |
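Oriented models describe each object with a rotated box rather than an axis-aligned one. A common parameterization, the one Ultralytics OBB results report as `xywhr` (center x, center y, width, height, rotation in radians), can be converted to four corner points as sketched below. This is a standalone illustration with a hypothetical function name; the sign convention of the angle is an assumption here and should be checked against your data.

```python
import math
from typing import List, Tuple


def xywhr_to_corners(cx: float, cy: float, w: float, h: float, r: float) -> List[Tuple[float, float]]:
    """Return the 4 corners of a rotated box, starting at the (+w/2, +h/2) corner.

    Each half-extent offset is rotated by angle r (radians) with the standard
    2-D rotation matrix and then shifted to the box center.
    """
    cos_r, sin_r = math.cos(r), math.sin(r)
    corners = []
    for dx, dy in ((w / 2, h / 2), (-w / 2, h / 2), (-w / 2, -h / 2), (w / 2, -h / 2)):
        corners.append((cx + dx * cos_r - dy * sin_r, cy + dx * sin_r + dy * cos_r))
    return corners


# A 4x2 box centered at (10, 10), rotated by 90 degrees.
corners = xywhr_to_corners(10.0, 10.0, 4.0, 2.0, math.pi / 2)
for x, y in corners:
    print(round(x, 6), round(y, 6))  # approximately (9, 12), (9, 8), (11, 8), (11, 12)
```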

YOLOv8 Usage Examples

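The examples below show simple YOLOv8 training and inference. For full documentation on these and other modes, see the Predict, Train, Val, and Export docs pages.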

Note that the example below is for YOLOv8 Detect models used for object detection. For the other supported tasks, see the Segment, Classify, OBB, and Pose docs.

Example

PyTorch pretrained `*.pt` models as well as configuration `*.yaml` files can be passed to the `YOLO()` class to create a model instance in Python:

```python
from ultralytics import YOLO

# Load a COCO-pretrained YOLOv8n model
model = YOLO("yolov8n.pt")

# Display model information (optional)
model.info()

# Train the model on the COCO8 example dataset for 100 epochs
results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

# Run inference with the YOLOv8n model on the 'bus.jpg' image
results = model("path/to/bus.jpg")
```

CLI commands are available to run the models directly:

```bash
# Load a COCO-pretrained YOLOv8n model and train it on the COCO8 example dataset for 100 epochs
yolo train model=yolov8n.pt data=coco8.yaml epochs=100 imgsz=640

# Load a COCO-pretrained YOLOv8n model and run inference on the 'bus.jpg' image
yolo predict model=yolov8n.pt source=path/to/bus.jpg
```

Citations and Acknowledgements

Ultralytics YOLOv8 Publication

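Ultralytics has not published a formal research paper for YOLOv8 because the models evolve rapidly. We focus on advancing the technology and making it easier to use, rather than producing static documentation. For the most up-to-date information on the YOLO architecture, features, and usage, please refer to our GitHub repository and documentation.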

If you use the YOLOv8 model or any other software from this repository in your work, please cite it using the following format:

```bibtex
@software{yolov8_ultralytics,
  author = {Glenn Jocher and Ayush Chaurasia and Jing Qiu},
  title = {Ultralytics YOLOv8},
  version = {8.0.0},
  year = {2023},
  url = {https://github.com/ultralytics/ultralytics},
  orcid = {0000-0001-5950-6979, 0000-0002-7603-6750, 0000-0003-3783-7069},
  license = {AGPL-3.0}
}
```

Please note that the DOI is pending and will be added to the citation once it is available. YOLOv8 models are provided under the AGPL-3.0 and Enterprise licenses.

FAQ

What is YOLOv8 and how does it differ from previous YOLO versions?

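YOLOv8 is designed to improve real-time object detection performance with advanced features. Unlike earlier versions, YOLOv8 incorporates an anchor-free split Ultralytics head and state-of-the-art backbone and neck architectures, and it offers an optimized accuracy-speed tradeoff, making it a strong choice for diverse applications. For more details, see the Overview and Key Features sections.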

How can I use YOLOv8 for different computer vision tasks?

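YOLOv8 supports a wide range of computer vision tasks, including object detection, instance segmentation, pose/keypoint detection, oriented bounding box (OBB) detection, and classification. Each model variant is optimized for its specific task and is compatible with operational modes such as Inference, Validation, Training, and Export. Refer to the Supported Tasks and Modes section for more information.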

What are the performance metrics for YOLOv8 models?

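YOLOv8 models achieve state-of-the-art performance across various benchmark datasets. For instance, the YOLOv8n model reaches an mAP val 50-95 (mean Average Precision) of 37.3 on the COCO dataset with a latency of 0.99 ms on an A100 GPU with TensorRT. Detailed metrics for each model variant across tasks and datasets are listed in the Performance Metrics section.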
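To put a figure like 0.99 ms in perspective, a per-image latency converts to a rough throughput of 1000 / latency_ms images per second. This is back-of-the-envelope arithmetic that assumes back-to-back single-image inference and ignores pre- and post-processing, so a real pipeline will usually be slower; the function name is hypothetical.

```python
def approx_fps(latency_ms: float) -> float:
    """Rough images per second for back-to-back single-image inference."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


# A100 TensorRT latencies from the COCO detection table.
for name, ms in [("YOLOv8n", 0.99), ("YOLOv8x", 3.53)]:
    print(f"{name}: {approx_fps(ms):.0f} FPS")  # YOLOv8n: 1010 FPS, YOLOv8x: 283 FPS
```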

How do I train a YOLOv8 model?

A YOLOv8 model can be trained using either Python or the CLI. Below are examples of training a COCO-pretrained YOLOv8n model on the COCO8 dataset for 100 epochs:

Example

```python
from ultralytics import YOLO

# Load a COCO-pretrained YOLOv8n model
model = YOLO("yolov8n.pt")

# Train the model on the COCO8 example dataset for 100 epochs
results = model.train(data="coco8.yaml", epochs=100, imgsz=640)
```

```bash
yolo train model=yolov8n.pt data=coco8.yaml epochs=100 imgsz=640
```

For further details, visit the Training documentation.

Can I benchmark YOLOv8 models for performance?

Yes. YOLOv8 models can be benchmarked for speed and accuracy across various export formats, such as PyTorch, ONNX, and TensorRT. Below are example commands for benchmarking with Python and the CLI:

Example

```python
from ultralytics.utils.benchmarks import benchmark

# Benchmark on GPU
benchmark(model="yolov8n.pt", data="coco8.yaml", imgsz=640, half=False, device=0)
```

```bash
yolo benchmark model=yolov8n.pt data='coco8.yaml' imgsz=640 half=False device=0
```

For more information, see the Performance Metrics section.