COCO-姿势估计数据集

The COCO-姿势估计数据集是 COCO(通用对象上下文)数据集的一个专门版本,专为姿势估计任务设计。它利用 COCO 关键点 2017 图像和标签,以支持训练 YOLO 等模型进行姿势估计任务。

COCO-姿势估计预训练模型

| 模型 | 尺寸 (像素) | mAP姿势估计 50-95(e2e) | mAP姿势估计 50(e2e) | 速度 CPU ONNX (毫秒) | 速度 T4 TensorRT10 (毫秒) | 参数 (M) | FLOPs (B) |

|---|---|---|---|---|---|---|---|

| YOLO26n-姿势估计 | 640 | 57.2 | 83.3 | 40.3 ± 0.5 | 1.8 ± 0.0 | 2.9 | 7.5 |

| YOLO26s-姿势估计 | 640 | 63.0 | 86.6 | 85.3 ± 0.9 | 2.7 ± 0.0 | 10.4 | 23.9 |

| YOLO26m-姿势估计 | 640 | 68.8 | 89.6 | 218.0 ± 1.5 | 5.0 ± 0.1 | 21.5 | 73.1 |

| YOLO26l-姿势估计 | 640 | 70.4 | 90.5 | 275.4 ± 2.4 | 6.5 ± 0.1 | 25.9 | 91.3 |

| YOLO26x-姿势估计 | 640 | 71.6 | 91.6 | 565.4 ± 3.0 | 12.2 ± 0.2 | 57.6 | 201.7 |

主要功能

- COCO-姿势估计基于 COCO 关键点 2017 数据集,该数据集包含 20 万张图像,并标注了用于姿势估计任务的关键点。

- 该数据集支持人体 17 个关键点,有助于进行详细的姿势估计。

- 与 COCO 类似,它提供了标准化的评估指标,包括用于姿势估计任务的物体关键点相似度 (OKS),使其适用于比较模型性能。

数据集结构

COCO-Pose 数据集分为三个子集:

- Train2017:此子集包含来自COCO数据集的56599张图像,标注用于训练姿势估计模型。

- Val2017: 此子集包含 2346 张图像,用于模型训练期间的验证。

- Test2017:此子集包含用于测试和基准评估训练好的模型的图像。此子集的真实标注未公开,结果将提交至 COCO 评估服务器 进行性能评估。

应用

COCO-Pose 数据集专门用于训练和评估深度学习模型,涵盖关键点检测和姿势估计任务,例如 OpenPose。该数据集大量的标注图像和标准化评估指标,使其成为专注于姿势估计的计算机视觉研究人员和从业者的重要资源。

数据集 YAML

YAML(Yet Another Markup Language)文件用于定义数据集配置。它包含数据集的路径、类别及其他相关信息。对于COCO姿势估计数据集, coco-pose.yaml 文件保存在 https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco-pose.yaml.

ultralytics/cfg/datasets/coco-pose.yaml

# Ultralytics 🚀 AGPL-3.0 License - https://ultralytics.com/license

# COCO 2017 Keypoints dataset https://cocodataset.org by Microsoft

# Documentation: https://docs.ultralytics.com/datasets/pose/coco/

# Example usage: yolo train data=coco-pose.yaml

# parent

# ├── ultralytics

# └── datasets

# └── coco-pose ← downloads here (20.1 GB)

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

path: coco-pose # dataset root dir

train: train2017.txt # train images (relative to 'path') 56599 images

val: val2017.txt # val images (relative to 'path') 2346 images

test: test-dev2017.txt # 20288 of 40670 images, submit to https://codalab.lisn.upsaclay.fr/competitions/7403

# Keypoints

kpt_shape: [17, 3] # number of keypoints, number of dims (2 for x,y or 3 for x,y,visible)

flip_idx: [0, 2, 1, 4, 3, 6, 5, 8, 7, 10, 9, 12, 11, 14, 13, 16, 15]

# Classes

names:

0: person

# Keypoint names per class

kpt_names:

0:

- nose

- left_eye

- right_eye

- left_ear

- right_ear

- left_shoulder

- right_shoulder

- left_elbow

- right_elbow

- left_wrist

- right_wrist

- left_hip

- right_hip

- left_knee

- right_knee

- left_ankle

- right_ankle

# Download script/URL (optional)

download: |

from pathlib import Path

from ultralytics.utils import ASSETS_URL

from ultralytics.utils.downloads import download

# Download labels

dir = Path(yaml["path"]) # dataset root dir

urls = [f"{ASSETS_URL}/coco2017labels-pose.zip"]

download(urls, dir=dir.parent)

# Download data

urls = [

"http://images.cocodataset.org/zips/train2017.zip", # 19G, 118k images

"http://images.cocodataset.org/zips/val2017.zip", # 1G, 5k images

"http://images.cocodataset.org/zips/test2017.zip", # 7G, 41k images (optional)

]

download(urls, dir=dir / "images", threads=3)

用法

要在 COCO-Pose 数据集上训练 YOLO26n-pose 模型 100 个 epoch,图像尺寸为 640,您可以使用以下代码片段。有关可用参数的完整列表,请参阅模型训练页面。

训练示例

from ultralytics import YOLO

# Load a model

model = YOLO("yolo26n-pose.pt") # load a pretrained model (recommended for training)

# Train the model

results = model.train(data="coco-pose.yaml", epochs=100, imgsz=640)

# Start training from a pretrained *.pt model

yolo pose train data=coco-pose.yaml model=yolo26n-pose.pt epochs=100 imgsz=640

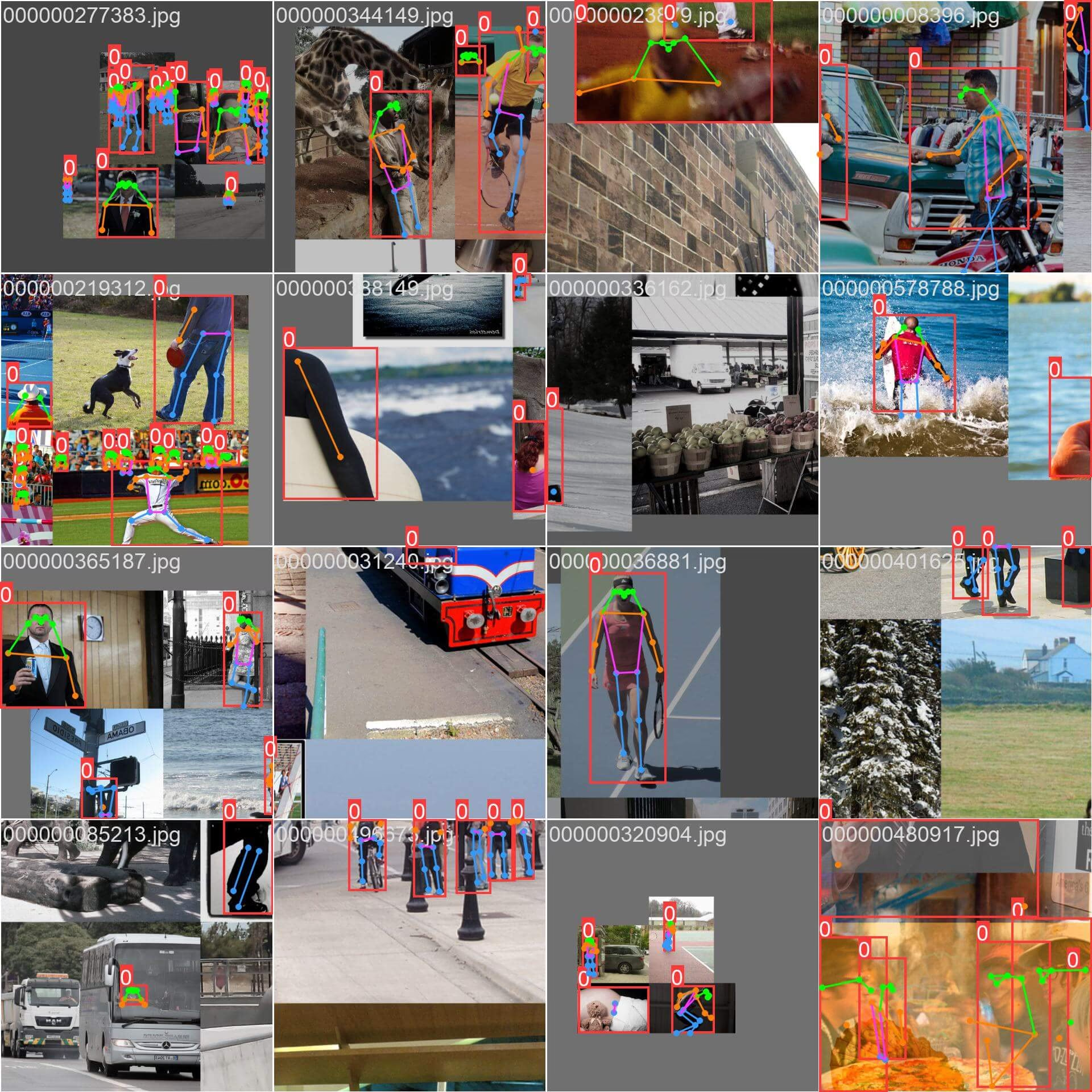

Sample Images 和注释

COCO-Pose 数据集包含一系列多样化的图像,其中人体图像标注了关键点。以下是数据集中一些图像及其相应标注的示例:

- Mosaiced Image:此图像演示了一个由 mosaiced 数据集图像组成的训练批次。Mosaicing 是一种在训练期间使用的技术,它将多个图像组合成一个图像,以增加每个训练批次中对象和场景的多样性。这有助于提高模型泛化到不同对象大小、纵横比和上下文的能力。

该示例展示了 COCO-Pose 数据集中图像的多样性和复杂性,以及在训练过程中使用马赛克增强的好处。

引用和致谢

如果您在研究或开发工作中使用 COCO-姿势估计数据集,请引用以下论文:

@misc{lin2015microsoft,

title={Microsoft COCO: Common Objects in Context},

author={Tsung-Yi Lin and Michael Maire and Serge Belongie and Lubomir Bourdev and Ross Girshick and James Hays and Pietro Perona and Deva Ramanan and C. Lawrence Zitnick and Piotr Dollár},

year={2015},

eprint={1405.0312},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

我们感谢 COCO 联盟为计算机视觉社区创建和维护这一宝贵资源。有关 COCO-姿势估计 数据集及其创建者的更多信息,请访问 COCO 数据集网站。

常见问题

COCO-姿势估计数据集是什么,以及它如何与Ultralytics YOLO结合用于姿势估计?

COCO-Pose 数据集是 COCO(Common Objects in Context)数据集的专门版本,专为姿势估计任务设计。它基于 COCO Keypoints 2017 图像和注释,支持训练 Ultralytics YOLO 等模型进行详细的姿势估计。例如,您可以通过加载预训练模型并使用 yaml 配置进行训练,从而使用 COCO-Pose 数据集训练 YOLO26n-pose 模型。有关训练示例,请参阅训练文档。

我如何在 COCO-Pose 数据集上训练 YOLO26 模型?

在 COCO-Pose 数据集上训练 YOLO26 模型可以通过 python 或 CLI 命令完成。例如,要训练 YOLO26n-pose 模型 100 个 epoch,图像尺寸为 640,您可以按照以下步骤操作:

训练示例

from ultralytics import YOLO

# Load a model

model = YOLO("yolo26n-pose.pt") # load a pretrained model (recommended for training)

# Train the model

results = model.train(data="coco-pose.yaml", epochs=100, imgsz=640)

# Start training from a pretrained *.pt model

yolo pose train data=coco-pose.yaml model=yolo26n-pose.pt epochs=100 imgsz=640

有关训练过程和可用参数的更多详细信息,请查看训练页面。

COCO-姿势估计数据集提供了哪些不同的指标来评估模型性能?

COCO-Pose 数据集提供了多种标准化评估指标用于姿势估计任务,类似于原始 COCO 数据集。关键指标包括对象关键点相似度(OKS),它评估预测关键点与真实标注的准确性。这些指标允许对不同模型进行全面的性能比较。例如,COCO-Pose 预训练模型,如 YOLO26n-pose、YOLO26s-pose 等,在文档中列出了具体的性能指标,例如 mAPpose50-95 和 mAPpose50。

COCO-姿势估计数据集是如何构建和划分的?

COCO-Pose 数据集分为三个子集:

- Train2017:包含56599张COCO图像,标注用于训练姿势估计模型。

- Val2017: 2346 张图像,用于模型训练期间的验证。

- Test2017:用于测试和基准测试训练模型的图像。此子集的真实标注不公开;结果将提交到 COCO 评估服务器进行性能评估。

这些子集有助于有效地组织训练、验证和测试阶段。 有关配置详细信息,请浏览 coco-pose.yaml 文件,该文件可在 GitHub.

COCO-姿势估计数据集的主要特点和应用是什么?

COCO-Pose 数据集扩展了 COCO Keypoints 2017 注释,包含了 17 个人体关键点,从而实现了详细的姿势估计。标准化评估指标(例如 OKS)有助于在不同模型之间进行比较。COCO-Pose 数据集的应用涵盖多个领域,例如体育分析、医疗保健和人机交互,凡是需要详细人体姿势估计的场景。在实际应用中,利用文档中提供的预训练模型(例如 YOLO26n-pose)可以显著简化流程(主要功能)。

如果您在研究或开发工作中使用 COCO-姿势估计数据集,请引用包含以下BibTeX 条目的论文。