Explore Ultralytics YOLOv8

Overview

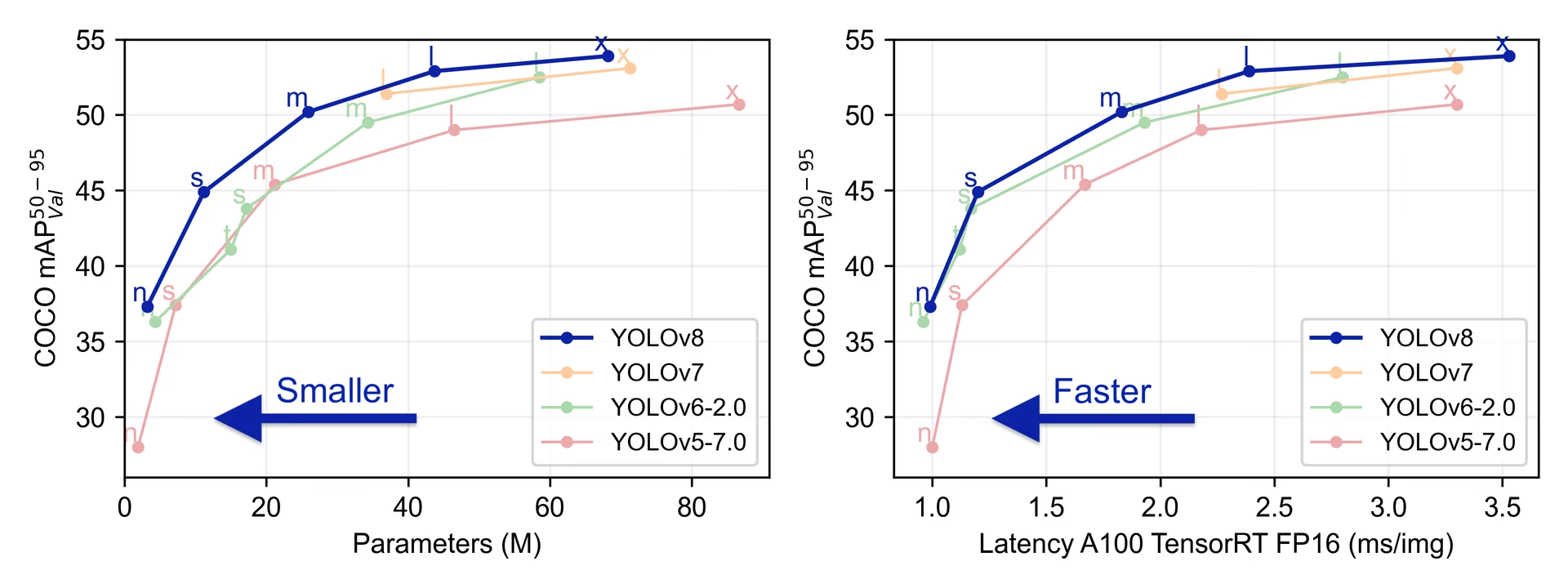

YOLOv8 was released by Ultralytics on January 10, 2023, offering cutting-edge performance in terms of accuracy and speed. Building upon the advancements of previous YOLO versions, YOLOv8 introduced new features and optimizations that make it an ideal choice for various object detection tasks in a wide range of applications.

Key Features of YOLOv8

- Advanced Backbone and Neck Architectures: YOLOv8 employs state-of-the-art backbone and neck architectures, resulting in improved feature extraction and object detection performance.

- Anchor-free Split Ultralytics Head: YOLOv8 adopts an anchor-free split Ultralytics head, which contributes to better accuracy and a more efficient detection process compared to anchor-based approaches.

- Optimized Accuracy-Speed Tradeoff: With a focus on maintaining an optimal balance between accuracy and speed, YOLOv8 is suitable for real-time object detection tasks in diverse application areas.

- Variety of Pretrained Models: YOLOv8 offers a range of pretrained models to cater to various tasks and performance requirements, making it easier to find the right model for your specific use case.

Supported Tasks and Modes

The YOLOv8 series offers a diverse range of models, each specialized for specific tasks in computer vision. These models are designed to cater to various requirements, from object detection to more complex tasks like instance segmentation, pose/keypoints detection, oriented object detection, and classification.

Each variant of the YOLOv8 series is optimized for its respective task, ensuring high performance and accuracy. Additionally, these models are compatible with various operational modes including Inference, Validation, Training, and Export, facilitating their use in different stages of deployment and development.

| Model | Filenames | Task | Inference | Validation | Training | Export |
|---|---|---|---|---|---|---|
| YOLOv8 | yolov8n.pt yolov8s.pt yolov8m.pt yolov8l.pt yolov8x.pt | Detection | ✅ | ✅ | ✅ | ✅ |
| YOLOv8-seg | yolov8n-seg.pt yolov8s-seg.pt yolov8m-seg.pt yolov8l-seg.pt yolov8x-seg.pt | Instance Segmentation | ✅ | ✅ | ✅ | ✅ |
| YOLOv8-pose | yolov8n-pose.pt yolov8s-pose.pt yolov8m-pose.pt yolov8l-pose.pt yolov8x-pose.pt yolov8x-pose-p6.pt | Pose/Keypoints | ✅ | ✅ | ✅ | ✅ |
| YOLOv8-obb | yolov8n-obb.pt yolov8s-obb.pt yolov8m-obb.pt yolov8l-obb.pt yolov8x-obb.pt | Oriented Detection | ✅ | ✅ | ✅ | ✅ |
| YOLOv8-cls | yolov8n-cls.pt yolov8s-cls.pt yolov8m-cls.pt yolov8l-cls.pt yolov8x-cls.pt | Classification | ✅ | ✅ | ✅ | ✅ |

The table lists each YOLOv8 variant, its weight filenames, the task it targets, and the modes it supports. Every variant supports all four modes: Inference, Validation, Training, and Export.
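The filenames in the table follow a regular pattern: a size letter (n, s, m, l, x) plus an optional task suffix. The sketch below builds a filename from those two parts. The helper `weight_filename` and the task keys are illustrative names chosen for this example, not part of the Ultralytics API, and the larger `yolov8x-pose-p6.pt` checkpoint does not fit the pattern and is not covered.

```python
# Task suffixes as they appear in the filenames table; detection has no suffix.
# The dictionary keys are labels chosen for this sketch.
TASK_SUFFIX = {
    "detect": "",
    "segment": "-seg",
    "pose": "-pose",
    "obb": "-obb",
    "classify": "-cls",
}
SIZES = ("n", "s", "m", "l", "x")


def weight_filename(size: str, task: str = "detect") -> str:
    """Build a YOLOv8 weight filename such as 'yolov8s-seg.pt'."""
    if size not in SIZES:
        raise ValueError(f"unknown size {size!r}, expected one of {SIZES}")
    if task not in TASK_SUFFIX:
        raise ValueError(f"unknown task {task!r}, expected one of {sorted(TASK_SUFFIX)}")
    return f"yolov8{size}{TASK_SUFFIX[task]}.pt"


print(weight_filename("n"))             # detection weights, nano size
print(weight_filename("m", "segment"))  # segmentation weights, medium size
```

The resulting string can be passed to `YOLO()` in the same way as the literal filenames used in the usage examples.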

Performance Metrics

See Detection Docs for usage examples with these models trained on COCO, which include 80 pretrained classes.

| Model | size (pixels) | mAP val 50-95 | Speed CPU ONNX (ms) | Speed A100 TensorRT (ms) | params (M) | FLOPs (B) |
|---|---|---|---|---|---|---|
| YOLOv8n | 640 | 37.3 | 80.4 | 0.99 | 3.2 | 8.7 |
| YOLOv8s | 640 | 44.9 | 128.4 | 1.20 | 11.2 | 28.6 |
| YOLOv8m | 640 | 50.2 | 234.7 | 1.83 | 25.9 | 78.9 |
| YOLOv8l | 640 | 52.9 | 375.2 | 2.39 | 43.7 | 165.2 |
| YOLOv8x | 640 | 53.9 | 479.1 | 3.53 | 68.2 | 257.8 |
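One way to read the accuracy-speed tradeoff in this table is to pick the most accurate model that fits a latency budget. The following is a minimal sketch of that selection using the COCO detection numbers above. The function `best_under_budget` and the `"cpu"`/`"a100"` keys are hypothetical names for this example, and the published speeds are only a rough guide to what you will measure on your own hardware.

```python
from typing import Optional

# name: (mAP val 50-95, CPU ONNX ms, A100 TensorRT ms), copied from the COCO detection table
COCO_DETECT = {
    "YOLOv8n": (37.3, 80.4, 0.99),
    "YOLOv8s": (44.9, 128.4, 1.20),
    "YOLOv8m": (50.2, 234.7, 1.83),
    "YOLOv8l": (52.9, 375.2, 2.39),
    "YOLOv8x": (53.9, 479.1, 3.53),
}
# Position of each speed column inside the tuples above.
SPEED_COLUMN = {"cpu": 1, "a100": 2}


def best_under_budget(budget_ms: float, device: str = "cpu") -> Optional[str]:
    """Return the highest-mAP model whose listed speed fits the budget, or None."""
    col = SPEED_COLUMN[device]
    fits = [
        (stats[0], name)
        for name, stats in COCO_DETECT.items()
        if stats[col] <= budget_ms
    ]
    return max(fits)[1] if fits else None


print(best_under_budget(250.0))               # budget against the CPU ONNX column
print(best_under_budget(1.5, device="a100"))  # budget against the A100 TensorRT column
```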

See Detection Docs for usage examples with these models trained on Open Images V7, which include 600 pretrained classes.

| Model | size (pixels) | mAP val 50-95 | Speed CPU ONNX (ms) | Speed A100 TensorRT (ms) | params (M) | FLOPs (B) |
|---|---|---|---|---|---|---|
| YOLOv8n | 640 | 18.4 | 142.4 | 1.21 | 3.5 | 10.5 |
| YOLOv8s | 640 | 27.7 | 183.1 | 1.40 | 11.4 | 29.7 |
| YOLOv8m | 640 | 33.6 | 408.5 | 2.26 | 26.2 | 80.6 |
| YOLOv8l | 640 | 34.9 | 596.9 | 2.43 | 44.1 | 167.4 |
| YOLOv8x | 640 | 36.3 | 860.6 | 3.56 | 68.7 | 260.6 |

See Segmentation Docs for usage examples with these models trained on COCO, which include 80 pretrained classes.

| Model | size (pixels) | mAP box 50-95 | mAP mask 50-95 | Speed CPU ONNX (ms) | Speed A100 TensorRT (ms) | params (M) | FLOPs (B) |
|---|---|---|---|---|---|---|---|
| YOLOv8n-seg | 640 | 36.7 | 30.5 | 96.1 | 1.21 | 3.4 | 12.6 |
| YOLOv8s-seg | 640 | 44.6 | 36.8 | 155.7 | 1.47 | 11.8 | 42.6 |
| YOLOv8m-seg | 640 | 49.9 | 40.8 | 317.0 | 2.18 | 27.3 | 110.2 |
| YOLOv8l-seg | 640 | 52.3 | 42.6 | 572.4 | 2.79 | 46.0 | 220.5 |
| YOLOv8x-seg | 640 | 53.4 | 43.4 | 712.1 | 4.02 | 71.8 | 344.1 |

See Classification Docs for usage examples with these models trained on ImageNet, which include 1000 pretrained classes.

| Model | size (pixels) | acc top1 | acc top5 | Speed CPU ONNX (ms) | Speed A100 TensorRT (ms) | params (M) | FLOPs (B) at 224 |
|---|---|---|---|---|---|---|---|
| YOLOv8n-cls | 224 | 69.0 | 88.3 | 12.9 | 0.31 | 2.7 | 0.5 |
| YOLOv8s-cls | 224 | 73.8 | 91.7 | 23.4 | 0.35 | 6.4 | 1.7 |
| YOLOv8m-cls | 224 | 76.8 | 93.5 | 85.4 | 0.62 | 17.0 | 5.3 |
| YOLOv8l-cls | 224 | 76.8 | 93.5 | 163.0 | 0.87 | 37.5 | 12.3 |
| YOLOv8x-cls | 224 | 79.0 | 94.6 | 232.0 | 1.01 | 57.4 | 19.0 |

See Pose Estimation Docs for usage examples with these models trained on COCO, which include a single pretrained class, 'person'.

| Model | size (pixels) | mAP pose 50-95 | mAP pose 50 | Speed CPU ONNX (ms) | Speed A100 TensorRT (ms) | params (M) | FLOPs (B) |
|---|---|---|---|---|---|---|---|
| YOLOv8n-pose | 640 | 50.4 | 80.1 | 131.8 | 1.18 | 3.3 | 9.2 |
| YOLOv8s-pose | 640 | 60.0 | 86.2 | 233.2 | 1.42 | 11.6 | 30.2 |
| YOLOv8m-pose | 640 | 65.0 | 88.8 | 456.3 | 2.00 | 26.4 | 81.0 |
| YOLOv8l-pose | 640 | 67.6 | 90.0 | 784.5 | 2.59 | 44.4 | 168.6 |
| YOLOv8x-pose | 640 | 69.2 | 90.2 | 1607.1 | 3.73 | 69.4 | 263.2 |
| YOLOv8x-pose-p6 | 1280 | 71.6 | 91.2 | 4088.7 | 10.04 | 99.1 | 1066.4 |

See Oriented Detection Docs for usage examples with these models trained on DOTAv1, which include 15 pretrained classes.

| Model | size (pixels) | mAP test 50 | Speed CPU ONNX (ms) | Speed A100 TensorRT (ms) | params (M) | FLOPs (B) |
|---|---|---|---|---|---|---|
| YOLOv8n-obb | 1024 | 78.0 | 204.77 | 3.57 | 3.1 | 23.3 |
| YOLOv8s-obb | 1024 | 79.5 | 424.88 | 4.07 | 11.4 | 76.3 |
| YOLOv8m-obb | 1024 | 80.5 | 763.48 | 7.61 | 26.4 | 208.6 |
| YOLOv8l-obb | 1024 | 80.7 | 1278.42 | 11.83 | 44.5 | 433.8 |
| YOLOv8x-obb | 1024 | 81.36 | 1759.10 | 13.23 | 69.5 | 676.7 |

YOLOv8 Usage Examples

This section provides simple YOLOv8 training and inference examples. For full documentation on these and other modes, see the Predict, Train, Val and Export docs pages.

Note that the examples below use YOLOv8 Detect models for object detection. For additional supported tasks, see the Segment, Classify, OBB and Pose docs.

Example

PyTorch pretrained *.pt models as well as configuration *.yaml files can be passed to the YOLO() class to create a model instance in Python:

```python
from ultralytics import YOLO

# Load a COCO-pretrained YOLOv8n model
model = YOLO("yolov8n.pt")

# Display model information (optional)
model.info()

# Train the model on the COCO8 example dataset for 100 epochs
results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

# Run inference with the YOLOv8n model on the 'bus.jpg' image
results = model("path/to/bus.jpg")
```

CLI commands are available to directly run the models:

```bash
# Load a COCO-pretrained YOLOv8n model and train it on the COCO8 example dataset for 100 epochs
yolo train model=yolov8n.pt data=coco8.yaml epochs=100 imgsz=640

# Load a COCO-pretrained YOLOv8n model and run inference on the 'bus.jpg' image
yolo predict model=yolov8n.pt source=path/to/bus.jpg
```
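The inference call above returns a list of result objects, one per image. As a rough sketch of how the detections might be read back, the code below prints the class name, confidence, and box corners for each detection. It assumes the `boxes`, `names`, `cls`, `conf`, and `xyxy` attributes described in the Predict docs; the helpers `describe` and `print_detections` are hypothetical names for this example, and `path/to/bus.jpg` is the same placeholder path used above.

```python
from typing import Sequence


def describe(name: str, conf: float, xyxy: Sequence[float]) -> str:
    """Format one detection as 'name confidence [x1, y1, x2, y2]'."""
    x1, y1, x2, y2 = xyxy
    return f"{name} {conf:.2f} [{x1:.0f}, {y1:.0f}, {x2:.0f}, {y2:.0f}]"


def print_detections(weights: str = "yolov8n.pt", source: str = "path/to/bus.jpg") -> None:
    """Run inference and print every detected box (requires the ultralytics package)."""
    from ultralytics import YOLO  # imported here so describe() works without ultralytics

    model = YOLO(weights)
    for result in model(source):      # one result object per input image
        for box in result.boxes:      # one entry per detected object
            name = result.names[int(box.cls)]  # map class index to class name
            print(describe(name, float(box.conf), box.xyxy[0].tolist()))
```

Calling `print_detections()` with a real image path prints one line per detected object.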

Citations and Acknowledgments

Ultralytics YOLOv8 Publication

Ultralytics has not published a formal research paper for YOLOv8 due to the rapidly evolving nature of the models. We focus on advancing the technology and making it easier to use, rather than producing static documentation. For the most up-to-date information on YOLO architecture, features, and usage, please refer to our GitHub repository and documentation.

If you use the YOLOv8 model or any other software from this repository in your work, please cite it using the following format:

```bibtex
@software{yolov8_ultralytics,
  author = {Glenn Jocher and Ayush Chaurasia and Jing Qiu},
  title = {Ultralytics YOLOv8},
  version = {8.0.0},
  year = {2023},
  url = {https://github.com/ultralytics/ultralytics},
  orcid = {0000-0001-5950-6979, 0000-0002-7603-6750, 0000-0003-3783-7069},
  license = {AGPL-3.0}
}
```

Please note that the DOI is pending and will be added to the citation once it is available. YOLOv8 models are provided under AGPL-3.0 and Enterprise licenses.

FAQ

What is YOLOv8 and how does it differ from previous YOLO versions?

YOLOv8 is designed to improve real-time object detection performance. Unlike earlier versions, it combines an anchor-free split Ultralytics head with updated backbone and neck architectures and offers an optimized accuracy-speed tradeoff, which makes it suitable for a wide range of applications. For more details, check the Overview and Key Features sections.

How can I use YOLOv8 for different computer vision tasks?

YOLOv8 supports a wide range of computer vision tasks, including object detection, instance segmentation, pose/keypoints detection, oriented object detection, and classification. Each model variant is optimized for its specific task and compatible with various operational modes like Inference, Validation, Training, and Export. Refer to the Supported Tasks and Modes section for more information.

What are the performance metrics for YOLOv8 models?

YOLOv8 models achieve strong performance across several benchmark datasets. For instance, at a 640-pixel input size the YOLOv8n model reaches an mAP (mean Average Precision) val 50-95 of 37.3 on the COCO dataset with a speed of 0.99 ms on A100 TensorRT, while YOLOv8x reaches 53.9 at 3.53 ms. Detailed performance metrics for each model variant across different tasks and datasets can be found in the Performance Metrics section.

How do I train a YOLOv8 model?

Training a YOLOv8 model can be done using either Python or CLI. Below are examples for training a model using a COCO-pretrained YOLOv8 model on the COCO8 dataset for 100 epochs:

Example

```python
from ultralytics import YOLO

# Load a COCO-pretrained YOLOv8n model
model = YOLO("yolov8n.pt")

# Train the model on the COCO8 example dataset for 100 epochs
results = model.train(data="coco8.yaml", epochs=100, imgsz=640)
```

```bash
yolo train model=yolov8n.pt data=coco8.yaml epochs=100 imgsz=640
```

For further details, visit the Training documentation.
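After training, a common next step is to validate the model and export it for deployment, which are the other two modes listed in the Supported Tasks and Modes section. The following is a minimal sketch under a few assumptions: `validate_and_export` is a hypothetical wrapper name, ONNX is only one possible export format, and the `val()`, `metrics.box.map`, and `export()` calls should be checked against the Val and Export docs for your installed version.

```python
def validate_and_export(weights: str = "yolov8n.pt", data: str = "coco8.yaml", fmt: str = "onnx"):
    """Validate a model on a dataset, then export it (requires the ultralytics package)."""
    from ultralytics import YOLO  # imported here so the module loads without ultralytics

    model = YOLO(weights)

    # Validation mode: compute detection metrics on the dataset's validation split
    metrics = model.val(data=data)
    print(f"mAP50-95: {metrics.box.map:.3f}")

    # Export mode: convert the PyTorch weights to the requested format
    exported_path = model.export(format=fmt)
    return metrics.box.map, exported_path
```

Calling `validate_and_export()` with the defaults evaluates the pretrained YOLOv8n weights on COCO8 and exports them to ONNX. Pass the path to your own trained weights to evaluate a fine-tuned model instead.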

Can I benchmark YOLOv8 models for performance?

Yes, YOLOv8 models can be benchmarked for performance in terms of speed and accuracy across various export formats. You can use PyTorch, ONNX, TensorRT, and more for benchmarking. Below are example commands for benchmarking using Python and CLI:

Example

```python
from ultralytics.utils.benchmarks import benchmark

# Benchmark on GPU
benchmark(model="yolov8n.pt", data="coco8.yaml", imgsz=640, half=False, device=0)
```

```bash
yolo benchmark model=yolov8n.pt data='coco8.yaml' imgsz=640 half=False device=0
```

For additional information, check the Performance Metrics section.