Roboflow 100 Dataset

Roboflow 100, sponsored by Intel, is a groundbreaking object detection benchmark. It comprises 100 diverse datasets and is designed specifically to test how well computer vision models, such as Ultralytics YOLO, adapt to a variety of domains, including healthcare, aerial imagery, and video games.

Licensing

Ultralytics offers two licensing options to accommodate different use cases:

- AGPL-3.0 License: This OSI-approved open-source license is ideal for students and enthusiasts, promoting open collaboration and knowledge sharing. See the LICENSE file for details and visit our AGPL-3.0 license page.

- Enterprise License: Designed for commercial use, this license permits seamless integration of Ultralytics software and AI models into commercial products and services. If your use case involves commercial applications, please get in touch via Ultralytics Licensing.

Key Features

- Diverse Domains: Includes 100 datasets across seven distinct domains: aerial, video games, microscopic, underwater, documents, electromagnetic, and real world.

- Scale: The benchmark comprises 224,714 images across 805 classes, representing over 11,170 hours of data labeling effort.

- Standardization: All images are preprocessed and resized to 640x640 pixels for consistent evaluation.

- Clean Evaluation: Class ambiguity is removed and under-represented classes are filtered out to ensure cleaner model evaluation.

- Annotations: Includes bounding boxes for objects, making the benchmark suitable for training and evaluating object detection models with metrics such as mAP.
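Detection metrics such as mAP are built on the Intersection over Union (IoU) between a predicted box and a ground-truth box. As a minimal illustration (not taken from the Roboflow 100 or Ultralytics code), the sketch below computes IoU for two boxes. It assumes a corner format `(x1, y1, x2, y2)` in pixels, and the function name `box_iou` is hypothetical.

```python
def box_iou(box_a, box_b):
    """Return the IoU of two boxes given as (x1, y1, x2, y2) corners (assumed format)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap; clamp to zero when the boxes do not intersect
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter

    return inter / union if union > 0 else 0.0


# Two 100x100 boxes offset by 50 pixels overlap in a 50x50 region
print(box_iou((0, 0, 100, 100), (50, 50, 150, 150)))
```

A prediction is typically counted as correct only when its IoU with a ground-truth box of the same class exceeds a chosen threshold.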

Dataset Structure

The Roboflow 100 dataset is organized into seven categories, each containing a unique collection of datasets, images, and classes:

- Aerial: 7 datasets, 9,683 images, 24 classes.

- Video games: 7 datasets, 11,579 images, 88 classes.

- Microscopic: 11 datasets, 13,378 images, 28 classes.

- Underwater: 5 datasets, 18,003 images, 39 classes.

- Documents: 8 datasets, 24,813 images, 90 classes.

- Electromagnetic: 12 datasets, 36,381 images, 41 classes.

- Real world: 50 datasets, 110,615 images, 495 classes.

This structure provides a diverse and extensive testing ground for object detection models, reflecting the wide range of real-world application scenarios found in various Ultralytics Solutions.
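As a quick sanity check, the per-category figures listed above can be tallied in a few lines. This is only a sketch: the dictionary and function names are illustrative, and the numbers are copied from the list in this section. The dataset and class counts add up to 100 and 805; the image counts as listed add up to 224,452, slightly below the 224,714 total quoted earlier on this page, and the page does not explain the difference.

```python
# (datasets, images, classes) per category, copied from the list above
RF100_CATEGORIES = {
    "Aerial": (7, 9_683, 24),
    "Video games": (7, 11_579, 88),
    "Microscopic": (11, 13_378, 28),
    "Underwater": (5, 18_003, 39),
    "Documents": (8, 24_813, 90),
    "Electromagnetic": (12, 36_381, 41),
    "Real world": (50, 110_615, 495),
}


def category_totals(categories):
    """Sum datasets, images, and classes over all categories."""
    datasets = sum(d for d, _, _ in categories.values())
    images = sum(i for _, i, _ in categories.values())
    classes = sum(c for _, _, c in categories.values())
    return datasets, images, classes


print(category_totals(RF100_CATEGORIES))  # (100, 224452, 805)
```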

Benchmarking

Dataset benchmarking means evaluating the performance of machine learning models on specific datasets using standardized metrics. Common metrics include precision, mean average precision (mAP), and F1 score. You can learn more about these in our YOLO performance metrics guide.
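To make two of these metrics concrete, the sketch below derives precision, recall, and F1 score from raw detection counts. It is a generic illustration rather than the Ultralytics implementation; the function name and the example counts are made up for demonstration.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return precision, recall, f1


# Hypothetical counts: 80 correct detections, 20 spurious ones, 40 missed objects
p, r, f1 = precision_recall_f1(tp=80, fp=20, fn=40)
print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

mAP extends this idea by averaging precision over recall levels and then over classes, usually at one or more IoU thresholds.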

Benchmarking Results

Benchmarking results produced with the provided script are stored in the ultralytics-benchmarks/ directory, specifically in evaluation.txt.
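Once the results file exists, it can be summarized with a short script. The sketch below assumes that each line of evaluation.txt has the form `dataset_name: score`; that layout is an assumption and should be checked against the file actually produced. The dataset names and scores in the sample are placeholders, not real results.

```python
from statistics import mean


def parse_eval_lines(lines):
    """Parse lines of the assumed form 'dataset_name: score' into a dict."""
    scores = {}
    for line in lines:
        name, sep, value = line.strip().rpartition(":")
        if not sep:
            continue  # skip blank lines and lines without a colon
        try:
            scores[name.strip()] = float(value)
        except ValueError:
            continue  # skip lines whose value is not a number
    return scores


# Placeholder content standing in for evaluation.txt
sample = ["dataset-a: 0.50", "dataset-b: 0.25", "", "not a score line"]
scores = parse_eval_lines(sample)
print(scores)
print(mean(scores.values()))
```

To summarize a real run, pass `Path("ultralytics-benchmarks/evaluation.txt").read_text().splitlines()` (with `from pathlib import Path`) instead of the sample list.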

Benchmarking Example

The following script shows how to programmatically benchmark an Ultralytics YOLO model (e.g., YOLO26s) on all 100 datasets in the Roboflow 100 benchmark using the RF100Benchmark class.

import os
import shutil
from pathlib import Path

from ultralytics.utils.benchmarks import RF100Benchmark

# Initialize RF100Benchmark and set API key
benchmark = RF100Benchmark()
benchmark.set_key(api_key="YOUR_ROBOFLOW_API_KEY")

# Parse dataset and define file paths
names, cfg_yamls = benchmark.parse_dataset()
val_log_file = Path("ultralytics-benchmarks") / "validation.txt"
eval_log_file = Path("ultralytics-benchmarks") / "evaluation.txt"

# Run benchmarks on each dataset in RF100
for ind, path in enumerate(cfg_yamls):
    path = Path(path)
    if path.exists():
        # Fix YAML file and run training
        benchmark.fix_yaml(str(path))
        os.system(f"yolo detect train data={path} model=yolo26s.pt epochs=1 batch=16")

        # Run validation and evaluate
        os.system(f"yolo detect val data={path} model=runs/detect/train/weights/best.pt > {val_log_file} 2>&1")
        benchmark.evaluate(str(path), str(val_log_file), str(eval_log_file), ind)

        # Remove the 'runs' directory
        runs_dir = Path.cwd() / "runs"
        shutil.rmtree(runs_dir)
    else:
        print("YAML file path does not exist")
        continue

print("RF100 Benchmarking completed!")
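The script above builds shell command strings and runs them with `os.system`. One possible variation, sketched below, is to build each `yolo` command as an argument list and run it with `subprocess.run`, so the dataset path is not re-parsed by a shell and the exit code can be checked. The helper names (`train_command`, `val_command`, `run_logged`) and the example path are hypothetical; the `yolo detect train` and `yolo detect val` arguments mirror those used in the script.

```python
import subprocess


def train_command(data, model="yolo26s.pt", epochs=1, batch=16):
    """Build the training command as an argument list (no shell involved)."""
    return ["yolo", "detect", "train", f"data={data}", f"model={model}",
            f"epochs={epochs}", f"batch={batch}"]


def val_command(data, weights="runs/detect/train/weights/best.pt"):
    """Build the validation command as an argument list."""
    return ["yolo", "detect", "val", f"data={data}", f"model={weights}"]


def run_logged(cmd, log_file=None):
    """Run cmd and return its exit code, optionally writing stdout and stderr to log_file."""
    if log_file is None:
        return subprocess.run(cmd).returncode
    with open(log_file, "w", encoding="utf-8") as f:
        return subprocess.run(cmd, stdout=f, stderr=subprocess.STDOUT).returncode


# Only print the commands here; call run_logged(...) to execute them
print(train_command("rf100/example-dataset/data.yaml"))
print(val_command("rf100/example-dataset/data.yaml"))
```

Inside the benchmarking loop, `run_logged(val_command(path), val_log_file)` would play the role of the redirected `os.system` call, and a non-zero return value could be used to skip evaluation for a dataset whose training or validation failed.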

Applications

Roboflow 100 is invaluable for a range of applications in computer vision and deep learning. Researchers and engineers can use this benchmark to:

- Evaluate the performance of object detection models in a multi-domain context.

- Test the adaptability and robustness of models in real-world scenarios beyond common benchmark datasets such as COCO or PASCAL VOC.

- Assess the capabilities of object detection models across diverse datasets, including specialized areas such as healthcare, aerial imagery, and video games.

- Compare model performance across different neural network architectures and optimization techniques.

- Identify domain-specific challenges that may require specialized model training tips or fine-tuning approaches such as transfer learning.

For more ideas and inspiration on real-world applications, explore our guides on hands-on projects or check out the Ultralytics Platform for streamlined model training and deployment.

Usage

The Roboflow 100 dataset, including metadata and download links, is available in the official Roboflow 100 GitHub repository. You can access and use the dataset directly from there for your benchmarking needs. The Ultralytics RF100Benchmark class simplifies downloading and preparing these datasets for use with Ultralytics models.

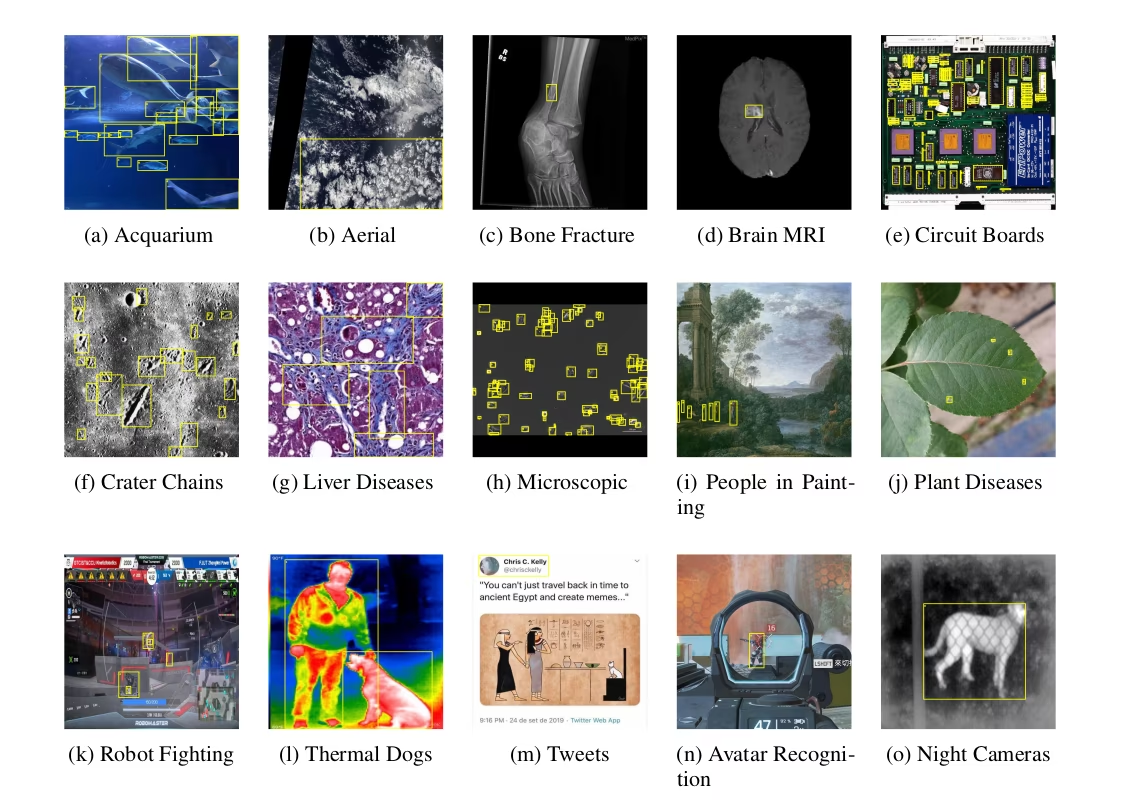

Sample Data and Annotations

Roboflow 100 consists of datasets with diverse images captured from various angles and domains. The accompanying examples of annotated images from the RF100 benchmark showcase the variety of objects and scenes. Techniques such as data augmentation can further increase diversity during training.
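As a small illustration of what an augmentation does to an annotation, the sketch below mirrors a bounding box label for a horizontally flipped image. It assumes the YOLO label layout `(class_id, x_center, y_center, width, height)` with coordinates normalized to [0, 1]; the function name is hypothetical, and this is not the augmentation pipeline Ultralytics applies during training.

```python
def hflip_yolo_label(label):
    """Mirror a normalized YOLO label (class_id, x_center, y_center, width, height) horizontally."""
    class_id, x_center, y_center, width, height = label
    # Only the horizontal center moves; box size and vertical position are unchanged
    return (class_id, 1.0 - x_center, y_center, width, height)


original = (0, 0.25, 0.5, 0.2, 0.4)
flipped = hflip_yolo_label(original)
print(flipped)  # (0, 0.75, 0.5, 0.2, 0.4)
```

The image itself must be flipped with the same transform so that boxes and pixels stay aligned.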

The diversity of the Roboflow 100 benchmark represents a significant advance over traditional benchmarks, which often focus on optimizing a single metric within a limited domain. This comprehensive approach helps develop more robust and versatile computer vision models that perform well across many different scenarios.

Citations and Acknowledgments

If you use the Roboflow 100 dataset in your research or development work, please cite the original paper:

@misc{rf100benchmark,
    Author = {Floriana Ciaglia and Francesco Saverio Zuppichini and Paul Guerrie and Mark McQuade and Jacob Solawetz},
    Title = {Roboflow 100: A Rich, Multi-Domain Object Detection Benchmark},
    Year = {2022},
    Eprint = {arXiv:2211.13523},
    url = {https://arxiv.org/abs/2211.13523}
}

We extend our gratitude to the Roboflow team and all contributors for their significant efforts in creating and maintaining the Roboflow 100 dataset as a valuable resource for the computer vision community.

If you are interested in exploring more datasets to enhance your object detection and machine learning projects, visit our comprehensive dataset collection, which includes a variety of other detection datasets.

FAQ

What is the Roboflow 100 dataset, and why is it significant for object detection?

The Roboflow 100 dataset is a benchmark for object detection models. It comprises 100 diverse datasets covering domains such as healthcare, aerial imagery, and video games. Its significance lies in providing a standardized way to test the adaptability and robustness of models across a wide range of real-world scenarios, going beyond traditional benchmarks that are often limited to a single domain.

Which domains are covered by the Roboflow 100 dataset?

The Roboflow 100 dataset spans seven diverse domains, each posing unique challenges for object detection models:

- Aerial: 7 datasets (e.g., satellite imagery, drone views).

- Video games: 7 datasets (e.g., objects from various game environments).

- Microscopic: 11 datasets (e.g., cells, particles).

- Underwater: 5 datasets (e.g., marine life, submerged objects).

- Documents: 8 datasets (e.g., text regions, form elements).

- Electromagnetic: 12 datasets (e.g., radar signatures, spectral data visualizations).

- Real world: 50 datasets (a broad category including everyday objects, scenes, retail, and more).

This variety makes RF100 an excellent resource for assessing how well computer vision models generalize.

What should I include when citing the Roboflow 100 dataset in my research?

When using the Roboflow 100 dataset, please cite the original paper to give credit to its creators. Here is the recommended BibTeX citation:

@misc{rf100benchmark,
    Author = {Floriana Ciaglia and Francesco Saverio Zuppichini and Paul Guerrie and Mark McQuade and Jacob Solawetz},
    Title = {Roboflow 100: A Rich, Multi-Domain Object Detection Benchmark},
    Year = {2022},
    Eprint = {arXiv:2211.13523},
    url = {https://arxiv.org/abs/2211.13523}
}

For further exploration, consider visiting our comprehensive dataset collection or browsing other detection datasets compatible with Ultralytics models.