Roboflow 100 Dataset

Roboflow 100, sponsored by Intel, is an object detection benchmark made up of 100 diverse datasets. It is designed to test how well computer vision models, such as Ultralytics YOLO models, adapt to varied domains, including healthcare, aerial imagery, and video games.

Licensing

Ultralytics offers two licensing options to accommodate different use cases:

- AGPL-3.0 License: This OSI-approved open-source license is ideal for students and enthusiasts, promoting open collaboration and knowledge sharing. See the LICENSE file for more details and visit our AGPL-3.0 License page.

- Enterprise License: Designed for commercial use, this license allows for the seamless integration of Ultralytics software and AI models into commercial products and services. If your scenario involves commercial applications, please reach out via Ultralytics Licensing.

Key Features

- Diverse Domains: Includes 100 datasets across seven distinct domains: Aerial, Video games, Microscopic, Underwater, Documents, Electromagnetic, and Real World.

- Scale: The benchmark comprises 224,714 images across 805 classes, representing over 11,170 hours of data labeling effort.

- Standardization: All images are preprocessed and resized to 640x640 pixels for consistent evaluation.

- Clean Evaluation: Reduces class ambiguity and filters out underrepresented classes so that model evaluation is cleaner.

- Annotations: Includes bounding boxes for objects, suitable for training and evaluating object detection models using metrics like mAP.
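
As a rough illustration of the standardization and annotation points above, the sketch below rescales a pixel-space bounding box when an image is resized to 640x640, then converts it to the normalized `class x_center y_center width height` label format used by YOLO models. It assumes a plain stretch resize with no letterboxing; the source does not say which resize method RF100 uses. The function names `rescale_box` and `to_yolo_label` are hypothetical helpers, not part of any library.

```python
TARGET = 640  # RF100 images are resized to 640x640


def rescale_box(box, orig_w, orig_h, target=TARGET):
    """Map (x_min, y_min, x_max, y_max) from the original image to the resized one.

    Assumes a stretch resize (each axis scaled independently), which is an assumption.
    """
    x1, y1, x2, y2 = box
    return (
        x1 * target / orig_w,
        y1 * target / orig_h,
        x2 * target / orig_w,
        y2 * target / orig_h,
    )


def to_yolo_label(class_id, box, img_w=TARGET, img_h=TARGET):
    """Convert a pixel-space box to a normalized 'class xc yc w h' label line."""
    x1, y1, x2, y2 = box
    xc = (x1 + x2) / 2 / img_w
    yc = (y1 + y2) / 2 / img_h
    w = (x2 - x1) / img_w
    h = (y2 - y1) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


# Illustrative box in a 1280x720 image
resized = rescale_box((320, 180, 960, 540), orig_w=1280, orig_h=720)
print(resized)
print(to_yolo_label(0, resized))
```

If the preprocessing used letterboxing instead, the scale factor would be shared by both axes and a padding offset would need to be added before normalizing.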

Dataset Structure

The Roboflow 100 dataset is organized into seven categories, each containing a unique collection of datasets, images, and classes:

- Aerial: 7 datasets, 9,683 images, 24 classes.

- Video Games: 7 datasets, 11,579 images, 88 classes.

- Microscopic: 11 datasets, 13,378 images, 28 classes.

- Underwater: 5 datasets, 18,003 images, 39 classes.

- Documents: 8 datasets, 24,813 images, 90 classes.

- Electromagnetic: 12 datasets, 36,381 images, 41 classes.

- Real World: 50 datasets, 110,615 images, 495 classes.

This structure provides a diverse and extensive testing ground for object detection models, reflecting a wide array of real-world application scenarios found in various Ultralytics Solutions.
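
The per-category figures above can be tabulated and totaled with a few lines of Python. This is only a sanity-check sketch using the numbers as listed; the dictionary name `RF100_CATEGORIES` is hypothetical. The dataset and class totals match the headline figures (100 datasets, 805 classes). The image counts as listed sum to 224,452, slightly below the 224,714 quoted under Key Features; the source does not explain the difference.

```python
# (datasets, images, classes) per category, copied from the list above
RF100_CATEGORIES = {
    "Aerial": (7, 9_683, 24),
    "Video Games": (7, 11_579, 88),
    "Microscopic": (11, 13_378, 28),
    "Underwater": (5, 18_003, 39),
    "Documents": (8, 24_813, 90),
    "Electromagnetic": (12, 36_381, 41),
    "Real World": (50, 110_615, 495),
}

# Column-wise totals across all seven categories
n_datasets, n_images, n_classes = (sum(col) for col in zip(*RF100_CATEGORIES.values()))
print(f"datasets={n_datasets} images={n_images:,} classes={n_classes}")

# Share of images per category, largest first
for name, (_, images, _) in sorted(
    RF100_CATEGORIES.items(), key=lambda kv: kv[1][1], reverse=True
):
    print(f"{name:<16} {images / n_images:6.1%}")
```

By these counts the Real World category accounts for roughly half of all images, which is worth keeping in mind when averaging scores across the benchmark.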

Benchmarking

Dataset benchmarking involves evaluating the performance of machine learning models on specific datasets using standardized metrics. Common metrics include accuracy, mean Average Precision (mAP), and F1-score. You can learn more about these in our YOLO Performance Metrics guide.
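
To make these metrics concrete, here is a minimal sketch of how precision, recall, and F1-score follow from detection counts, along with the Intersection over Union (IoU) calculation commonly used to decide whether a predicted box matches a ground-truth box. The counts and boxes are made-up illustrative values, and the function names are hypothetical; this is not the Ultralytics implementation.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0


def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Two 10x10 boxes overlapping by half their width
print(iou((0, 0, 10, 10), (5, 0, 15, 10)))

# Illustrative counts: 80 correct detections, 20 spurious, 40 missed
p, r, f1 = precision_recall_f1(tp=80, fp=20, fn=40)
print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

mAP builds on the same ingredients: it averages the area under the precision-recall curve over classes, and often over several IoU thresholds.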

Benchmarking Results

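The benchmarking script below writes its results to the ultralytics-benchmarks/ directory, specifically to evaluation.txt. The raw validation output is redirected to validation.txt in the same directory and is overwritten for each dataset.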

Benchmarking Example

The following script demonstrates how to programmatically benchmark an Ultralytics YOLO model (e.g., YOLO26s) on all 100 datasets within the Roboflow 100 benchmark using the RF100Benchmark class.

import os
import shutil
from pathlib import Path

from ultralytics.utils.benchmarks import RF100Benchmark

# Initialize RF100Benchmark and set API key
benchmark = RF100Benchmark()
benchmark.set_key(api_key="YOUR_ROBOFLOW_API_KEY")

# Parse dataset and define file paths
names, cfg_yamls = benchmark.parse_dataset()
val_log_file = Path("ultralytics-benchmarks") / "validation.txt"
eval_log_file = Path("ultralytics-benchmarks") / "evaluation.txt"

# Run benchmarks on each dataset in RF100
for ind, path in enumerate(cfg_yamls):
    path = Path(path)
    if path.exists():
        # Fix YAML file and run training
        benchmark.fix_yaml(str(path))
        os.system(f"yolo detect train data={path} model=yolo26s.pt epochs=1 batch=16")

        # Run validation and evaluate
        os.system(f"yolo detect val data={path} model=runs/detect/train/weights/best.pt > {val_log_file} 2>&1")
        benchmark.evaluate(str(path), str(val_log_file), str(eval_log_file), ind)

        # Remove the 'runs' directory
        runs_dir = Path.cwd() / "runs"
        shutil.rmtree(runs_dir)
    else:
        print("YAML file path does not exist")
        continue

print("RF100 Benchmarking completed!")
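
Once the run finishes, the per-dataset scores in evaluation.txt can be aggregated. The sketch below assumes each line has the form `dataset_name: value`; that layout is an assumption and should be checked against the file your run produces. The helper names `parse_eval_log` and `summarize` are hypothetical, and the sample text is placeholder data, not real benchmark results.

```python
from pathlib import Path
from statistics import mean


def parse_eval_log(text):
    """Parse lines assumed to look like 'dataset_name: 0.123' into a dict of floats."""
    scores = {}
    for line in text.splitlines():
        name, sep, value = line.rpartition(":")
        if not sep:
            continue  # no colon on this line, so it is not a score line
        try:
            scores[name.strip()] = float(value)
        except ValueError:
            continue  # skip lines that do not end in a number
    return scores


def summarize(path="ultralytics-benchmarks/evaluation.txt"):
    """Return the mean score across datasets, or None if the file is missing or empty."""
    log = Path(path)
    if not log.exists():
        return None
    scores = parse_eval_log(log.read_text(encoding="utf-8"))
    return mean(scores.values()) if scores else None


# Placeholder text for illustration only, not real benchmark results
sample = "dataset-a: 0.50\ndataset-b: 0.25\nnot a score line\n"
parsed = parse_eval_log(sample)
print(parsed)
print(mean(parsed.values()))
```

A plain mean weights every dataset equally; since the categories differ widely in size, a per-category breakdown may be more informative than a single number.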

Applications

Roboflow 100 supports a range of computer vision and deep learning work. Researchers and engineers can use this benchmark to:

- Evaluate the performance of object detection models in a multi-domain context.

- Test the adaptability and robustness of models to real-world scenarios beyond common benchmark datasets like COCO or PASCAL VOC.

- Benchmark the capabilities of object detection models across diverse datasets, including specialized areas like healthcare, aerial imagery, and video games.

- Compare model performance across different neural network architectures and optimization techniques.

- Identify domain-specific challenges that may require specialized model training tips or fine-tuning approaches like transfer learning.

For more ideas and inspiration on real-world applications, explore our guides on practical projects or check out Ultralytics Platform for streamlined model training and deployment.

Usage

The Roboflow 100 dataset, including metadata and download links, is available on the official Roboflow 100 GitHub repository. You can access and utilize the dataset directly from there for your benchmarking needs. The Ultralytics RF100Benchmark utility simplifies the process of downloading and preparing these datasets for use with Ultralytics models.

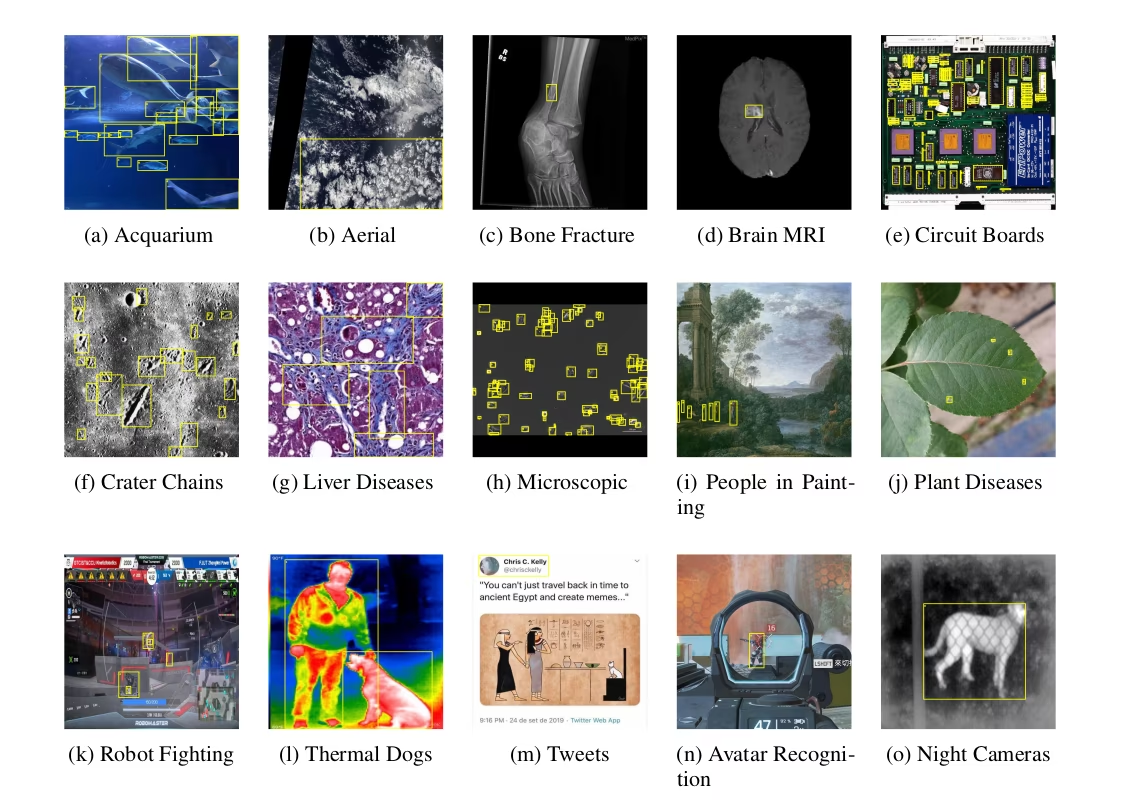

Sample Data and Annotations

Roboflow 100 consists of datasets with diverse images captured from various angles and domains. Below are examples of annotated images included in the RF100 benchmark, showcasing the variety of objects and scenes. Techniques like data augmentation can further enhance the diversity during training.

The diversity seen in the Roboflow 100 benchmark represents a significant advancement from traditional benchmarks, which often focus on optimizing a single metric within a limited domain. This comprehensive approach aids in developing more robust and versatile computer vision models capable of performing well across a multitude of different scenarios.

Citations and Acknowledgments

If you use the Roboflow 100 dataset in your research or development work, please cite the original paper:

@misc{rf100benchmark,
    Author = {Floriana Ciaglia and Francesco Saverio Zuppichini and Paul Guerrie and Mark McQuade and Jacob Solawetz},
    Title = {Roboflow 100: A Rich, Multi-Domain Object Detection Benchmark},
    Year = {2022},
    Eprint = {arXiv:2211.13523},
    url = {https://arxiv.org/abs/2211.13523}
}

We extend our gratitude to the Roboflow team and all contributors for their significant efforts in creating and maintaining the Roboflow 100 dataset as a valuable resource for the computer vision community.

If you are interested in exploring more datasets to enhance your object detection and machine learning projects, feel free to visit our comprehensive dataset collection, which includes a variety of other detection datasets.

FAQ

What is the Roboflow 100 dataset, and why is it significant for object detection?

The Roboflow 100 dataset is a benchmark for object detection models. It comprises 100 diverse datasets covering domains like healthcare, aerial imagery, and video games. Its significance lies in providing a standardized way to test model adaptability and robustness across a wide range of real-world scenarios, moving beyond traditional, often domain-limited, benchmarks.

Which domains are covered by the Roboflow 100 dataset?

The Roboflow 100 dataset spans seven diverse domains, offering unique challenges for object detection models:

- Aerial: 7 datasets (e.g., satellite imagery, drone views).

- Video Games: 7 datasets (e.g., objects from various game environments).

- Microscopic: 11 datasets (e.g., cells, particles).

- Underwater: 5 datasets (e.g., marine life, submerged objects).

- Documents: 8 datasets (e.g., text regions, form elements).

- Electromagnetic: 12 datasets (e.g., radar signatures, spectral data visualizations).

- Real World: 50 datasets (a broad category including everyday objects, scenes, retail, etc.).

This variety makes RF100 an excellent resource for assessing the generalizability of computer vision models.

What should I include when citing the Roboflow 100 dataset in my research?

When using the Roboflow 100 dataset, please cite the original paper to give credit to the creators. Here is the recommended BibTeX citation:

@misc{rf100benchmark,
    Author = {Floriana Ciaglia and Francesco Saverio Zuppichini and Paul Guerrie and Mark McQuade and Jacob Solawetz},
    Title = {Roboflow 100: A Rich, Multi-Domain Object Detection Benchmark},
    Year = {2022},
    Eprint = {arXiv:2211.13523},
    url = {https://arxiv.org/abs/2211.13523}
}

For further exploration, consider visiting our comprehensive dataset collection or browsing other detection datasets compatible with Ultralytics models.