Workouts Monitoring using Ultralytics YOLO26

Monitoring workouts through pose estimation with Ultralytics YOLO26 enhances exercise assessment by accurately tracking key body landmarks and joints in real time. This technology provides instant feedback on exercise form, tracks workout routines, and measures performance metrics, optimizing training sessions for users and trainers alike.

Watch: How to Monitor Workout Exercises with Ultralytics YOLO | Squats, Leg Extension, Pushups and More

Advantages of Workouts Monitoring

- Optimized Performance: Tailoring workouts based on monitoring data for better results.

- Goal Achievement: Track and adjust fitness goals for measurable progress.

- Personalization: Customized workout plans based on individual data for effectiveness.

- Health Awareness: Early detection of patterns indicating health issues or over-training.

- Informed Decisions: Data-driven decisions for adjusting routines and setting realistic goals.

Real-World Applications

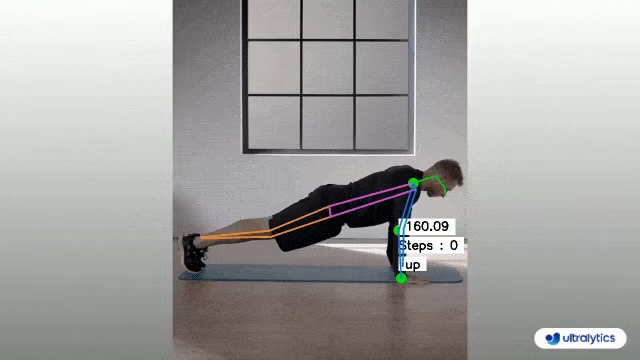

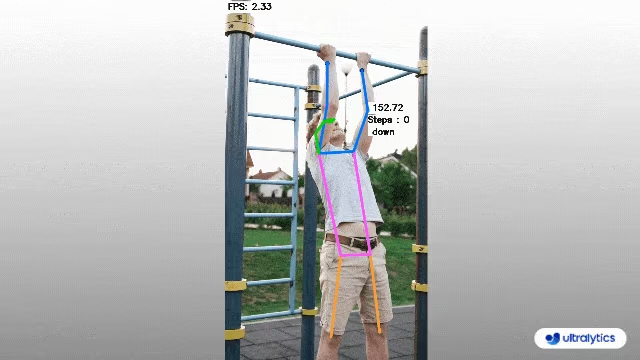

| Workouts Monitoring | Workouts Monitoring |

|---|---|

|  |

| PushUps Counting | PullUps Counting |

Workouts Monitoring using Ultralytics YOLO

# Run a workout example
yolo solutions workout show=True

# Pass a source video
yolo solutions workout source="path/to/video.mp4"

# Use keypoints for pushups
yolo solutions workout kpts="[6, 8, 10]"

import cv2

from ultralytics import solutions

cap = cv2.VideoCapture("path/to/video.mp4")
assert cap.isOpened(), "Error reading video file"

# Video writer
w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
video_writer = cv2.VideoWriter("workouts_output.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

# Init AIGym
gym = solutions.AIGym(
    show=True,  # display the frame
    kpts=[6, 8, 10],  # keypoints for monitoring specific exercise, by default it's for pushup
    model="yolo26n-pose.pt",  # path to the YOLO26 pose estimation model file
    # line_width=2,  # adjust the line width for bounding boxes and text display
)

# Process video
while cap.isOpened():
    success, im0 = cap.read()
    if not success:
        print("Video frame is empty or processing is complete.")
        break

    results = gym(im0)

    # print(results)  # access the output

    video_writer.write(results.plot_im)  # write the processed frame.

cap.release()
video_writer.release()
cv2.destroyAllWindows()  # destroy all opened windows

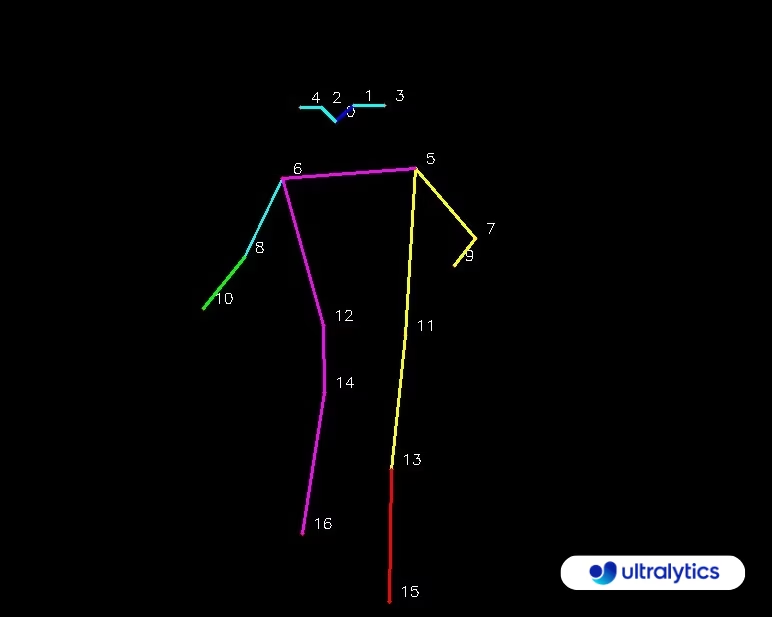

KeyPoints Map
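The map follows the 17-keypoint COCO layout, assuming the pose model was trained on COCO-Pose as the pretrained YOLO pose weights are. As a reading aid, the sketch below lists those indices by name. `COCO_KEYPOINTS`, `KPT_INDEX`, and `kpts_for` are hypothetical helpers for illustration, not part of the Ultralytics API. They confirm that the default `kpts=[6, 8, 10]` is the right shoulder, right elbow, and right wrist.

```python
# COCO-17 keypoint order (list position == keypoint index), assumed to match the pose model.
COCO_KEYPOINTS = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]

# Reverse lookup: joint name -> index.
KPT_INDEX = {name: i for i, name in enumerate(COCO_KEYPOINTS)}


def kpts_for(*names: str) -> list[int]:
    """Translate joint names into the index list expected by the kpts argument."""
    return [KPT_INDEX[name] for name in names]


# Right shoulder -> right elbow -> right wrist, i.e. the push-up default.
print(kpts_for("right_shoulder", "right_elbow", "right_wrist"))  # [6, 8, 10]
```

The same lookup gives, for example, `[11, 13, 15]` for the left hip, knee, and ankle.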

AIGym Arguments

Here's a table with the AIGym arguments:

| Argument | Type | Default | Description |
|---|---|---|---|
| model | str | None | Path to an Ultralytics YOLO model file. |
| up_angle | float | 145.0 | Joint angle (in degrees) above which the pose is considered 'up'. |
| down_angle | float | 90.0 | Joint angle (in degrees) below which the pose is considered 'down'. |
| kpts | list[int] | [6, 8, 10] | List of three keypoint indices used for monitoring workouts; the joint angle is measured at the middle keypoint. These keypoints correspond to body joints or parts, such as shoulders, elbows, and wrists, for exercises like push-ups, pull-ups, squats, and ab workouts. |
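The `up_angle` and `down_angle` thresholds act on the angle formed at the middle of the three `kpts` (the elbow, for the default). The sketch below is a simplified, standalone illustration of that idea, not the AIGym source. `joint_angle` measures the angle at `b` between the segments `b→a` and `b→c`. The hypothetical `RepCounter` class counts one repetition each time the angle drops below `down_angle` after having been above `up_angle`. AIGym's exact transition rules may differ.

```python
import math

Point = tuple[float, float]  # (x, y) pixel coordinates


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle in degrees at point b, folded into the range 0-180."""
    radians = math.atan2(c[1] - b[1], c[0] - b[0]) - math.atan2(a[1] - b[1], a[0] - b[0])
    angle = abs(math.degrees(radians))
    return 360.0 - angle if angle > 180.0 else angle


class RepCounter:
    """Two-threshold counter: a rep is counted on each 'up' -> 'down' transition."""

    def __init__(self, up_angle: float = 145.0, down_angle: float = 90.0):
        self.up_angle = up_angle
        self.down_angle = down_angle
        self.stage = None  # unknown until a threshold is first crossed
        self.count = 0

    def update(self, angle: float) -> int:
        if angle < self.down_angle:
            if self.stage == "up":
                self.count += 1  # full range of motion completed
            self.stage = "down"
        elif angle > self.up_angle:
            self.stage = "up"
        # Angles between the two thresholds leave the stage unchanged.
        return self.count


# Usage: a made-up sequence of elbow angles (degrees), one per frame.
counter = RepCounter(up_angle=145.0, down_angle=90.0)
for angle in [170, 120, 80, 100, 160, 85, 150, 60]:
    counter.update(angle)
print(counter.count)  # 3
```

The gap between the two thresholds is what keeps small jitters around a single value from being counted as extra repetitions.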

The AIGym solution also supports a range of object tracking parameters:

| Argument | Type | Default | Description |
|---|---|---|---|
| tracker | str | 'botsort.yaml' | Specifies the tracking algorithm to use, e.g., bytetrack.yaml or botsort.yaml. |
| conf | float | 0.1 | Sets the confidence threshold for detections; lower values allow more objects to be tracked but may include false positives. |
| iou | float | 0.7 | Sets the Intersection over Union (IoU) threshold for filtering overlapping detections. |
| classes | list | None | Filters results by class index. For example, classes=[0, 2, 3] only tracks the specified classes. |
| verbose | bool | True | Controls whether tracking results are logged to the console. |
| device | str | None | Specifies the device for inference (e.g., cpu, cuda:0 or 0). Allows users to select between CPU, a specific GPU, or other compute devices for model execution. |

Additionally, the following visualization settings can be applied:

| Argument | Type | Default | Description |
|---|---|---|---|
| show | bool | False | If True, displays the annotated images or videos in a window. Useful for immediate visual feedback during development or testing. |
| line_width | int or None | None | Specifies the line width of bounding boxes. If None, the line width is automatically adjusted based on the image size. Provides visual customization for clarity. |
| show_conf | bool | True | Displays the confidence score for each detection alongside the label. Gives insight into the model's certainty for each detection. |
| show_labels | bool | True | Displays labels for each detection in the visual output. Provides immediate understanding of detected objects. |

FAQ

How do I monitor my workouts using Ultralytics YOLO26?

To monitor your workouts using Ultralytics YOLO26, you can use its pose estimation capabilities to track and analyze key body landmarks and joints in real time. This lets you receive instant feedback on your exercise form, count repetitions, and measure performance metrics. The example below is configured for push-ups (kpts=[6, 8, 10]); for pull-ups, ab workouts, or other exercises, set kpts to the joints that exercise moves:

import cv2

from ultralytics import solutions

cap = cv2.VideoCapture("path/to/video.mp4")
assert cap.isOpened(), "Error reading video file"

gym = solutions.AIGym(
    line_width=2,
    show=True,
    kpts=[6, 8, 10],
)

while cap.isOpened():
    success, im0 = cap.read()
    if not success:
        print("Video frame is empty or processing is complete.")
        break
    results = gym(im0)  # results.plot_im holds the annotated frame

cap.release()  # free the video file handle
cv2.destroyAllWindows()

For further customization and settings, you can refer to the AIGym section in the documentation.

What are the benefits of using Ultralytics YOLO26 for workout monitoring?

Using Ultralytics YOLO26 for workout monitoring provides several key benefits:

- Optimized Performance: By tailoring workouts based on monitoring data, you can achieve better results.

- Goal Achievement: Easily track and adjust fitness goals for measurable progress.

- Personalization: Get customized workout plans based on your individual data for optimal effectiveness.

- Health Awareness: Early detection of patterns that indicate potential health issues or over-training.

- Informed Decisions: Make data-driven decisions to adjust routines and set realistic goals.

You can watch a YouTube video demonstration to see these benefits in action.

How accurate is Ultralytics YOLO26 in detecting and tracking exercises?

Ultralytics YOLO26 builds on state-of-the-art pose estimation, so it can track key body landmarks and joints closely enough to give real-time feedback on exercise form and performance metrics. In practice, accuracy depends on the pose model size you choose, the camera viewpoint, lighting, and whether the monitored joints stay visible; heavy occlusion or unusual camera angles can lead to missed or extra repetitions. For real-world examples, check out the real-world applications section in the documentation, which showcases push-ups and pull-ups counting.
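Pose keypoints come with per-point confidence scores, so one possible way to limit miscounts when a joint is occluded is to skip frames in which any of the monitored keypoints is unreliable. The helper below is a hypothetical sketch, not part of AIGym. It assumes keypoints arrive as `(x, y, confidence)` triples (a COCO-style `kpt_shape` of `[17, 3]`), and the `0.5` threshold is an illustrative value, not a recommended setting.

```python
from typing import Optional, Sequence

Keypoint = tuple[float, float, float]  # (x, y, confidence)


def monitored_points(
    keypoints: Sequence[Keypoint], kpts: Sequence[int], min_conf: float = 0.5
) -> Optional[list[tuple[float, float]]]:
    """Return (x, y) for the monitored joints, or None if any of them is unreliable."""
    selected = [keypoints[i] for i in kpts]
    if any(conf < min_conf for _, _, conf in selected):
        return None  # skip this frame rather than feed a noisy angle to a counter
    return [(x, y) for x, y, _ in selected]


# Usage: 17 confident keypoints, except a barely visible right elbow (index 8).
person = [(0.0, 0.0, 0.9)] * 17
person[8] = (120.0, 200.0, 0.2)
print(monitored_points(person, [6, 8, 10]))  # None
```

Dropping a frame is usually cheaper than a false repetition, since the next frames typically show the joint again.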

Can I use Ultralytics YOLO26 for custom workout routines?

Yes, Ultralytics YOLO26 can be adapted for custom workout routines. AIGym is not tied to a single exercise: by choosing the three keypoints (kpts) that define the joint angle of interest and, if needed, adjusting up_angle and down_angle, you can monitor push-ups, pull-ups, squats, ab workouts, and similar exercises. Here is an example setup:

from ultralytics import solutions

gym = solutions.AIGym(
    line_width=2,
    show=True,
    kpts=[6, 8, 10],  # For pushups - can be customized for other exercises
)

For more details on setting arguments, refer to the AIGym Arguments section. This flexibility allows you to monitor various exercises and customize routines based on your fitness goals.
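Customizing kpts comes down to picking a different index triple from the keypoint map, for instance to watch the limb nearest the camera. As an illustration, the hypothetical helper below mirrors a triple between the left and right side of the body. It relies on the COCO-17 layout, where, after the nose at index 0, every left-side joint has an odd index and its right-side counterpart the next even index.

```python
def mirror_kpts(kpts: list[int]) -> list[int]:
    """Swap left/right COCO-17 keypoints, e.g. right arm -> left arm."""
    mirrored = []
    for i in kpts:
        if i == 0:
            mirrored.append(0)  # the nose has no left/right counterpart
        elif i % 2 == 1:
            mirrored.append(i + 1)  # odd index: left joint -> matching right joint
        else:
            mirrored.append(i - 1)  # even index: right joint -> matching left joint
    return mirrored


print(mirror_kpts([6, 8, 10]))  # [5, 7, 9] (left shoulder, elbow, wrist)
print(mirror_kpts([11, 13, 15]))  # [12, 14, 16] (right hip, knee, ankle)
```

The resulting list can be passed directly as the kpts argument of AIGym.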

How can I save the workout monitoring output using Ultralytics YOLO26?

To save the workout monitoring output, you can modify the code to include a video writer that saves the processed frames. Here's an example:

import cv2

from ultralytics import solutions

cap = cv2.VideoCapture("path/to/video.mp4")
assert cap.isOpened(), "Error reading video file"

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
video_writer = cv2.VideoWriter("workouts.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

gym = solutions.AIGym(
    line_width=2,
    show=True,
    kpts=[6, 8, 10],
)

while cap.isOpened():
    success, im0 = cap.read()
    if not success:
        print("Video frame is empty or processing is complete.")
        break
    results = gym(im0)
    video_writer.write(results.plot_im)

cap.release()
video_writer.release()
cv2.destroyAllWindows()

This setup writes the monitored video to an output file, allowing you to review your workout performance later or share it with trainers for additional feedback.