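Analytics using Ultralytics YOLO26

Introduction

This guide provides an overview of three fundamental types of data visualization: line graphs, bar plots, and pie charts. Each section includes step-by-step instructions and code snippets showing how to create these visualizations using Python.

Watch: How to Generate Analytical Graphs Using Ultralytics | Line Graphs, Bar Plots, Area and Pie Charts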

시각적 샘플

| 선 그래프 | 막대 플롯 | 원형 차트 |

|---|---|---|

|  |  |

그래프가 중요한 이유

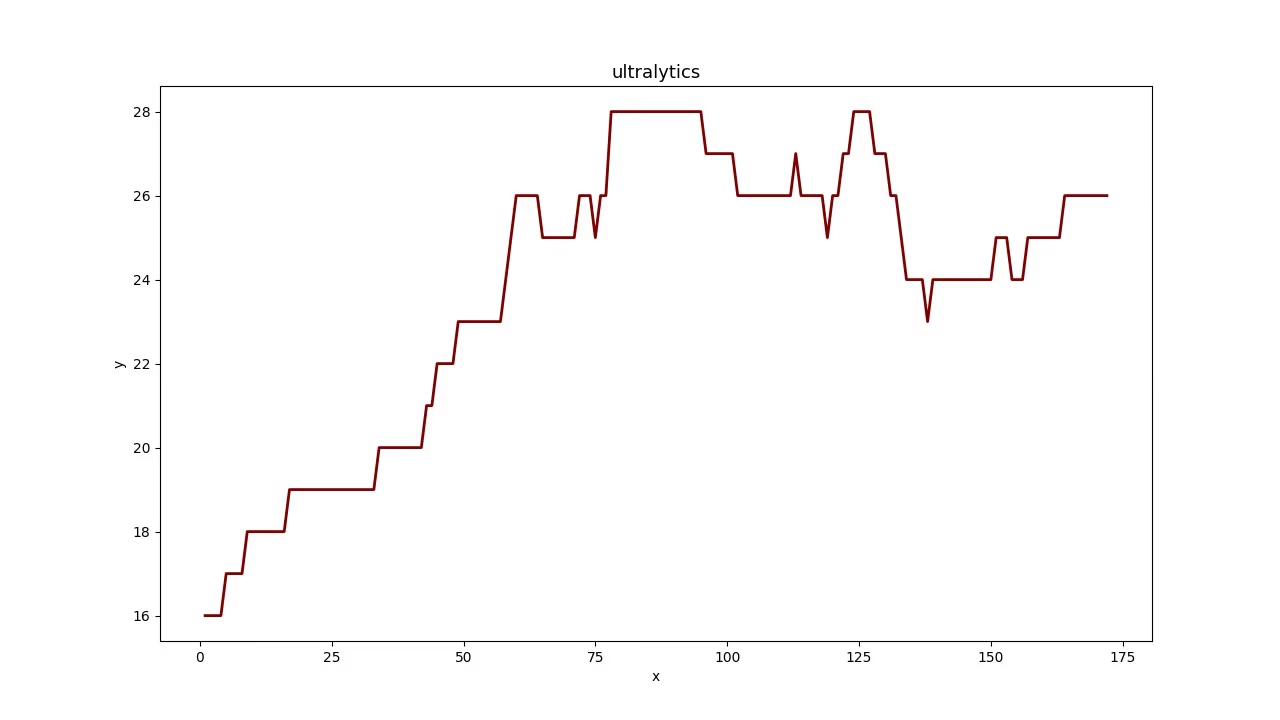

- 선 그래프는 단기간 및 장기간에 걸친 변화를 추적하고 동일한 기간 동안 여러 그룹의 변화를 비교하는 데 적합합니다.

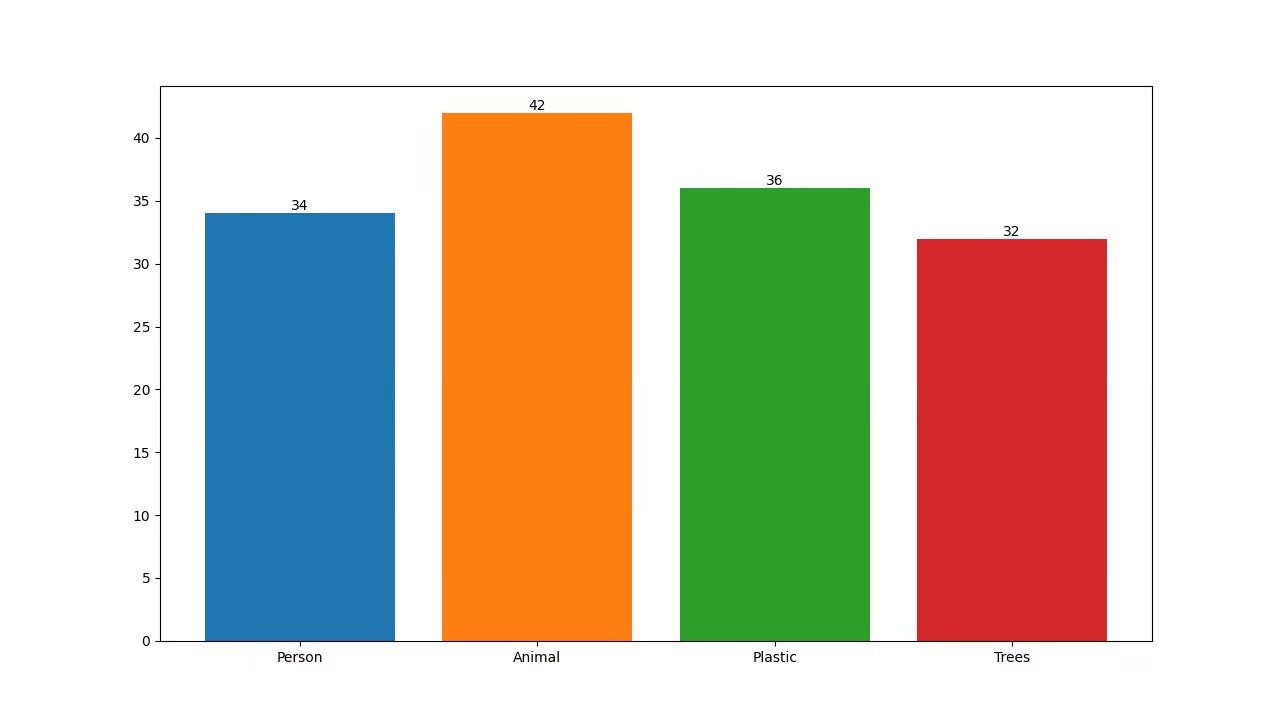

- 반면에 막대 그래프는 여러 범주에 걸쳐 수량을 비교하고 범주와 해당 숫자 값 간의 관계를 표시하는 데 적합합니다.

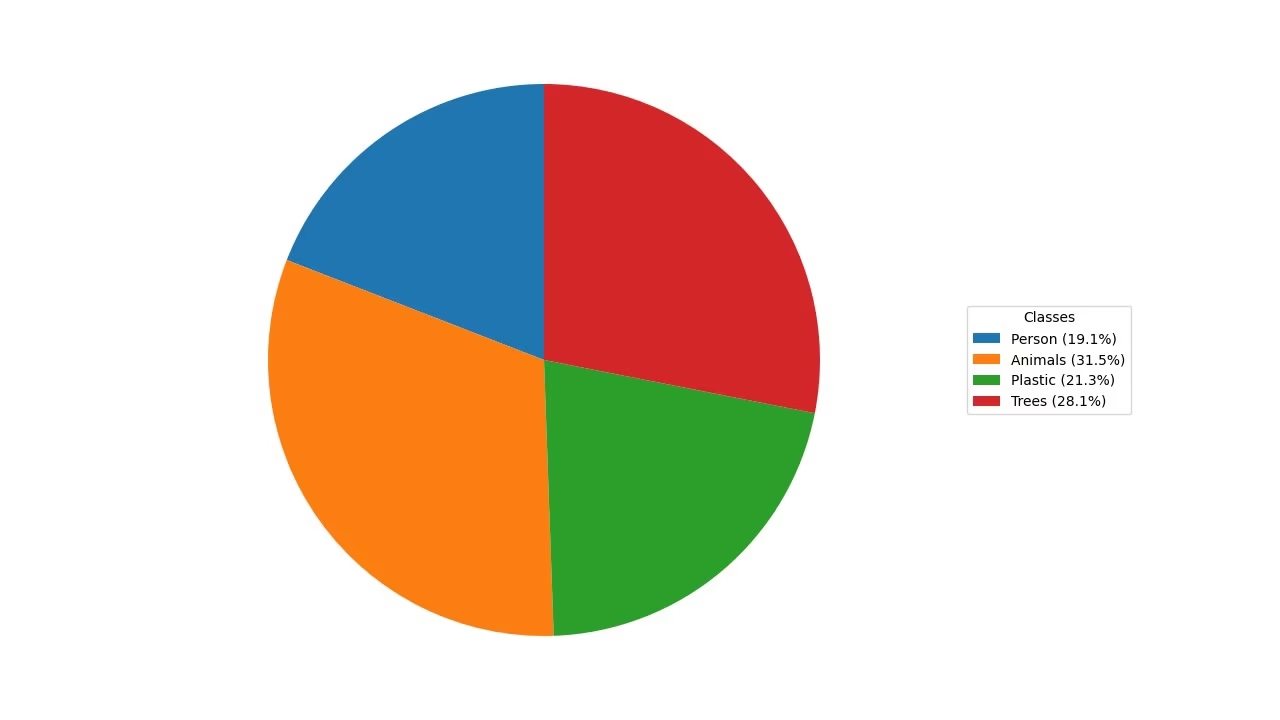

- 마지막으로 원형 차트는 범주 간의 비율을 설명하고 전체의 부분을 보여주는 데 효과적입니다.

Analytics using Ultralytics YOLO

    # Run the analytics solution with default settings and display the output
    yolo solutions analytics show=True

    # Pass the source
    yolo solutions analytics source="path/to/video.mp4"

    # Generate the pie chart
    yolo solutions analytics analytics_type="pie" show=True

    # Generate the bar plots
    yolo solutions analytics analytics_type="bar" show=True

    # Generate the area plots
    yolo solutions analytics analytics_type="area" show=True
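When the same command needs to be run for several chart types, it can help to assemble it programmatically. The sketch below is only an illustration: build_analytics_command is a hypothetical helper, not part of the Ultralytics package, and it only uses the arguments that appear in the commands above (analytics_type, show, source).

```python
import shlex


def build_analytics_command(analytics_type="line", source=None, show=True):
    """Assemble a `yolo solutions analytics` command as a list of arguments.

    Hypothetical helper for illustration; it only formats arguments
    that appear in this guide and does not run anything.
    """
    allowed = {"line", "bar", "area", "pie"}
    if analytics_type not in allowed:
        raise ValueError(f"analytics_type must be one of {sorted(allowed)}")
    cmd = ["yolo", "solutions", "analytics", f"analytics_type={analytics_type}", f"show={show}"]
    if source is not None:
        cmd.append(f"source={source}")
    return cmd


# One command per chart type, all reading the same placeholder video path
commands = [
    build_analytics_command(chart, source="path/to/video.mp4")
    for chart in ("line", "bar", "area", "pie")
]
for cmd in commands:
    print(shlex.join(cmd))
```

Each list can be passed to subprocess.run(cmd, check=True) on a machine where the yolo CLI is installed; the sketch itself only prints the commands.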

    import cv2

    from ultralytics import solutions

    cap = cv2.VideoCapture("path/to/video.mp4")
    assert cap.isOpened(), "Error reading video file"

    # Video writer
    w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
    out = cv2.VideoWriter(
        "analytics_output.avi",
        cv2.VideoWriter_fourcc(*"MJPG"),
        fps,
        (1280, 720),  # this is fixed
    )

    # Initialize analytics object
    analytics = solutions.Analytics(
        show=True,  # display the output
        analytics_type="line",  # pass the analytics type, could be "pie", "bar" or "area".
        model="yolo26n.pt",  # path to the YOLO26 model file
        # classes=[0, 2],  # display analytics for specific detection classes
    )

    # Process video
    frame_count = 0
    while cap.isOpened():
        success, im0 = cap.read()
        if success:
            frame_count += 1
            results = analytics(im0, frame_count)  # update analytics graph every frame

            # print(results)  # access the output

            out.write(results.plot_im)  # write the video file
        else:
            break

    cap.release()
    out.release()
    cv2.destroyAllWindows()  # destroy all opened windows

Analytics Arguments

The following table outlines the Analytics arguments:

| Argument | Type | Default | Description |
|---|---|---|---|
| model | str | None | Path to an Ultralytics YOLO model file. |
| analytics_type | str | 'line' | Type of graph: line, bar, area, or pie. |

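You can also use the following track arguments with the Analytics solution:

| Argument | Type | Default | Description |
|---|---|---|---|
| tracker | str | 'botsort.yaml' | Specifies the tracking algorithm to use, e.g., bytetrack.yaml or botsort.yaml. |
| conf | float | 0.1 | Sets the confidence threshold for detections. Lower values allow more objects to be tracked but may include false positives. |
| iou | float | 0.7 | Sets the Intersection over Union (IoU) threshold for filtering overlapping detections. |
| classes | list | None | Filters results by class index. For example, classes=[0, 2, 3] tracks only the specified classes. |
| verbose | bool | True | Controls the display of tracking results, providing a visual output of tracked objects. |
| device | str | None | Specifies the device for inference (e.g., cpu, cuda:0 or 0). Lets users choose between the CPU, a specific GPU, or other compute devices for model execution. |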
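As a rough illustration of what the conf, classes, and iou arguments control, the following sketch filters a hand-written list of detections and computes the IoU of two boxes. The detection dictionaries and the helpers filter_detections and iou are assumptions made for this example, not part of the Ultralytics API, and the library's own filtering may differ in detail.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(detections, conf=0.1, classes=None):
    """Keep detections at or above `conf`, optionally restricted to `classes`."""
    return [
        d for d in detections
        if d["conf"] >= conf and (classes is None or d["cls"] in classes)
    ]


# Hypothetical detections: class index, confidence score, and box corners
detections = [
    {"cls": 0, "conf": 0.92, "box": (0, 0, 10, 10)},
    {"cls": 0, "conf": 0.40, "box": (5, 0, 15, 10)},
    {"cls": 2, "conf": 0.05, "box": (50, 50, 60, 60)},
    {"cls": 3, "conf": 0.75, "box": (100, 100, 120, 140)},
]

kept = filter_detections(detections, conf=0.1, classes=[0, 2])
overlap = iou(detections[0]["box"], detections[1]["box"])

print(len(kept))          # 2: the low-confidence class 2 box and the class 3 box are dropped
print(round(overlap, 3))  # 0.333, below an iou threshold of 0.7
```

With the table's default iou of 0.7, two boxes overlapping by about 0.33 would both be kept as separate detections; raising conf removes weak detections before they reach the graph.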

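Additionally, the following visualization arguments are supported:

| Argument | Type | Default | Description |
|---|---|---|---|
| show | bool | False | If True, displays the annotated images or videos in a window. Useful for immediate visual feedback during development or testing. |
| line_width | int or None | None | Specifies the line width of bounding boxes. If None, the line width is adjusted automatically based on the image size. |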

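Conclusion

Effective data analysis depends on understanding when and how to use different types of visualization. Line graphs, bar plots, and pie charts are fundamental tools for conveying data clearly. The Ultralytics YOLO26 Analytics solution provides a streamlined way to generate these visualizations from object detection and tracking results, making it easier to extract meaningful insights from visual data.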

FAQ

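How do I create a line graph using Ultralytics YOLO26 Analytics?

To create a line graph using Ultralytics YOLO26 Analytics, follow these steps:

- Load a YOLO26 model and open your video file.
- Initialize the Analytics class with the type set to "line".
- Iterate through the video frames, updating the line graph with relevant data such as the object count per frame.
- Save the output video displaying the line graph.

Example: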

    import cv2

    from ultralytics import solutions

    # Open the input video
    cap = cv2.VideoCapture("path/to/video.mp4")
    assert cap.isOpened(), "Error reading video file"

    # Video writer for the rendered graph frames
    w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
    out = cv2.VideoWriter(
        "ultralytics_analytics.avi",
        cv2.VideoWriter_fourcc(*"MJPG"),
        fps,
        (1280, 720),  # this is fixed
    )

    # Initialize the analytics solution for a line graph
    analytics = solutions.Analytics(
        analytics_type="line",
        show=True,
    )

    # Process the video frame by frame
    frame_count = 0
    while cap.isOpened():
        success, im0 = cap.read()
        if success:
            frame_count += 1
            results = analytics(im0, frame_count)  # update analytics graph every frame
            out.write(results.plot_im)  # write the video file
        else:
            break

    cap.release()
    out.release()
    cv2.destroyAllWindows()

For further details on configuring the Analytics class, see the Analytics using Ultralytics YOLO26 section.
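To make the "update the line graph with the object count per frame" step concrete, here is a minimal sketch of the data a line graph might keep: a rolling window of (frame number, count) points. The LineSeries class, the window size of 5, and the per-frame counts are all hypothetical values chosen for illustration; this is not how the Analytics class is implemented internally.

```python
from collections import deque

MAX_POINTS = 5  # hypothetical window size: how many recent points stay on the graph


class LineSeries:
    """Rolling window of (frame_number, object_count) points for a line graph."""

    def __init__(self, max_points=MAX_POINTS):
        # deque(maxlen=...) silently drops the oldest point once the window is full
        self.x = deque(maxlen=max_points)
        self.y = deque(maxlen=max_points)

    def update(self, frame_number, object_count):
        self.x.append(frame_number)
        self.y.append(object_count)


# Made-up object counts for seven consecutive frames
per_frame_counts = [3, 4, 4, 6, 5, 7, 2]

series = LineSeries()
for frame_number, count in enumerate(per_frame_counts, start=1):
    series.update(frame_number, count)

print(list(series.x))  # [3, 4, 5, 6, 7]
print(list(series.y))  # [4, 6, 5, 7, 2]
```

Bounding the window keeps the plot readable on long videos, at the cost of discarding older history.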

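What are the benefits of using Ultralytics YOLO26 for creating bar plots?

Using Ultralytics YOLO26 to create bar plots offers several benefits:

- Real-time data visualization: Object detection results feed directly into the bar plot, which updates dynamically.
- Ease of use: A simple API makes it straightforward to implement and visualize data.
- Customization: Titles, labels, colors, and more can be customized to fit specific requirements.
- Efficiency: Plots are updated in real time while the video is being processed, even with large amounts of data.

Use the following example to generate a bar plot: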

    import cv2

    from ultralytics import solutions

    # Open the input video
    cap = cv2.VideoCapture("path/to/video.mp4")
    assert cap.isOpened(), "Error reading video file"

    # Video writer for the rendered graph frames
    w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
    out = cv2.VideoWriter(
        "ultralytics_analytics.avi",
        cv2.VideoWriter_fourcc(*"MJPG"),
        fps,
        (1280, 720),  # this is fixed
    )

    # Initialize the analytics solution for a bar plot
    analytics = solutions.Analytics(
        analytics_type="bar",
        show=True,
    )

    # Process the video frame by frame
    frame_count = 0
    while cap.isOpened():
        success, im0 = cap.read()
        if success:
            frame_count += 1
            results = analytics(im0, frame_count)  # update analytics graph every frame
            out.write(results.plot_im)  # write the video file
        else:
            break

    cap.release()
    out.release()
    cv2.destroyAllWindows()

For more details, see the Bar Plot section of the guide.
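A bar plot compares quantities across categories, which for detection results usually means one bar per class. The sketch below shows that aggregation on a hand-written list of class labels; the labels are made up, and the text rendering is only a stand-in for a real plot, not the output of the Analytics solution.

```python
from collections import Counter

# Hypothetical class labels for the objects detected in one frame
frame_labels = ["person", "car", "person", "bus", "car", "person"]

# One bar per class: its height is the number of detections of that class
class_counts = Counter(frame_labels)

# Sort by descending count, then by name, so the order is stable across frames
bars = sorted(class_counts.items(), key=lambda item: (-item[1], item[0]))

for label, count in bars:
    print(f"{label:<8}{'#' * count} {count}")
```

Recomputing class_counts for every frame and redrawing the bars is what makes the plot appear to update dynamically during video processing.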

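Why should I use Ultralytics YOLO26 for creating pie charts in my data visualization projects?

Ultralytics YOLO26 is a good choice for creating pie charts for the following reasons:

- Integration with object detection: Object detection results feed directly into the pie chart for immediate insights.
- User-friendly API: Setup and use require minimal code.
- Customizable: Colors, labels, and other options can be customized.
- Real-time updates: Data is processed and visualized in real time, which suits video analytics projects.

Here is a quick example: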

    import cv2

    from ultralytics import solutions

    # Open the input video
    cap = cv2.VideoCapture("path/to/video.mp4")
    assert cap.isOpened(), "Error reading video file"

    # Video writer for the rendered graph frames
    w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
    out = cv2.VideoWriter(
        "ultralytics_analytics.avi",
        cv2.VideoWriter_fourcc(*"MJPG"),
        fps,
        (1280, 720),  # this is fixed
    )

    # Initialize the analytics solution for a pie chart
    analytics = solutions.Analytics(
        analytics_type="pie",
        show=True,
    )

    # Process the video frame by frame
    frame_count = 0
    while cap.isOpened():
        success, im0 = cap.read()
        if success:
            frame_count += 1
            results = analytics(im0, frame_count)  # update analytics graph every frame
            out.write(results.plot_im)  # write the video file
        else:
            break

    cap.release()
    out.release()
    cv2.destroyAllWindows()

For more information, see the Pie Chart section of the guide.
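Since a pie chart shows parts of a whole, per-class counts have to be converted into shares before plotting. The following sketch does that conversion; pie_shares and the counts are hypothetical and serve only to illustrate the arithmetic.

```python
def pie_shares(class_counts):
    """Convert per-class counts into percentage shares of the total."""
    total = sum(class_counts.values())
    if total == 0:
        return {}  # nothing detected yet, so there is no pie to draw
    return {label: 100.0 * count / total for label, count in class_counts.items()}


# Made-up per-class detection counts
shares = pie_shares({"person": 6, "car": 3, "bus": 1})

for label, percent in shares.items():
    print(f"{label}: {percent:.1f}%")
```

The empty-input guard matters in video analytics, because early frames may contain no detections at all.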

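Can Ultralytics YOLO26 be used to track objects and dynamically update visualizations?

Yes, Ultralytics YOLO26 can be used to track objects and dynamically update visualizations. It supports tracking multiple objects in real time and can update visualizations such as line graphs, bar plots, and pie charts based on the tracked objects' data.

Example of tracking and updating a line graph: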

    import cv2

    from ultralytics import solutions

    # Open the input video
    cap = cv2.VideoCapture("path/to/video.mp4")
    assert cap.isOpened(), "Error reading video file"

    # Video writer for the rendered graph frames
    w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
    out = cv2.VideoWriter(
        "ultralytics_analytics.avi",
        cv2.VideoWriter_fourcc(*"MJPG"),
        fps,
        (1280, 720),  # this is fixed
    )

    # Initialize the analytics solution for a line graph
    analytics = solutions.Analytics(
        analytics_type="line",
        show=True,
    )

    # Process the video frame by frame
    frame_count = 0
    while cap.isOpened():
        success, im0 = cap.read()
        if success:
            frame_count += 1
            results = analytics(im0, frame_count)  # update analytics graph every frame
            out.write(results.plot_im)  # write the video file
        else:
            break

    cap.release()
    out.release()
    cv2.destroyAllWindows()

To learn about the full functionality, see the Tracking section.
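Tracking adds a persistent ID to each object, which makes it possible to count distinct objects over time rather than detections per frame. The sketch below assumes each frame yields (track_id, label) pairs; that data layout and the sample values are invented for illustration and are not the format returned by the Analytics solution.

```python
from collections import defaultdict

# Hypothetical tracker output: one list of (track_id, class_label) pairs per frame
frames = [
    [(1, "person"), (2, "car")],
    [(1, "person"), (2, "car"), (3, "person")],
    [(3, "person"), (4, "bus")],
]

seen_ids = defaultdict(set)  # class label -> set of track IDs seen so far
cumulative_totals = []       # one point per frame, suitable for a line graph

for tracked in frames:
    for track_id, label in tracked:
        seen_ids[label].add(track_id)  # a set ignores IDs already counted
    cumulative_totals.append(sum(len(ids) for ids in seen_ids.values()))

unique_per_class = {label: len(ids) for label, ids in seen_ids.items()}

print(cumulative_totals)  # [2, 3, 4]
print(unique_per_class)   # {'person': 2, 'car': 1, 'bus': 1}
```

The cumulative totals could drive a line graph, while the per-class unique counts map naturally onto a bar plot or pie chart.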

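What makes Ultralytics YOLO26 different from other object detection solutions like OpenCV and TensorFlow?

Ultralytics YOLO26 differs from other object detection solutions such as OpenCV and TensorFlow in several ways:

- State-of-the-art accuracy: YOLO26 delivers high accuracy in object detection, segmentation, and classification tasks.
- Ease of use: A user-friendly API allows quick implementation and integration without extensive coding.
- Real-time performance: It is optimized for high-speed inference, making it suitable for real-time applications.
- Diverse applications: It supports a range of tasks, including multi-object tracking, custom model training, and export to formats such as ONNX, TensorRT, and CoreML.
- Comprehensive documentation: Extensive documentation and blog resources guide users through each step.

For more detailed comparisons and use cases, see the Ultralytics Blog.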