Object Counting using Ultralytics YOLO26

What is Object Counting?

Object counting with Ultralytics YOLO26 identifies and counts specific objects in videos and camera streams. YOLO26 runs in real time, which makes it a practical choice for scenarios such as crowd analysis and surveillance where counts must keep pace with the incoming frames.


Advantages of Object Counting

- Resource Optimization: Accurate counts support efficient resource allocation in applications like inventory management.

- Enhanced Security: Accurately tracking and counting entities supports surveillance and proactive threat detection.

- Informed Decision-Making: Counts provide data for optimizing processes in retail, traffic management, and other domains.

Real World Applications

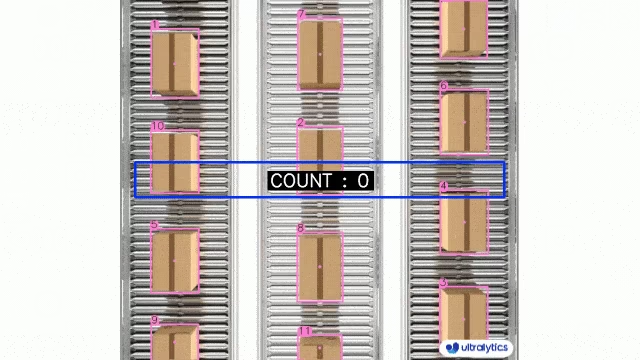

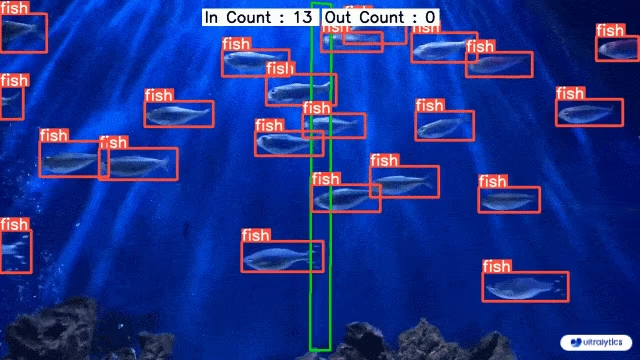

| Logistics | Aquaculture |

|---|---|

|  |

| Conveyor Belt Packets Counting Using Ultralytics YOLO26 | Fish Counting in Sea using Ultralytics YOLO26 |

Object Counting using Ultralytics YOLO

# Run a counting example
yolo solutions count show=True

# Pass a source video
yolo solutions count source="path/to/video.mp4"

# Pass region coordinates
yolo solutions count region="[(20, 400), (1080, 400), (1080, 360), (20, 360)]"

The region argument accepts either two points (for a line) or a polygon with three or more points. Define the coordinates in the order they should be connected so the counter knows exactly where entries and exits occur.
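The two region shapes described above can be checked before a counter is created. The following is a minimal sketch in plain Python; `classify_region` and `region_edges` are hypothetical helpers written for illustration and are not part of the Ultralytics API. It shows how the point count decides between a line and a polygon, and how the listed order determines which points get connected.

```python
from typing import List, Sequence, Tuple

Point = Tuple[int, int]


def classify_region(points: Sequence[Point]) -> str:
    """Return "line" for two points or "polygon" for three or more.

    Hypothetical helper for illustration; not part of Ultralytics.
    """
    if len(points) < 2:
        raise ValueError("A region needs at least two points.")
    for point in points:
        if len(point) != 2:
            raise ValueError(f"Expected an (x, y) pair, got {point!r}.")
    return "line" if len(points) == 2 else "polygon"


def region_edges(points: Sequence[Point]) -> List[Tuple[Point, Point]]:
    """List the edges in connection order, closing the shape for polygons."""
    pts = list(points)
    if classify_region(pts) == "line":
        return [(pts[0], pts[1])]
    # Connect each point to the next one, and the last point back to the first.
    return [(pts[i], pts[(i + 1) % len(pts)]) for i in range(len(pts))]


print(classify_region([(20, 400), (1080, 400)]))
print(region_edges([(20, 400), (1080, 400), (1080, 360), (20, 360)]))
```

Listing the same four corners in a different order would produce different edges, which is why the order of the coordinates matters.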

import cv2

from ultralytics import solutions

cap = cv2.VideoCapture("path/to/video.mp4")
assert cap.isOpened(), "Error reading video file"

# region_points = [(20, 400), (1080, 400)]  # line counting
region_points = [(20, 400), (1080, 400), (1080, 360), (20, 360)]  # rectangular region
# region_points = [(20, 400), (1080, 400), (1080, 360), (20, 360), (20, 400)]  # polygon region

# Video writer
w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
video_writer = cv2.VideoWriter("object_counting_output.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

# Initialize object counter object
counter = solutions.ObjectCounter(
    show=True,  # display the output
    region=region_points,  # pass region points
    model="yolo26n.pt",  # model="yolo26n-obb.pt" for object counting with OBB model.
    # classes=[0, 2],  # count specific classes, e.g., person and car with the COCO pretrained model.
    # tracker="botsort.yaml",  # choose trackers, e.g., "bytetrack.yaml"
)

# Process video
while cap.isOpened():
    success, im0 = cap.read()

    if not success:
        print("Video frame is empty or processing is complete.")
        break

    results = counter(im0)

    # print(results)  # access the output

    video_writer.write(results.plot_im)  # write the processed frame.

cap.release()
video_writer.release()
cv2.destroyAllWindows()  # destroy all opened windows
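The commented-out `print(results)` line in the example above suggests that the returned object carries more than the annotated frame. The sketch below assumes it exposes `in_count` and `out_count` attributes; those names are an assumption not confirmed on this page, so print the object in your installed version to verify them. `summarize_counts` is a hypothetical helper, and a `SimpleNamespace` stands in for the real results object so the sketch runs without a video.

```python
from types import SimpleNamespace


def summarize_counts(results) -> str:
    """Format in/out totals from a results-like object.

    Assumes `in_count` and `out_count` attributes (not confirmed by this page);
    a missing attribute is treated as 0.
    """
    in_count = getattr(results, "in_count", 0)
    out_count = getattr(results, "out_count", 0)
    return f"in={in_count} out={out_count} net={in_count - out_count}"


# Stand-in for the object returned by counter(im0).
fake_results = SimpleNamespace(in_count=5, out_count=2)
print(summarize_counts(fake_results))  # in=5 out=2 net=3
```

In the processing loop, a call such as `summarize_counts(results)` could be logged every few frames instead of on every frame to keep the output readable.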

ObjectCounter Arguments

Here's a table with the ObjectCounter arguments:

| Argument | Type | Default | Description |
|---|---|---|---|
| model | str | None | Path to an Ultralytics YOLO model file. |
| show_in | bool | True | Flag to control whether to display the in counts on the video stream. |
| show_out | bool | True | Flag to control whether to display the out counts on the video stream. |
| region | list | '[(20, 400), (1260, 400)]' | List of points defining the counting region. |
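The in and out counts in the table imply a direction test against the region. As an illustration only, and not the library's actual implementation, the sketch below decides a direction for a tracked centroid that moves across a line such as the default `[(20, 400), (1260, 400)]`. It uses the sign of a 2D cross product and treats the line as infinite for simplicity. The function names and the choice of which side counts as "in" are assumptions made for this sketch.

```python
from typing import Tuple

Point = Tuple[float, float]


def side_of_line(point: Point, start: Point, end: Point) -> float:
    """Cross product sign: positive on one side of the line, negative on the other, 0 on it."""
    return (end[0] - start[0]) * (point[1] - start[1]) - (end[1] - start[1]) * (point[0] - start[0])


def crossing_direction(prev: Point, curr: Point, start: Point, end: Point) -> str:
    """Return "in", "out", or "none" for a centroid moving from prev to curr.

    Which side counts as "in" is an arbitrary convention chosen for this sketch.
    """
    before = side_of_line(prev, start, end)
    after = side_of_line(curr, start, end)
    if before * after >= 0:
        return "none"  # same side, or touching the line without crossing it
    return "in" if after > 0 else "out"


line_start, line_end = (20, 400), (1260, 400)
print(crossing_direction((100, 390), (100, 410), line_start, line_end))  # in
print(crossing_direction((100, 410), (100, 390), line_start, line_end))  # out
```

Because image coordinates grow downward, a centroid moving down the frame across this horizontal line is labeled "in" under the convention used here.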

The ObjectCounter solution allows the use of several track arguments:

| Argument | Type | Default | Description |
|---|---|---|---|
| tracker | str | 'botsort.yaml' | Specifies the tracking algorithm to use, e.g., bytetrack.yaml or botsort.yaml. |
| conf | float | 0.1 | Sets the confidence threshold for detections; lower values allow more objects to be tracked but may include false positives. |
| iou | float | 0.7 | Sets the Intersection over Union (IoU) threshold for filtering overlapping detections. |
| classes | list | None | Filters results by class index. For example, classes=[0, 2, 3] only tracks the specified classes. |
| verbose | bool | True | Controls the display of tracking results, providing a visual output of tracked objects. |
| device | str | None | Specifies the device for inference (e.g., cpu, cuda:0 or 0). Allows users to select between CPU, a specific GPU, or other compute devices for model execution. |
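To make the `conf` and `iou` thresholds in the table concrete, here is a small worked sketch in plain Python. It is not the library's code: `box_iou` and `filter_by_conf` are hypothetical helpers, and boxes are assumed to be `(x1, y1, x2, y2)` tuples in pixels. It computes the IoU of two boxes and drops detections below a confidence threshold.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def box_iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_by_conf(detections: List[Tuple[Box, float]], conf: float = 0.1) -> List[Tuple[Box, float]]:
    """Keep (box, score) pairs whose score meets the confidence threshold."""
    return [d for d in detections if d[1] >= conf]


a = (0, 0, 10, 10)
b = (5, 0, 15, 10)
print(round(box_iou(a, b), 3))  # 0.333
print(box_iou(a, b) > 0.7)  # False

detections = [(a, 0.85), (b, 0.05)]
print(len(filter_by_conf(detections, conf=0.1)))  # 1
```

The two boxes share an area of 50 out of a union of 150, so their IoU of about 0.333 sits below 0.7 and they would typically be kept as separate objects; the second detection is discarded because its score of 0.05 is under the 0.1 confidence threshold.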

Additionally, the visualization arguments listed below are supported:

| Argument | Type | Default | Description |
|---|---|---|---|
| show | bool | False | If True, displays the annotated images or videos in a window. Useful for immediate visual feedback during development or testing. |
| line_width | int or None | None | Specifies the line width of bounding boxes. If None, the line width is automatically adjusted based on the image size. Provides visual customization for clarity. |
| show_conf | bool | True | Displays the confidence score for each detection alongside the label. Gives insight into the model's certainty for each detection. |
| show_labels | bool | True | Displays labels for each detection in the visual output. Provides immediate understanding of detected objects. |

FAQ

How do I count objects in a video using Ultralytics YOLO26?

To count objects in a video using Ultralytics YOLO26, you can follow these steps:

- Import the necessary libraries (cv2, ultralytics).

- Define the counting region (e.g., a polygon, line, etc.).

- Set up the video capture and initialize the object counter.

- Process each frame to track objects and count them within the defined region.
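The last step above, counting objects within the defined region, comes down to testing whether a tracked centroid lies inside a polygon. The sketch below shows one common way to do that, the ray casting test, in plain Python. It is an illustration under stated assumptions and not necessarily how the library performs the check; `point_in_polygon` and `count_in_region` are hypothetical names.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def point_in_polygon(point: Point, polygon: Sequence[Point]) -> bool:
    """Ray casting test: an odd number of edge crossings means the point is inside."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def count_in_region(centroids: Sequence[Point], polygon: Sequence[Point]) -> int:
    """Count how many centroids fall inside the polygon."""
    return sum(point_in_polygon(c, polygon) for c in centroids)


region = [(20, 400), (1080, 400), (1080, 360), (20, 360)]
print(point_in_polygon((500, 380), region))  # True
print(point_in_polygon((500, 300), region))  # False
print(count_in_region([(500, 380), (500, 300), (100, 390)], region))  # 2
```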

Here's a simple example for counting in a region:

import cv2

from ultralytics import solutions


def count_objects_in_region(video_path, output_video_path, model_path):
    """Count objects in a specific region within a video."""
    cap = cv2.VideoCapture(video_path)
    assert cap.isOpened(), "Error reading video file"
    w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
    video_writer = cv2.VideoWriter(output_video_path, cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

    region_points = [(20, 400), (1080, 400), (1080, 360), (20, 360)]
    counter = solutions.ObjectCounter(show=True, region=region_points, model=model_path)

    while cap.isOpened():
        success, im0 = cap.read()
        if not success:
            print("Video frame is empty or processing is complete.")
            break
        results = counter(im0)
        video_writer.write(results.plot_im)

    cap.release()
    video_writer.release()
    cv2.destroyAllWindows()


count_objects_in_region("path/to/video.mp4", "output_video.avi", "yolo26n.pt")

For more advanced configurations and options, check out the RegionCounter solution for counting objects in multiple regions simultaneously.

What are the advantages of using Ultralytics YOLO26 for object counting?

Using Ultralytics YOLO26 for object counting offers several advantages:

- Resource Optimization: It facilitates efficient resource management by providing accurate counts, helping optimize resource allocation in applications like inventory management.

- Enhanced Security: It enhances security and surveillance by accurately tracking and counting entities, aiding in proactive threat detection and security systems.

- Informed Decision-Making: It offers valuable insights for decision-making, optimizing processes in domains like retail, traffic management, and more.

- Real-time Processing: YOLO26's architecture enables real-time inference, making it suitable for live video streams and time-sensitive applications.

For implementation examples and practical applications, explore the TrackZone solution for tracking objects in specific zones.

How can I count specific classes of objects using Ultralytics YOLO26?

To count specific classes of objects using Ultralytics YOLO26, pass the class indices you are interested in through the classes argument when creating the ObjectCounter, so that only those classes are tracked and counted. Below is a Python example:

import cv2

from ultralytics import solutions


def count_specific_classes(video_path, output_video_path, model_path, classes_to_count):
    """Count specific classes of objects in a video."""
    cap = cv2.VideoCapture(video_path)
    assert cap.isOpened(), "Error reading video file"
    w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
    video_writer = cv2.VideoWriter(output_video_path, cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

    line_points = [(20, 400), (1080, 400)]
    counter = solutions.ObjectCounter(show=True, region=line_points, model=model_path, classes=classes_to_count)

    while cap.isOpened():
        success, im0 = cap.read()
        if not success:
            print("Video frame is empty or processing is complete.")
            break
        results = counter(im0)
        video_writer.write(results.plot_im)

    cap.release()
    video_writer.release()
    cv2.destroyAllWindows()


count_specific_classes("path/to/video.mp4", "output_specific_classes.avi", "yolo26n.pt", [0, 2])

In this example, classes_to_count=[0, 2] means it counts objects of class 0 and 2 (e.g., person and car in the COCO dataset). You can find more information about class indices in the COCO dataset documentation.
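The effect of a class filter such as `[0, 2]` can be illustrated without a model. The sketch below is plain Python under stated assumptions: `tally_classes` is a hypothetical helper, the list of class indices is made-up sample input rather than real detector output, and the name mapping covers only the two COCO classes mentioned above.

```python
from collections import Counter
from typing import Iterable, Sequence

# Only the two COCO classes named in the text; other indices are left unnamed.
CLASS_NAMES = {0: "person", 2: "car"}


def tally_classes(class_ids: Iterable[int], classes_to_count: Sequence[int]) -> Counter:
    """Count detections per class, ignoring classes that were not requested."""
    wanted = set(classes_to_count)
    return Counter(cid for cid in class_ids if cid in wanted)


# Made-up class indices, one per detection, for illustration.
sample_ids = [0, 2, 2, 5, 0, 7, 2]
counts = tally_classes(sample_ids, classes_to_count=[0, 2])
for cid, total in sorted(counts.items()):
    print(f"{CLASS_NAMES[cid]}: {total}")
```

Detections with indices 5 and 7 are ignored because they are not in the requested list, which mirrors what restricting the counter to selected classes is meant to achieve.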

Why should I use YOLO26 over other object detection models for real-time applications?

Ultralytics YOLO26 provides several advantages over other object detection models like Faster R-CNN, SSD, and previous YOLO versions:

- Speed and Efficiency: YOLO26 offers real-time processing capabilities, making it ideal for applications requiring high-speed inference, such as surveillance and autonomous driving.

- Accuracy: It provides state-of-the-art accuracy for object detection and tracking tasks, reducing the number of false positives and improving overall system reliability.

- Ease of Integration: YOLO26 offers seamless integration with various platforms and devices, including mobile and edge devices, which is crucial for modern AI applications.

- Flexibility: It supports tasks such as object detection, segmentation, and tracking, with configurable models to meet specific use-case requirements.

Check out Ultralytics YOLO26 Documentation for a deeper dive into its features and performance comparisons.

Can I use YOLO26 for advanced applications like crowd analysis and traffic management?

Yes, Ultralytics YOLO26 is well suited to advanced applications like crowd analysis and traffic management because of its real-time detection, scalability, and flexible integration. It supports object tracking, counting, and classification in dynamic environments. Example use cases include:

- Crowd Analysis: Monitor and manage large gatherings, ensuring safety and optimizing crowd flow with region-based counting.

- Traffic Management: Track and count vehicles, analyze traffic patterns, and manage congestion in real-time with speed estimation capabilities.

- Retail Analytics: Analyze customer movement patterns and product interactions to optimize store layouts and improve customer experience.

- Industrial Automation: Count products on conveyor belts and monitor production lines for quality control and efficiency improvements.

For more specialized applications, explore Ultralytics Solutions for a comprehensive set of tools designed for real-world computer vision challenges.