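Simple Utilities

The ultralytics package provides a variety of utilities that support, enhance, and accelerate your workflows. Many more are available, but this guide highlights the ones most useful to developers and serves as a practical reference for programming with Ultralytics tools.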

Watch: Ultralytics Utilities | Auto Annotation, Explorer API and Dataset Conversion

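Data

Auto Labeling / Annotations

Dataset annotation is a resource-intensive and time-consuming process. If you have an Ultralytics YOLO object detection model trained on a reasonable amount of data, you can use it with SAM to auto-annotate additional data in segmentation format.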

from ultralytics.data.annotator import auto_annotate

auto_annotate(
    data="path/to/new/data",
    det_model="yolo26n.pt",
    sam_model="mobile_sam.pt",
    device="cuda",
    output_dir="path/to/save_labels",
)

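This function does not return any value. For further details:

- See the annotator.auto_annotate reference section for more insight into how the function operates.
- Use it in combination with the segments2boxes function to generate object detection bounding boxes as well.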
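The labels produced this way follow the YOLO segmentation format: one line per object, a class index followed by the polygon's x y coordinates normalized by the image width and height. The sketch below shows how a single pixel-space polygon maps to such a line. The helper polygon_to_yolo_seg_line is hypothetical and not part of the ultralytics API.

```python
def polygon_to_yolo_seg_line(class_id, polygon, img_w, img_h, precision=6):
    """Format one pixel-space polygon as a YOLO segmentation label line.

    Hypothetical helper for illustration: each x is divided by the image
    width and each y by the image height, so every value falls in [0, 1].
    """
    if len(polygon) < 3:
        raise ValueError("a polygon needs at least 3 points")
    coords = []
    for x, y in polygon:
        coords.append(f"{x / img_w:.{precision}f}")
        coords.append(f"{y / img_h:.{precision}f}")
    return f"{class_id} " + " ".join(coords)


# A triangle on a 640x480 image, labeled as class 0
line = polygon_to_yolo_seg_line(0, [(64, 48), (320, 48), (320, 240)], 640, 480)
print(line)  # 0 0.100000 0.100000 0.500000 0.100000 0.500000 0.500000
```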

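Visualize Dataset Annotations

This function visualizes YOLO annotations on an image before training, helping you identify and correct wrong annotations that could lead to incorrect detection results. It draws bounding boxes, labels objects with their class names, and adjusts the text color based on the background's luminance for better readability.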

from ultralytics.data.utils import visualize_image_annotations

label_map = {  # Define the label map with all annotated class labels.
    0: "person",
    1: "car",
}

# Visualize
visualize_image_annotations(
    "path/to/image.jpg",  # Input image path.
    "path/to/annotations.txt",  # Annotation file path for the image.
    label_map,
)

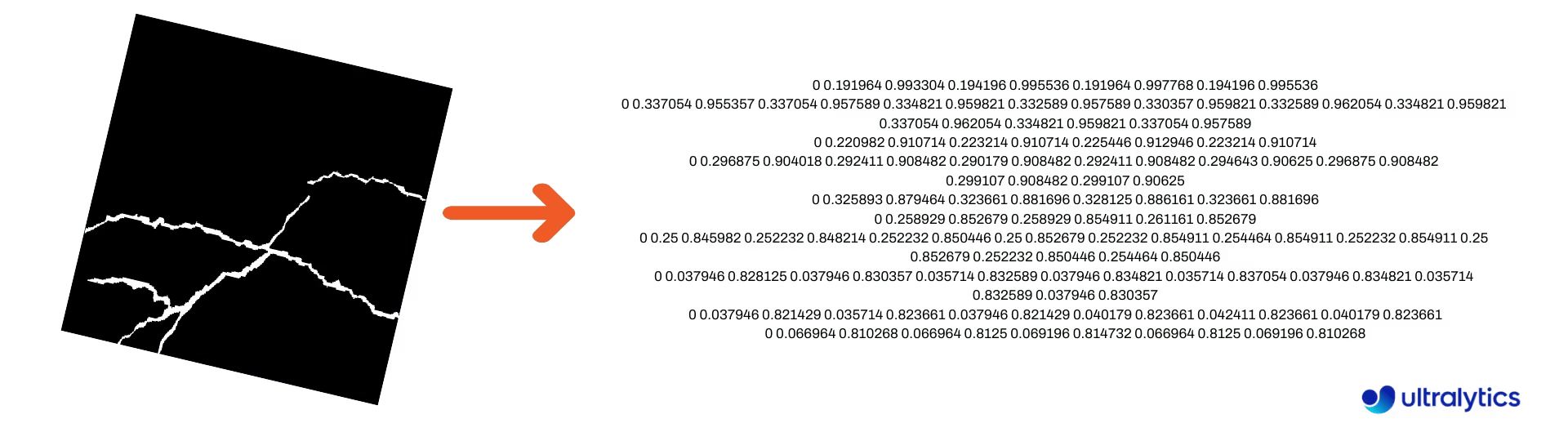
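Each line of a YOLO detection annotation file holds a class index plus a normalized center x, center y, width, and height. The sketch below shows how such a line can be turned into pixel corners for drawing, and one common way to pick a readable text color from the background brightness. The BT.601 luminance weights and the threshold of 128 are assumptions for illustration; visualize_image_annotations may use a different rule.

```python
def yolo_line_to_xyxy(line, img_w, img_h):
    """Convert 'cls xc yc w h' (normalized) into (cls, x1, y1, x2, y2) in pixels."""
    cls, xc, yc, w, h = line.split()
    xc, w = float(xc) * img_w, float(w) * img_w
    yc, h = float(yc) * img_h, float(h) * img_h
    return int(cls), xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2


def text_color_for(background_rgb, threshold=128):
    """Black text on bright backgrounds, white on dark ones (assumed rule)."""
    r, g, b = background_rgb
    luminance = 0.299 * r + 0.587 * g + 0.114 * b  # ITU-R BT.601 weights
    return (0, 0, 0) if luminance > threshold else (255, 255, 255)


print(yolo_line_to_xyxy("1 0.5 0.5 0.25 0.5", 640, 480))  # (1, 240.0, 120.0, 400.0, 360.0)
print(text_color_for((255, 255, 0)))  # (0, 0, 0): yellow is bright
```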

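Convert Segmentation Masks into YOLO Format

Use this to convert a dataset of segmentation mask images into the Ultralytics YOLO segmentation format. The function takes a directory of binary-format mask images and converts them into YOLO segmentation format.

The converted masks are saved in the specified output directory.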

from ultralytics.data.converter import convert_segment_masks_to_yolo_seg

# classes is the total number of classes in the dataset;
# for the COCO dataset there are 80 classes.
convert_segment_masks_to_yolo_seg(masks_dir="path/to/masks_dir", output_dir="path/to/output_dir", classes=80)

Convert COCO into YOLO Format

Use this to convert COCO JSON annotations into the YOLO format. For object detection (bounding box) datasets, set both use_segments and use_keypoints to False.

from ultralytics.data.converter import convert_coco

convert_coco(
    "coco/annotations/",
    use_segments=False,
    use_keypoints=False,
    cls91to80=True,
)

For additional information about the convert_coco function, visit the reference page.
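A COCO box is stored as [x_min, y_min, width, height] in pixels, whereas a YOLO detection label uses the box center, width, and height, each normalized by the image size. The sketch below shows only that per-box arithmetic; it does not cover reading the JSON files, the cls91to80 class remapping, or segments and keypoints. The function name coco_bbox_to_yolo is hypothetical.

```python
def coco_bbox_to_yolo(bbox, img_w, img_h):
    """Convert a COCO [x_min, y_min, w, h] pixel box to YOLO normalized [xc, yc, w, h]."""
    x_min, y_min, w, h = bbox
    xc = (x_min + w / 2) / img_w  # shift from the top-left corner to the center
    yc = (y_min + h / 2) / img_h
    return [xc, yc, w / img_w, h / img_h]


print(coco_bbox_to_yolo([100, 50, 200, 100], 640, 480))
# xc = 0.3125, yc ≈ 0.2083, w = 0.3125, h ≈ 0.2083
```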

Get Bounding Box Dimensions

import cv2

from ultralytics import YOLO
from ultralytics.utils.plotting import Annotator

model = YOLO("yolo26n.pt")  # Load a pretrained or fine-tuned model

# Process the image
source = cv2.imread("path/to/image.jpg")
results = model(source)

# Extract results
annotator = Annotator(source, example=model.names)

for box in results[0].boxes.xyxy.cpu():
    width, height, area = annotator.get_bbox_dimension(box)
    print(f"Bounding Box Width {width.item()}, Height {height.item()}, Area {area.item()}")
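For an (x1, y1, x2, y2) box, width and height are plain coordinate differences and the area is their product. The pure-Python sketch below computes the same three numbers without constructing an Annotator; the function name bbox_dimensions is hypothetical.

```python
def bbox_dimensions(box):
    """Return (width, height, area) for an (x1, y1, x2, y2) box in pixels."""
    x1, y1, x2, y2 = box
    width, height = x2 - x1, y2 - y1
    return width, height, width * height


print(bbox_dimensions((10, 20, 110, 70)))  # (100, 50, 5000)
```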

Convert Bounding Boxes to Segments

With existing x y w h bounding box data, convert to segments using the yolo_bbox2segment function. Organize the image and annotation files as follows:

data
|__ images
    ├─ 001.jpg
    ├─ 002.jpg
    ├─ ..
    └─ NNN.jpg
|__ labels
    ├─ 001.txt
    ├─ 002.txt
    ├─ ..
    └─ NNN.txt

from ultralytics.data.converter import yolo_bbox2segment

yolo_bbox2segment(
    im_dir="path/to/images",
    save_dir=None,  # saved to "labels-segment" in images directory
    sam_model="sam_b.pt",
)

Visit the yolo_bbox2segment reference page for more information about the function.
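yolo_bbox2segment relies on a SAM model to turn each box into an object-shaped polygon. For comparison, the crudest possible conversion writes the box's four corners as a polygon in the same normalized YOLO segment layout. The sketch below does exactly that; it is a placeholder-quality baseline with a hypothetical function name, not what yolo_bbox2segment produces.

```python
def bbox_line_to_corner_segment(line):
    """Turn a normalized 'cls xc yc w h' line into 'cls x1 y1 x2 y1 x2 y2 x1 y2'.

    The rectangle ignores the object's real shape; SAM-based conversion
    instead produces a contour that follows the object.
    """
    cls, xc, yc, w, h = line.split()
    xc, yc, w, h = map(float, (xc, yc, w, h))
    x1, y1, x2, y2 = xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2
    corners = [x1, y1, x2, y1, x2, y2, x1, y2]  # top-left, top-right, bottom-right, bottom-left
    return cls + " " + " ".join(f"{v:.4f}" for v in corners)


print(bbox_line_to_corner_segment("3 0.5 0.5 0.2 0.4"))
# 3 0.4000 0.3000 0.6000 0.3000 0.6000 0.7000 0.4000 0.7000
```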

Convert Segments to Bounding Boxes

If you have a dataset that uses the segmentation dataset format, you can easily convert its segments into upright (or horizontal) bounding boxes (x y w h format) with this function.

import numpy as np

from ultralytics.utils.ops import segments2boxes

segments = np.array(
    [
        [805, 392, 797, 400, ..., 808, 714, 808, 392],
        [115, 398, 113, 400, ..., 150, 400, 149, 298],
        [267, 412, 265, 413, ..., 300, 413, 299, 412],
    ]
)

segments2boxes([s.reshape(-1, 2) for s in segments])
# >>> array([[ 741.66, 631.12, 133.31, 479.25],
#            [ 146.81, 649.69, 185.62, 502.88],
#            [ 281.81, 636.19, 118.12, 448.88]],
#           dtype=float32)  # xywh bounding boxes

Visit the reference page to understand how this function works.
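Conceptually, each segment's upright box comes from the minimum and maximum of its x and y coordinates, then gets expressed as center x, center y, width, height. A pure-Python sketch of that idea follows; segments_to_xywh is a hypothetical name, and segments2boxes itself operates on NumPy arrays.

```python
def segments_to_xywh(segments):
    """Map each polygon [(x, y), ...] to an upright (xc, yc, w, h) box."""
    boxes = []
    for seg in segments:
        xs = [p[0] for p in seg]
        ys = [p[1] for p in seg]
        x1, y1, x2, y2 = min(xs), min(ys), max(xs), max(ys)  # tight xyxy extent
        boxes.append(((x1 + x2) / 2, (y1 + y2) / 2, x2 - x1, y2 - y1))
    return boxes


print(segments_to_xywh([[(10, 10), (50, 20), (30, 60)]]))  # [(30.0, 35.0, 40, 50)]
```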

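Utilities

Image Compression

Compresses a single image file to a reduced size while preserving its aspect ratio and quality. If the input image is smaller than the maximum dimension, it will not be resized.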

from pathlib import Path

from ultralytics.data.utils import compress_one_image

for f in Path("path/to/dataset").rglob("*.jpg"):
    compress_one_image(f)
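The resizing rule described above amounts to computing a scale factor from the longest side and only shrinking when that factor is below 1. The sketch below covers the size computation alone, assuming a maximum dimension of 1920 pixels; that default is an assumption here, so check compress_one_image's signature in your installed version. The name target_size is hypothetical.

```python
def target_size(width, height, max_dim=1920):
    """Return (width, height) after an aspect-preserving downscale, if one is needed."""
    r = max_dim / max(width, height)  # scale factor driven by the longest side
    if r >= 1:  # already within the limit: keep the original size
        return width, height
    return round(width * r), round(height * r)


print(target_size(4000, 3000))  # (1920, 1440)
print(target_size(800, 600))  # (800, 600)
```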

Auto-split Dataset

Automatically split a dataset into train/val/test splits and save the resulting splits into autosplit_*.txt files. This function uses random sampling, which is not applied when you use the fraction argument for training.

from ultralytics.data.split import autosplit

autosplit(
    path="path/to/images",
    weights=(0.9, 0.1, 0.0),  # (train, validation, test) fractional splits
    annotated_only=False,  # split only images with annotation file when True
)

See the reference page for additional details on this function.
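Weighted random splitting can be pictured as drawing a split for every image with probabilities given by (train, val, test). The stdlib sketch below illustrates that idea; the seeded random.Random, the split names, and the function name random_split are assumptions for illustration, and autosplit additionally writes the autosplit_*.txt files and can skip unlabeled images.

```python
import random


def random_split(files, weights=(0.9, 0.1, 0.0), seed=0):
    """Assign each file to train/val/test by weighted random sampling."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    names = ("train", "val", "test")
    splits = {name: [] for name in names}
    picks = rng.choices(range(3), weights=weights, k=len(files))
    for f, i in zip(files, picks):
        splits[names[i]].append(f)
    return splits


files = [f"img_{i:03d}.jpg" for i in range(100)]
splits = random_split(files)
print({k: len(v) for k, v in splits.items()})
```

A zero weight (here the test split) means that split always stays empty, and the same seed always reproduces the same assignment.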

Segment-polygon to Binary Mask

Convert a single polygon (as a list) to a binary mask of the specified image size. The polygon should be in the form [N, 2], where N is the number of (x, y) points defining the polygon contour.

Warning

N must always be even.

import numpy as np

from ultralytics.data.utils import polygon2mask

imgsz = (1080, 810)
polygon = np.array([805, 392, 797, 400, ..., 808, 714, 808, 392])  # (238, 2)

mask = polygon2mask(
    imgsz,  # tuple
    [polygon],  # input as list
    color=255,  # 8-bit binary
    downsample_ratio=1,
)
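Rasterizing a polygon means deciding, for each pixel, whether it lies inside the contour. The pure-Python sketch below does this with the even-odd (ray casting) rule sampled at pixel centers. It is an illustration only: polygon2mask's own rasterization and its downsample_ratio handling may differ at boundary pixels, and polygon_to_mask is a hypothetical name.

```python
def polygon_to_mask(polygon, height, width, color=255):
    """Rasterize a polygon [(x, y), ...] into a height x width nested-list mask."""
    mask = [[0] * width for _ in range(height)]
    n = len(polygon)
    for row in range(height):
        py = row + 0.5  # sample at the pixel center
        for col in range(width):
            px = col + 0.5
            inside = False
            j = n - 1
            for i in range(n):
                xi, yi = polygon[i]
                xj, yj = polygon[j]
                # Toggle when a ray from (px, py) toward +x crosses edge (j, i);
                # the straddle test also guarantees yj != yi (no division by zero)
                if (yi > py) != (yj > py):
                    x_cross = xi + (py - yi) * (xj - xi) / (yj - yi)
                    if px < x_cross:
                        inside = not inside
                j = i
            if inside:
                mask[row][col] = color
    return mask


square = [(1, 1), (4, 1), (4, 4), (1, 4)]
for r in polygon_to_mask(square, 5, 5, color=1):
    print(r)
```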

Bounding Boxes

Bounding Box (Horizontal) Instances

To manage bounding box data, the Bboxes class helps convert between box coordinate formats, scale box dimensions, calculate areas, include offsets, and more.

import numpy as np

from ultralytics.utils.instance import Bboxes

boxes = Bboxes(
    bboxes=np.array(
        [
            [22.878, 231.27, 804.98, 756.83],
            [48.552, 398.56, 245.35, 902.71],
            [669.47, 392.19, 809.72, 877.04],
            [221.52, 405.8, 344.98, 857.54],
            [0, 550.53, 63.01, 873.44],
            [0.0584, 254.46, 32.561, 324.87],
        ]
    ),
    format="xyxy",
)

boxes.areas()
# >>> array([ 4.1104e+05, 99216, 68000, 55772, 20347, 2288.5])

boxes.convert("xywh")
print(boxes.bboxes)
# >>> array(
#     [[ 413.93, 494.05, 782.1, 525.56],
#      [ 146.95, 650.63, 196.8, 504.15],
#      [ 739.6, 634.62, 140.25, 484.85],
#      [ 283.25, 631.67, 123.46, 451.74],
#      [ 31.505, 711.99, 63.01, 322.91],
#      [ 16.31, 289.67, 32.503, 70.41]]
# )

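See the Bboxes reference section for more attributes and methods.

Tip

Many of the following functions (and more) can be accessed through the Bboxes class, but if you prefer to work with the functions directly, see the next subsections on how to import them independently.

Scaling Boxes

When scaling an image up or down, you can scale the corresponding bounding box coordinates to match using ultralytics.utils.ops.scale_boxes.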

import cv2 as cv
import numpy as np

from ultralytics.utils.ops import scale_boxes

image = cv.imread("ultralytics/assets/bus.jpg")
h, w, c = image.shape
resized = cv.resize(image, None, fx=1.2, fy=1.2)  # scale both axes by 1.2
new_h, new_w, _ = resized.shape

xyxy_boxes = np.array(
    [
        [22.878, 231.27, 804.98, 756.83],
        [48.552, 398.56, 245.35, 902.71],
        [669.47, 392.19, 809.72, 877.04],
        [221.52, 405.8, 344.98, 857.54],
        [0, 550.53, 63.01, 873.44],
        [0.0584, 254.46, 32.561, 324.87],
    ]
)

new_boxes = scale_boxes(
    img1_shape=(h, w),  # original image dimensions
    boxes=xyxy_boxes,  # boxes from original image
    img0_shape=(new_h, new_w),  # resized image dimensions (scale to)
    ratio_pad=None,
    padding=False,
    xywh=False,
)

print(new_boxes)
# >>> array(
#     [[ 27.454, 277.52, 965.98, 908.2],
#      [ 58.262, 478.27, 294.42, 1083.3],
#      [ 803.36, 470.63, 971.66, 1052.4],
#      [ 265.82, 486.96, 413.98, 1029],
#      [ 0, 660.64, 75.612, 1048.1],
#      [ 0.0701, 305.35, 39.073, 389.84]]
# )
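Without letterbox padding, rescaling boxes between two image sizes reduces to multiplying x coordinates by the width ratio and y coordinates by the height ratio, then clipping to the target image. The pure-Python sketch below covers only that plain-resize case; scale_boxes additionally handles letterbox padding through ratio_pad and padding. The name rescale_xyxy is hypothetical.

```python
def rescale_xyxy(boxes, src_hw, dst_hw):
    """Rescale (x1, y1, x2, y2) boxes from an image of size src_hw to dst_hw.

    Assumes a plain resize (no letterbox padding); results are clipped to
    the destination image bounds.
    """
    (sh, sw), (dh, dw) = src_hw, dst_hw
    sx, sy = dw / sw, dh / sh  # per-axis scale factors
    out = []
    for x1, y1, x2, y2 in boxes:
        out.append(
            (
                min(max(x1 * sx, 0), dw),
                min(max(y1 * sy, 0), dh),
                min(max(x2 * sx, 0), dw),
                min(max(y2 * sy, 0), dh),
            )
        )
    return out


# 1080x810 image resized by 1.2 to 1296x972, as in the example above
print(rescale_xyxy([(10, 20, 100, 200)], (1080, 810), (1296, 972)))
```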

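Bounding Box Format Conversions

XYXY → XYWH

Convert bounding box coordinates from (x1, y1, x2, y2) format to (x, y, width, height) format, where (x1, y1) is the top-left corner, (x2, y2) is the bottom-right corner, and (x, y) in the output is the box center.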

import numpy as np

from ultralytics.utils.ops import xyxy2xywh

xyxy_boxes = np.array(
    [
        [22.878, 231.27, 804.98, 756.83],
        [48.552, 398.56, 245.35, 902.71],
        [669.47, 392.19, 809.72, 877.04],
        [221.52, 405.8, 344.98, 857.54],
        [0, 550.53, 63.01, 873.44],
        [0.0584, 254.46, 32.561, 324.87],
    ]
)

xywh = xyxy2xywh(xyxy_boxes)
print(xywh)
# >>> array(
#     [[ 413.93, 494.05, 782.1, 525.56],
#      [ 146.95, 650.63, 196.8, 504.15],
#      [ 739.6, 634.62, 140.25, 484.85],
#      [ 283.25, 631.67, 123.46, 451.74],
#      [ 31.505, 711.99, 63.01, 322.91],
#      [ 16.31, 289.67, 32.503, 70.41]]
# )
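The conversion is simple arithmetic: the center is the midpoint of the two corners and the size is their difference. The pure-Python sketch below applies it to the first box above; xyxy_to_xywh is a hypothetical name, and the library function operates on whole arrays or tensors at once.

```python
def xyxy_to_xywh(box):
    """(x1, y1, x2, y2) -> (x_center, y_center, width, height)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2, x2 - x1, y2 - y1)


xc, yc, w, h = xyxy_to_xywh((22.878, 231.27, 804.98, 756.83))
print(round(xc, 2), round(yc, 2), round(w, 2), round(h, 2))  # 413.93 494.05 782.1 525.56
```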

All Bounding Box Conversions

from ultralytics.utils.ops import (
    ltwh2xywh,
    ltwh2xyxy,
    xywh2ltwh,  # xywh → top-left corner, w, h
    xywh2xyxy,
    xywhn2xyxy,  # normalized → pixel
    xyxy2ltwh,  # xyxy → top-left corner, w, h
    xyxy2xywhn,  # pixel → normalized
)

for func in (ltwh2xywh, ltwh2xyxy, xywh2ltwh, xywh2xyxy, xywhn2xyxy, xyxy2ltwh, xyxy2xywhn):
    help(func)  # print function docstrings

Refer to each function's docstring or visit the ultralytics.utils.ops reference page to read more.
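Two of these formats as a quick mental model: ltwh stores the top-left corner plus width and height, and the n suffix (as in xywhn) means coordinates divided by the image width and height. The pure-Python sketch below implements both conversions for a single box; the function names are hypothetical, and the library's xywhn2xyxy also accepts padding offsets.

```python
def ltwh_to_xyxy(box):
    """(left, top, w, h) -> (x1, y1, x2, y2)."""
    left, top, w, h = box
    return (left, top, left + w, top + h)


def xywhn_to_xyxy(box, img_w, img_h):
    """Normalized (xc, yc, w, h) -> pixel (x1, y1, x2, y2)."""
    xc, yc, w, h = box
    return (
        (xc - w / 2) * img_w,
        (yc - h / 2) * img_h,
        (xc + w / 2) * img_w,
        (yc + h / 2) * img_h,
    )


print(ltwh_to_xyxy((10, 20, 30, 40)))  # (10, 20, 40, 60)
print(xywhn_to_xyxy((0.5, 0.5, 0.5, 0.25), 640, 480))  # (160.0, 180.0, 480.0, 300.0)
```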

Plotting

Annotation Utilities

Ultralytics includes an Annotator class for annotating various data types. It is best used with object detection bounding boxes, pose keypoints, and oriented bounding boxes.

Box Annotation

Python Examples using Ultralytics YOLO 🚀

import cv2 as cv
import numpy as np

from ultralytics.utils.plotting import Annotator, colors

names = {
    0: "person",
    5: "bus",
    11: "stop sign",
}

image = cv.imread("ultralytics/assets/bus.jpg")
ann = Annotator(
    image,
    line_width=None,  # default auto-size
    font_size=None,  # default auto-size
    font="Arial.ttf",  # must be ImageFont compatible
    pil=False,  # set True to draw with PIL; False uses OpenCV
)

xyxy_boxes = np.array(
    [
        [5, 22.878, 231.27, 804.98, 756.83],  # class-idx x1 y1 x2 y2
        [0, 48.552, 398.56, 245.35, 902.71],
        [0, 669.47, 392.19, 809.72, 877.04],
        [0, 221.52, 405.8, 344.98, 857.54],
        [0, 0, 550.53, 63.01, 873.44],
        [11, 0.0584, 254.46, 32.561, 324.87],
    ]
)

for nb, box in enumerate(xyxy_boxes):
    c_idx, *box = box
    label = f"{str(nb).zfill(2)}:{names.get(int(c_idx))}"
    ann.box_label(box, label, color=colors(c_idx, bgr=True))

image_with_bboxes = ann.result()

import cv2 as cv
import numpy as np

from ultralytics.utils.plotting import Annotator, colors

obb_names = {10: "small vehicle"}
obb_image = cv.imread("datasets/dota8/images/train/P1142__1024__0___824.jpg")
obb_boxes = np.array(
    [
        [0, 635, 560, 919, 719, 1087, 420, 803, 261],  # class-idx x1 y1 x2 y2 x3 y3 x4 y4
        [0, 331, 19, 493, 260, 776, 70, 613, -171],
        [9, 869, 161, 886, 147, 851, 101, 833, 115],
    ]
)

ann = Annotator(
    obb_image,
    line_width=None,  # default auto-size
    font_size=None,  # default auto-size
    font="Arial.ttf",  # must be ImageFont compatible
    pil=False,  # set True to draw with PIL; False uses OpenCV
)

for obb in obb_boxes:
    c_idx, *obb = obb
    obb = np.array(obb).reshape(-1, 4, 2).squeeze()  # 8 values -> 4 (x, y) corners
    label = f"{obb_names.get(int(c_idx))}"
    ann.box_label(
        obb,
        label,
        color=colors(c_idx, True),
    )

image_with_obb = ann.result()

Class names can be taken from model.names when working with detection results.

See the Annotator reference page for additional insight.

Ultralytics Sweep Annotation

Sweep annotation using Ultralytics utilities

import cv2
import numpy as np

from ultralytics import YOLO
from ultralytics.solutions.solutions import SolutionAnnotator
from ultralytics.utils.plotting import colors

# User-defined video path and model file
cap = cv2.VideoCapture("path/to/video.mp4")
model = YOLO(model="yolo26s-seg.pt")  # Model file, e.g., yolo26s.pt or yolo26m-seg.pt

if not cap.isOpened():
    print("Error: Could not open video.")
    exit()

# Initialize the video writer object.
w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
video_writer = cv2.VideoWriter("ultralytics.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

masks = None  # Initialize variable to store masks data
f = 0  # Initialize frame count variable for enabling mouse event.
line_x = w  # Initial x-position of the sweep line (the right edge of the frame).
dragging = False  # Initialize bool variable for line dragging.
classes = model.names  # Store model class names for plotting.
window_name = "Ultralytics Sweep Annotator"


def drag_line(event, x, _, flags, param):
    """Mouse callback function to enable dragging a vertical sweep line across the video frame."""
    global line_x, dragging
    if event == cv2.EVENT_LBUTTONDOWN or (flags & cv2.EVENT_FLAG_LBUTTON):
        line_x = max(0, min(x, w))
        dragging = True


while cap.isOpened():  # Loop over the video capture object.
    ret, im0 = cap.read()
    if not ret:
        break
    f = f + 1  # Increment frame count.
    count = 0  # Re-initialize count variable on every frame for precise counts.
    results = model.track(im0, persist=True)[0]

    if f == 1:
        cv2.namedWindow(window_name)
        cv2.setMouseCallback(window_name, drag_line)

    annotator = SolutionAnnotator(im0)

    if results.boxes.is_track:
        if results.masks is not None:
            masks = [np.array(m, dtype=np.int32) for m in results.masks.xy]

        boxes = results.boxes.xyxy.tolist()
        track_ids = results.boxes.id.int().cpu().tolist()
        clss = results.boxes.cls.cpu().tolist()

        for mask, box, cls, t_id in zip(masks or [None] * len(boxes), boxes, clss, track_ids):
            color = colors(t_id, True)  # Assign different color to each tracked object.
            label = f"{classes[cls]}:{t_id}"
            if mask is not None and mask.size > 0:
                if box[0] > line_x:
                    count += 1
                    cv2.polylines(im0, [mask], True, color, 2)
                    x, y = mask.min(axis=0)
                    (w_m, _), _ = cv2.getTextSize(label, cv2.FONT_HERSHEY_SIMPLEX, 0.5, 1)
                    cv2.rectangle(im0, (x, y - 20), (x + w_m, y), color, -1)
                    cv2.putText(im0, label, (x, y - 5), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 1)
            else:
                if box[0] > line_x:
                    count += 1
                    annotator.box_label(box=box, color=color, label=label)

    # Generate draggable sweep line
    annotator.sweep_annotator(line_x=line_x, line_y=h, label=f"COUNT:{count}")

    cv2.imshow(window_name, im0)
    video_writer.write(im0)
    if cv2.waitKey(1) & 0xFF == ord("q"):
        break

# Release the resources
cap.release()
video_writer.release()
cv2.destroyAllWindows()

Find additional information about the sweep_annotator method in the reference section.
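The counting rule in the loop above is that an object contributes to COUNT when the left edge of its box (box[0]) lies to the right of the sweep line, so a box that straddles the line is not counted. The sketch below isolates just that rule from OpenCV so it can be checked on its own; count_right_of_line is a hypothetical helper.

```python
def count_right_of_line(boxes, line_x):
    """Count xyxy boxes whose left edge x1 is strictly greater than line_x."""
    return sum(1 for x1, _, _, _ in boxes if x1 > line_x)


boxes = [(10, 0, 50, 40), (120, 5, 200, 80), (300, 10, 360, 90)]
print(count_right_of_line(boxes, 100))  # 2
print(count_right_of_line(boxes, 640))  # 0: line at the right edge of a 640 px frame
```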

Adaptive Label Annotation

Warning

Starting from Ultralytics v8.3.167, circle_label and text_label have been replaced by a unified adaptive_label function. You can now specify the annotation type using the shape argument:

- Rectangle: annotator.adaptive_label(box, label=names[int(cls)], color=colors(cls, True), shape="rect")
- Circle: annotator.adaptive_label(box, label=names[int(cls)], color=colors(cls, True), shape="circle")

Watch: In-Depth Guide to Text & Circle Annotations with Python Live Demos | Ultralytics Annotations 🚀

Adaptive label annotation using Ultralytics utilities

import cv2

from ultralytics import YOLO
from ultralytics.solutions.solutions import SolutionAnnotator
from ultralytics.utils.plotting import colors

model = YOLO("yolo26s.pt")
names = model.names
cap = cv2.VideoCapture("path/to/video.mp4")

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
writer = cv2.VideoWriter("Ultralytics circle annotation.avi", cv2.VideoWriter_fourcc(*"MJPG"), fps, (w, h))

while True:
    ret, im0 = cap.read()
    if not ret:
        break

    annotator = SolutionAnnotator(im0)
    results = model.predict(im0)[0]
    boxes = results.boxes.xyxy.cpu()
    clss = results.boxes.cls.cpu().tolist()

    for box, cls in zip(boxes, clss):
        annotator.adaptive_label(box, label=names[int(cls)], color=colors(cls, True), shape="circle")

    writer.write(im0)
    cv2.imshow("Ultralytics circle annotation", im0)

    if cv2.waitKey(1) & 0xFF == ord("q"):
        break

writer.release()
cap.release()
cv2.destroyAllWindows()

import cv2

from ultralytics import YOLO
from ultralytics.solutions.solutions import SolutionAnnotator
from ultralytics.utils.plotting import colors

model = YOLO("yolo26s.pt")
names = model.names
cap = cv2.VideoCapture("path/to/video.mp4")

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
writer = cv2.VideoWriter("Ultralytics text annotation.avi", cv2.VideoWriter_fourcc(*"MJPG"), fps, (w, h))

while True:
    ret, im0 = cap.read()
    if not ret:
        break

    annotator = SolutionAnnotator(im0)
    results = model.predict(im0)[0]
    boxes = results.boxes.xyxy.cpu()
    clss = results.boxes.cls.cpu().tolist()

    for box, cls in zip(boxes, clss):
        annotator.adaptive_label(box, label=names[int(cls)], color=colors(cls, True), shape="rect")

    writer.write(im0)
    cv2.imshow("Ultralytics text annotation", im0)

    if cv2.waitKey(1) & 0xFF == ord("q"):
        break

writer.release()
cap.release()
cv2.destroyAllWindows()

See the SolutionAnnotator reference page for additional insight.

Miscellaneous

Code Profiling

Measure how long code takes to run or process, either with a with statement or as a decorator.

from ultralytics.utils.ops import Profile

with Profile(device="cuda:0") as dt:
    pass  # operation to measure

print(dt)
# >>> "Elapsed time is 9.5367431640625e-07 s"
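The same "with block or decorator" pattern can be sketched with the standard library alone. The class below builds on contextlib.ContextDecorator and time.perf_counter; unlike Profile, it does not synchronize a CUDA device before reading the clock, so it only suits CPU-side timing. The name SimpleTimer is hypothetical.

```python
import time
from contextlib import ContextDecorator


class SimpleTimer(ContextDecorator):
    """Measure elapsed wall-clock time as a context manager or decorator."""

    def __init__(self):
        self.t = 0.0  # accumulated seconds across all uses
        self.dt = 0.0  # duration of the most recent use

    def __enter__(self):
        self.start = time.perf_counter()
        return self

    def __exit__(self, *exc):
        self.dt = time.perf_counter() - self.start
        self.t += self.dt
        return False  # do not suppress exceptions

    def __str__(self):
        return f"Elapsed time is {self.t} s"


with SimpleTimer() as dt:
    sum(range(100_000))  # operation to measure
print(dt)

timer = SimpleTimer()


@timer  # the decorated function reuses this instance, so time accumulates
def work():
    return sum(range(10_000))


work()
work()
print(timer)  # total time for both calls
```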

Ultralytics Supported Formats

Need to use the image or video formats supported by Ultralytics programmatically? Use these constants:

from ultralytics.data.utils import IMG_FORMATS, VID_FORMATS

print(IMG_FORMATS)
# {'avif', 'bmp', 'dng', 'heic', 'heif', 'jp2', 'jpeg', 'jpeg2000', 'jpg', 'mpo', 'png', 'tif', 'tiff', 'webp'}

print(VID_FORMATS)
# {'asf', 'avi', 'gif', 'm4v', 'mkv', 'mov', 'mp4', 'mpeg', 'mpg', 'ts', 'wmv', 'webm'}
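A typical use of these constants is filtering files by extension. The sketch below does this with a hard-coded subset of the image extensions listed above so it runs without ultralytics installed; in real code you would import IMG_FORMATS instead. The helper name is_image and the sample file names are hypothetical.

```python
from pathlib import Path

# Subset of IMG_FORMATS copied for a dependency-free example
IMAGE_EXTS = {"bmp", "jpeg", "jpg", "png", "tif", "tiff", "webp"}


def is_image(path):
    """True if the file suffix (case-insensitive, without the dot) is a known image format."""
    return Path(path).suffix[1:].lower() in IMAGE_EXTS


files = ["a.JPG", "b.png", "notes.txt", "clip.mp4", "archive.tar.gz"]
print([f for f in files if is_image(f)])  # ['a.JPG', 'b.png']
```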

Make Divisible

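Rounds x up to the nearest whole number that is evenly divisible by y, that is, the smallest multiple of y that is greater than or equal to x.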

from ultralytics.utils.ops import make_divisible

make_divisible(7, 3)
# >>> 9
make_divisible(7, 2)
# >>> 8
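Both examples match rounding x up to the next multiple of the divisor, i.e. ceil(x / y) * y. The sketch below implements that formula; make_divisible_sketch is a hypothetical name, not the library function.

```python
import math


def make_divisible_sketch(x, divisor):
    """Smallest multiple of divisor that is >= x."""
    return math.ceil(x / divisor) * divisor


print(make_divisible_sketch(7, 3))  # 9
print(make_divisible_sketch(7, 2))  # 8
print(make_divisible_sketch(64, 8))  # 64 (already divisible)
```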

FAQ

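What utilities are included in the Ultralytics package to enhance machine learning workflows?

The Ultralytics package includes utilities designed to streamline and optimize machine learning workflows. Key utilities include auto-annotation for labeling datasets, converting COCO to YOLO format with convert_coco, compressing images, and automatically splitting datasets. These tools reduce manual effort, ensure consistency, and improve data processing efficiency.

How can I use Ultralytics to auto-label datasets?

If you have a pretrained Ultralytics YOLO object detection model, you can use it with the SAM model to auto-annotate your dataset in segmentation format. Here is an example: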

from ultralytics.data.annotator import auto_annotate

auto_annotate(
    data="path/to/new/data",
    det_model="yolo26n.pt",
    sam_model="mobile_sam.pt",
    device="cuda",
    output_dir="path/to/save_labels",
)

For more details, check the auto_annotate reference section.

How do I convert COCO dataset annotations to YOLO format in Ultralytics?

To convert COCO JSON annotations into YOLO format for object detection, you can use the convert_coco utility. Here is a sample code snippet:

from ultralytics.data.converter import convert_coco

convert_coco(
    "coco/annotations/",
    use_segments=False,
    use_keypoints=False,
    cls91to80=True,
)

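For more information, visit the convert_coco reference page.

What is the purpose of the YOLO Data Explorer in the Ultralytics package?

The YOLO Explorer is a powerful tool introduced in the 8.1.0 update to enhance dataset understanding. It lets you use text queries to find object instances in your dataset, making it easier to analyze and manage your data. It provides valuable insights into dataset composition and distribution, which helps improve model training and performance.

How can I convert bounding boxes to segments in Ultralytics?

To convert existing bounding box data (in x y w h format) to segments, you can use the yolo_bbox2segment function. Organize your files with separate directories for images and labels.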

from ultralytics.data.converter import yolo_bbox2segment

yolo_bbox2segment(
    im_dir="path/to/images",
    save_dir=None,  # saved to "labels-segment" in the images directory
    sam_model="sam_b.pt",
)

For more information, visit the yolo_bbox2segment reference page.