TFLite、ONNX、CoreML、TensorRT 导出

📚 本指南介绍了如何将训练好的 YOLOv5 🚀 模型从 PyTorch 导出为各种部署格式,包括 ONNX、TensorRT、CoreML 等。

开始之前

克隆仓库并在 Python>=3.8.0 环境中安装 requirements.txt,包括 PyTorch>=1.8。模型和数据集会自动从最新的 YOLOv5 版本下载。

git clone https://github.com/ultralytics/yolov5 # clone

cd yolov5

pip install -r requirements.txt # install

关于 TensorRT 导出示例(需要 GPU),请参阅我们的 Colab notebook 附录部分。

支持的导出格式

YOLOv5 推理正式支持 12 种格式:

| 格式 | export.py --include | 模型 |

|---|---|---|

| PyTorch | - | yolov5s.pt |

| TorchScript | torchscript | yolov5s.torchscript |

| ONNX | onnx | yolov5s.onnx |

| OpenVINO | openvino | yolov5s_openvino_model/ |

| TensorRT | engine | yolov5s.engine |

| CoreML | coreml | yolov5s.mlmodel |

| TensorFlow SavedModel | saved_model | yolov5s_saved_model/ |

| TensorFlow GraphDef | pb | yolov5s.pb |

| TensorFlow Lite | tflite | yolov5s.tflite |

| TensorFlow Edge TPU | edgetpu | yolov5s_edgetpu.tflite |

| TensorFlow.js | tfjs | yolov5s_web_model/ |

| PaddlePaddle | paddle | yolov5s_paddle_model/ |

基准测试

以下基准测试在带有 YOLOv5 教程 notebook 的 Colab Pro 上运行 。要重现:

python benchmarks.py --weights yolov5s.pt --imgsz 640 --device 0

Colab Pro V100 GPU

benchmarks: weights=/content/yolov5/yolov5s.pt, imgsz=640, batch_size=1, data=/content/yolov5/data/coco128.yaml, device=0, half=False, test=False

Checking setup...

YOLOv5 🚀 v6.1-135-g7926afc torch 1.10.0+cu111 CUDA:0 (Tesla V100-SXM2-16GB, 16160MiB)

Setup complete ✅ (8 CPUs, 51.0 GB RAM, 46.7/166.8 GB disk)

Benchmarks complete (458.07s)

Format mAP@0.5:0.95 Inference time (ms)

0 PyTorch 0.4623 10.19

1 TorchScript 0.4623 6.85

2 ONNX 0.4623 14.63

3 OpenVINO NaN NaN

4 TensorRT 0.4617 1.89

5 CoreML NaN NaN

6 TensorFlow SavedModel 0.4623 21.28

7 TensorFlow GraphDef 0.4623 21.22

8 TensorFlow Lite NaN NaN

9 TensorFlow Edge TPU NaN NaN

10 TensorFlow.js NaN NaN

Colab Pro CPU

benchmarks: weights=/content/yolov5/yolov5s.pt, imgsz=640, batch_size=1, data=/content/yolov5/data/coco128.yaml, device=cpu, half=False, test=False

Checking setup...

YOLOv5 🚀 v6.1-135-g7926afc torch 1.10.0+cu111 CPU

Setup complete ✅ (8 CPUs, 51.0 GB RAM, 41.5/166.8 GB disk)

Benchmarks complete (241.20s)

Format mAP@0.5:0.95 Inference time (ms)

0 PyTorch 0.4623 127.61

1 TorchScript 0.4623 131.23

2 ONNX 0.4623 69.34

3 OpenVINO 0.4623 66.52

4 TensorRT NaN NaN

5 CoreML NaN NaN

6 TensorFlow SavedModel 0.4623 123.79

7 TensorFlow GraphDef 0.4623 121.57

8 TensorFlow Lite 0.4623 316.61

9 TensorFlow Edge TPU NaN NaN

10 TensorFlow.js NaN NaN

导出已训练的 YOLOv5 模型

此命令将预训练的 YOLOv5s 模型导出为 TorchScript 和 ONNX 格式。 yolov5s.pt 是 'small' 模型,是可用的第二小模型。其他选项包括 yolov5n.pt, yolov5m.pt, yolov5l.pt 和 yolov5x.pt,以及它们的 P6 版本,例如 yolov5s6.pt 或您自己的自定义训练检查点,例如 runs/exp/weights/best.pt。有关所有可用模型的详细信息,请参阅我们的 README 表格.

python export.py --weights yolov5s.pt --include torchscript onnx

提示

添加 --half 以 FP16 半精度导出模型 精度 为了更小的文件体积

输出:

export: data=data/coco128.yaml, weights=['yolov5s.pt'], imgsz=[640, 640], batch_size=1, device=cpu, half=False, inplace=False, train=False, keras=False, optimize=False, int8=False, dynamic=False, simplify=False, opset=12, verbose=False, workspace=4, nms=False, agnostic_nms=False, topk_per_class=100, topk_all=100, iou_thres=0.45, conf_thres=0.25, include=['torchscript', 'onnx']

YOLOv5 🚀 v6.2-104-ge3e5122 Python-3.8.0 torch-1.12.1+cu113 CPU

Downloading https://github.com/ultralytics/yolov5/releases/download/v6.2/yolov5s.pt to yolov5s.pt...

100% 14.1M/14.1M [00:00<00:00, 274MB/s]

Fusing layers...

YOLOv5s summary: 213 layers, 7225885 parameters, 0 gradients

PyTorch: starting from yolov5s.pt with output shape (1, 25200, 85) (14.1 MB)

TorchScript: starting export with torch 1.12.1+cu113...

TorchScript: export success ✅ 1.7s, saved as yolov5s.torchscript (28.1 MB)

ONNX: starting export with onnx 1.12.0...

ONNX: export success ✅ 2.3s, saved as yolov5s.onnx (28.0 MB)

Export complete (5.5s)

Results saved to /content/yolov5

Detect: python detect.py --weights yolov5s.onnx

Validate: python val.py --weights yolov5s.onnx

PyTorch Hub: model = torch.hub.load('ultralytics/yolov5', 'custom', 'yolov5s.onnx')

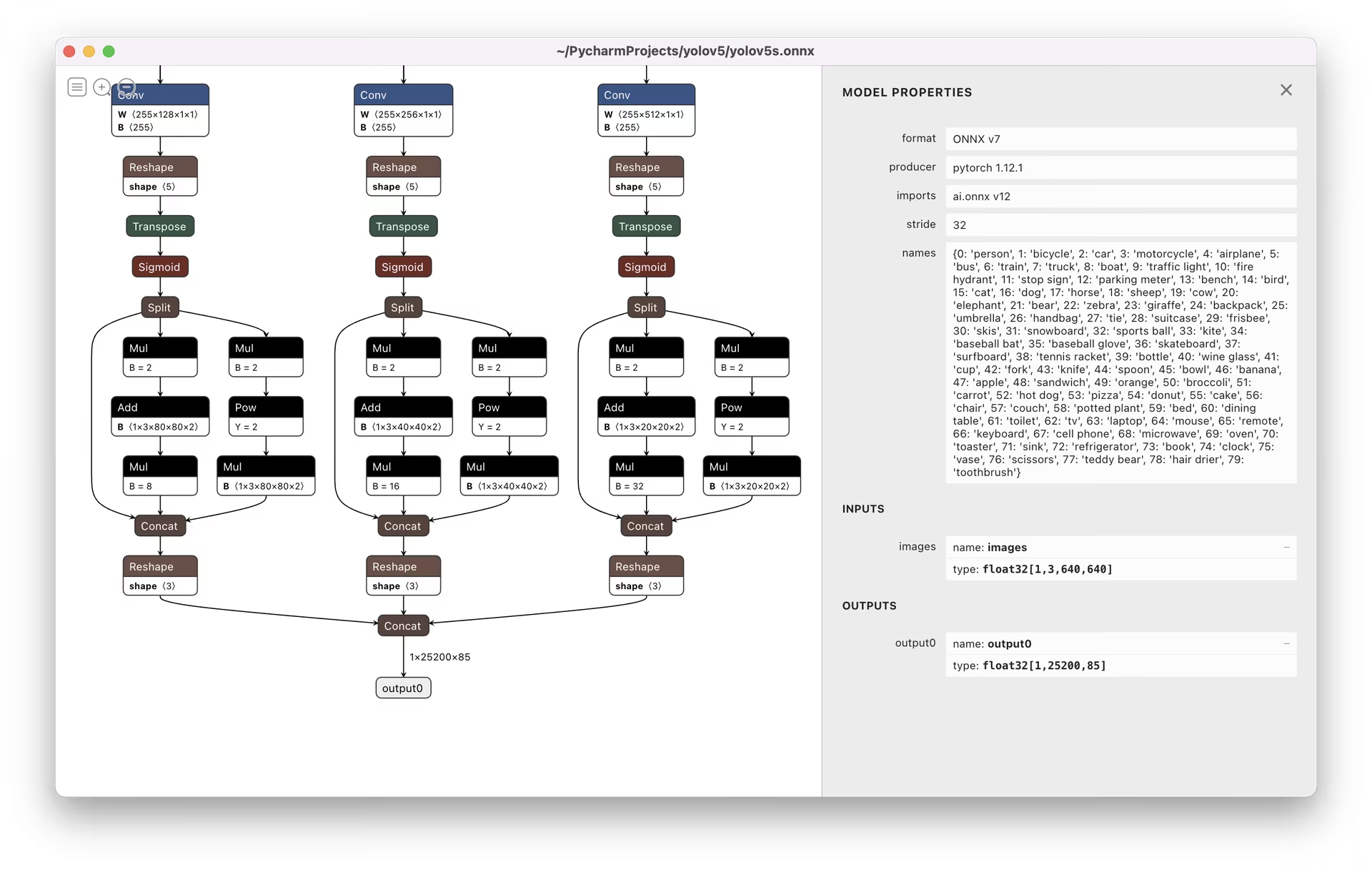

Visualize: https://netron.app/

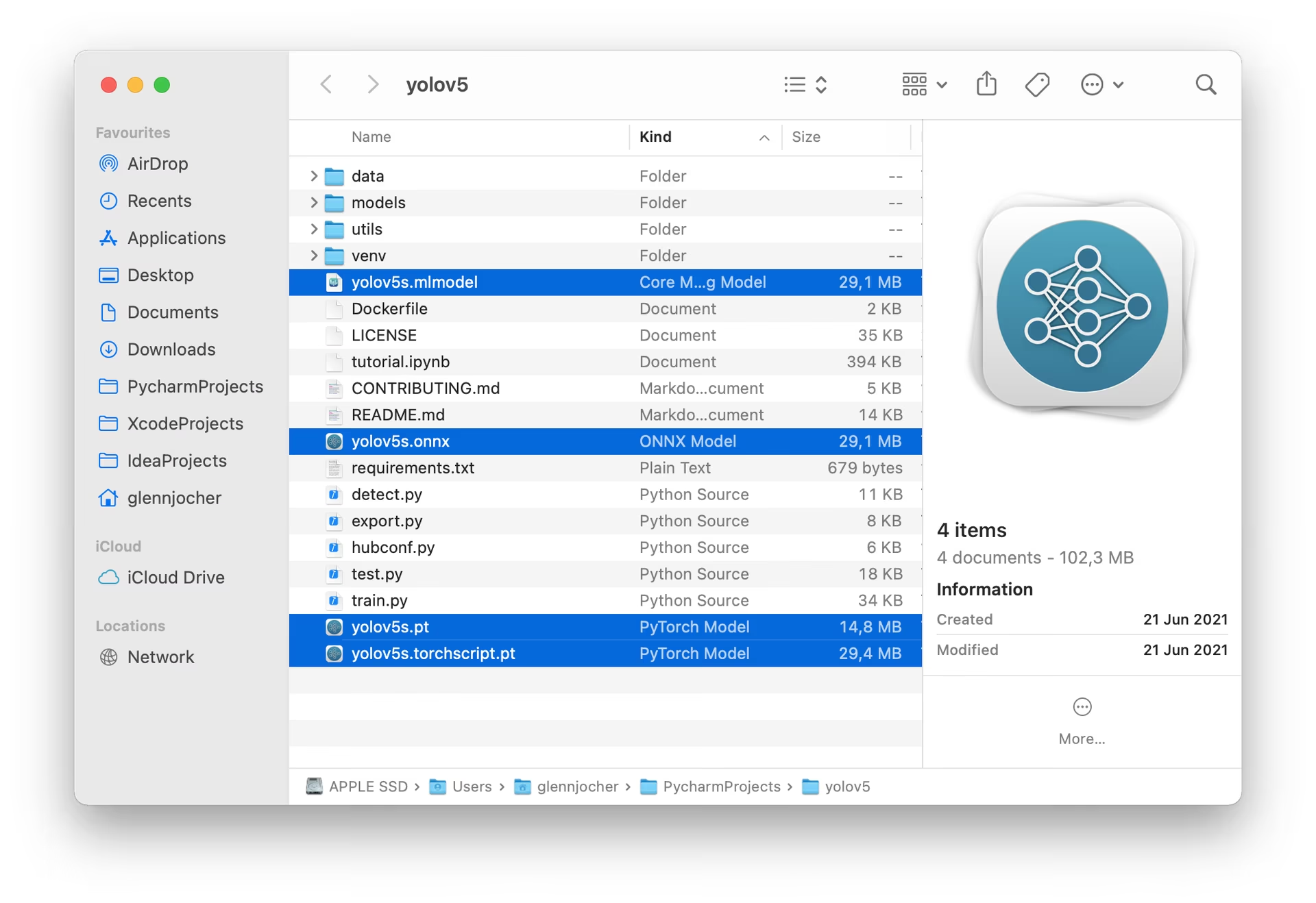

导出的3个模型将与原始PyTorch模型保存在一起:

推荐使用 Netron Viewer 来可视化导出的模型:

导出的模型使用示例

detect.py 运行导出的模型进行推理:

python detect.py --weights yolov5s.pt # PyTorch

python detect.py --weights yolov5s.torchscript # TorchScript

python detect.py --weights yolov5s.onnx # ONNX Runtime or OpenCV DNN with dnn=True

python detect.py --weights yolov5s_openvino_model # OpenVINO

python detect.py --weights yolov5s.engine # TensorRT

python detect.py --weights yolov5s.mlmodel # CoreML (macOS only)

python detect.py --weights yolov5s_saved_model # TensorFlow SavedModel

python detect.py --weights yolov5s.pb # TensorFlow GraphDef

python detect.py --weights yolov5s.tflite # TensorFlow Lite

python detect.py --weights yolov5s_edgetpu.tflite # TensorFlow Edge TPU

python detect.py --weights yolov5s_paddle_model # PaddlePaddle

val.py 运行导出的模型进行验证:

python val.py --weights yolov5s.pt # PyTorch

python val.py --weights yolov5s.torchscript # TorchScript

python val.py --weights yolov5s.onnx # ONNX Runtime or OpenCV DNN with dnn=True

python val.py --weights yolov5s_openvino_model # OpenVINO

python val.py --weights yolov5s.engine # TensorRT

python val.py --weights yolov5s.mlmodel # CoreML (macOS Only)

python val.py --weights yolov5s_saved_model # TensorFlow SavedModel

python val.py --weights yolov5s.pb # TensorFlow GraphDef

python val.py --weights yolov5s.tflite # TensorFlow Lite

python val.py --weights yolov5s_edgetpu.tflite # TensorFlow Edge TPU

python val.py --weights yolov5s_paddle_model # PaddlePaddle

将导出的 YOLOv5 模型与 PyTorch Hub 结合使用:

import torch

# Model

model = torch.hub.load("ultralytics/yolov5", "custom", "yolov5s.pt")

model = torch.hub.load("ultralytics/yolov5", "custom", "yolov5s.torchscript") # TorchScript

model = torch.hub.load("ultralytics/yolov5", "custom", "yolov5s.onnx") # ONNX Runtime

model = torch.hub.load("ultralytics/yolov5", "custom", "yolov5s_openvino_model") # OpenVINO

model = torch.hub.load("ultralytics/yolov5", "custom", "yolov5s.engine") # TensorRT

model = torch.hub.load("ultralytics/yolov5", "custom", "yolov5s.mlmodel") # CoreML (macOS Only)

model = torch.hub.load("ultralytics/yolov5", "custom", "yolov5s_saved_model") # TensorFlow SavedModel

model = torch.hub.load("ultralytics/yolov5", "custom", "yolov5s.pb") # TensorFlow GraphDef

model = torch.hub.load("ultralytics/yolov5", "custom", "yolov5s.tflite") # TensorFlow Lite

model = torch.hub.load("ultralytics/yolov5", "custom", "yolov5s_edgetpu.tflite") # TensorFlow Edge TPU

model = torch.hub.load("ultralytics/yolov5", "custom", "yolov5s_paddle_model") # PaddlePaddle

# Images

img = "https://ultralytics.com/images/zidane.jpg" # or file, Path, PIL, OpenCV, numpy, list

# Inference

results = model(img)

# Results

results.print() # or .show(), .save(), .crop(), .pandas(), etc.

OpenCV DNN推理

使用 ONNX 模型的 OpenCV 推理:

python export.py --weights yolov5s.pt --include onnx

python detect.py --weights yolov5s.onnx --dnn # detect

python val.py --weights yolov5s.onnx --dnn # validate

C++ 推理

YOLOv5 OpenCV DNN C++ 在导出的 ONNX 模型上的推理示例:

- https://github.com/Hexmagic/ONNX-yolov5/blob/master/src/test.cpp

- https://github.com/doleron/yolov5-opencv-cpp-python

YOLOv5 OpenVINO C++ 推理示例:

- https://github.com/dacquaviva/yolov5-openvino-cpp-python

- https://github.com/UNeedCryDear/yolov5-seg-opencv-dnn-cpp

TensorFlow.js Web 浏览器推理

支持的环境

Ultralytics 提供一系列即用型环境,每个环境都预装了必要的依赖项,如 CUDA、CUDNN、Python 和 PyTorch,以快速启动您的项目。

- 免费 GPU Notebooks:

- Google Cloud:GCP 快速入门指南

- Amazon:AWS 快速入门指南

- Azure:AzureML 快速入门指南

- Docker: Docker 快速入门指南

项目状态

此徽章表示所有 YOLOv5 GitHub Actions 持续集成 (CI) 测试均已成功通过。这些 CI 测试严格检查 YOLOv5 在各个关键方面的功能和性能:训练、验证、推理、导出 和 基准测试。它们确保在 macOS、Windows 和 Ubuntu 上运行的一致性和可靠性,测试每 24 小时进行一次,并在每次提交新内容时进行。