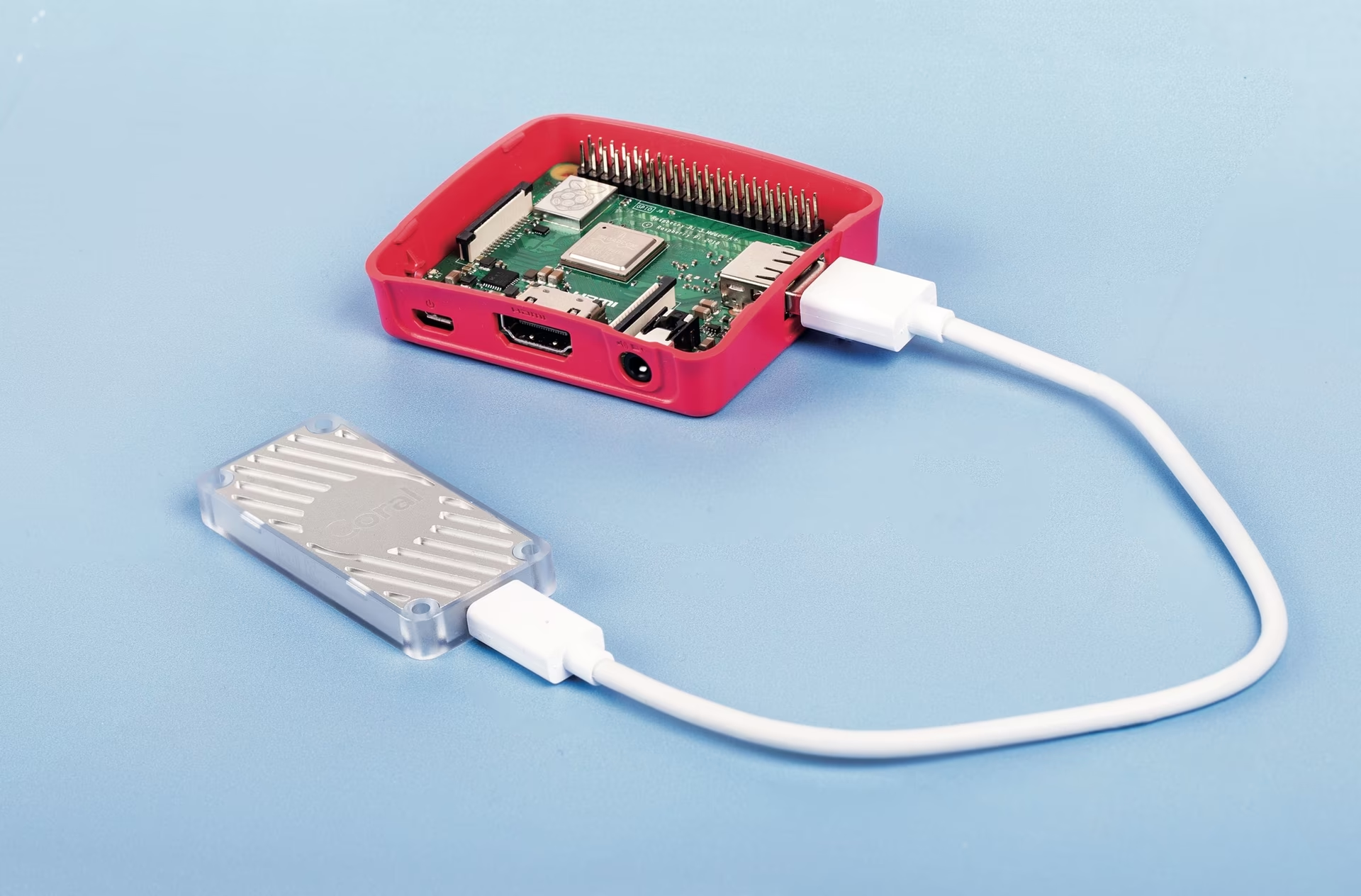

Coral Edge TPU on a Raspberry Pi with Ultralytics YOLO26 🚀

What is a Coral Edge TPU?

The Coral Edge TPU is a compact device that adds an Edge TPU coprocessor to your system. It enables low-power, high-performance ML inference for TensorFlow Lite models. Read more at the Coral Edge TPU home page.

Watch: How to Run Inference on Raspberry Pi using Google Coral Edge TPU

Boost Raspberry Pi Model Performance with Coral Edge TPU

Many people want to run their models on embedded or mobile devices such as the Raspberry Pi, since these devices are very power efficient and suit many different applications. However, inference performance on them is usually poor, even with formats like ONNX or OpenVINO. The Coral Edge TPU addresses this problem: it works with a Raspberry Pi and greatly accelerates inference.

Edge TPU on Raspberry Pi with TensorFlow Lite (New)⭐

The existing Coral guide on using the Edge TPU with a Raspberry Pi is outdated, and the current Coral Edge TPU runtime builds no longer work with current TensorFlow Lite runtime versions. In addition, Google seems to have completely abandoned the Coral project: there were no updates between 2021 and 2025. This guide shows you how to get the Edge TPU working with the latest versions of the TensorFlow Lite runtime and an updated Coral Edge TPU runtime on a Raspberry Pi single-board computer (SBC).

Prerequisites

- Raspberry Pi 4B (2GB or more recommended) or Raspberry Pi 5 (Recommended)

- Raspberry Pi OS Bullseye/Bookworm (64-bit) with desktop (Recommended)

- Coral USB Accelerator

- A non-ARM based platform for exporting an Ultralytics PyTorch model

Installation Walkthrough

This guide assumes that you already have a working Raspberry Pi OS install and have installed ultralytics and all dependencies. To get ultralytics installed, visit the quickstart guide to get set up before continuing here.

Installing the Edge TPU runtime

First, we need to install the Edge TPU runtime. There are many different versions available, so you need to choose the right version for your operating system. The high-frequency version runs the Edge TPU at a higher clock speed, which improves performance. However, it might result in Edge TPU thermal throttling, so it is recommended to have some sort of cooling mechanism in place.

| Raspberry Pi OS | High-frequency mode | Version to download |
|---|---|---|
| Bullseye 32-bit | No | libedgetpu1-std_ ... .bullseye_armhf.deb |
| Bullseye 64-bit | No | libedgetpu1-std_ ... .bullseye_arm64.deb |
| Bullseye 32-bit | Yes | libedgetpu1-max_ ... .bullseye_armhf.deb |
| Bullseye 64-bit | Yes | libedgetpu1-max_ ... .bullseye_arm64.deb |
| Bookworm 32-bit | No | libedgetpu1-std_ ... .bookworm_armhf.deb |
| Bookworm 64-bit | No | libedgetpu1-std_ ... .bookworm_arm64.deb |
| Bookworm 32-bit | Yes | libedgetpu1-max_ ... .bookworm_armhf.deb |
| Bookworm 64-bit | Yes | libedgetpu1-max_ ... .bookworm_arm64.deb |

Download the latest version from here.
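The table lookup can also be done in code. The following is a minimal sketch, not part of the Coral or Ultralytics tooling: the function names `edgetpu_package_pattern` and `detect_codename_and_arch` are hypothetical, and the sketch assumes that `/etc/os-release` provides `VERSION_CODENAME` and that `dpkg --print-architecture` reports `armhf` or `arm64`. The elided version part of the file name is left as `...`, exactly as in the table.

```python
import subprocess
from pathlib import Path


def edgetpu_package_pattern(codename: str, arch: str, high_frequency: bool = False) -> str:
    """Return the .deb file name pattern from the table for an OS release and mode."""
    if codename not in {"bullseye", "bookworm"}:
        raise ValueError(f"No table entry for OS release {codename!r}")
    if arch not in {"armhf", "arm64"}:
        raise ValueError(f"No table entry for architecture {arch!r}")
    variant = "max" if high_frequency else "std"  # max = high-frequency mode
    # The version part is elided in the table, so it stays elided here.
    return f"libedgetpu1-{variant}_ ... .{codename}_{arch}.deb"


def detect_codename_and_arch() -> tuple:
    """Read the OS codename and the userspace architecture (assumes a Debian-based OS)."""
    codename = ""
    for line in Path("/etc/os-release").read_text().splitlines():
        if line.startswith("VERSION_CODENAME="):
            codename = line.split("=", 1)[1].strip().strip('"')
    arch = subprocess.run(
        ["dpkg", "--print-architecture"], capture_output=True, text=True, check=True
    ).stdout.strip()
    return codename, arch


# Example with fixed values; on the Pi itself you could pass
# *detect_codename_and_arch() instead.
print(edgetpu_package_pattern("bookworm", "arm64", high_frequency=False))
```

Using `dpkg --print-architecture` rather than the kernel architecture is a deliberate choice in this sketch: a 32-bit userspace can run on a 64-bit kernel, and the package has to match the userspace.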

After downloading the file, you can install it with the following command:

sudo dpkg -i path/to/package.deb

After installing the runtime, plug your Coral Edge TPU into a USB 3.0 port on the Raspberry Pi so the new udev rule can take effect.
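To confirm that the accelerator is visible after plugging it in, you could scan the output of `lsusb`. The sketch below is an illustration only. It assumes the Coral USB Accelerator enumerates under the USB IDs `1a6e:089a` (before the runtime has initialized it) or `18d1:9302` (afterwards); verify these IDs on your own device. The helper names are hypothetical.

```python
import subprocess

# Assumed USB IDs for the Coral USB Accelerator; check them against your own lsusb output.
CORAL_USB_IDS = {
    "1a6e:089a": "not yet initialized by the runtime",
    "18d1:9302": "initialized by the runtime",
}


def find_coral_devices(lsusb_output: str) -> list:
    """Return the lsusb lines that mention one of the assumed Coral USB IDs."""
    matches = []
    for line in lsusb_output.splitlines():
        lowered = line.lower()
        if any(usb_id in lowered for usb_id in CORAL_USB_IDS):
            matches.append(line.strip())
    return matches


def list_attached_corals() -> list:
    """Run lsusb (must be installed) and filter its output."""
    output = subprocess.run(["lsusb"], capture_output=True, text=True, check=True).stdout
    return find_coral_devices(output)


# Example with a captured lsusb output instead of a live call:
sample = "Bus 002 Device 003: ID 18d1:9302 Google Inc.\nBus 001 Device 002: ID 2109:3431 VIA Labs, Inc. Hub"
print(find_coral_devices(sample))
```

An empty list suggests the device is not connected or is listed under a different ID than the ones assumed here.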

Important

If you already have the Coral Edge TPU runtime installed, uninstall it using the following command.

# If you installed the standard version

sudo apt remove libedgetpu1-std

# If you installed the high-frequency version

sudo apt remove libedgetpu1-max

Export to Edge TPU

To use the Edge TPU, you need to convert your model into a compatible format. Because the Edge TPU compiler is not available on ARM, it is recommended that you run the export on Google Colab, on an x86_64 Linux machine, in the official Ultralytics Docker container, or on Ultralytics Platform. See Export Mode for the available arguments.

Exporting the model

from ultralytics import YOLO

# Load a model

model = YOLO("path/to/model.pt") # Load an official model or custom model

# Export the model

model.export(format="edgetpu")

yolo export model=path/to/model.pt format=edgetpu # Export an official model or custom model

The exported model will be saved in the <model_name>_saved_model/ folder with the name <model_name>_full_integer_quant_edgetpu.tflite. Make sure the file name ends with the _edgetpu.tflite suffix; otherwise, Ultralytics will not detect that you're using an Edge TPU model.
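The naming rule above can be checked before copying the file to the Raspberry Pi. This is a small sketch with hypothetical helper names; it assumes the `<model_name>_saved_model/` folder is created next to the original weights file, which you should confirm against your own export output.

```python
from pathlib import Path

EDGETPU_SUFFIX = "_edgetpu.tflite"


def expected_edgetpu_path(weights: str) -> Path:
    """Build the expected export path for a weights file such as 'runs/best.pt'."""
    weights_path = Path(weights)
    stem = weights_path.stem  # '<model_name>' without the .pt extension
    # Assumption: the saved_model folder sits next to the weights file.
    return weights_path.parent / f"{stem}_saved_model" / f"{stem}_full_integer_quant_edgetpu.tflite"


def is_edgetpu_model(path) -> bool:
    """True if the file name carries the suffix that marks an Edge TPU model."""
    return str(path).endswith(EDGETPU_SUFFIX)


exported = expected_edgetpu_path("path/to/model.pt")
print(exported, is_edgetpu_model(exported))
```

If you rename the exported file, keeping the `_edgetpu.tflite` suffix intact preserves the detection described above.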

Running the model

Before you can actually run the model, you will need to install the correct libraries.

If you already have TensorFlow installed, uninstall it with the following command:

pip uninstall tensorflow tensorflow-aarch64

Then install or update tflite-runtime:

pip install -U tflite-runtime
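Before running a model, it can help to check that `tflite-runtime` imports and that the Edge TPU delegate loads. The sketch below uses `load_delegate` from `tflite_runtime.interpreter`; the shared library name `libedgetpu.so.1` is an assumption about what the runtime package installs on Linux, and the function name is hypothetical.

```python
import importlib.util


def edgetpu_delegate_available(library: str = "libedgetpu.so.1") -> bool:
    """Return True if tflite-runtime is installed and the Edge TPU delegate loads."""
    if importlib.util.find_spec("tflite_runtime") is None:
        return False  # tflite-runtime is not installed in this environment
    try:
        from tflite_runtime.interpreter import load_delegate

        load_delegate(library)  # fails if the library or the device is missing
    except (ImportError, ValueError, OSError):
        return False
    return True


print("Edge TPU delegate available:", edgetpu_delegate_available())
```

A result of `False` does not say which part failed; checking the runtime installation and the USB connection separately narrows it down.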

Now you can run inference using the following code:


from ultralytics import YOLO

# Load a model

model = YOLO("path/to/<model_name>_full_integer_quant_edgetpu.tflite") # Load an official model or custom model

# Run Prediction

model.predict("path/to/source.png")

yolo predict model=path/to/MODEL_NAME_full_integer_quant_edgetpu.tflite source=path/to/source.png # Load an official model or custom model

See the Predict page for full details on prediction mode.
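To see how long inference takes on your own hardware, you can read the per-image timings that Ultralytics attaches to each result. The sketch below assumes each result exposes a `speed` dictionary with `preprocess`, `inference`, and `postprocess` entries in milliseconds; the helper `mean_inference_ms` is hypothetical and only averages the `inference` entry.

```python
from statistics import mean


def mean_inference_ms(speeds) -> float:
    """Average the 'inference' entry over a list of per-image speed dictionaries."""
    return mean(speed["inference"] for speed in speeds)


# Usage on the Raspberry Pi (left commented out because it needs the model and the TPU):
# from ultralytics import YOLO
# model = YOLO("path/to/<model_name>_full_integer_quant_edgetpu.tflite")
# results = model.predict(["path/to/a.png", "path/to/b.png"])
# print(mean_inference_ms([result.speed for result in results]))

# Stand-in values that only illustrate the expected data shape:
example = [
    {"preprocess": 2.0, "inference": 30.0, "postprocess": 1.0},
    {"preprocess": 2.5, "inference": 34.0, "postprocess": 1.5},
]
print(mean_inference_ms(example))
```

The first prediction usually includes one-time setup, so discarding it before averaging may give a more representative figure.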

Inference with multiple Edge TPUs

If you have multiple Edge TPUs, you can use the following code to select a specific TPU.

from ultralytics import YOLO

# Load a model

model = YOLO("path/to/<model_name>_full_integer_quant_edgetpu.tflite") # Load an official model or custom model

# Run Prediction

model.predict("path/to/source.png") # Inference defaults to the first TPU

model.predict("path/to/source.png", device="tpu:0") # Select the first TPU

model.predict("path/to/source.png", device="tpu:1") # Select the second TPU

Benchmarks


Tested with Raspberry Pi OS Bookworm 64-bit and a USB Coral Edge TPU.

Note

The times shown are inference only; pre- and postprocessing are not included.

Raspberry Pi 4B

| Image Size | Model | Standard Inference Time (ms) | High-Frequency Inference Time (ms) |
|---|---|---|---|
| 320 | YOLOv8n | 32.2 | 26.7 |
| 320 | YOLOv8s | 47.1 | 39.8 |
| 512 | YOLOv8n | 73.5 | 60.7 |
| 512 | YOLOv8s | 149.6 | 125.3 |

Raspberry Pi 5

| Image Size | Model | Standard Inference Time (ms) | High-Frequency Inference Time (ms) |
|---|---|---|---|
| 320 | YOLOv8n | 22.2 | 16.7 |
| 320 | YOLOv8s | 40.1 | 32.2 |
| 512 | YOLOv8n | 53.5 | 41.6 |
| 512 | YOLOv8s | 132.0 | 103.3 |

On average:

- In standard mode, the Raspberry Pi 5 is 22% faster than the Raspberry Pi 4B.

- In high-frequency mode, the Raspberry Pi 5 is 30.2% faster than the Raspberry Pi 4B.

- High-frequency mode is 28.4% faster than standard mode.
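Averages like these can be recomputed from the benchmark tables. The sketch below takes the first table as the Raspberry Pi 4B and the second (faster) table as the Raspberry Pi 5, and uses one possible definition: the mean per-row reduction in inference time relative to the baseline. The method behind the figures quoted above is not stated, and other definitions (for example, the ratio of times) give different percentages, so the output need not match them exactly.

```python
# Inference times in ms, copied row by row from the two benchmark tables.
# Row order: 320 YOLOv8n, 320 YOLOv8s, 512 YOLOv8n, 512 YOLOv8s.
PI4_STANDARD = [32.2, 47.1, 73.5, 149.6]
PI4_HIGH_FREQ = [26.7, 39.8, 60.7, 125.3]
PI5_STANDARD = [22.2, 40.1, 53.5, 132.0]
PI5_HIGH_FREQ = [16.7, 32.2, 41.6, 103.3]


def mean_time_reduction(baseline, improved) -> float:
    """Mean per-row reduction in inference time, as a fraction of the baseline."""
    reductions = [1 - fast / slow for slow, fast in zip(baseline, improved)]
    return sum(reductions) / len(reductions)


pi5_vs_pi4_standard = mean_time_reduction(PI4_STANDARD, PI5_STANDARD)
pi5_vs_pi4_high_freq = mean_time_reduction(PI4_HIGH_FREQ, PI5_HIGH_FREQ)
high_freq_vs_standard = mean_time_reduction(
    PI4_STANDARD + PI5_STANDARD, PI4_HIGH_FREQ + PI5_HIGH_FREQ
)

print(f"Pi 5 vs Pi 4B, standard mode:       {pi5_vs_pi4_standard:.1%}")
print(f"Pi 5 vs Pi 4B, high-frequency mode: {pi5_vs_pi4_high_freq:.1%}")
print(f"High-frequency vs standard mode:    {high_freq_vs_standard:.1%}")
```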

FAQ

What is a Coral Edge TPU and how does it enhance Raspberry Pi's performance with Ultralytics YOLO26?

The Coral Edge TPU is a compact device designed to add an Edge TPU coprocessor to your system. This coprocessor enables low-power, high-performance machine learning inference, particularly optimized for TensorFlow Lite models. When using a Raspberry Pi, the Edge TPU accelerates ML model inference, significantly boosting performance, especially for Ultralytics YOLO26 models. You can read more about the Coral Edge TPU on their home page.

How do I install the Coral Edge TPU runtime on a Raspberry Pi?

To install the Coral Edge TPU runtime on your Raspberry Pi, download the appropriate .deb package for your Raspberry Pi OS version from this link. Once downloaded, use the following command to install it:

sudo dpkg -i path/to/package.deb

Make sure to uninstall any previous Coral Edge TPU runtime versions by following the steps outlined in the Installation Walkthrough section.

Can I export my Ultralytics YOLO26 model to be compatible with Coral Edge TPU?

Yes, you can export your Ultralytics YOLO26 model to be compatible with the Coral Edge TPU. It is recommended to perform the export on Google Colab, an x86_64 Linux machine, or using the Ultralytics Docker container. You can also use Ultralytics Platform for exporting. Here is how you can export your model using Python and CLI:

Exporting the model

from ultralytics import YOLO

# Load a model

model = YOLO("path/to/model.pt") # Load an official model or custom model

# Export the model

model.export(format="edgetpu")

yolo export model=path/to/model.pt format=edgetpu # Export an official model or custom model

For more information, refer to the Export Mode documentation.

What should I do if TensorFlow is already installed on my Raspberry Pi, but I want to use tflite-runtime instead?

If you have TensorFlow installed on your Raspberry Pi and need to switch to tflite-runtime, you'll need to uninstall TensorFlow first using:

pip uninstall tensorflow tensorflow-aarch64

Then, install or update tflite-runtime with the following command:

pip install -U tflite-runtime

For detailed instructions, refer to the Running the Model section.

How do I run inference with an exported YOLO26 model on a Raspberry Pi using the Coral Edge TPU?

After exporting your YOLO26 model to an Edge TPU-compatible format, you can run inference using the following code snippets:

Running the model

from ultralytics import YOLO

# Load a model

model = YOLO("path/to/edgetpu_model.tflite") # Load an official model or custom model

# Run Prediction

model.predict("path/to/source.png")

yolo predict model=path/to/edgetpu_model.tflite source=path/to/source.png # Load an official model or custom model

Comprehensive details on full prediction mode features can be found on the Predict Page.