Dog-Pose Dataset

Introduction

The Ultralytics Dog-Pose dataset is a high-quality and extensive dataset specifically curated for dog keypoint estimation. With 6,773 training images and 1,703 test images, this dataset provides a solid foundation for training robust pose estimation models.


Each annotated image includes 24 keypoints with 3 dimensions per keypoint (x, y, visibility), making it a valuable resource for advanced research and development in computer vision.

This dataset is intended for use with Ultralytics Platform and YOLO26.

Dataset Structure

- Split: 6,773 train / 1,703 test images with matching YOLO-format label files.

- Keypoints: 24 per dog with (x, y, visibility) triplets.

- Layout:

datasets/dog-pose/
├── images/{train,test}
└── labels/{train,test}
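As a rough illustration of what one line in a label file might hold, the sketch below parses a single pose annotation. It assumes the usual Ultralytics pose layout of a class index, four normalized box values (x_center, y_center, width, height), and then 24 (x, y, visibility) triplets; this section only says the labels are YOLO-format, so treat the exact field order as an assumption. The names `PoseLabel` and `parse_pose_label` are hypothetical helpers, not part of the Ultralytics API.

```python
from typing import List, NamedTuple, Tuple

NUM_KEYPOINTS = 24      # from kpt_shape: [24, 3]
DIMS_PER_KEYPOINT = 3   # x, y, visibility


class PoseLabel(NamedTuple):
    """Hypothetical container for one annotated dog."""
    class_id: int
    box: Tuple[float, ...]                 # x_center, y_center, width, height
    keypoints: List[Tuple[float, ...]]     # one (x, y, visibility) per keypoint


def parse_pose_label(line: str) -> PoseLabel:
    """Parse one label line, assuming: class, 4 box values, 24 keypoint triplets."""
    values = line.split()
    expected = 1 + 4 + NUM_KEYPOINTS * DIMS_PER_KEYPOINT  # 77 fields in total
    if len(values) != expected:
        raise ValueError(f"expected {expected} fields, got {len(values)}")
    class_id = int(values[0])
    numbers = [float(v) for v in values[1:]]
    box = tuple(numbers[:4])
    flat = numbers[4:]
    # Group the remaining values into consecutive (x, y, visibility) triplets.
    keypoints = [
        tuple(flat[i:i + DIMS_PER_KEYPOINT])
        for i in range(0, len(flat), DIMS_PER_KEYPOINT)
    ]
    return PoseLabel(class_id, box, keypoints)


# Synthetic line for illustration only (not taken from the dataset).
example = "0 0.5 0.5 0.4 0.6 " + " ".join(["0.5 0.5 2"] * NUM_KEYPOINTS)
label = parse_pose_label(example)
print(label.class_id, len(label.keypoints))  # 0 24
```

The length check is there because a line with a different field count usually means the label does not match the 24-keypoint, 3-dimension shape the dataset declares.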

Dataset YAML

A YAML (Yet Another Markup Language) file defines the dataset configuration, including paths, keypoint details, and other relevant information. For the Dog-pose dataset, the dog-pose.yaml file is available at https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/dog-pose.yaml.

ultralytics/cfg/datasets/dog-pose.yaml

# Ultralytics 🚀 AGPL-3.0 License - https://ultralytics.com/license

# Dogs dataset http://vision.stanford.edu/aditya86/ImageNetDogs/ by Stanford
# Documentation: https://docs.ultralytics.com/datasets/pose/dog-pose/
# Example usage: yolo train data=dog-pose.yaml
# parent
# ├── ultralytics
# └── datasets
#     └── dog-pose ← downloads here (337 MB)

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]
path: dog-pose # dataset root dir
train: images/train # train images (relative to 'path') 6773 images
val: images/val # val images (relative to 'path') 1703 images

# Keypoints
kpt_shape: [24, 3] # number of keypoints, number of dims (2 for x,y or 3 for x,y,visible)

# Classes
names:
  0: dog

# Keypoint names per class
kpt_names:
  0:
    - front_left_paw
    - front_left_knee
    - front_left_elbow
    - rear_left_paw
    - rear_left_knee
    - rear_left_elbow
    - front_right_paw
    - front_right_knee
    - front_right_elbow
    - rear_right_paw
    - rear_right_knee
    - rear_right_elbow
    - tail_start
    - tail_end
    - left_ear_base
    - right_ear_base
    - nose
    - chin
    - left_ear_tip
    - right_ear_tip
    - left_eye
    - right_eye
    - withers
    - throat

# Download script/URL (optional)
download: https://github.com/ultralytics/assets/releases/download/v0.0.0/dog-pose.zip
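The order of `kpt_names` in the YAML determines which index each keypoint occupies, so a name-to-index lookup can make downstream code easier to read. The following is a minimal sketch that copies the names from the file above into a Python list; `KPT_INDEX` and `select_keypoints` are hypothetical helpers introduced here for illustration, not Ultralytics functions.

```python
# Keypoint names copied in order from kpt_names in dog-pose.yaml.
KPT_NAMES = [
    "front_left_paw", "front_left_knee", "front_left_elbow",
    "rear_left_paw", "rear_left_knee", "rear_left_elbow",
    "front_right_paw", "front_right_knee", "front_right_elbow",
    "rear_right_paw", "rear_right_knee", "rear_right_elbow",
    "tail_start", "tail_end",
    "left_ear_base", "right_ear_base",
    "nose", "chin",
    "left_ear_tip", "right_ear_tip",
    "left_eye", "right_eye",
    "withers", "throat",
]
KPT_SHAPE = (24, 3)  # matches kpt_shape in the YAML

# Sanity check: the number of names should equal the declared keypoint count.
assert len(KPT_NAMES) == KPT_SHAPE[0]

# Hypothetical lookup table from keypoint name to its position in the list.
KPT_INDEX = {name: i for i, name in enumerate(KPT_NAMES)}


def select_keypoints(keypoints, names):
    """Return the entries of `keypoints` that correspond to the given names."""
    return [keypoints[KPT_INDEX[name]] for name in names]


print(KPT_INDEX["nose"])  # 16
```

For example, `select_keypoints(keypoints, ["left_eye", "right_eye", "nose"])` would pull out the facial keypoints from a 24-element list without hard-coding their indices.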

Usage

To train a YOLO26n-pose model on the Dog-pose dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model Training page.

Train Example

Python

from ultralytics import YOLO

# Load a model
model = YOLO("yolo26n-pose.pt")  # load a pretrained model (recommended for training)

# Train the model
results = model.train(data="dog-pose.yaml", epochs=100, imgsz=640)

CLI

# Start training from a pretrained *.pt model
yolo pose train data=dog-pose.yaml model=yolo26n-pose.pt epochs=100 imgsz=640

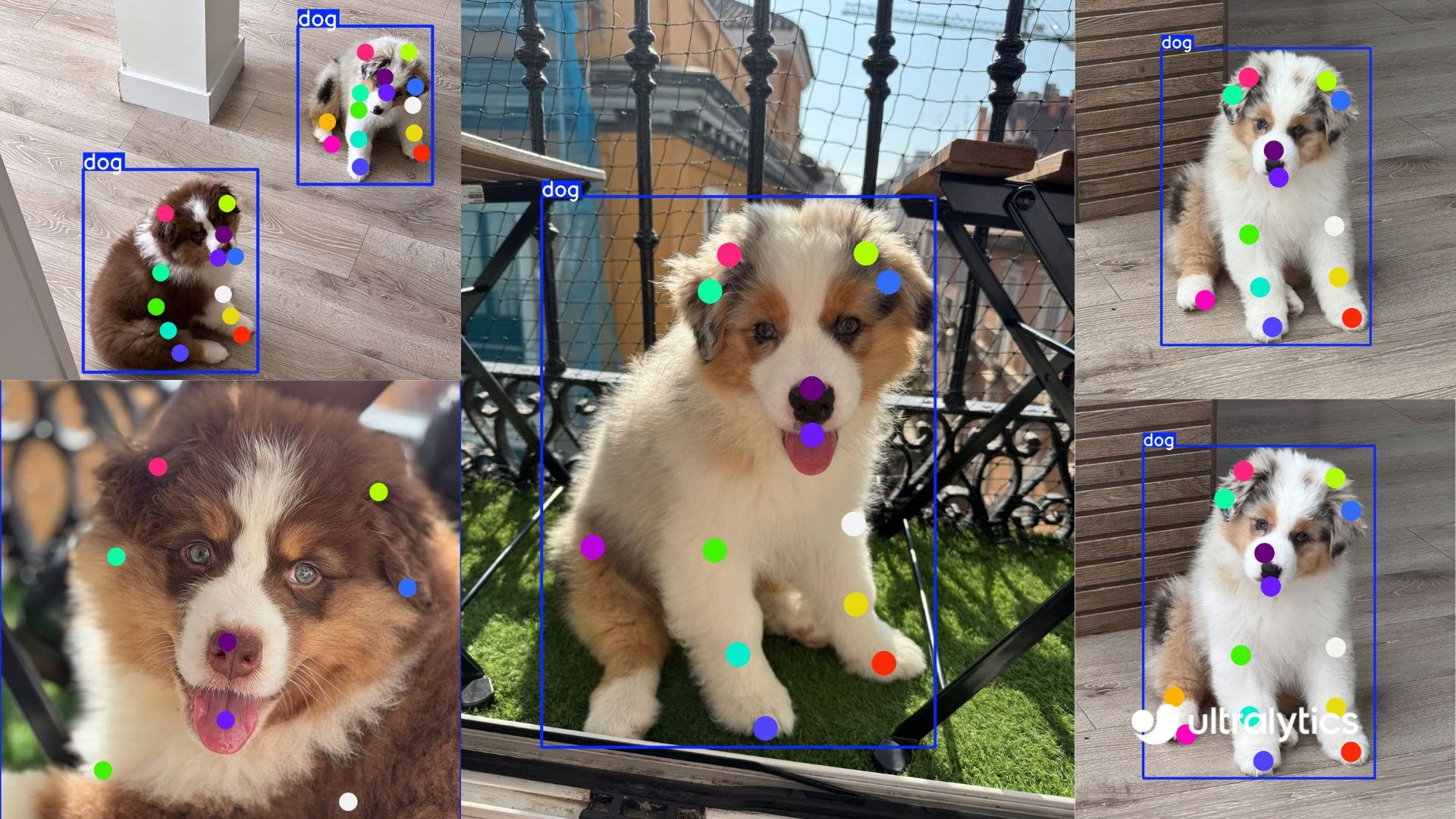

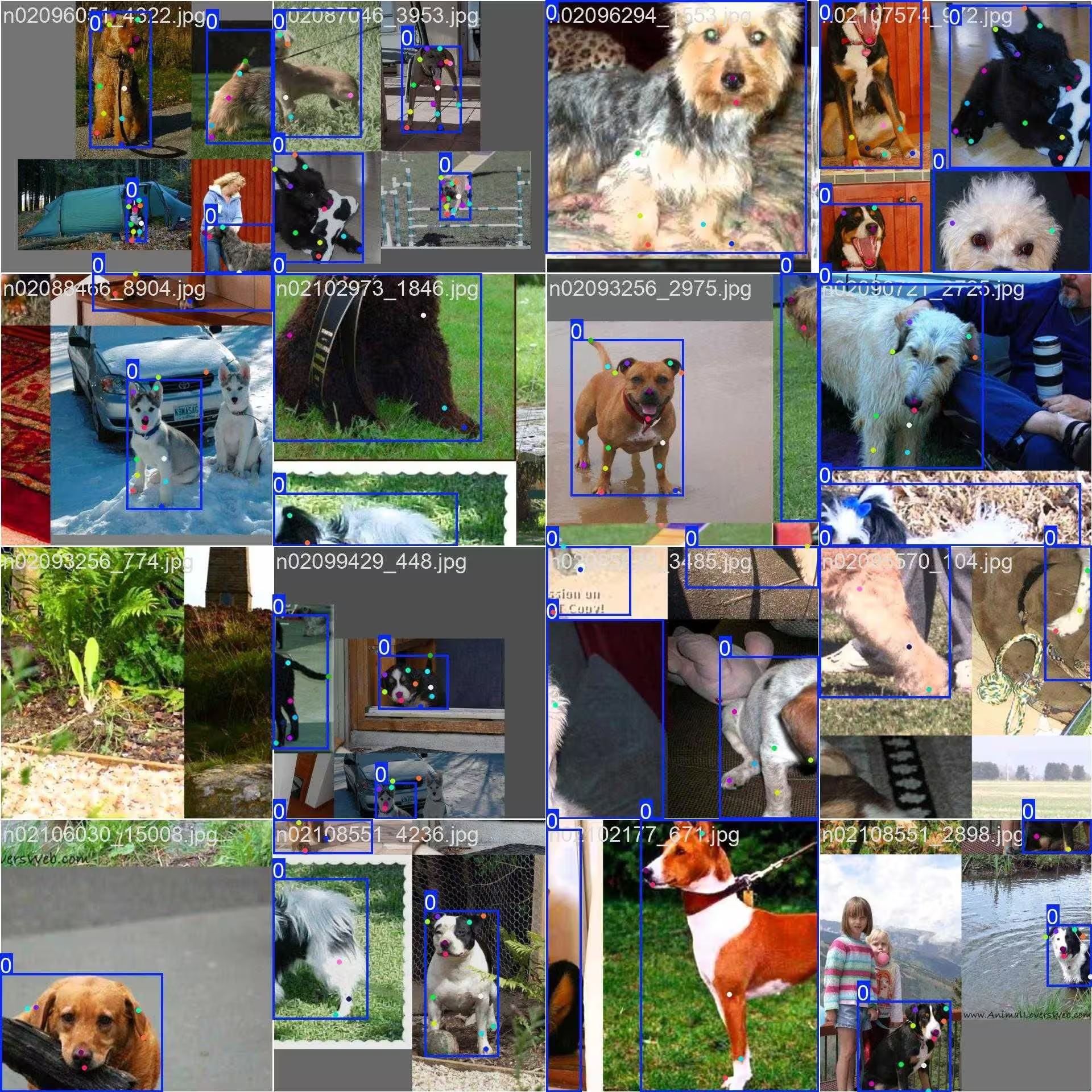

Sample Images and Annotations

Here are some examples of images from the Dog-pose dataset, along with their corresponding annotations:

- Mosaiced Image: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.

The example showcases the variety and complexity of the images in the Dog-pose dataset and the benefits of using mosaicing during the training process.
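To make the effect of mosaicing on annotations concrete, the sketch below remaps normalized keypoints from one source image into a 2x2 composite. It is a deliberately simplified model that assumes four equally sized tiles; real mosaic augmentation typically also randomizes the mosaic center and crops the result, and this is not the Ultralytics implementation. The function name `remap_to_mosaic` is hypothetical.

```python
from typing import List, Tuple

# (x, y, visibility), with x and y normalized to [0, 1] within the source image.
Point = Tuple[float, float, float]


def remap_to_mosaic(keypoints: List[Point], tile: int) -> List[Point]:
    """Map keypoints from one source image into a simplified 2x2 mosaic.

    tile: 0 = top-left, 1 = top-right, 2 = bottom-left, 3 = bottom-right.
    Assumes equally sized tiles with no random center and no cropping.
    """
    if tile not in (0, 1, 2, 3):
        raise ValueError("tile must be 0, 1, 2, or 3")
    row, col = divmod(tile, 2)
    # Each tile covers half the composite in both directions, so coordinates
    # are scaled by 0.5 and shifted by the tile's offset. Visibility is unchanged.
    return [(0.5 * x + 0.5 * col, 0.5 * y + 0.5 * row, v) for x, y, v in keypoints]


# A keypoint at the center of the bottom-right source image.
print(remap_to_mosaic([(0.5, 0.5, 2.0)], tile=3))  # [(0.75, 0.75, 2.0)]
```

The same scale-and-shift applies to bounding boxes, which is why each dog appears smaller and in a new context within the composite.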

Citations and Acknowledgments

If you use the Dog-pose dataset in your research or development work, please cite the following papers:

@inproceedings{khosla2011fgvc,
  title={Novel dataset for Fine-Grained Image Categorization},
  author={Aditya Khosla and Nityananda Jayadevaprakash and Bangpeng Yao and Li Fei-Fei},
  booktitle={First Workshop on Fine-Grained Visual Categorization (FGVC), IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2011}
}

@inproceedings{deng2009imagenet,
  title={ImageNet: A Large-Scale Hierarchical Image Database},
  author={Jia Deng and Wei Dong and Richard Socher and Li-Jia Li and Kai Li and Li Fei-Fei},
  booktitle={IEEE Computer Vision and Pattern Recognition (CVPR)},
  year={2009}
}

We would like to acknowledge the Stanford team for creating and maintaining this valuable resource for the computer vision community. For more information about the Dog-pose dataset and its creators, visit the Stanford Dogs Dataset website.

FAQ

What is the Dog-pose dataset, and how is it used with Ultralytics YOLO26?

The Dog-Pose dataset features 6,773 training and 1,703 test images annotated with 24 keypoints for dog pose estimation. It's designed for training and validating models with Ultralytics YOLO26, supporting applications like animal behavior analysis, pet monitoring, and veterinary studies. The dataset's comprehensive annotations make it ideal for developing accurate pose estimation models for canines.

How do I train a YOLO26 model using the Dog-pose dataset in Ultralytics?

To train a YOLO26n-pose model on the Dog-pose dataset for 100 epochs with an image size of 640, follow these examples:

Train Example

Python

from ultralytics import YOLO

# Load a model
model = YOLO("yolo26n-pose.pt")

# Train the model
results = model.train(data="dog-pose.yaml", epochs=100, imgsz=640)

CLI

yolo pose train data=dog-pose.yaml model=yolo26n-pose.pt epochs=100 imgsz=640

For a comprehensive list of training arguments, refer to the model Training page.

What are the benefits of using the Dog-pose dataset?

The Dog-pose dataset offers several benefits:

- Large and Diverse Dataset: With over 8,400 images, it provides substantial data covering a wide range of dog poses, breeds, and contexts, enabling robust model training and evaluation.

- Detailed Keypoint Annotations: Each image includes 24 keypoints with 3 dimensions per keypoint (x, y, visibility), offering precise annotations for training accurate pose detection models.

- Real-World Scenarios: Includes images from varied environments, enhancing the model's ability to generalize to real-world applications like pet monitoring and behavior analysis.

- Transfer Learning Advantage: The dataset works well with transfer learning techniques, allowing models pretrained on human pose datasets to adapt to dog-specific features.

For more about its features and usage, see the Dataset Introduction section.

How does mosaicing benefit the YOLO26 training process using the Dog-pose dataset?

Mosaicing, as illustrated in the sample images from the Dog-pose dataset, merges multiple images into a single composite, enriching the diversity of objects and scenes in each training batch. This technique offers several benefits:

- Increases the variety of dog poses, sizes, and backgrounds in each batch

- Improves the model's ability to detect dogs in different contexts and scales

- Enhances generalization by exposing the model to more diverse visual patterns

- Reduces overfitting by creating novel combinations of training examples

This approach leads to more robust models that perform better in real-world scenarios. For example images, refer to the Sample Images and Annotations section.

Where can I find the Dog-pose dataset YAML file and how do I use it?

The Dog-pose dataset YAML file can be found at https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/dog-pose.yaml. This file defines the dataset configuration, including paths, classes, keypoint details, and other relevant information. The YAML specifies 24 keypoints with 3 dimensions per keypoint, making it suitable for detailed pose estimation tasks.

To use this file with YOLO26 training scripts, simply reference it in your training command as shown in the Usage section. The dataset will be automatically downloaded when first used, making setup straightforward.

For more FAQs and detailed documentation, visit the Ultralytics Documentation.