YOLO26 모델 및 배포를 위한 MNN 내보내기

MNN

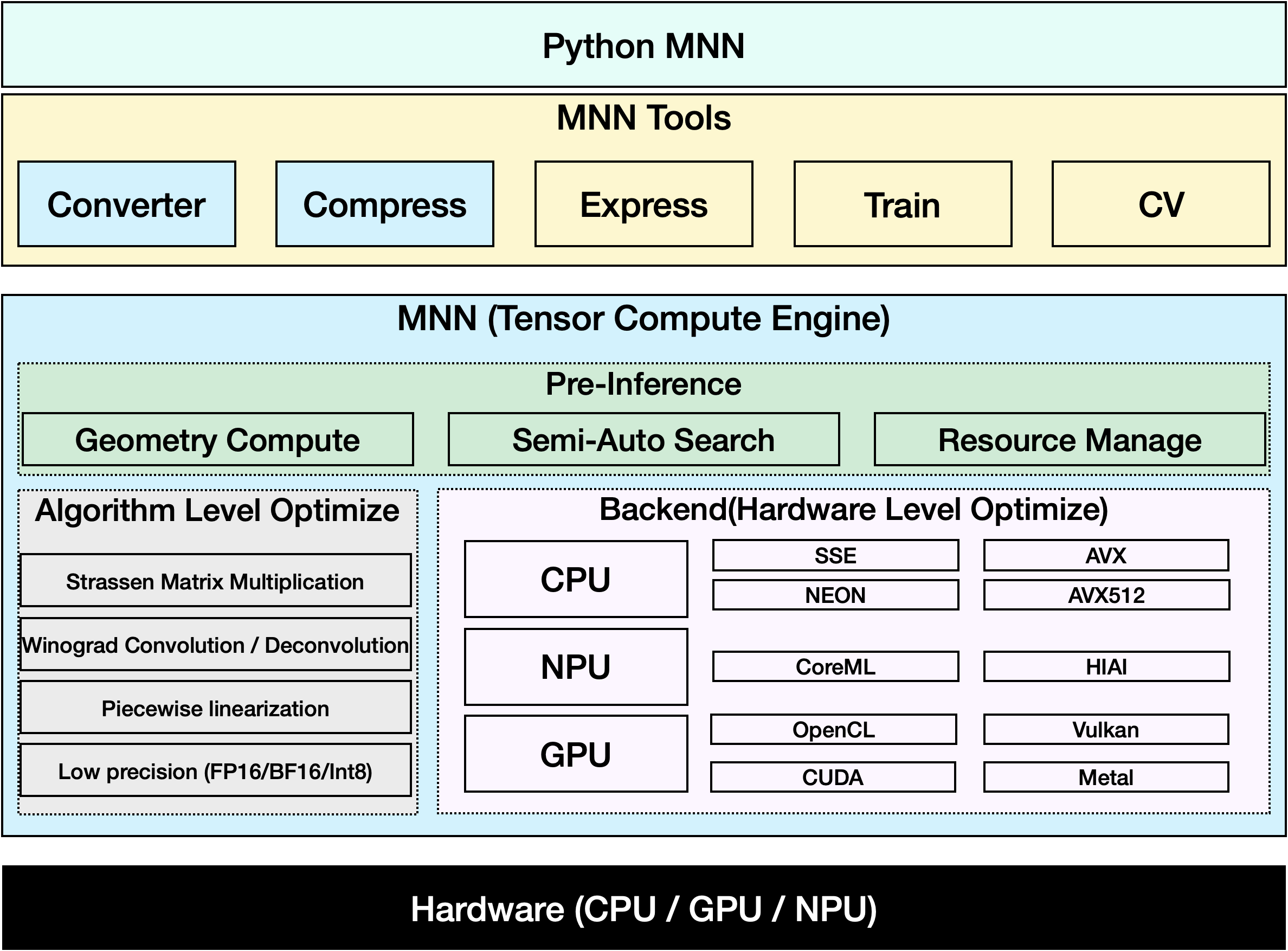

MNN은 매우 효율적이고 가벼운 딥러닝 프레임워크입니다. 딥러닝 모델의 추론 및 훈련을 지원하며 장치에서의 추론 및 훈련에 대해 업계 최고의 성능을 제공합니다. 현재 MNN은 Taobao, Tmall, Youku, DingTalk, Xianyu 등 Alibaba Inc.의 30개 이상의 앱에 통합되어 라이브 방송, 짧은 비디오 캡처, 검색 추천, 이미지로 제품 검색, 인터랙티브 마케팅, 지분 분배, 보안 위험 제어와 같은 70개 이상의 사용 시나리오를 다루고 있습니다. 또한 MNN은 IoT와 같은 임베디드 장치에서도 사용됩니다.

참고: Ultralytics YOLO26을 MNN 형식으로 내보내는 방법 | 모바일 기기에서 추론 속도 향상📱

MNN으로 내보내기: YOLO26 모델 변환

Ultralytics YOLO 모델을 MNN 형식으로 변환하여 모델 호환성 및 배포 유연성을 확장할 수 있습니다. 이 변환은 모바일 및 임베디드 환경에 맞게 모델을 최적화하여 리소스가 제한된 장치에서 효율적인 성능을 보장합니다.

설치

필수 패키지를 설치하려면 다음을 실행합니다:

설치

# Install the required package for YOLO26 and MNN

pip install ultralytics

pip install MNN

사용법

모든 Ultralytics YOLO26 모델은 기본적으로 내보내기를 지원하도록 설계되어 선호하는 배포 워크플로에 쉽게 통합할 수 있습니다. 애플리케이션에 가장 적합한 설정을 선택하려면 지원되는 내보내기 형식 및 구성 옵션의 전체 목록을 볼 수 있습니다.

사용법

from ultralytics import YOLO

# Load the YOLO26 model

model = YOLO("yolo26n.pt")

# Export the model to MNN format

model.export(format="mnn") # creates 'yolo26n.mnn'

# Load the exported MNN model

mnn_model = YOLO("yolo26n.mnn")

# Run inference

results = mnn_model("https://ultralytics.com/images/bus.jpg")

# Export a YOLO26n PyTorch model to MNN format

yolo export model=yolo26n.pt format=mnn # creates 'yolo26n.mnn'

# Run inference with the exported model

yolo predict model='yolo26n.mnn' source='https://ultralytics.com/images/bus.jpg'

인수 내보내기

| 인수 | 유형 | 기본값 | 설명 |

|---|---|---|---|

format | str | 'mnn' | 다양한 배포 환경과의 호환성을 정의하는 내보낸 모델의 대상 형식입니다. |

imgsz | int 또는 tuple | 640 | 모델 입력에 대한 원하는 이미지 크기입니다. 정사각형 이미지의 경우 정수이거나 튜플일 수 있습니다. (height, width) 특정 크기의 경우. |

half | bool | False | FP16(반정밀도) 양자화를 활성화하여 모델 크기를 줄이고 지원되는 하드웨어에서 추론 속도를 높일 수 있습니다. |

int8 | bool | False | INT8 양자화를 활성화하여 모델을 더욱 압축하고 정확도 손실을 최소화하면서 추론 속도를 높입니다(주로 에지 장치용). |

batch | int | 1 | 내보내기 모델 배치 추론 크기 또는 내보내기 모델이 동시에 처리할 이미지의 최대 수를 지정합니다. predict mode. |

device | str | None | 내보내기할 장치를 지정합니다: GPU (device=0), CPU (device=cpu), Apple Silicon용 MPS (device=mps)입니다. |

내보내기 프로세스에 대한 자세한 내용은 내보내기에 대한 Ultralytics 문서 페이지를 참조하십시오.

MNN 전용 추론

YOLO26 추론 및 전처리를 전적으로 MNN에 의존하는 함수가 구현되었으며, 어떤 시나리오에서도 쉽게 배포할 수 있도록 python 및 C++ 버전을 모두 제공합니다.

MNN

import argparse

import MNN

import MNN.cv as cv2

import MNN.numpy as np

def inference(model, img, precision, backend, thread):

config = {}

config["precision"] = precision

config["backend"] = backend

config["numThread"] = thread

rt = MNN.nn.create_runtime_manager((config,))

# net = MNN.nn.load_module_from_file(model, ['images'], ['output0'], runtime_manager=rt)

net = MNN.nn.load_module_from_file(model, [], [], runtime_manager=rt)

original_image = cv2.imread(img)

ih, iw, _ = original_image.shape

length = max((ih, iw))

scale = length / 640

image = np.pad(original_image, [[0, length - ih], [0, length - iw], [0, 0]], "constant")

image = cv2.resize(

image, (640, 640), 0.0, 0.0, cv2.INTER_LINEAR, -1, [0.0, 0.0, 0.0], [1.0 / 255.0, 1.0 / 255.0, 1.0 / 255.0]

)

image = image[..., ::-1] # BGR to RGB

input_var = image[None]

input_var = MNN.expr.convert(input_var, MNN.expr.NC4HW4)

output_var = net.forward(input_var)

output_var = MNN.expr.convert(output_var, MNN.expr.NCHW)

output_var = output_var.squeeze()

# output_var shape: [84, 8400]; 84 means: [cx, cy, w, h, prob * 80]

cx = output_var[0]

cy = output_var[1]

w = output_var[2]

h = output_var[3]

probs = output_var[4:]

# [cx, cy, w, h] -> [y0, x0, y1, x1]

x0 = cx - w * 0.5

y0 = cy - h * 0.5

x1 = cx + w * 0.5

y1 = cy + h * 0.5

boxes = np.stack([x0, y0, x1, y1], axis=1)

# ensure ratio is within the valid range [0.0, 1.0]

boxes = np.clip(boxes, 0, 1)

# get max prob and idx

scores = np.max(probs, 0)

class_ids = np.argmax(probs, 0)

result_ids = MNN.expr.nms(boxes, scores, 100, 0.45, 0.25)

print(result_ids.shape)

# nms result box, score, ids

result_boxes = boxes[result_ids]

result_scores = scores[result_ids]

result_class_ids = class_ids[result_ids]

for i in range(len(result_boxes)):

x0, y0, x1, y1 = result_boxes[i].read_as_tuple()

y0 = int(y0 * scale)

y1 = int(y1 * scale)

x0 = int(x0 * scale)

x1 = int(x1 * scale)

# clamp to the original image size to handle cases where padding was applied

x1 = min(iw, x1)

y1 = min(ih, y1)

print(result_class_ids[i])

cv2.rectangle(original_image, (x0, y0), (x1, y1), (0, 0, 255), 2)

cv2.imwrite("res.jpg", original_image)

if __name__ == "__main__":

parser = argparse.ArgumentParser()

parser.add_argument("--model", type=str, required=True, help="the yolo26 model path")

parser.add_argument("--img", type=str, required=True, help="the input image path")

parser.add_argument("--precision", type=str, default="normal", help="inference precision: normal, low, high, lowBF")

parser.add_argument(

"--backend",

type=str,

default="CPU",

help="inference backend: CPU, OPENCL, OPENGL, NN, VULKAN, METAL, TRT, CUDA, HIAI",

)

parser.add_argument("--thread", type=int, default=4, help="inference using thread: int")

args = parser.parse_args()

inference(args.model, args.img, args.precision, args.backend, args.thread)

#include <stdio.h>

#include <MNN/ImageProcess.hpp>

#include <MNN/expr/Module.hpp>

#include <MNN/expr/Executor.hpp>

#include <MNN/expr/ExprCreator.hpp>

#include <MNN/expr/Executor.hpp>

#include <cv/cv.hpp>

using namespace MNN;

using namespace MNN::Express;

using namespace MNN::CV;

int main(int argc, const char* argv[]) {

if (argc < 3) {

MNN_PRINT("Usage: ./yolo26_demo.out model.mnn input.jpg [forwardType] [precision] [thread]\n");

return 0;

}

int thread = 4;

int precision = 0;

int forwardType = MNN_FORWARD_CPU;

if (argc >= 4) {

forwardType = atoi(argv[3]);

}

if (argc >= 5) {

precision = atoi(argv[4]);

}

if (argc >= 6) {

thread = atoi(argv[5]);

}

MNN::ScheduleConfig sConfig;

sConfig.type = static_cast<MNNForwardType>(forwardType);

sConfig.numThread = thread;

BackendConfig bConfig;

bConfig.precision = static_cast<BackendConfig::PrecisionMode>(precision);

sConfig.backendConfig = &bConfig;

std::shared_ptr<Executor::RuntimeManager> rtmgr = std::shared_ptr<Executor::RuntimeManager>(Executor::RuntimeManager::createRuntimeManager(sConfig));

if(rtmgr == nullptr) {

MNN_ERROR("Empty RuntimeManger\n");

return 0;

}

rtmgr->setCache(".cachefile");

std::shared_ptr<Module> net(Module::load(std::vector<std::string>{}, std::vector<std::string>{}, argv[1], rtmgr));

auto original_image = imread(argv[2]);

auto dims = original_image->getInfo()->dim;

int ih = dims[0];

int iw = dims[1];

int len = ih > iw ? ih : iw;

float scale = len / 640.0;

std::vector<int> padvals { 0, len - ih, 0, len - iw, 0, 0 };

auto pads = _Const(static_cast<void*>(padvals.data()), {3, 2}, NCHW, halide_type_of<int>());

auto image = _Pad(original_image, pads, CONSTANT);

image = resize(image, Size(640, 640), 0, 0, INTER_LINEAR, -1, {0., 0., 0.}, {1./255., 1./255., 1./255.});

image = cvtColor(image, COLOR_BGR2RGB);

auto input = _Unsqueeze(image, {0});

input = _Convert(input, NC4HW4);

auto outputs = net->onForward({input});

auto output = _Convert(outputs[0], NCHW);

output = _Squeeze(output);

// output shape: [84, 8400]; 84 means: [cx, cy, w, h, prob * 80]

auto cx = _Gather(output, _Scalar<int>(0));

auto cy = _Gather(output, _Scalar<int>(1));

auto w = _Gather(output, _Scalar<int>(2));

auto h = _Gather(output, _Scalar<int>(3));

std::vector<int> startvals { 4, 0 };

auto start = _Const(static_cast<void*>(startvals.data()), {2}, NCHW, halide_type_of<int>());

std::vector<int> sizevals { -1, -1 };

auto size = _Const(static_cast<void*>(sizevals.data()), {2}, NCHW, halide_type_of<int>());

auto probs = _Slice(output, start, size);

// [cx, cy, w, h] -> [y0, x0, y1, x1]

auto x0 = cx - w * _Const(0.5);

auto y0 = cy - h * _Const(0.5);

auto x1 = cx + w * _Const(0.5);

auto y1 = cy + h * _Const(0.5);

auto boxes = _Stack({x0, y0, x1, y1}, 1);

// ensure ratio is within the valid range [0.0, 1.0]

boxes = _Maximum(boxes, _Scalar<float>(0.0f));

boxes = _Minimum(boxes, _Scalar<float>(1.0f));

auto scores = _ReduceMax(probs, {0});

auto ids = _ArgMax(probs, 0);

auto result_ids = _Nms(boxes, scores, 100, 0.45, 0.25);

auto result_ptr = result_ids->readMap<int>();

auto box_ptr = boxes->readMap<float>();

auto ids_ptr = ids->readMap<int>();

auto score_ptr = scores->readMap<float>();

for (int i = 0; i < 100; i++) {

auto idx = result_ptr[i];

if (idx < 0) break;

auto x0 = box_ptr[idx * 4 + 0] * scale;

auto y0 = box_ptr[idx * 4 + 1] * scale;

auto x1 = box_ptr[idx * 4 + 2] * scale;

auto y1 = box_ptr[idx * 4 + 3] * scale;

// clamp to the original image size to handle cases where padding was applied

x1 = std::min(static_cast<float>(iw), x1);

y1 = std::min(static_cast<float>(ih), y1);

auto class_idx = ids_ptr[idx];

auto score = score_ptr[idx];

rectangle(original_image, {x0, y0}, {x1, y1}, {0, 0, 255}, 2);

}

if (imwrite("res.jpg", original_image)) {

MNN_PRINT("result image write to `res.jpg`.\n");

}

rtmgr->updateCache();

return 0;

}

요약

이 가이드에서는 Ultralytics YOLO26 모델을 MNN으로 내보내고 MNN을 추론에 사용하는 방법을 소개합니다. MNN 형식은 엣지 AI 애플리케이션에 탁월한 성능을 제공하여 리소스가 제한된 장치에 컴퓨터 비전 모델을 배포하는 데 이상적입니다.

자세한 사용법은 MNN 문서를 참조하세요.

FAQ

Ultralytics YOLO26 모델을 MNN 형식으로 내보내려면 어떻게 해야 합니까?

Ultralytics YOLO26 모델을 MNN 형식으로 내보내려면 다음 단계를 따르십시오:

내보내기

from ultralytics import YOLO

# Load the YOLO26 model

model = YOLO("yolo26n.pt")

# Export to MNN format

model.export(format="mnn") # creates 'yolo26n.mnn' with fp32 weight

model.export(format="mnn", half=True) # creates 'yolo26n.mnn' with fp16 weight

model.export(format="mnn", int8=True) # creates 'yolo26n.mnn' with int8 weight

yolo export model=yolo26n.pt format=mnn # creates 'yolo26n.mnn' with fp32 weight

yolo export model=yolo26n.pt format=mnn half=True # creates 'yolo26n.mnn' with fp16 weight

yolo export model=yolo26n.pt format=mnn int8=True # creates 'yolo26n.mnn' with int8 weight

자세한 내보내기 옵션은 설명서의 내보내기 페이지를 확인하십시오.

내보낸 YOLO26 MNN 모델로 예측하려면 어떻게 해야 합니까?

내보낸 YOLO26 MNN 모델로 예측하려면 다음을 사용하십시오. predict YOLO 클래스의 함수입니다.

예측

from ultralytics import YOLO

# Load the YOLO26 MNN model

model = YOLO("yolo26n.mnn")

# Export to MNN format

results = model("https://ultralytics.com/images/bus.jpg") # predict with `fp32`

results = model("https://ultralytics.com/images/bus.jpg", half=True) # predict with `fp16` if device support

for result in results:

result.show() # display to screen

result.save(filename="result.jpg") # save to disk

yolo predict model='yolo26n.mnn' source='https://ultralytics.com/images/bus.jpg' # predict with `fp32`

yolo predict model='yolo26n.mnn' source='https://ultralytics.com/images/bus.jpg' --half=True # predict with `fp16` if device support

MNN은 어떤 플랫폼을 지원합니까?

MNN은 다재다능하며 다양한 플랫폼을 지원합니다.

- 모바일: Android, iOS, Harmony.

- Embedded Systems and IoT Devices: Raspberry Pi 및 NVIDIA Jetson과 같은 장치.

- 데스크톱 및 서버: Linux, Windows 및 macOS.

모바일 기기에 Ultralytics YOLO26 MNN 모델을 배포하려면 어떻게 해야 합니까?

모바일 기기에 YOLO26 모델을 배포하려면:

- Android용 빌드: MNN Android 가이드를 따르십시오.

- iOS용 빌드: MNN iOS 가이드를 따르십시오.

- Harmony용 빌드: MNN Harmony 가이드를 따르십시오.