Computer Vision Tasks Supported by Ultralytics YOLO26

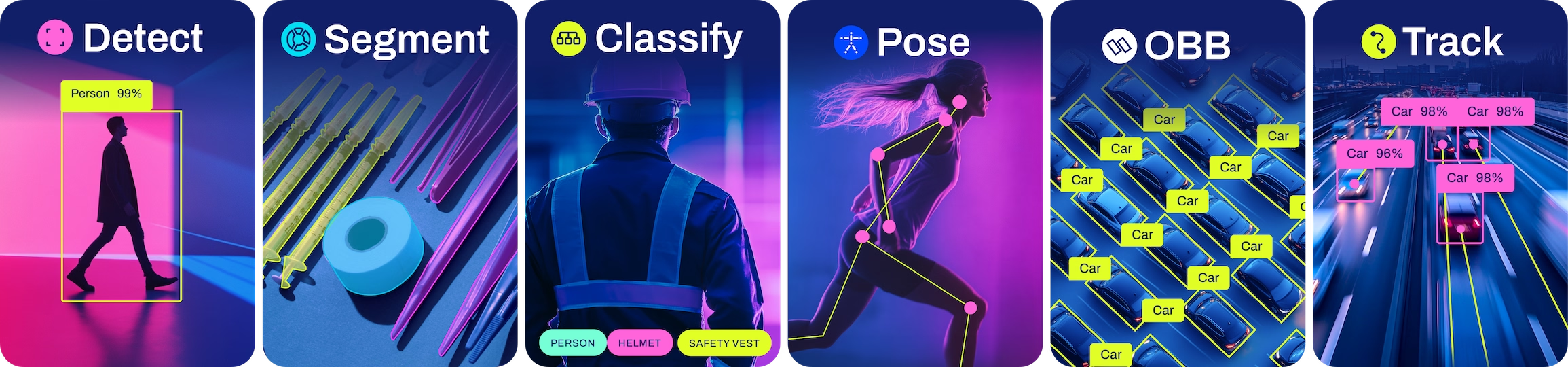

Ultralytics YOLO26 is a versatile AI framework that supports multiple computer visiontasks. The framework can be used to perform detection, segmentation, OBB, classification, and pose estimation. Each of these tasks has a different objective and use case, allowing you to address various computer vision challenges with a single framework.

Watch: Explore Ultralytics YOLO Tasks: Object Detection, Segmentation, OBB, Tracking, and Pose Estimation.

Detection

Detection is the primary task supported by YOLO26. It involves identifying objects in an image or video frame and drawing bounding boxes around them. The detected objects are classified into different categories based on their features. YOLO26 can detect multiple objects in a single image or video frame with high accuracy and speed, making it ideal for real-time applications like surveillance systems and autonomous vehicles.

Image segmentation

Segmentation takes object detection further by producing pixel-level masks for each object. This precision is useful for applications such as medical imaging, agricultural analysis, and manufacturing quality control.

Classification

Classification involves categorizing entire images based on their content. This task is essential for applications like product categorization in e-commerce, content moderation, and wildlife monitoring.

Pose estimation

Pose estimation detects specific keypoints in images or video frames to track movements or estimate poses. These keypoints can represent human joints, facial features, or other significant points of interest. YOLO26 excels at keypoint detection with high accuracy and speed, making it valuable for fitness applications, sports analytics, and human-computer interaction.

OBB

Oriented Bounding Box (OBB) detection enhances traditional object detection by adding an orientation angle to better locate rotated objects. This capability is particularly valuable for aerial imagery analysis, document processing, and industrial applications where objects appear at various angles. YOLO26 delivers high accuracy and speed for detecting rotated objects in diverse scenarios.

Conclusion

Ultralytics YOLO26 supports multiple computer vision tasks, including detection, segmentation, classification, oriented object detection, and keypoint detection. Each task addresses specific needs in the computer vision landscape, from basic object identification to detailed pose analysis. By understanding the capabilities and applications of each task, you can select the most appropriate approach for your specific computer vision challenges and leverage YOLO26's powerful features to build effective solutions.

FAQ

What computer vision tasks can Ultralytics YOLO26 perform?

Ultralytics YOLO26 is a versatile AI framework capable of performing various computer vision tasks with high accuracy and speed. These tasks include:

- Object Detection: Identifying and localizing objects in images or video frames by drawing bounding boxes around them.

- Image segmentation: Segmenting images into different regions based on their content, useful for applications like medical imaging.

- Classification: Categorizing entire images based on their content.

- Pose estimation: Detecting specific keypoints in an image or video frame to track movements or poses.

- Oriented Object Detection (OBB): Detecting rotated objects with an added orientation angle for enhanced accuracy.

How do I use Ultralytics YOLO26 for object detection?

To use Ultralytics YOLO26 for object detection, follow these steps:

- Prepare your dataset in the appropriate format.

- Train the YOLO26 model using the detection task.

- Use the model to make predictions by feeding in new images or video frames.

Example

from ultralytics import YOLO

# Load a pretrained YOLO model (adjust model type as needed)

model = YOLO("yolo26n.pt") # n, s, m, l, x versions available

# Perform object detection on an image

results = model.predict(source="image.jpg") # Can also use video, directory, URL, etc.

# Display the results

results[0].show() # Show the first image results

# Run YOLO detection from the command line

yolo detect model=yolo26n.pt source="image.jpg" # Adjust model and source as needed

For more detailed instructions, check out our detection examples.

What are the benefits of using YOLO26 for segmentation tasks?

Using YOLO26 for segmentation tasks provides several advantages:

- High Accuracy: The segmentation task provides precise, pixel-level masks.

- Speed: YOLO26 is optimized for real-time applications, offering quick processing even for high-resolution images.

- Multiple Applications: It is ideal for medical imaging, autonomous driving, and other applications requiring detailed image segmentation.

Learn more about the benefits and use cases of YOLO26 for segmentation in the image segmentation section.

Can Ultralytics YOLO26 handle pose estimation and keypoint detection?

Yes, Ultralytics YOLO26 can effectively perform pose estimation and keypoint detection with high accuracy and speed. This feature is particularly useful for tracking movements in sports analytics, healthcare, and human-computer interaction applications. YOLO26 detects keypoints in an image or video frame, allowing for precise pose estimation.

For more details and implementation tips, visit our pose estimation examples.

Why should I choose Ultralytics YOLO26 for oriented object detection (OBB)?

Oriented Object Detection (OBB) with YOLO26 provides enhanced precision by detecting objects with an additional angle parameter. This feature is beneficial for applications requiring accurate localization of rotated objects, such as aerial imagery analysis and warehouse automation.

- Increased Precision: The angle component reduces false positives for rotated objects.

- Versatile Applications: Useful for tasks in geospatial analysis, robotics, etc.

Check out the Oriented Object Detection section for more details and examples.