Ultralytics YOLO26 on NVIDIA Jetson using DeepStream SDK and TensorRT

Watch: How to run Ultralytics YOLO26 models with NVIDIA DeepStream on Jetson Orin NX 🚀

This guide walks through deploying Ultralytics YOLO26 on NVIDIA Jetson devices using DeepStream SDK and TensorRT. TensorRT is used here to maximize inference performance on the Jetson platform.

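Note

This guide has been tested on three setups: an NVIDIA Jetson Orin Nano Super Developer Kit running the latest stable JetPack release, JP6.1; a Seeed Studio reComputer J4012, based on NVIDIA Jetson Orin NX 16GB, running JetPack release JP5.1.3; and a Seeed Studio reComputer J1020 v2, based on NVIDIA Jetson Nano 4GB, running JetPack release JP4.6.4. It is expected to work across the whole NVIDIA Jetson hardware lineup, both latest and legacy.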

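What is NVIDIA DeepStream?

NVIDIA's DeepStream SDK is a complete streaming analytics toolkit based on GStreamer for AI-based multi-sensor processing and for video, audio, and image understanding. It is aimed at vision AI developers, software partners, startups, and OEMs building IVA (Intelligent Video Analytics) applications and services. With it you can build stream-processing pipelines that combine neural networks with other complex processing tasks such as tracking, video encoding/decoding, and video rendering. These pipelines enable real-time analytics on video, image, and sensor data. DeepStream's multi-platform support gives you a faster, easier way to develop vision AI applications and services on-premises, at the edge, and in the cloud.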

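Prerequisites

Before you start following this guide:

- Visit our documentation, Quick Start Guide: NVIDIA Jetson with Ultralytics YOLO26, to set up your NVIDIA Jetson device with Ultralytics YOLO26.

- Install DeepStream SDK according to your JetPack version:

  - For JetPack 4.6.4, install DeepStream 6.0.1

  - For JetPack 5.1.3, install DeepStream 6.3

  - For JetPack 6.1, install DeepStream 7.1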

Tip

In this guide we used the Debian package method to install DeepStream SDK on the Jetson device. You can also visit DeepStream SDK on Jetson (Archived) to access legacy versions of DeepStream.
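The JetPack-to-DeepStream pairings listed above, together with the CUDA_VER values used in the build step later in this guide, fit naturally in a small lookup table. The sketch below is one possible way to encode them in Python. The names `VERSION_MATRIX` and `lookup_versions` are hypothetical, and only the three JetPack releases tested in this guide are included.

```python
# Version pairings copied from this guide; other JetPack releases are not covered.
VERSION_MATRIX = {
    "4.6.4": {"deepstream": "6.0.1", "cuda_ver": "10.2"},
    "5.1.3": {"deepstream": "6.3", "cuda_ver": "11.4"},
    "6.1": {"deepstream": "7.1", "cuda_ver": "12.6"},
}


def lookup_versions(jetpack: str) -> dict:
    """Return the DeepStream and CUDA versions this guide pairs with a JetPack release."""
    try:
        return VERSION_MATRIX[jetpack]
    except KeyError:
        known = ", ".join(sorted(VERSION_MATRIX))
        raise ValueError(f"JetPack {jetpack} is not covered here (known: {known})") from None


if __name__ == "__main__":
    for jp in VERSION_MATRIX:
        v = lookup_versions(jp)
        print(f"JetPack {jp}: DeepStream {v['deepstream']}, CUDA_VER={v['cuda_ver']}")
```

Raising an error for unknown releases, rather than guessing a nearby version, keeps the table limited to combinations that were actually tested.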

DeepStream Configuration for YOLO26

Here we use the marcoslucianops/DeepStream-Yolo GitHub repository, which adds NVIDIA DeepStream SDK support for YOLO models. Thanks to marcoslucianops for the contribution!

Install Ultralytics with the necessary dependencies

    cd ~
    pip install -U pip
    git clone https://github.com/ultralytics/ultralytics
    cd ultralytics
    pip install -e ".[export]" onnxslim

Clone the DeepStream-Yolo repository

    cd ~
    git clone https://github.com/marcoslucianops/DeepStream-Yolo

Copy the export_yolo26.py file from the DeepStream-Yolo/utils directory to the ultralytics folder

    cp ~/DeepStream-Yolo/utils/export_yolo26.py ~/ultralytics
    cd ultralytics

Download the Ultralytics YOLO26 detection model (.pt) of your choice from the YOLO26 releases. Here we use yolo26s.pt.

    wget https://github.com/ultralytics/assets/releases/download/v8.4.0/yolo26s.pt

Note

You can also use a custom-trained YOLO26 model.

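Convert the model to ONNX

    python3 export_yolo26.py -w yolo26s.pt

Pass the following arguments to the above command as needed

For DeepStream 5.1, remove the --dynamic argument and use opset 12 or lower. The default opset is 17.

    --opset 12

To change the inference size (default: 640)

    -s SIZE
    --size SIZE
    -s HEIGHT WIDTH
    --size HEIGHT WIDTH

Example for 1280:

    -s 1280
    or
    -s 1280 1280

To simplify the ONNX model (DeepStream >= 6.0)

    --simplify

To use a dynamic batch size (DeepStream >= 6.1)

    --dynamic

To use a static batch size (example for batch size = 4)

    --batch 4

Copy the generated .onnx model file and the labels.txt file to the DeepStream-Yolo folder

    cp yolo26s.pt.onnx labels.txt ~/DeepStream-Yolo
    cd ~/DeepStream-Yolo

Set the CUDA version according to the installed JetPack version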

For JetPack 4.6.4:

    export CUDA_VER=10.2

For JetPack 5.1.3:

    export CUDA_VER=11.4

For JetPack 6.1:

    export CUDA_VER=12.6

Compile the library

    make -C nvdsinfer_custom_impl_Yolo clean && make -C nvdsinfer_custom_impl_Yolo

Edit the config_infer_primary_yolo26.txt file according to your model (here, YOLO26s with 80 classes)

    [property]
    ...
    onnx-file=yolo26s.pt.onnx
    ...
    num-detected-classes=80
    ...

Edit the deepstream_app_config file

    ...
    [primary-gie]
    ...
    config-file=config_infer_primary_yolo26.txt

You can also change the video source in the deepstream_app_config file. Here a default video file is loaded

    ...
    [source0]
    ...
    uri=file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.mp4
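The config edits in the steps above are plain key=value changes inside named sections, so they can be scripted when you switch models often. The following is a minimal sketch, assuming the files follow the `[section]` / `key=value` layout shown in this guide. `set_config_values` is a hypothetical helper, not part of DeepStream or DeepStream-Yolo. It works on the file's text so that untouched lines and comments are preserved.

```python
def set_config_values(text: str, section: str, updates: dict) -> str:
    """Return config text with key=value lines in [section] replaced or appended.

    A commented-out key such as "#key=value" counts as a match and is
    replaced with an active line. Other sections are left untouched.
    """
    out = []
    pending = dict(updates)  # keys that still have to be written
    in_section = False

    def flush():
        # Append any requested keys that were not found in the section.
        for key, value in pending.items():
            out.append(f"{key}={value}")
        pending.clear()

    for line in text.splitlines():
        stripped = line.strip()
        if stripped.startswith("[") and stripped.endswith("]"):
            if in_section:
                flush()
            in_section = stripped[1:-1] == section
            out.append(line)
            continue
        if in_section and "=" in stripped:
            key = stripped.lstrip("#").split("=", 1)[0].strip()
            if key in pending:
                out.append(f"{key}={pending.pop(key)}")
                continue
        out.append(line)
    if in_section:
        flush()
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    sample = "[property]\nonnx-file=model.onnx\nnum-detected-classes=1\n"
    print(set_config_values(sample, "property",
                            {"onnx-file": "yolo26s.pt.onnx", "num-detected-classes": "80"}))
```

To apply it to a real file, read the file into a string, pass it through the helper, and write the result back. Keeping a backup copy of the original config is a sensible precaution.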

Run inference

    deepstream-app -c deepstream_app_config.txt

Note

Generating the TensorRT engine file takes a long time before inference starts, so please be patient.

Tip

To convert the model to FP16 precision, set model-engine-file=model_b1_gpu0_fp16.engine and network-mode=2 inside config_infer_primary_yolo26.txt

INT8 Calibration

To use INT8 precision for inference, follow the steps below:

Note

Currently INT8 does not work with TensorRT 10.x. This section of the guide has been tested with TensorRT 8.x, where it is expected to work.

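Set the OPENCV environment variable

    export OPENCV=1

Compile the library

    make -C nvdsinfer_custom_impl_Yolo clean && make -C nvdsinfer_custom_impl_Yolo

For the COCO dataset, download val2017, extract it, and move it to the DeepStream-Yolo folder

Create a new directory for the calibration images

    mkdir calibration

Run the following to select 1000 random images from the COCO dataset for calibration

    for jpg in $(ls -1 val2017/*.jpg | sort -R | head -1000); do
      cp ${jpg} calibration/
    done

Note

NVIDIA recommends at least 500 images for good accuracy. In this example, 1000 images are chosen for better accuracy (more images = more accuracy). You can change the number in head -1000; for example, use head -2000 for 2000 images. This process can take a long time.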

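Create the calibration.txt file listing all selected images

    realpath calibration/*jpg > calibration.txt

Set the environment variables

    export INT8_CALIB_IMG_PATH=calibration.txt
    export INT8_CALIB_BATCH_SIZE=1

Note

Higher INT8_CALIB_BATCH_SIZE values give better accuracy and faster calibration. Set it according to your GPU memory.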
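The image selection and calibration.txt steps above can also be done in Python, which makes the random sample reproducible through a seed. This is a minimal sketch that mirrors the shell commands (pick random .jpg files, copy them into the calibration folder, list their absolute paths). `build_calibration_set` and its parameters are hypothetical names chosen for illustration.

```python
import random
import shutil
from pathlib import Path


def build_calibration_set(src_dir, dst_dir, list_path, count=1000, seed=None):
    """Copy up to `count` random .jpg files and write their absolute paths to a list file."""
    images = sorted(Path(src_dir).glob("*.jpg"))
    # Sample without replacement; fall back to all images if fewer than `count` exist.
    chosen = random.Random(seed).sample(images, min(count, len(images)))

    dst = Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    copied = [Path(shutil.copy(img, dst)) for img in chosen]

    # One absolute path per line, like the output of `realpath`.
    lines = sorted(str(p.resolve()) for p in copied)
    Path(list_path).write_text("\n".join(lines) + "\n")
    return lines


if __name__ == "__main__":
    paths = build_calibration_set("val2017", "calibration", "calibration.txt", count=1000, seed=0)
    print(f"Wrote {len(paths)} image paths to calibration.txt")
```

Passing a fixed `seed` selects the same images on every run, which helps when comparing calibration results across builds.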

Update the config_infer_primary_yolo26.txt file

From

    ...
    model-engine-file=model_b1_gpu0_fp32.engine
    #int8-calib-file=calib.table
    ...
    network-mode=0
    ...

To

    ...
    model-engine-file=model_b1_gpu0_int8.engine
    int8-calib-file=calib.table
    ...
    network-mode=1
    ...
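The precision-related settings used in this guide (network-mode 0 for FP32, 1 for INT8, 2 for FP16) can be summarized in one place. The sketch below is illustrative only. `precision_settings` is a hypothetical helper, and the engine file name pattern `model_b{batch}_gpu{gpu}_{precision}.engine` is an assumption generalized from the three file names that appear in this guide.

```python
# network-mode values as used in this guide: 0 = FP32, 1 = INT8, 2 = FP16.
NETWORK_MODE = {"fp32": 0, "int8": 1, "fp16": 2}


def precision_settings(precision: str, batch: int = 1, gpu: int = 0) -> dict:
    """Return the [property] keys to set for a given precision."""
    if precision not in NETWORK_MODE:
        raise ValueError(f"unknown precision: {precision!r}")
    settings = {
        # Assumed naming pattern; the defaults reproduce the names shown in this guide.
        "model-engine-file": f"model_b{batch}_gpu{gpu}_{precision}.engine",
        "network-mode": str(NETWORK_MODE[precision]),
    }
    if precision == "int8":
        # INT8 additionally needs the calibration table enabled.
        settings["int8-calib-file"] = "calib.table"
    return settings


if __name__ == "__main__":
    for name in NETWORK_MODE:
        print(name, precision_settings(name))
```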

Run inference

    deepstream-app -c deepstream_app_config.txt

Multi-Stream Setup

Watch: How to run multiple streams with DeepStream SDK on Jetson Nano using Ultralytics YOLO26 🎉

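To set up multiple streams under a single DeepStream application, make the following changes to the deepstream_app_config.txt file:

Change the rows and columns to build a grid display according to the number of streams you want. For example, for 4 streams, use 2 rows and 2 columns.

    [tiled-display]
    rows=2
    columns=2

Set num-sources=4 and add uri entries for all four streams.

    [source0]
    enable=1
    type=3
    uri=path/to/video1.jpg
    uri=path/to/video2.jpg
    uri=path/to/video3.jpg
    uri=path/to/video4.jpg
    num-sources=4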
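For stream counts other than 4, the rows and columns values have to be chosen so that the grid has at least one tile per stream. One simple rule, shown here as a sketch, is to make the grid as close to square as possible. `tiled_grid` is a hypothetical helper, and the near-square layout is an assumption about what looks reasonable, not a DeepStream requirement.

```python
import math


def tiled_grid(num_streams: int) -> tuple:
    """Return (rows, columns) for a near-square grid with room for every stream."""
    if num_streams < 1:
        raise ValueError("num_streams must be at least 1")
    # Integer ceil(sqrt(n)): smallest column count whose square covers n.
    columns = math.isqrt(num_streams - 1) + 1
    # Ceiling division: enough rows to hold all streams.
    rows = -(-num_streams // columns)
    return rows, columns


if __name__ == "__main__":
    for n in (1, 2, 4, 6, 9):
        rows, columns = tiled_grid(n)
        print(f"{n} streams: rows={rows} columns={columns}")
```

For 4 streams this gives rows=2 and columns=2, matching the example above.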

Run inference

    deepstream-app -c deepstream_app_config.txt

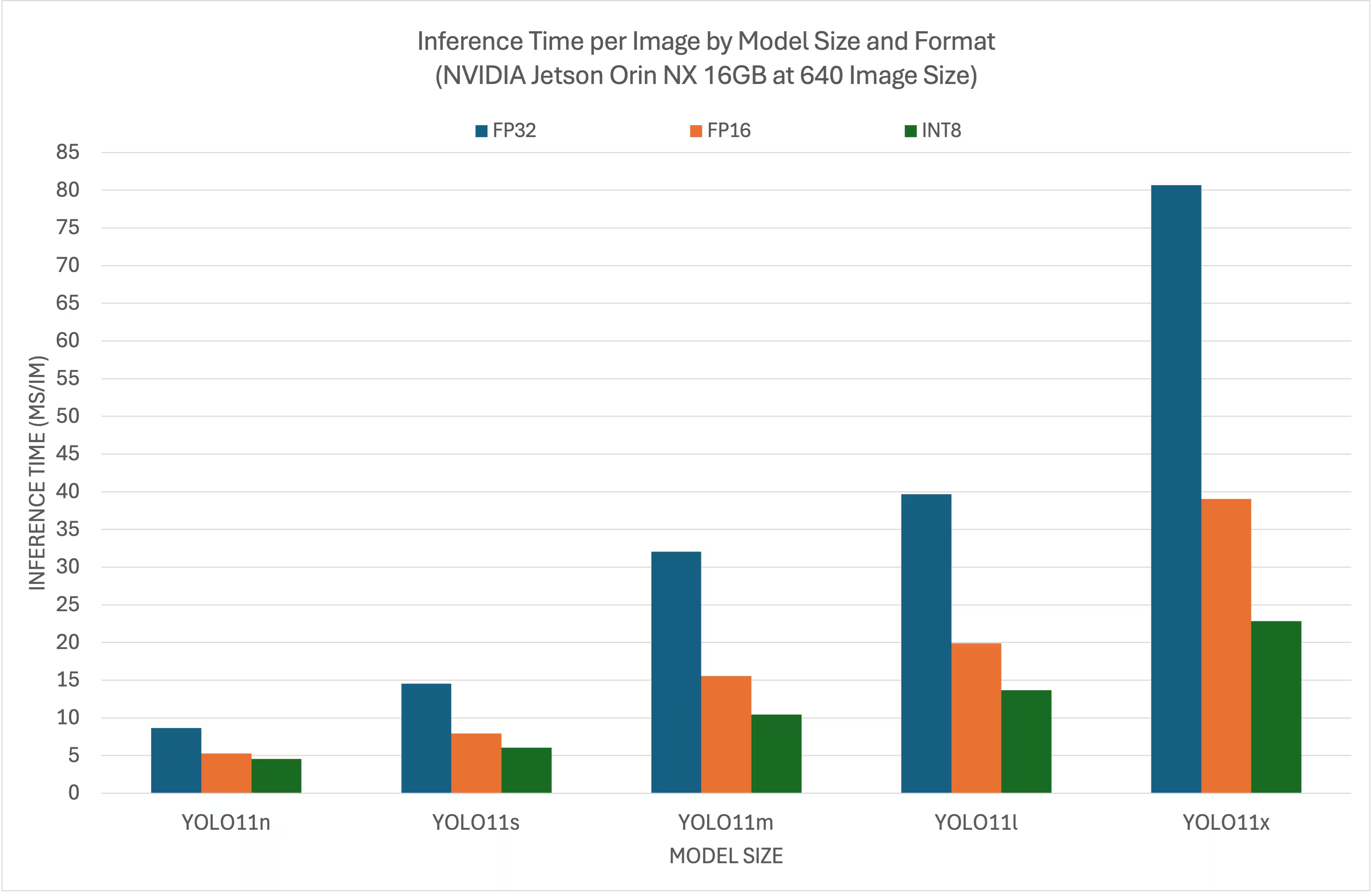

Benchmark Results

The following benchmarks summarize how YOLO26 models perform at different TensorRT precision levels with an input size of 640x640 on NVIDIA Jetson Orin NX 16GB.

Detailed Comparison Table

Performance

| Format | Status | Inference time (ms/im) |
|---|---|---|
| TensorRT (FP32) | ✅ | 8.64 |
| TensorRT (FP16) | ✅ | 5.27 |
| TensorRT (INT8) | ✅ | 4.54 |

| Format | Status | Inference time (ms/im) |
|---|---|---|
| TensorRT (FP32) | ✅ | 14.53 |
| TensorRT (FP16) | ✅ | 7.91 |
| TensorRT (INT8) | ✅ | 6.05 |

| Format | Status | Inference time (ms/im) |
|---|---|---|
| TensorRT (FP32) | ✅ | 32.05 |
| TensorRT (FP16) | ✅ | 15.55 |
| TensorRT (INT8) | ✅ | 10.43 |

| Format | Status | Inference time (ms/im) |
|---|---|---|
| TensorRT (FP32) | ✅ | 39.68 |
| TensorRT (FP16) | ✅ | 19.88 |
| TensorRT (INT8) | ✅ | 13.64 |

| Format | Status | Inference time (ms/im) |
|---|---|---|
| TensorRT (FP32) | ✅ | 80.65 |
| TensorRT (FP16) | ✅ | 39.06 |
| TensorRT (INT8) | ✅ | 22.83 |

Acknowledgments

This guide was initially created by our friends at Seeed Studio, Lakshantha and Elaine.

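FAQ

How do I set up Ultralytics YOLO26 on an NVIDIA Jetson device?

To set up Ultralytics YOLO26 on an NVIDIA Jetson device, first install the DeepStream SDK version compatible with your JetPack release. Then follow the step-by-step instructions in our Quick Start Guide to configure your NVIDIA Jetson for YOLO26 deployment.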

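What is the benefit of using TensorRT with YOLO26 on NVIDIA Jetson?

Using TensorRT with YOLO26 optimizes model inference, significantly reducing latency and improving throughput on NVIDIA Jetson devices. TensorRT delivers high-performance, low-latency deep learning inference through layer fusion, precision calibration, and kernel auto-tuning. The result is faster, more efficient execution, which is particularly useful for real-time applications such as video analytics and autonomous machines.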

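Can I run Ultralytics YOLO26 with DeepStream SDK across different NVIDIA Jetson hardware?

Yes, the guide for deploying Ultralytics YOLO26 with DeepStream SDK and TensorRT is compatible with the entire NVIDIA Jetson lineup. This includes devices such as the Jetson Orin NX 16GB with JetPack 5.1.3 and the Jetson Nano 4GB with JetPack 4.6.4. For detailed steps, see the DeepStream Configuration for YOLO26 section.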

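How can I convert a YOLO26 model to ONNX for DeepStream?

To convert a YOLO26 model to ONNX format for DeepStream deployment, use the utils/export_yolo26.py script from the DeepStream-Yolo repository.

Here is an example command:

    python3 utils/export_yolo26.py -w yolo26s.pt --opset 12 --simplify

For more details on model conversion, check out our model export section.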
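Which export flags apply depends on the DeepStream version, as described in the conversion step of this guide. The sketch below assembles the argument list from those rules. `build_export_command` is a hypothetical helper; applying `--opset 12` to every release before 6.0 (the guide only mentions 5.1) and treating `--dynamic` and `--batch` as mutually exclusive are assumptions made for illustration.

```python
import shlex


def build_export_command(weights, deepstream=(7, 1), size=None, batch=None,
                         script="export_yolo26.py"):
    """Build the export command as an argument list for a DeepStream version tuple."""
    cmd = ["python3", script, "-w", weights]
    if deepstream < (6, 0):
        # Older DeepStream: lower opset, no --simplify, no --dynamic (assumed for all < 6.0).
        cmd += ["--opset", "12"]
    else:
        cmd.append("--simplify")
    if size is not None:
        # size is (SIZE,) or (HEIGHT, WIDTH).
        cmd += ["-s", *[str(v) for v in size]]
    if batch is not None:
        cmd += ["--batch", str(batch)]  # static batch size
    elif deepstream >= (6, 1):
        cmd.append("--dynamic")  # dynamic batch size
    return cmd


if __name__ == "__main__":
    print(shlex.join(build_export_command("yolo26s.pt", deepstream=(7, 1))))
    print(shlex.join(build_export_command("yolo26s.pt", deepstream=(5, 1))))
```

Returning a list instead of a single string lets the result be passed directly to `subprocess.run` without shell quoting issues.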

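What are the performance benchmarks for YOLO26 on NVIDIA Jetson Orin NX?

The performance of YOLO26 models on NVIDIA Jetson Orin NX 16GB varies with the TensorRT precision level. For example, YOLO26s models achieve:

- FP32 precision: 14.6 ms/im, 68.5 FPS

- FP16 precision: 7.94 ms/im, 126 FPS

- INT8 precision: 5.95 ms/im, 168 FPS

These benchmarks highlight the efficiency and capability of TensorRT-optimized YOLO26 models on NVIDIA Jetson hardware. For further details, see our Benchmark Results section.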
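The FPS figures quoted above follow directly from the per-image latency. The short sketch below shows the conversion and the speedup relative to FP32, assuming one image is processed at a time so that throughput is simply the reciprocal of latency. The function name `latency_ms_to_fps` is hypothetical, and the latency values are the YOLO26s numbers from this FAQ answer.

```python
def latency_ms_to_fps(latency_ms: float) -> float:
    """Convert per-image latency in milliseconds to frames per second."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


# YOLO26s latencies (ms/im) quoted in this FAQ answer.
YOLO26S_LATENCY_MS = {"FP32": 14.6, "FP16": 7.94, "INT8": 5.95}

if __name__ == "__main__":
    baseline = YOLO26S_LATENCY_MS["FP32"]
    for precision, ms in YOLO26S_LATENCY_MS.items():
        fps = latency_ms_to_fps(ms)
        print(f"{precision}: {ms} ms/im, {fps:.1f} FPS, {baseline / ms:.2f}x vs FP32")
```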