模型部署 的最佳实践

简介

模型部署是计算机视觉项目中的一个步骤,它将模型从开发阶段带入实际应用。有多种模型部署选项:云部署提供可扩展性和易于访问的特性,边缘部署通过将模型更靠近数据源来减少延迟,而本地部署则确保隐私和控制。选择正确的策略取决于您的应用程序的需求,需要在速度、安全性和可扩展性之间取得平衡。

观看: 如何优化和部署 AI 模型:最佳实践、故障排除和安全注意事项

在部署模型时,遵循最佳实践也很重要,因为部署会显著影响模型性能的有效性和可靠性。在本指南中,我们将重点介绍如何确保您的模型部署顺利、高效且安全。

模型部署选项

通常,一旦模型经过训练、评估和测试,就需要将其转换为特定格式,以便在各种环境(例如云、边缘或本地设备)中有效部署。

使用YOLO26,您可以根据部署需求将模型导出为各种格式。例如,将YOLO26导出为ONNX非常简单,是跨框架传输模型的理想选择。要探索更多集成选项并确保在不同环境中的顺利部署,请访问我们的模型集成中心。

选择部署环境

选择在何处部署计算机视觉模型取决于多种因素。不同的环境有其独特的优势和挑战,因此选择最适合您需求的环境至关重要。

云部署

云部署非常适合需要快速扩展和处理大量数据的应用程序。诸如 AWS、Google Cloud 和 Azure 等平台可以轻松地管理从训练到部署的模型。它们提供诸如 AWS SageMaker、Google AI Platform 和 Azure Machine Learning 等服务,以帮助您完成整个过程。

然而,使用云可能很昂贵,尤其是在高数据使用量的情况下,并且如果您的用户远离数据中心,您可能会遇到延迟问题。为了管理成本和性能,优化资源使用并确保符合 数据隐私 规则非常重要。

边缘部署

边缘部署非常适合需要实时响应和低延迟的应用程序,尤其是在互联网接入有限或没有互联网接入的地区。在智能手机或物联网设备等边缘设备上部署模型可确保快速处理并保持数据本地化,从而提高隐私性。由于减少了发送到云的数据,在边缘部署还可以节省带宽。

然而,边缘设备通常处理能力有限,因此您需要优化您的模型。像 TensorFlow Lite 和 NVIDIA Jetson 这样的工具可以提供帮助。尽管有这些好处,但维护和更新许多设备可能具有挑战性。

本地部署

当数据隐私至关重要,或者在互联网连接不可靠或没有互联网连接时,本地部署是最佳选择。在本地服务器或桌面上运行模型使您可以完全控制并确保数据的安全。如果服务器靠近用户,还可以减少延迟。

然而,在本地扩展可能很困难,并且维护可能很耗时。使用像 Docker 这样的工具进行容器化和 Kubernetes 进行管理可以帮助使本地部署更有效率。定期更新和维护对于保持一切顺利运行是必要的。

用于简化部署的容器化

容器化是一种强大的方法,可将您的模型及其所有依赖项打包到一个称为容器的标准化单元中。此技术可确保在不同环境中的一致性能,并简化部署过程。

使用 Docker 进行模型部署的优势

由于以下几个原因,Docker已成为机器学习部署中容器化的行业标准:

- 环境一致性: Docker 容器封装了您的模型及其所有依赖项,通过确保在开发、测试和生产环境中的一致行为,消除了“它在我的机器上可以工作”的问题。

- 隔离:容器将应用程序彼此隔离,防止不同软件版本或库之间的冲突。

- 可移植性:Docker 容器可以在任何支持 Docker 的系统上运行,从而可以轻松地在不同平台上部署模型,而无需修改。

- 可扩展性:可以根据需求轻松地向上或向下扩展容器,而Kubernetes等编排工具可以自动执行此过程。

- 版本控制:Docker 镜像可以进行版本控制,允许您跟踪更改并在需要时回滚到以前的版本。

为YOLO26部署实施Docker

要将您的YOLO26模型容器化,您可以创建一个Dockerfile,其中指定所有必要的依赖项和配置。以下是一个基本示例:

FROM ultralytics/ultralytics:latest

WORKDIR /app

# Copy your model and any additional files

COPY ./models/yolo26.pt /app/models/

COPY ./scripts /app/scripts/

# Set up any environment variables

ENV MODEL_PATH=/app/models/yolo26.pt

# Command to run when the container starts

CMD ["python", "/app/scripts/predict.py"]

这种方法确保您的模型部署在不同的环境中是可重现且一致的,从而显著减少了经常困扰部署过程的“在我的机器上可以工作”的问题。

模型优化技术

优化您的计算机视觉模型有助于它高效运行,尤其是在资源有限的环境(如边缘设备)中部署时。以下是优化模型的一些关键技术。

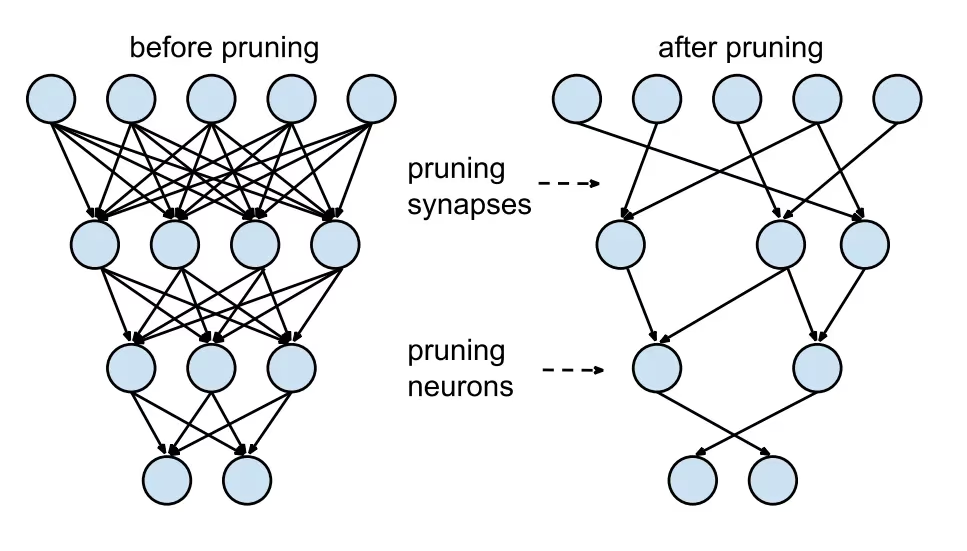

模型剪枝

剪枝通过移除对最终输出贡献较小的权重来减小模型的大小。它使模型更小、更快,而不会显着影响准确性。剪枝涉及识别和消除不必要的参数,从而产生一个需要较少计算能力的更轻量级的模型。它对于在资源有限的设备上部署模型特别有用。

模型量化

量化将模型的权重和激活从高精度(如 32 位浮点数)转换为较低精度(如 8 位整数)。通过减小模型尺寸,它可以加快推理速度。量化感知训练 (QAT) 是一种在训练时考虑量化的方法,与训练后量化相比,可以更好地保持准确性。通过在训练阶段处理量化,模型学习调整到较低的精度,从而在降低计算需求的同时保持性能。

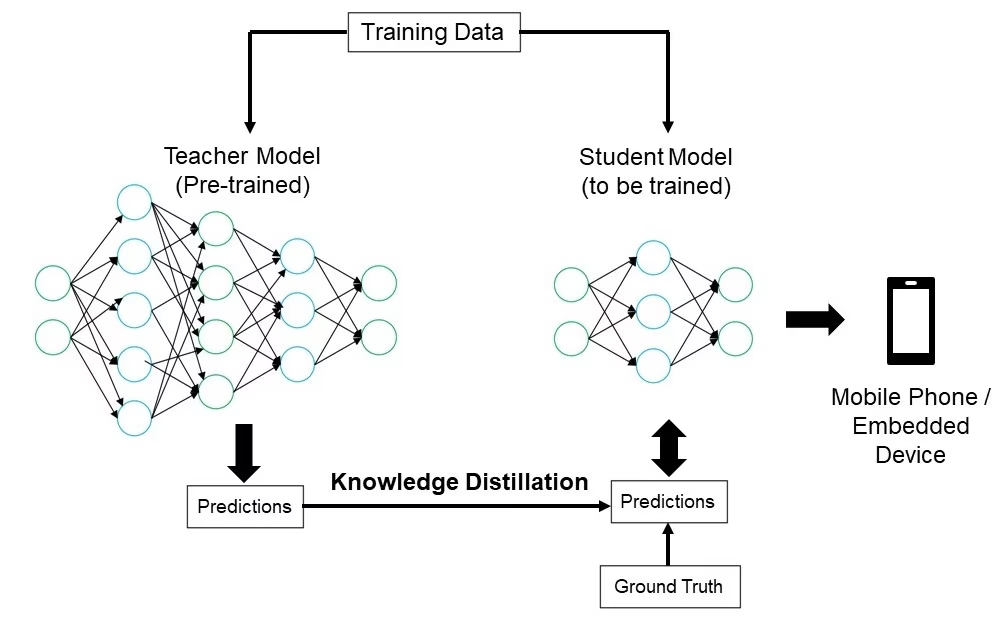

知识蒸馏

知识蒸馏涉及训练一个更小、更简单的模型(学生模型)来模仿一个更大、更复杂的模型(教师模型)的输出。学生模型学习逼近教师模型的预测,从而产生一个紧凑的模型,该模型保留了教师模型的大部分 准确性。这项技术有利于创建高效的模型,适用于在资源受限的边缘设备上部署。

解决部署问题

在部署计算机视觉模型时,您可能会面临挑战,但了解常见问题和解决方案可以使过程更加顺畅。以下是一些常规故障排除技巧和最佳实践,可帮助您解决部署问题。

您的模型在部署后准确性降低

部署后模型准确性下降可能会令人沮丧。这个问题可能源于多种因素。以下是一些帮助您识别和解决问题的步骤:

- 检查数据一致性: 检查模型部署后处理的数据是否与训练时使用的数据一致。数据分布、质量或格式的差异会显著影响性能。

- 验证预处理步骤: 确认在部署期间也一致地应用训练期间应用的所有预处理步骤。这包括调整图像大小、标准化像素值和其他数据转换。

- 评估模型的环境: 确保部署期间使用的硬件和软件配置与训练期间使用的配置相匹配。库、版本和硬件功能的差异可能会导致差异。

- 监控模型推理:在推理管道的各个阶段记录输入和输出,以检测任何异常。这有助于识别数据损坏或模型输出处理不当等问题。

- 检查模型导出和转换: 重新导出模型,并确保转换过程保持模型权重和架构的完整性。

- 使用受控数据集进行测试: 在具有您控制的数据集的测试环境中部署模型,并将结果与训练阶段进行比较。您可以确定问题是出在部署环境还是数据上。

部署YOLO26时,有几个因素可能会影响模型准确性。将模型转换为TensorRT等格式涉及权重量子化和层融合等优化,这可能会导致轻微的精度损失。使用FP16(半精度)而非FP32(全精度)可以加快推理速度,但可能会引入数值精度误差。此外,硬件限制,例如Jetson Nano上较低的CUDA核心数量和减少的内存带宽,也可能影响性能。

推理时间超出预期

在部署机器学习模型时,确保它们高效运行至关重要。如果推理时间超出预期,可能会影响用户体验和应用程序的有效性。以下是一些帮助您识别和解决问题的步骤:

- 实施预热运行: 初始运行通常包括设置开销,这可能会歪曲延迟测量。在测量延迟之前执行几次预热推理。排除这些初始运行可以更准确地测量模型的性能。

- 优化推理引擎: 仔细检查推理引擎是否已针对您的特定 GPU 架构进行了全面优化。使用针对您的硬件量身定制的最新驱动程序和软件版本,以确保最佳性能和兼容性。

- 使用异步处理: 异步处理可以帮助更有效地管理工作负载。 使用异步处理技术来并发处理多个推理,这有助于分配负载并减少等待时间。

- 分析推理流程: 识别推理流程中的瓶颈可以帮助查明延迟的来源。使用分析工具分析推理过程的每个步骤,识别并解决导致重大延迟的任何阶段,例如低效的层或数据传输问题。

- 使用适当的精度: 使用高于必要的精度会降低推理速度。 尝试使用较低的精度,例如 FP16(半精度)而不是 FP32(全精度)。 虽然 FP16 可以减少推理时间,但也要记住它会影响模型精度。

如果您在部署YOLO26时遇到此问题,请考虑YOLO26提供各种模型尺寸,例如适用于内存容量较低设备的YOLO26n(nano)和适用于更强大GPU的YOLO26x(超大)。为您的硬件选择合适的模型变体有助于平衡内存使用和处理时间。

另请注意,输入图像的大小直接影响内存使用和处理时间。较低的分辨率会减少内存使用并加快推理速度,而较高的分辨率会提高准确性,但需要更多的内存和处理能力。

模型部署中的安全注意事项

部署的另一个重要方面是安全性。已部署模型的安全性对于保护敏感数据和知识产权至关重要。以下是您可以遵循的与安全模型部署相关的一些最佳实践。

安全数据传输

确保客户端和服务器之间发送的数据安全对于防止未经授权的方拦截或访问数据非常重要。您可以使用 TLS(传输层安全)等加密协议在数据传输时对其进行加密。即使有人拦截了数据,他们也无法读取它。您还可以使用端到端加密,保护数据从源到目的地的整个过程,这样中间的任何人都无法访问它。

访问控制

必须控制谁可以访问您的模型及其数据,以防止未经授权的使用。使用强大的身份验证方法来验证尝试访问模型的用户或系统的身份,并考虑通过多因素身份验证 (MFA) 增加额外安全性。设置基于角色的访问控制 (RBAC),根据用户角色分配权限,以便人们只能访问他们所需的内容。保留详细的审计日志,以跟踪对模型及其数据的所有访问和更改,并定期审查这些日志以发现任何可疑活动。

模型混淆

通过模型混淆可以保护您的模型免受逆向工程或滥用。 它涉及加密模型参数,例如神经网络中的权重和偏差,使未经授权的个人难以理解或更改模型。 您还可以通过重命名层和参数或添加虚拟层来混淆模型的架构,从而使攻击者更难对其进行逆向工程。 您还可以在安全环境中提供模型服务,例如安全区域或使用可信执行环境 (TEE),可以在推理期间提供额外的保护层。

与您的同行分享想法

成为计算机视觉爱好者社区的一员可以帮助您更快地解决问题和学习。以下是一些联系、获取帮助和分享想法的方式。

社区资源

- GitHub Issues: 浏览YOLO26 GitHub仓库并使用“Issues”选项卡提问、报告bug和建议新功能。社区和维护者非常活跃,随时准备提供帮助。

- Ultralytics Discord 服务器: 加入 Ultralytics Discord 服务器,与其他用户和开发人员聊天、获得支持并分享您的经验。

官方文档

- Ultralytics YOLO26 文档:访问官方 YOLO26 文档,获取各种计算机视觉项目的详细指南和实用技巧。

使用这些资源将帮助您解决挑战,并及时了解计算机视觉社区中的最新趋势和实践。

结论与后续步骤

我们介绍了一些部署计算机视觉模型时应遵循的最佳实践。通过保护数据、控制访问和混淆模型细节,您可以在保护敏感信息的同时保持模型的平稳运行。我们还讨论了如何使用预热运行、优化引擎、异步处理、分析管道和选择正确的精度等策略来解决常见问题,如准确性降低和推理速度慢。

部署模型后,下一步是监控、维护和记录您的应用程序。定期监控有助于快速发现和修复问题,维护使您的模型保持最新和功能正常,良好的文档记录跟踪所有更改和更新。这些步骤将帮助您实现计算机视觉项目的目标。

常见问题

使用Ultralytics YOLO26部署机器学习模型的最佳实践是什么?

部署机器学习模型,特别是使用 Ultralytics YOLO26,涉及多项最佳实践,以确保效率和可靠性。首先,选择适合您需求的部署环境——云端、边缘或本地。通过剪枝、量化和知识蒸馏等技术优化模型,以便在资源受限的环境中高效部署。考虑使用Docker 容器化,以确保不同环境之间的一致性。最后,确保数据一致性和预处理步骤与训练阶段保持一致,以维持性能。您还可以参考模型部署选项,获取更详细的指南。

如何排查Ultralytics YOLO26模型常见的部署问题?

故障排除部署问题可以分解为几个关键步骤。如果您的模型在部署后准确率下降,请检查数据一致性,验证预处理步骤,并确保硬件/软件环境与您在训练期间使用的环境相匹配。对于推理时间过长的问题,请执行预热运行,优化您的推理引擎,使用异步处理,并分析您的推理管道。有关这些最佳实践的详细指南,请参阅故障排除部署问题。

Ultralytics YOLO26优化如何提升模型在边缘设备上的性能?

优化用于边缘设备的 Ultralytics YOLO26 模型涉及使用剪枝来减小模型大小,量化来将权重转换为更低精度,以及知识蒸馏来训练模仿大型模型的更小模型。这些技术确保模型在计算能力有限的设备上高效运行。TensorFlow Lite 和 NVIDIA Jetson 等工具对这些优化特别有用。在我们的模型优化部分了解更多关于这些技术的信息。

使用Ultralytics YOLO26部署机器学习模型有哪些安全考虑?

部署机器学习模型时,安全性至关重要。确保使用 TLS 等加密协议进行安全数据传输。实施强大的访问控制,包括强身份验证和基于角色的访问控制 (RBAC)。模型混淆技术(例如加密模型参数并在受信任的执行环境 (TEE) 等安全环境中提供模型)可提供额外的保护。有关详细实践,请参阅安全注意事项。

如何为我的Ultralytics YOLO26模型选择合适的部署环境?

为您的 Ultralytics YOLO26 模型选择最佳部署环境取决于您应用程序的具体需求。云端部署提供可扩展性和易访问性,非常适合处理大量数据的应用程序。边缘部署最适合需要实时响应的低延迟应用程序,可使用TensorFlow Lite等工具。本地部署适用于需要严格数据隐私和控制的场景。有关每个环境的全面概述,请查看我们关于选择部署环境的部分。