机器学习最佳实践和模型训练技巧

简介

在进行计算机视觉项目时,最重要的步骤之一是模型训练。在达到这一步之前,你需要定义你的目标并收集和标注你的数据。在预处理数据以确保其干净和一致后,你可以继续训练你的模型。

观看: 模型训练技巧 | 如何处理大型数据集 | 批量大小、GPU 利用率和 混合精度

那么,什么是模型训练?模型训练是指教导您的模型识别视觉模式并根据您的数据做出预测的过程。它直接影响应用程序的性能和准确性。在本指南中,我们将介绍最佳实践、优化技术和故障排除技巧,以帮助您有效地训练计算机视觉模型。

如何训练机器学习模型

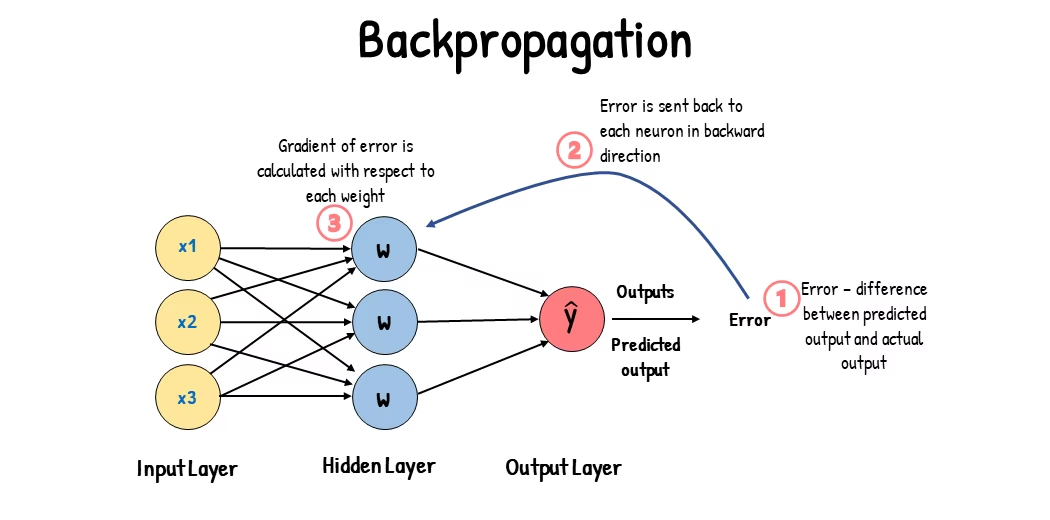

计算机视觉模型的训练是通过调整其内部参数以最小化误差来实现的。首先,模型会接收大量带有标签的图像。它对这些图像中的内容进行预测,并将预测结果与实际标签或内容进行比较,以计算误差。这些误差显示了模型的预测与真实值之间的差距。

在训练过程中,模型通过一种称为反向传播的过程,迭代地进行预测、计算误差并更新其参数。在这个过程中,模型调整其内部参数(权重和偏差)以减少误差。通过多次重复这个循环,模型逐渐提高其准确性。随着时间的推移,它学会识别复杂的模式,如形状、颜色和纹理。

这种学习过程使得计算机视觉模型能够执行各种任务,包括目标检测、实例分割和图像分类。最终目标是创建一个能够将其学习推广到新的、未见过的图像的模型,以便它可以准确地理解现实世界应用中的视觉数据。

现在我们了解了训练模型时幕后发生的事情,接下来让我们看看训练模型时需要考虑的要点。

大型数据集上的训练

当您计划使用大型数据集来训练模型时,需要考虑几个不同的方面。例如,您可以调整批次大小、控制 GPU 利用率、选择使用多尺度训练等。让我们详细了解一下这些选项。

批量大小和GPU利用率

在大型数据集上训练模型时,高效利用 GPU 至关重要。批次大小是一个重要因素。它是机器学习模型在单个训练迭代中处理的数据样本数。 使用 GPU 支持的最大批次大小,您可以充分利用其功能并减少模型训练所需的时间。但是,您需要避免耗尽 GPU 内存。如果遇到内存错误,请逐步减小批次大小,直到模型顺利训练。

观看: 如何使用 Ultralytics YOLO26 进行批量推理 | 加速 Python 中的目标 detect 🎉

对于 YOLO26,您可以设置 batch_size 参数在 训练配置 中以匹配您的 GPU 容量。此外,在训练脚本中设置 batch=-1 将自动确定 批次大小 ,该批次大小可以根据您设备的性能进行有效处理。通过微调批次大小,您可以充分利用 GPU 资源并改善整体训练过程。

子集训练

子集训练是一种明智的策略,它涉及在代表较大数据集的较小数据集上训练模型。它可以节省时间和资源,尤其是在初始模型开发和测试期间。如果您时间紧迫或正在试验不同的模型配置,子集训练是一个不错的选择。

对于 YOLO26,您可以通过使用以下方法轻松实现子集训练: fraction 参数轻松实现子集训练。此参数允许您指定用于训练的数据集比例。例如,设置 fraction=0.1 将使用 10% 的数据训练您的模型。您可以使用此技术进行快速迭代和调整模型,然后再提交使用完整数据集训练模型。子集训练可帮助您快速取得进展并及早发现潜在问题。

多尺度训练

多尺度训练是一种通过在不同尺寸的图像上训练模型来提高模型泛化能力的技术。您的模型可以学习检测不同尺度和距离的物体,并变得更加鲁棒。

例如,当您训练 YOLO26 时,您可以通过设置以下参数来启用多尺度训练: scale 参数来启用多尺度训练。此参数按指定因子调整训练图像的大小,从而模拟不同距离的物体。例如,设置 scale=0.5 在训练期间,将训练图像随机缩放 0.5 到 1.5 倍。配置此参数可使您的模型体验各种图像比例,并提高其在不同对象大小和场景中的检测能力。

Ultralytics 通过以下方式支持图像尺寸多尺度训练: multi_scale 参数。不同于 scale该功能会放大图像,然后通过填充/裁剪恢复至原始尺寸。 imgsz, multi_scale 变化 imgsz 自身每批(四舍五入至模型步长)。例如,对于 imgsz=640 和 multi_scale=0.25训练数据的大小以步长方式从480到800进行采样(例如:480, 512, 544, ..., 800),而 multi_scale=0.0 保持固定大小。

缓存

缓存是提高训练机器学习模型效率的重要技术。通过将预处理的图像存储在内存中,缓存减少了 GPU 等待从磁盘加载数据的时间。模型可以持续接收数据,而不会因磁盘 I/O 操作而导致延迟。

在训练 YOLO26 时,缓存可以通过以下参数进行控制: cache 参数训练 YOLO11 时,可以控制缓存:

cache=True:将数据集图像存储在 RAM 中,提供最快的访问速度,但会增加内存使用量。cache='disk':将图像存储在磁盘上,比RAM慢,但比每次都加载新数据要快。cache=False:禁用缓存,完全依赖磁盘I/O,这是最慢的选项。

混合精度训练

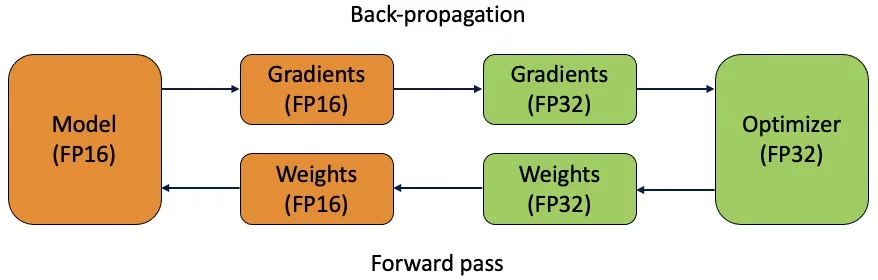

混合精度训练同时使用16位 (FP16) 和32位 (FP32) 浮点类型。通过使用FP16进行更快的计算,并在需要时使用FP32来保持精度,从而利用FP16和FP32两者的优势。大多数神经网络的操作都在FP16中完成,以受益于更快的计算速度和更低的内存使用率。但是,模型的权重的主副本保留在FP32中,以确保权重更新步骤期间的准确性。您可以在相同的硬件约束下处理更大的模型或更大的批次大小。

要实现混合精度训练,您需要修改训练脚本并确保您的硬件(如 GPU)支持它。许多现代深度学习框架,如 PyTorch 和 TensorFlow,都提供对混合精度的内置支持。

使用 YOLO26 进行混合精度训练非常简单。您可以使用 amp 在您的训练配置中的标志。设置 amp=True 启用自动混合精度(AMP)训练。混合精度训练是一种简单而有效的优化模型训练过程的方法。

预训练权重

使用预训练权重是加速模型训练过程的明智方法。预训练权重来自已在大型数据集上训练过的模型,让您的模型有一个良好的开端。迁移学习将预训练模型适应于新的相关任务。微调预训练模型涉及从这些权重开始,然后在您的特定数据集上继续训练。这种训练方法可以缩短训练时间,并通常获得更好的性能,因为模型从一开始就对基本特征有了扎实的理解。

字段 pretrained 参数使 YOLO26 的迁移学习变得容易。设置 pretrained=True 将使用默认的预训练权重,或者您可以指定自定义预训练模型的路径。使用预训练权重和迁移学习可有效提升模型能力并降低训练成本。

处理大型数据集时要考虑的其他技术

在处理大型数据集时,还有一些其他的技术需要考虑:

- 学习率调度器:实现学习率调度器可在训练期间动态调整学习率。调整得当的学习率可以防止模型越过最小值并提高稳定性。在训练 YOLO26 时,

lrf参数通过将最终学习率设置为初始速率的一部分来帮助管理学习率调度。 - 分布式训练: 对于处理大型数据集,分布式训练可以改变游戏规则。您可以通过在多个GPU或机器上分配训练工作负载来减少训练时间。这种方法对于具有大量计算资源的企业级项目尤其有价值。

训练的轮数

在训练模型时,epoch 指的是完整训练数据集的一次完整传递。在一个 epoch 期间,模型会处理训练集中的每个示例一次,并根据学习算法更新其参数。通常需要多个 epoch 才能使模型能够随着时间的推移学习和改进其参数。

一个常见的问题是如何确定训练模型的epoch数。一个好的起点是300个epoch。如果模型过早地过拟合,您可以减少epoch的数量。如果在300个epoch后没有发生过拟合,您可以将训练延长到600、1200或更多个epoch。

然而,理想的 epoch 数量会根据数据集大小和项目目标而异。较大的数据集可能需要更多的 epoch 才能使模型有效学习,而较小的数据集可能需要较少的 epoch 以避免过拟合。对于 YOLO26,您可以设置 epochs 参数。

提前停止

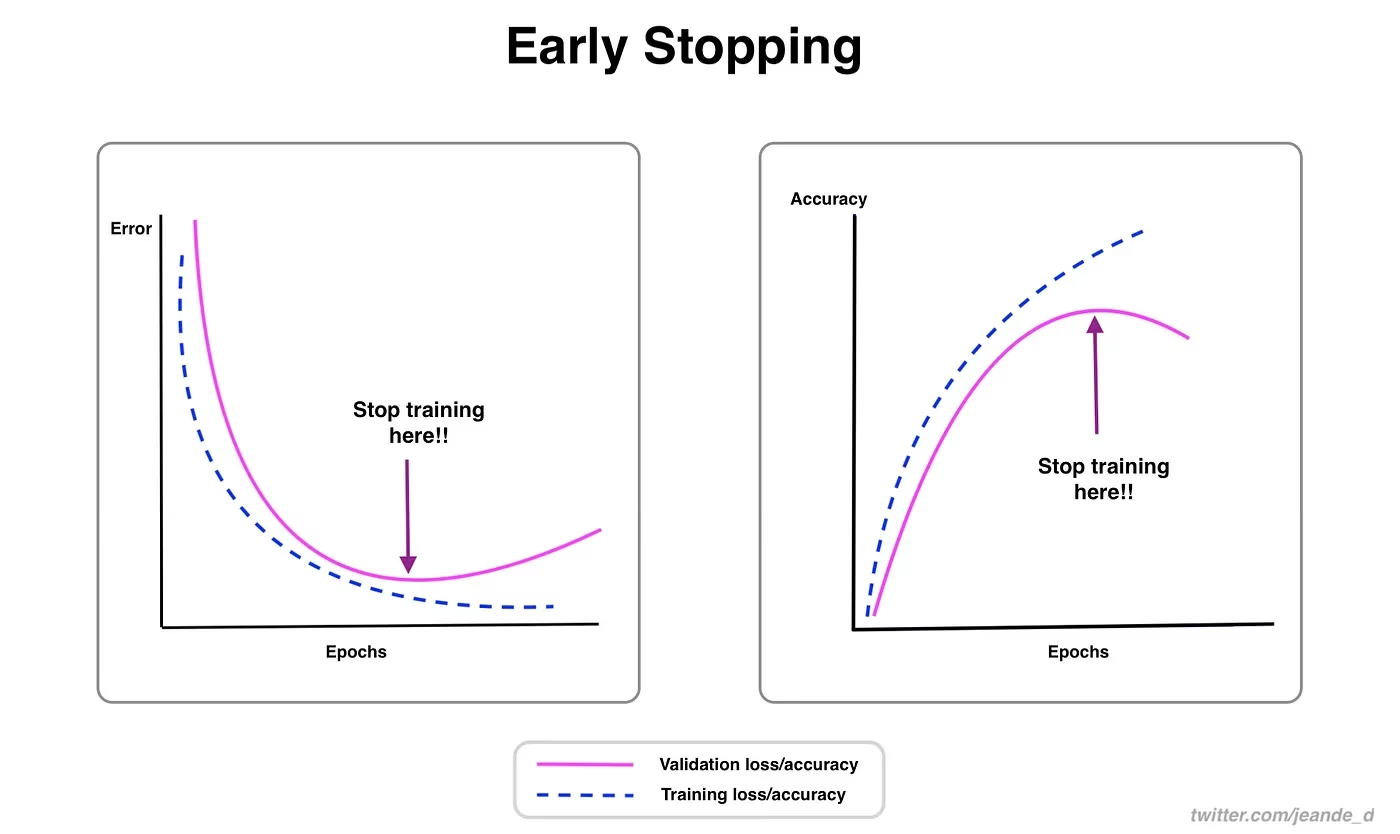

提前停止是优化模型训练的一种有价值的技术。通过监控验证性能,您可以在模型停止改进时停止训练。您可以节省计算资源并防止过拟合。

该过程包括设置一个耐心参数,该参数确定在停止训练之前等待验证指标改善的 epoch 数量。如果模型的性能在这些 epoch 内没有提高,则停止训练以避免浪费时间和资源。

对于 YOLO26,您可以通过设置训练配置中的 patience 参数来启用早期停止。例如, patience=5 意味着如果在连续5个epochs中验证指标没有改善,训练将停止。使用此方法可确保训练过程保持高效,并在不过度计算的情况下实现最佳性能。

云端训练与本地训练的选择

训练模型有两种选择:云训练和本地训练。

云训练提供可扩展性和强大的硬件,非常适合处理大型数据集和复杂模型。诸如 Google Cloud、AWS 和 Azure 等平台提供对高性能 GPU 和 TPU 的按需访问,从而加快训练时间并能够使用更大的模型进行实验。但是,云训练可能很昂贵,尤其是在长时间内,并且数据传输会增加成本和延迟。

本地训练提供了更大的控制和定制性,让您可以根据特定需求定制环境,并避免持续的云成本。对于长期项目来说,它可能更经济,而且由于您的数据保留在本地,因此更安全。但是,本地硬件可能存在资源限制,并且需要维护,这可能会导致大型模型的训练时间更长。

选择优化器

优化器是一种算法,它调整神经网络的权重,以最小化损失函数,损失函数衡量模型执行的优劣程度。简单来说,优化器通过调整模型的参数来减少误差,从而帮助模型学习。选择正确的优化器会直接影响模型学习的速度和准确性。

您还可以微调优化器参数以提高模型性能。调整学习率可以设置更新参数时的步长。为了稳定性,您可以从适中的学习率开始,然后随着时间的推移逐渐降低学习率,以改善长期学习效果。此外,设置动量可以确定过去更新对当前更新的影响程度。动量的常用值约为 0.9,通常可以提供良好的平衡。

常用优化器

不同的优化器各有优缺点。让我们简单了解一下几种常见的优化器。

SGD (随机梯度下降):

- 使用损失函数相对于参数的梯度来更新模型参数。

- 简单高效,但收敛速度可能较慢,并且可能会陷入局部最小值。

Adam (自适应矩估计):

- 结合了带动量的 SGD 和 RMSProp 的优点。

- 根据梯度的一阶矩和二阶矩的估计值,调整每个参数的学习率。

- 非常适合嘈杂的数据和稀疏梯度。

- 高效且通常需要较少调优,使其成为 YOLO26 的推荐优化器。

RMSProp(均方根传播):

- 通过将梯度除以最近梯度幅度的运行平均值来调整每个参数的学习率。

- 有助于处理梯度消失问题,并且对于循环神经网络有效。

对于 YOLO26, optimizer 参数允许您选择各种优化器,包括 SGD, Adam, AdamW, NAdam, RAdam 和 RMSProp,或者您可以将其设置为 auto 以根据模型配置自动选择。

与社区联系

成为计算机视觉爱好者社区的一员可以帮助您更快地解决问题和学习。以下是一些联系、获取帮助和分享想法的方式。

社区资源

- GitHub 问题: 访问 YOLO26 GitHub 仓库,并使用 Issues 选项卡提出问题、报告错误和建议新功能。社区和维护者非常活跃,随时准备提供帮助。

- Ultralytics Discord 服务器: 加入 Ultralytics Discord 服务器,与其他用户和开发人员聊天、获得支持并分享您的经验。

官方文档

- Ultralytics YOLO26 文档:查阅官方YOLO26文档,获取关于各种计算机视觉项目的详细指南和实用技巧。

使用这些资源将帮助您解决挑战,并及时了解计算机视觉社区中的最新趋势和实践。

主要内容

训练计算机视觉模型需要遵循良好实践、优化策略并解决出现的问题。调整批量大小、混合精度训练以及使用预训练权重等技术可以使您的模型表现更好,训练更快。子集训练和早期停止等方法有助于节省时间和资源。与社区保持联系并关注新趋势将有助于您不断提升模型训练技能。

常见问题

在使用Ultralytics YOLO训练大型数据集时,如何提高GPU利用率?

要提高 GPU 利用率,请设置 batch_size 训练配置中的参数设置为您的 GPU 支持的最大尺寸。这确保您充分利用 GPU 的能力,缩短训练时间。如果您遇到内存错误,请逐步减小批处理大小,直到训练顺利运行。对于 YOLO26,设置 batch=-1 将自动确定用于高效处理的最佳批量大小。更多信息请参考 训练配置.

什么是混合精度训练,以及如何在 YOLO26 中启用它?

混合精度训练利用 16 位 (FP16) 和 32 位 (FP32) 浮点类型,以平衡计算速度和精度。这种方法可以加快训练速度并减少内存使用,同时不影响模型 准确性。要在 YOLO26 中启用混合精度训练,请设置 amp 参数设置为 True 在您的训练配置中。这将激活自动混合精度 (AMP) 训练。有关此优化技术的更多详细信息,请参阅 训练配置.

多尺度训练如何提升 YOLO26 模型性能?

多尺度训练通过在不同尺寸的图像上进行训练,提升模型性能,使模型能够更好地泛化不同尺度和距离。在YOLO26中,您可以通过设置... scale 参数来启用多尺度训练。例如, scale=0.5 采样缩放因子在0.5至1.5之间,然后通过填充/裁剪恢复至 imgsz该技术可模拟不同距离的物体,使模型在各种场景下更具鲁棒性。有关设置和更多细节,请参阅 训练配置.

如何使用预训练权重来加速 YOLO26 中的训练?

使用预训练权重可以利用已熟悉基础视觉特征的模型,大大加速训练并提高模型准确性。在YOLO26中,只需设置... pretrained 参数设置为 True 或在训练配置中提供自定义预训练权重的路径。这种称为迁移学习的方法,允许在大数据集上训练的模型有效地适应您的特定应用。在...中了解更多关于如何使用预训练权重及其优势。 训练配置指南.

模型训练推荐的 epoch 数量是多少,以及如何在 YOLO26 中设置它?

周期数(epochs)是指模型训练期间对训练数据集的完整遍历次数。典型的起始点是300个周期。如果您的模型过早过拟合,可以减少周期数。另外,如果没有观察到过拟合,您可以将训练延长到600、1200或更多周期。要在YOLO26中设置此项,请使用... epochs 参数。有关确定理想 epoch 数的更多建议,请参阅关于 epoch 数的这一部分.