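Insights on Model Evaluation and Fine-Tuning

Introduction

Once you've trained your computer vision model, evaluating and refining it to perform optimally is essential. Training alone isn't enough: you need to confirm that the model is accurate, efficient, and meets the objective of your computer vision project. Evaluating and fine-tuning your model lets you identify weaknesses, improve accuracy, and boost overall performance.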

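In this guide, we share insights on model evaluation and fine-tuning to make this step of a computer vision project more approachable. We discuss how to interpret evaluation metrics and apply fine-tuning techniques, giving you the knowledge to improve your model's capabilities.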

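Evaluating Model Performance Using Metrics

Evaluating how well a model performs tells us how effectively it works. Several metrics are used to measure performance. They provide clear, numerical insights that can guide improvements and help ensure the model meets its intended goals. Let's take a closer look at a few key metrics.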

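Confidence Score

The confidence score represents the model's certainty that a detected object belongs to a particular class. It ranges from 0 to 1, with higher scores indicating greater confidence. The confidence score helps filter predictions: only detections with a score above a specified threshold are considered valid.

Quick tip: When running inference, if you aren't seeing any predictions and you've checked everything else, try lowering the confidence threshold. Sometimes the threshold is set too high, causing the model to discard valid predictions. Lowering it lets the model consider more candidates. This might not meet your project goals, but it's a good way to see what the model can do and decide how to fine-tune it.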

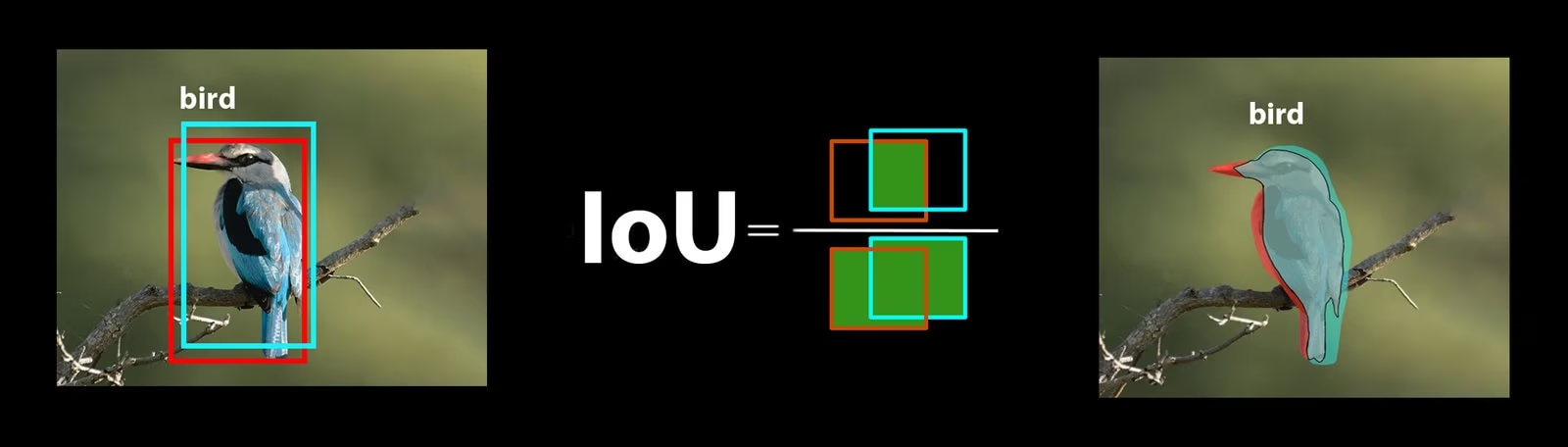
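As a minimal sketch of how a confidence threshold filters predictions, the snippet below keeps only detections whose score meets the threshold. The `filter_by_confidence` helper, the dictionary layout, and the threshold values are all hypothetical and chosen for illustration; they are not part of any library API.

```python
def filter_by_confidence(detections, conf_threshold=0.25):
    """Keep only detections whose confidence is at or above the threshold."""
    return [d for d in detections if d["confidence"] >= conf_threshold]


# Hypothetical detections, as a model might report them for one image
detections = [
    {"label": "person", "confidence": 0.91},
    {"label": "dog", "confidence": 0.42},
    {"label": "bicycle", "confidence": 0.18},
]

# A strict threshold discards everything except the most certain detection
strict = filter_by_confidence(detections, conf_threshold=0.5)

# A lenient threshold lets lower-confidence candidates through
lenient = filter_by_confidence(detections, conf_threshold=0.1)

print([d["label"] for d in strict])
print([d["label"] for d in lenient])
```

Lowering the threshold never removes a detection that a higher threshold kept; it only admits additional, less certain ones.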

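Intersection over Union

Intersection over Union (IoU) is an object detection metric that measures how well a predicted bounding box overlaps with the ground-truth bounding box. IoU values range from 0 to 1, where 1 is a perfect match and 0 means no overlap. IoU is essential because it quantifies how closely the predicted boundaries match the actual object boundaries.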
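The definition can be written out directly: IoU is the area of the intersection divided by the area of the union. The sketch below assumes axis-aligned boxes given as `(x1, y1, x2, y2)` corner coordinates; the `box_iou` name is illustrative.

```python
def box_iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap; zero when the boxes do not intersect
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    # Union = sum of both areas minus the overlap counted twice
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter

    return inter / union if union > 0 else 0.0


predicted = (50, 50, 150, 150)
ground_truth = (100, 100, 200, 200)
print(box_iou(predicted, ground_truth))  # 2500 / 17500, roughly 0.143
```

Here the two 100x100 boxes share a 50x50 region, so the intersection is 2500 and the union is 17500.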

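Mean Average Precision

Mean average precision (mAP) is a way to measure how well an object detection model performs. It computes the average precision for each object class, averages those scores, and gives a single number that shows how accurately the model identifies and classifies objects.

Let's focus on two specific mAP metrics:

- mAP@.5: Measures the mean average precision at a single IoU (Intersection over Union) threshold of 0.5. This metric checks whether the model can find objects under a relatively loose localization requirement. It focuses on whether the object is roughly in the right place rather than perfectly placed, so it shows whether the model is generally good at spotting objects.

- mAP@.5:.95: Averages the mAP values calculated at multiple IoU thresholds, from 0.5 to 0.95 in increments of 0.05. This metric is more detailed and strict. It gives a fuller picture of how accurately the model finds objects at different levels of strictness, and it is especially useful for applications that need precise object detection.

Other mAP metrics include mAP@0.75, which uses a stricter IoU threshold of 0.75, and mAP@small, medium, and large, which evaluate precision across objects of different sizes.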
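To show how the IoU threshold changes the score, here is a simplified sketch of average precision for a single class, followed by the average over the ten thresholds from 0.5 to 0.95. It assumes each detection has already been matched to at most one ground-truth box and uses all-point interpolation of the precision-recall curve; evaluation tools may use a different interpolation, and a full mAP would also average across classes. The function name and the sample data are hypothetical.

```python
def average_precision(detections, num_gt, iou_threshold):
    """AP for one class from (confidence, iou_with_matched_gt) pairs."""
    # Rank detections from most to least confident
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)

    tp = 0
    precisions, recalls = [], []
    for rank, (_, iou) in enumerate(ranked, start=1):
        if iou >= iou_threshold:  # counts as a true positive at this threshold
            tp += 1
        precisions.append(tp / rank)
        recalls.append(tp / num_gt)

    # Precision envelope: make precision non-increasing as recall grows
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Area under the precision-recall curve
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


# Hypothetical data: four detections for a class with four ground-truth objects
detections = [(0.9, 0.92), (0.8, 0.62), (0.7, 0.30), (0.6, 0.78)]
num_gt = 4

ap50 = average_precision(detections, num_gt, 0.5)

# Thresholds 0.50, 0.55, ..., 0.95 (built from integers to avoid float drift)
thresholds = [t / 100 for t in range(50, 100, 5)]
ap50_95 = sum(average_precision(detections, num_gt, t) for t in thresholds) / len(thresholds)

print(f"AP@.5 = {ap50:.4f}, AP@.5:.95 = {ap50_95:.4f}")
```

The stricter metric is lower because loosely localized detections stop counting as true positives as the threshold rises.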

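Evaluating YOLO26 Model Performance

With YOLO26, you can use validation mode to evaluate the model. Also, be sure to read our guide that goes in depth into YOLO26 performance metrics and how to interpret them.

Common Community Questions

When evaluating your YOLO26 model, you might run into a few hiccups. Based on common community questions, here are some tips to help you get the most out of your YOLO26 model: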

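Handling Variable Image Sizes

Evaluating your YOLO26 model with images of different sizes helps you understand its performance on diverse datasets. With the rect=True validation parameter, YOLO26 adjusts the network's stride for each batch based on the image sizes, allowing the model to handle rectangular images without forcing them to a single size.

The imgsz validation parameter sets the maximum dimension for image resizing and defaults to 640. You can adjust it based on your dataset's maximum dimensions and the GPU memory available. Even with imgsz set, rect=True lets the model manage varying image sizes effectively by dynamically adjusting the stride.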
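One way to picture what rectangular evaluation saves is to compare the input shape of a wide image under square resizing with a shape that keeps the aspect ratio. The sketch below is a simplified illustration, not the library's internal logic: it assumes the longest side is scaled to `imgsz` and each side is then rounded up to a multiple of a stride of 32. The `rect_shape` helper and the stride value are assumptions.

```python
import math


def rect_shape(height, width, imgsz=640, stride=32):
    """Aspect-preserving input shape, rounded up to a multiple of the stride."""
    # Scale so that the longest side equals imgsz
    scale = imgsz / max(height, width)
    new_h, new_w = round(height * scale), round(width * scale)

    # Round each side up so it divides evenly by the stride
    pad_h = math.ceil(new_h / stride) * stride
    pad_w = math.ceil(new_w / stride) * stride
    return pad_h, pad_w


# A 1080x1920 frame needs far fewer pixels than a 640x640 square would
print(rect_shape(1080, 1920))  # (384, 640)
print(rect_shape(480, 640))    # (480, 640)
```

With Ultralytics, rectangular validation is requested by passing `rect=True` together with `imgsz` to `model.val()`.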

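Accessing YOLO26 Metrics

If you want a deeper understanding of your YOLO26 model's performance, you can access specific evaluation metrics with a few lines of Python code. The code snippet below loads your model, runs an evaluation, and prints various metrics that show how well the model is doing.

Usage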

from ultralytics import YOLO

# Load the model

model = YOLO("yolo26n.pt")

# Run the evaluation

results = model.val(data="coco8.yaml")

# Print specific metrics

print("Class indices with average precision:", results.ap_class_index)

print("Average precision for all classes:", results.box.all_ap)

print("Average precision:", results.box.ap)

print("Average precision at IoU=0.50:", results.box.ap50)

print("Class indices for average precision:", results.box.ap_class_index)

print("Class-specific results:", results.box.class_result)

print("F1 score:", results.box.f1)

print("F1 score curve:", results.box.f1_curve)

print("Overall fitness score:", results.box.fitness)

print("Mean average precision:", results.box.map)

print("Mean average precision at IoU=0.50:", results.box.map50)

print("Mean average precision at IoU=0.75:", results.box.map75)

print("Mean average precision for different IoU thresholds:", results.box.maps)

print("Mean results for different metrics:", results.box.mean_results)

print("Mean precision:", results.box.mp)

print("Mean recall:", results.box.mr)

print("Precision:", results.box.p)

print("Precision curve:", results.box.p_curve)

print("Precision values:", results.box.prec_values)

print("Specific precision metrics:", results.box.px)

print("Recall:", results.box.r)

print("Recall curve:", results.box.r_curve)

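The results object also includes speed metrics such as preprocess time, inference time, loss computation time, and postprocess time. By analyzing these metrics, you can fine-tune and optimize your YOLO26 model for better performance and make it more effective for your specific use case.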

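How Does Fine-Tuning Work?

Fine-tuning takes a pretrained model and adjusts its parameters to improve performance on a specific task or dataset. This process, also known as model retraining, helps the model better understand and predict outcomes for the specific data it will encounter in real-world applications. You can retrain your model based on your model evaluation to achieve the best results.

Tips for Fine-Tuning Your Model

Fine-tuning a model means paying close attention to several important parameters and techniques to achieve optimal performance. Here are some essential tips to guide you through the process.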

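Starting With a Higher Learning Rate

Usually, during the initial training epochs, the learning rate starts low and gradually increases to stabilize the training process. However, since your model has already learned some features from the previous dataset, starting with a higher learning rate right away can be more beneficial.

When training your YOLO26 model, you can set the warmup_epochs training argument to warmup_epochs=0 so that the learning rate does not start too low. Training then continues from the provided weights and adjusts to the nuances of your new data.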
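To make the effect of warmup concrete, the sketch below models a simple per-epoch linear warmup and compares it with no warmup. This is an illustrative schedule only: real trainers typically ramp per iteration and may treat parameter groups differently. The `warmup_lr` function and its default values are hypothetical.

```python
def warmup_lr(epoch, base_lr=0.01, warmup_epochs=3):
    """Learning rate at a given epoch under a simple linear warmup."""
    # With no warmup, or once warmup is over, use the full learning rate
    if warmup_epochs <= 0 or epoch >= warmup_epochs:
        return base_lr
    # During warmup, ramp linearly up to the full learning rate
    return base_lr * (epoch + 1) / warmup_epochs


with_warmup = [warmup_lr(e, warmup_epochs=3) for e in range(5)]
without_warmup = [warmup_lr(e, warmup_epochs=0) for e in range(5)]

print("warmup_epochs=3:", [round(lr, 4) for lr in with_warmup])
print("warmup_epochs=0:", [round(lr, 4) for lr in without_warmup])
```

With Ultralytics, the corresponding change is passing `warmup_epochs=0` to `model.train()` alongside your usual training arguments.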

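Image Tiling for Small Objects

Image tiling can improve detection accuracy for small objects. By dividing larger images into smaller segments, such as splitting 1280x1280 images into multiple 640x640 segments, you keep the original resolution and the model can learn from high-resolution fragments. When using YOLO26, make sure to adjust the labels for these new segments correctly.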
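The label adjustment is the step that is easiest to get wrong, so here is a minimal sketch of it. It assumes boxes are given in pixel `(class_id, x1, y1, x2, y2)` form and non-overlapping tiles; YOLO label files use normalized center coordinates, so a real pipeline would convert before and after. The `tile_image_labels` helper is hypothetical.

```python
def tile_image_labels(img_w, img_h, boxes, tile=640):
    """Map pixel-space boxes onto non-overlapping tiles of size tile x tile.

    Returns a dict keyed by each tile's top-left corner (x, y); the values
    are the boxes clipped to that tile and shifted into tile coordinates.
    """
    tiles = {}
    for ty in range(0, img_h, tile):
        for tx in range(0, img_w, tile):
            tx2, ty2 = min(tx + tile, img_w), min(ty + tile, img_h)
            kept = []
            for cls, x1, y1, x2, y2 in boxes:
                # Clip the box to the tile boundaries
                cx1, cy1 = max(x1, tx), max(y1, ty)
                cx2, cy2 = min(x2, tx2), min(y2, ty2)
                # Keep it only if some area remains inside this tile
                if cx2 > cx1 and cy2 > cy1:
                    kept.append((cls, cx1 - tx, cy1 - ty, cx2 - tx, cy2 - ty))
            tiles[(tx, ty)] = kept
    return tiles


# One box that straddles the boundary between the two top tiles
tiles = tile_image_labels(1280, 1280, [(0, 600, 100, 700, 200)])
for origin, tile_boxes in tiles.items():
    print(origin, tile_boxes)
```

A box that crosses a tile boundary is split into one clipped box per tile. In practice you may prefer overlapping tiles or dropping very small fragments, which this sketch does not do.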

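Engage With the Community

Sharing your ideas and questions with other computer vision enthusiasts can inspire creative solutions to roadblocks in your projects. Here are some good ways to learn, troubleshoot, and connect.

Finding Help and Support

- GitHub Issues: Explore the YOLO26 GitHub repository and use the Issues tab to ask questions, report bugs, and suggest features. The community and maintainers can help with issues you run into.

- Ultralytics Discord Server: Join the Ultralytics Discord server to connect with other users and developers, get support, share knowledge, and brainstorm ideas.

Official Documentation

- Ultralytics YOLO26 Documentation: Check out the official YOLO26 documentation for comprehensive guides and valuable insights on a range of computer vision tasks and projects.

Final Thoughts

Evaluating and fine-tuning your computer vision model are important steps for successful model deployment. They help ensure that your model is accurate, efficient, and suited to your overall application. The key to training the best possible model is continuous experimentation and learning. Don't hesitate to tweak parameters, try new techniques, and explore different datasets. Keep experimenting and pushing the boundaries of what's possible!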

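FAQ

What are the key metrics for evaluating YOLO26 model performance?

To evaluate YOLO26 model performance, the important metrics are the confidence score, Intersection over Union (IoU), and mean average precision (mAP). The confidence score measures the model's certainty for each detected object class. IoU evaluates how well the predicted bounding box overlaps with the ground truth. Mean average precision (mAP) aggregates precision scores across classes, with mAP@.5 and mAP@.5:.95 being two common variants that use different IoU thresholds. Learn more about these metrics in our YOLO26 performance metrics guide.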

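How can I fine-tune a pretrained YOLO26 model for my specific dataset?

Fine-tuning a pretrained YOLO26 model means adjusting its parameters to improve performance on a specific task or dataset. Start by evaluating your model with metrics, then begin training at a higher learning rate by setting the warmup_epochs parameter to 0. Parameters such as rect=True help handle varied image sizes effectively. For more detailed guidance, see our section on fine-tuning YOLO26 models.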

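How can I handle variable image sizes when evaluating my YOLO26 model?

To handle variable image sizes during evaluation, use the rect=True parameter in YOLO26, which adjusts the network's stride for each batch based on image sizes. The imgsz parameter sets the maximum dimension for image resizing and defaults to 640. Adjust imgsz to suit your dataset and GPU memory. For more details, see our section on handling variable image sizes.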

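What practical steps can I take to improve mean average precision for my YOLO26 model?

Improving the mean average precision (mAP) of a YOLO26 model involves several steps:

- Tuning hyperparameters: Experiment with different learning rates, batch sizes, and image augmentations.

- Data augmentation: Use techniques such as Mosaic and MixUp to create diverse training samples.

- Image tiling: Split larger images into smaller tiles to improve detection accuracy for small objects. For specific strategies, see our detailed guide on model fine-tuning.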

How do I access YOLO26 model evaluation metrics in Python?

You can access YOLO26 model evaluation metrics in Python with the following steps:

Usage

from ultralytics import YOLO

# Load the model

model = YOLO("yolo26n.pt")

# Run the evaluation

results = model.val(data="coco8.yaml")

# Print specific metrics

print("Class indices with average precision:", results.ap_class_index)

print("Average precision for all classes:", results.box.all_ap)

print("Mean average precision at IoU=0.50:", results.box.map50)

print("Mean recall:", results.box.mr)

Analyzing these metrics helps you fine-tune and optimize your YOLO26 model. For a deeper dive, see our guide on YOLO26 metrics.