Machine Learning Best Practices and Tips for Model Training

Introduction

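Model training is one of the most important steps in a computer vision project. Before you reach it, you need to define your goals and collect and annotate your data. Once the data has been preprocessed so that it is clean and consistent, you can move on to training your model.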

Watch: Model Training Tips | How to Handle Large Datasets | Batch Size, GPU Utilization and Mixed Precision

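So, what is model training? Model training is the process of teaching your model to recognize visual patterns and make predictions based on your data. It directly affects the performance and accuracy of your application. This guide covers best practices, optimization techniques, and troubleshooting tips to help you train computer vision models effectively.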

How to Train a Machine Learning Model

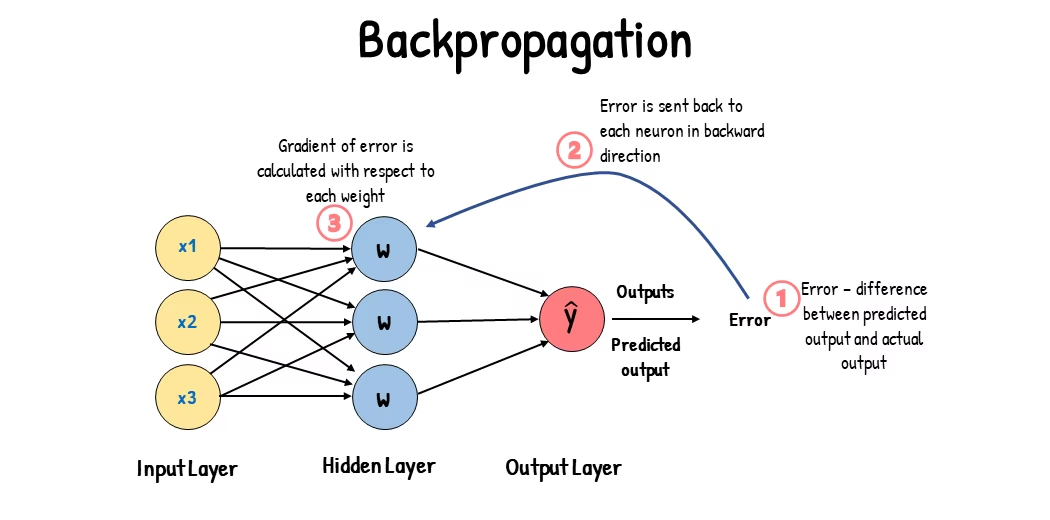

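A computer vision model is trained by adjusting its internal parameters to minimize errors. First, the model is given a large set of labeled images. It makes predictions about what is in these images, and those predictions are compared with the actual labels or contents to compute errors. These errors show how far the model's predictions are from the true values.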

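During training, the model repeatedly makes predictions and computes errors. Through a process called backpropagation, it determines how each internal parameter (weights and biases) contributed to the error, and then updates those parameters to reduce it. By repeating this cycle many times, the model gradually becomes more accurate. Over time, it learns to recognize complex patterns such as shapes, colors, and textures.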

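This learning process enables computer vision models to perform a variety of tasks, including object detection, instance segmentation, and image classification. The ultimate goal is a model that generalizes what it has learned to new, unseen images, so that it can accurately interpret visual data in real-world applications.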

Now that we know what happens during training, let's look at what to consider when training a model.

Training on Large Datasets

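When you plan to train a model on a large dataset, there are several aspects to consider. For example, you can adjust the batch size, control GPU utilization, and choose to use multi-scale training. Let's walk through each of these options in detail.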

Batch Size and GPU Utilization

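When training models on large datasets, using your GPU efficiently is key. Batch size is an important factor: it is the number of data samples a machine learning model processes in a single training iteration. Using the largest batch size your GPU supports lets you take full advantage of its capabilities and shortens training time. However, you must avoid running out of GPU memory. If you encounter memory errors, reduce the batch size incrementally until the model trains smoothly.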
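
The back-off rule above can be sketched in plain Python. This is a minimal illustration, not Ultralytics code: `try_train_step` is a hypothetical stand-in for one forward/backward pass, the GPU is simulated by raising Python's built-in `MemoryError` above an assumed capacity, and halving is just one possible way to reduce the batch size "gradually".

```python
def find_working_batch_size(try_train_step, start=128):
    """Halve the batch size until one training step succeeds (sketch)."""
    batch = start
    while batch >= 1:
        try:
            try_train_step(batch)  # hypothetical: runs one step at this batch size
            return batch
        except MemoryError:  # stand-in for a framework's out-of-memory error
            batch //= 2
    raise RuntimeError("Even a batch size of 1 does not fit in memory")


def make_simulated_step(max_batch_that_fits):
    """Build a fake training step that 'runs out of memory' above a threshold."""
    def step(batch):
        if batch > max_batch_that_fits:
            raise MemoryError(f"batch={batch} exceeds simulated GPU memory")
    return step


if __name__ == "__main__":
    step = make_simulated_step(max_batch_that_fits=24)
    print(find_working_batch_size(step, start=128))  # tries 128, 64, 32, then 16
```

With a real framework you would catch its specific out-of-memory exception instead of `MemoryError`.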

Watch: How to Use Batch Inference with Ultralytics YOLO26 | Speed Up Object Detection in Python 🎉

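With YOLO26, you can set the `batch` parameter in the training configuration to match your GPU's capacity. Alternatively, setting `batch=-1` in your training script automatically determines a batch size your device can process efficiently. By fine-tuning the batch size, you can make the most of your GPU resources and improve the overall training process.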
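
One way an automatic batch size can be chosen is to measure memory use at a few small batch sizes, fit a straight line, and solve for the batch size that uses a target share of GPU memory. The sketch below does that with made-up measurements. The linear memory model, the 60% target (`fraction=0.6`), and the helper `estimate_batch_size` are assumptions for illustration; Ultralytics' own logic behind `batch=-1` may differ in detail.

```python
def estimate_batch_size(batch_sizes, memory_gb, total_gb, fraction=0.6):
    """Fit memory = slope * batch + intercept and solve for the target memory use."""
    n = len(batch_sizes)
    mean_b = sum(batch_sizes) / n
    mean_m = sum(memory_gb) / n
    cov = sum((b - mean_b) * (m - mean_m) for b, m in zip(batch_sizes, memory_gb))
    var = sum((b - mean_b) ** 2 for b in batch_sizes)
    slope = cov / var  # extra GB used per additional image in the batch
    intercept = mean_m - slope * mean_b  # fixed cost such as weights and framework overhead
    target_gb = fraction * total_gb
    return max(1, int((target_gb - intercept) / slope))


if __name__ == "__main__":
    # Hypothetical profiling results: 0.5 GB fixed cost + 0.25 GB per image.
    sizes = [1, 2, 4, 8, 16]
    used = [0.75, 1.0, 1.5, 2.5, 4.5]
    print(estimate_batch_size(sizes, used, total_gb=16))  # 60% of 16 GB -> batch 36
```

Targeting a fraction of memory rather than 100% leaves headroom for allocations that vary from batch to batch.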

Subset Training

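Subset training is a smart strategy in which you train your model on a smaller dataset that represents the larger one. It can save time and resources, especially during initial model development and testing. If you are short on time or experimenting with different model configurations, subset training is a good option.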

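With YOLO26, you can easily implement subset training using the `fraction` parameter, which specifies what fraction of your dataset to use for training. For example, setting `fraction=0.1` trains your model on 10% of the data. You can use this technique for quick iterations and model tuning before committing to training on the full dataset. Subset training helps you make rapid progress and identify potential issues early.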
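
The idea behind `fraction` can be sketched as picking a reproducible subset of the image list. This is a minimal sketch under stated assumptions: `take_fraction` and the file names are hypothetical, and seeded random sampling is our own choice for keeping the subset representative; the library may select its subset differently.

```python
import random


def take_fraction(items, fraction, seed=0):
    """Return a reproducible random subset containing `fraction` of `items`."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    k = max(1, round(len(items) * fraction))
    rng = random.Random(seed)  # fixed seed -> same subset on every run
    return rng.sample(items, k)


if __name__ == "__main__":
    image_paths = [f"images/{i:05d}.jpg" for i in range(1000)]  # hypothetical file names
    subset = take_fraction(image_paths, 0.1)
    print(len(subset))  # 100
```

Fixing the seed keeps quick experiments comparable, because every run sees the same 10% of the data.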

Multi-scale Training

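Multi-scale training is a technique that improves your model's ability to generalize by training it on images of varying sizes. The model learns to detect objects at different scales and distances and becomes more robust.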

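For example, when training YOLO26, you can enable multi-scale training by setting the `scale` parameter. This parameter resizes training images by a random factor to simulate objects at different distances. For instance, setting `scale=0.5` zooms each training image by a random factor between 0.5 and 1.5 during training. Configuring this parameter exposes the model to a variety of image scales and improves its detection across different object sizes and scenarios.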
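
The sampling behind `scale` can be sketched as drawing a gain uniformly from `[1 - scale, 1 + scale]`. This is a simplified sketch: `random_zoom` is a hypothetical helper, and the step that pads or crops the zoomed image back to the training size is omitted.

```python
import random


def random_zoom(width, height, scale, rng):
    """Sample a zoom gain in [1 - scale, 1 + scale] and return the resized shape."""
    gain = rng.uniform(1 - scale, 1 + scale)
    return gain, (max(1, round(width * gain)), max(1, round(height * gain)))


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        gain, size = random_zoom(640, 640, scale=0.5, rng=rng)
        print(f"gain={gain:.2f} -> resized to {size}")
```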

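Ultralytics also supports image-size multi-scale training through the `multi_scale` parameter. Unlike `scale`, which zooms images and then pads/crops them back to `imgsz`, `multi_scale` changes `imgsz` itself for each batch (rounded to the model stride). For example, with `imgsz=640` and `multi_scale=0.25`, the training size is sampled in stride steps from 480 to 800 (e.g., 480, 512, 544, ..., 800). Setting `multi_scale=0.0` keeps a fixed size.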
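
The candidate sizes described for `multi_scale` can be reproduced with a few lines of arithmetic. This sketch follows our reading of the description above; `multi_scale_sizes` is a hypothetical helper, and the stride of 32 is an assumed value for the model stride.

```python
import math
import random


def multi_scale_sizes(imgsz, multi_scale, stride=32):
    """List the stride-aligned sizes between imgsz*(1 - m) and imgsz*(1 + m)."""
    if multi_scale <= 0:
        return [imgsz]  # multi_scale=0.0 keeps a fixed size
    low = math.ceil(imgsz * (1 - multi_scale) / stride) * stride
    high = math.floor(imgsz * (1 + multi_scale) / stride) * stride
    return list(range(low, high + 1, stride))


if __name__ == "__main__":
    sizes = multi_scale_sizes(640, 0.25)
    print(sizes)  # [480, 512, 544, 576, 608, 640, 672, 704, 736, 768, 800]
    rng = random.Random(0)
    print([rng.choice(sizes) for _ in range(4)])  # one size drawn per batch
```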

Caching

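Caching is an important technique for making machine learning training more efficient. By keeping preprocessed images in memory, caching reduces the time the GPU spends waiting for data to be loaded from disk, so the model receives data continuously without delays caused by disk I/O.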

When training YOLO26, you can control caching with the `cache` parameter:

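- `cache=True`: Stores dataset images in RAM, giving the fastest access at the cost of higher memory usage.
- `cache='disk'`: Stores the images on disk; slower than RAM but faster than loading fresh data every time.
- `cache=False`: Disables caching and relies entirely on disk I/O, which is the slowest option.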
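
The difference between the three options comes down to how often the expensive read-and-decode step runs. The hypothetical `ImageCache` class below is a minimal sketch of that behavior, not the Ultralytics implementation; storing the disk cache as pickle files is an arbitrary choice for the sketch.

```python
import hashlib
import pickle
import tempfile
from pathlib import Path


class ImageCache:
    """Sketch of cache=True (RAM), cache='disk', and cache=False behavior."""

    def __init__(self, load_fn, mode=False, cache_dir=None):
        self.load_fn = load_fn  # expensive: read from disk + decode + preprocess
        self.mode = mode
        self.ram = {}
        self.cache_dir = Path(cache_dir or tempfile.mkdtemp())

    def get(self, path):
        if self.mode is True:  # RAM: decode once, then serve from memory
            if path not in self.ram:
                self.ram[path] = self.load_fn(path)
            return self.ram[path]
        if self.mode == "disk":  # disk: save the decoded result once, reload it later
            cached = self.cache_dir / (hashlib.md5(path.encode()).hexdigest() + ".pkl")
            if cached.exists():
                return pickle.loads(cached.read_bytes())
            image = self.load_fn(path)
            cached.write_bytes(pickle.dumps(image))
            return image
        return self.load_fn(path)  # no cache: decode on every access


if __name__ == "__main__":
    calls = {"n": 0}

    def slow_load(path):
        calls["n"] += 1
        return [[0] * 4 for _ in range(4)]  # stand-in for a decoded image

    paths = [f"img_{i}.jpg" for i in range(4)]  # hypothetical file names
    for mode in (True, "disk", False):
        calls["n"] = 0
        cache = ImageCache(slow_load, mode=mode)
        for _epoch in range(3):
            for p in paths:
                cache.get(p)
        print(mode, "decodes:", calls["n"])  # True: 4, disk: 4, False: 12
```

Over three epochs, both caching modes decode each image once, while the uncached loader decodes it on every pass.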

Mixed Precision Training

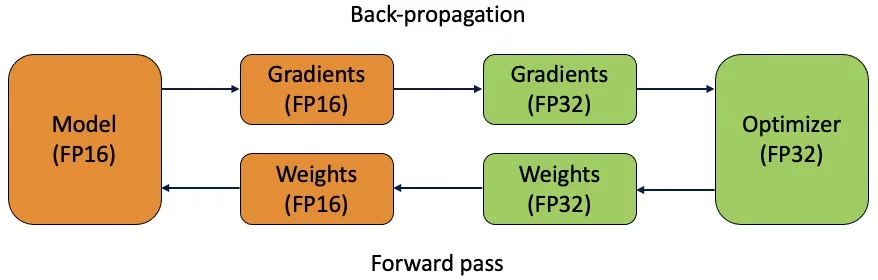

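Mixed precision training uses both 16-bit (FP16) and 32-bit (FP32) floating-point formats. It combines their strengths, using FP16 for faster computation and FP32 to preserve precision where needed. Most neural network operations run in FP16 to benefit from faster computation and lower memory usage. However, a master copy of the model's weights is kept in FP32 to keep the weight updates accurate. As a result, you can train larger models or use larger batch sizes within the same hardware constraints.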
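
Why the FP32 master copy matters can be shown with a small numeric experiment. The sketch below uses Python's `struct` half-precision (`"e"`) and single-precision (`"f"`) formats to round values, and applies a hypothetical small update (learning rate times gradient) 100 times. Real AMP implementations add further safeguards such as loss scaling, which this sketch leaves out.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x):
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


weight = 1.0
update = 1e-4  # hypothetical small learning-rate * gradient step
w16, w32 = to_fp16(weight), to_fp32(weight)
for _ in range(100):
    w16 = to_fp16(w16 + to_fp16(update))  # weight kept only in FP16
    w32 = to_fp32(w32 + to_fp32(update))  # FP32 master copy
print(w16)  # 1.0: each update is below FP16 resolution near 1.0 and is rounded away
print(w32)  # close to 1.01
```

Near 1.0, FP16 values are spaced about 0.001 apart, so an update of 0.0001 disappears entirely, while FP32 accumulates it.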

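To implement mixed precision training, you need to modify your training scripts and make sure your hardware (such as your GPU) supports it. Many modern deep learning frameworks, such as PyTorch and TensorFlow, provide built-in support for mixed precision.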

Mixed precision training is straightforward with YOLO26: use the `amp` flag in your training configuration. Setting `amp=True` enables Automatic Mixed Precision (AMP) training. Mixed precision training is a simple yet effective way to optimize your model training process.

Pretrained Weights

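Using pretrained weights is a smart way to speed up model training. Pretrained weights come from models that have already been trained on large datasets, which gives your model a head start. Transfer learning adapts pretrained models to new, related tasks. Fine-tuning a pretrained model means starting from these weights and continuing training on your specific dataset. This approach leads to faster training and often better performance, because the model starts with a solid understanding of basic features.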

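The `pretrained` parameter makes transfer learning easy with YOLO26. Setting `pretrained=True` uses the default pretrained weights, or you can specify the path to a custom pretrained model. Using pretrained weights and transfer learning effectively boosts your model's capabilities and reduces training costs.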
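
When a pretrained model is reused for a new task, some layers (typically the classification head, whose size depends on the number of classes) no longer match. A common approach is to copy only the parameters whose name and shape match. The helper below is a hypothetical sketch working on plain dictionaries that map a parameter name to `(shape, values)`; it is not the Ultralytics loading code.

```python
def transfer_matching_weights(pretrained, model):
    """Copy pretrained entries whose name and shape match the new model (sketch).

    Layers whose shape changed, such as a classification head resized for a new
    number of classes, keep the new model's own initialization.
    """
    merged, transferred = {}, []
    for name, (shape, values) in model.items():
        source = pretrained.get(name)
        if source is not None and source[0] == shape:
            merged[name] = source
            transferred.append(name)
        else:
            merged[name] = (shape, values)
    return merged, transferred


if __name__ == "__main__":
    # Hypothetical layer names and shapes: an 80-class pretrained head vs. a 3-class task.
    pretrained = {
        "backbone.conv1.weight": ((16, 3, 3, 3), "pretrained"),
        "head.cls.weight": ((80, 256), "pretrained"),
    }
    new_model = {
        "backbone.conv1.weight": ((16, 3, 3, 3), "random init"),
        "head.cls.weight": ((3, 256), "random init"),
    }
    merged, transferred = transfer_matching_weights(pretrained, new_model)
    print(f"transferred {len(transferred)}/{len(new_model)} items: {transferred}")
```

The backbone starts from learned features, while the resized head is trained from scratch on the new classes.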

Other Techniques to Consider When Handling Large Datasets

There are a couple of other techniques to consider when handling large datasets:

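- Learning Rate Schedulers: A learning rate scheduler adjusts the learning rate dynamically during training. A well-tuned learning rate keeps the model from overshooting minima and improves stability. When training YOLO26, the `lrf` parameter helps manage learning rate scheduling by setting the final learning rate as a fraction of the initial learning rate.

- Distributed Training: For large datasets, distributed training can be a game-changer. Spreading the training workload across multiple GPUs or machines reduces training time. This approach is especially valuable for enterprise-scale projects with substantial computing resources.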
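
As a concrete reading of "final learning rate as a fraction of the initial learning rate", the sketch below decays the learning rate linearly from `lr0` to `lr0 * lrf`. The values `lr0=0.01` and `lrf=0.01` are example numbers, and linear decay is only one possible schedule (cosine decay is another common choice).

```python
def linear_lr(epoch, epochs, lr0=0.01, lrf=0.01):
    """Learning rate that decays linearly from lr0 to lr0 * lrf over `epochs`."""
    progress = min(epoch / epochs, 1.0)  # clamp so later epochs stay at the final rate
    return lr0 * ((1.0 - progress) * (1.0 - lrf) + lrf)


if __name__ == "__main__":
    for epoch in (0, 50, 100):
        print(epoch, linear_lr(epoch, epochs=100))
```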

The Number of Epochs to Train For

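When training a model, an epoch is one complete pass through the entire training dataset. During an epoch, the model processes each example in the training set once and updates its parameters according to the learning algorithm (typically once per batch). Multiple epochs are usually needed for the model to learn and refine its parameters over time.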

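A common question is how to decide how many epochs to train for. A good starting point is 300 epochs. If the model overfits early, you can reduce the number of epochs. If no overfitting occurs after 300 epochs, you can extend training to 600, 1200, or more epochs.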

However, the ideal number of epochs depends on your dataset size and project goals. Larger datasets may need more epochs for the model to learn effectively, while smaller datasets may need fewer epochs to avoid overfitting. With YOLO26, you set this with the `epochs` parameter in your training script.
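
To see what an epoch count means in terms of parameter updates, the arithmetic below uses hypothetical numbers (10,000 training images, batch size 16). The same number of epochs therefore means more updates on a larger dataset.

```python
import math


def iterations_per_epoch(num_images, batch_size, drop_last=False):
    """Number of parameter updates in one pass over the training set."""
    if drop_last:  # the final incomplete batch is skipped
        return num_images // batch_size
    return math.ceil(num_images / batch_size)


if __name__ == "__main__":
    steps = iterations_per_epoch(10_000, batch_size=16)
    print(steps)  # 625 updates per epoch
    print(steps * 300)  # 187500 updates for a 300-epoch run
```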

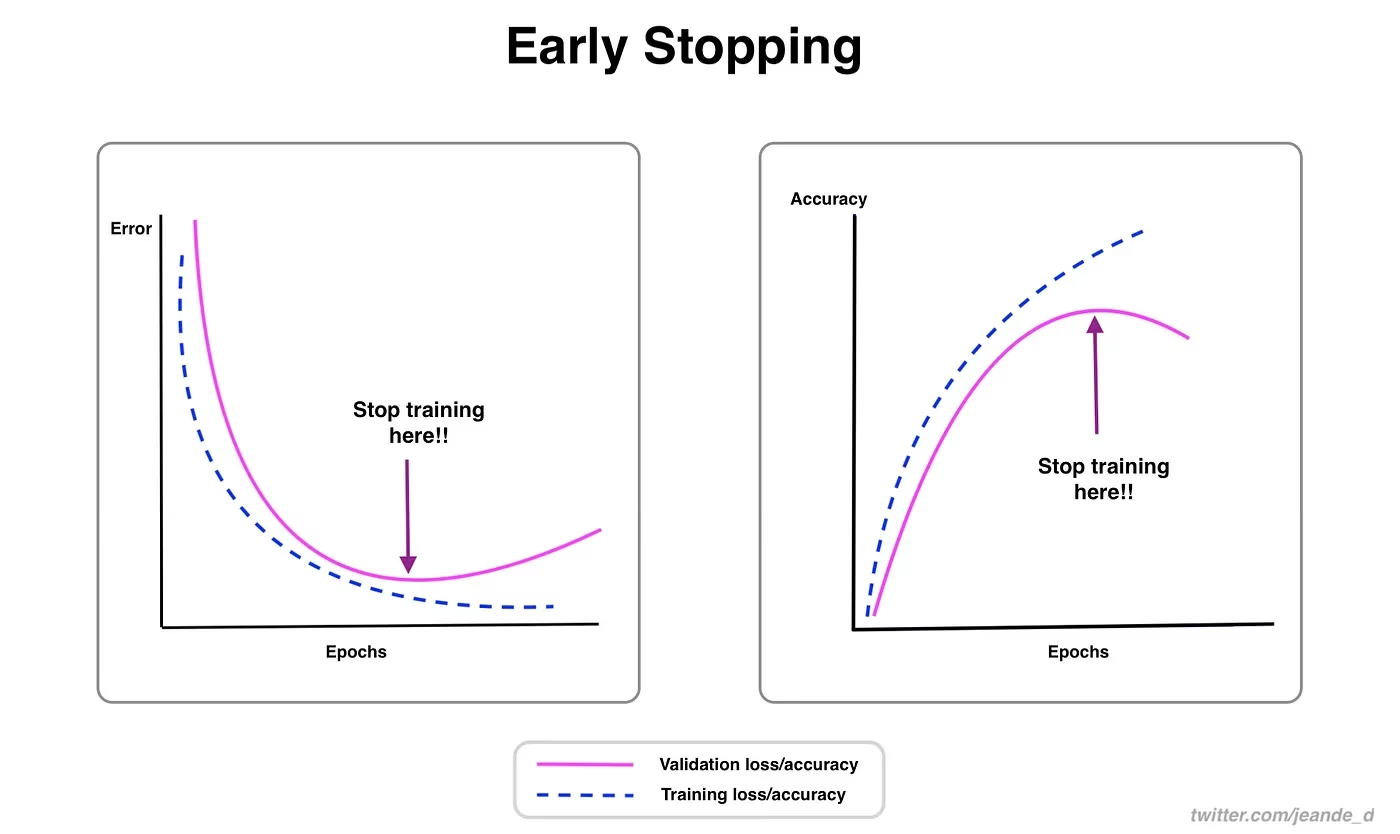

Early Stopping

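Early stopping is a valuable technique for optimizing model training. By monitoring validation performance, you can halt training once the model stops improving. This saves computing resources and helps prevent overfitting.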

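The process involves setting a patience parameter, which determines how many epochs to wait for an improvement in the validation metrics before stopping training. If the model's performance does not improve within that many epochs, training stops to avoid wasting time and resources.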

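For YOLO26, you can enable early stopping by setting the `patience` parameter in your training configuration. For example, `patience=5` means training stops if the validation metrics do not improve for 5 consecutive epochs. This keeps the training process efficient and reaches the best observed performance without excessive computation.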
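
The patience rule can be sketched as tracking the best validation score and the epoch it occurred in. This is a minimal sketch with hypothetical names (`EarlyStopping`, `run_training`) and made-up metric values; here a tie with the best score does not count as an improvement, which is a design choice of the sketch.

```python
class EarlyStopping:
    """Stop when the validation metric has not improved for `patience` epochs."""

    def __init__(self, patience=5):
        self.patience = patience
        self.best_fitness = None
        self.best_epoch = 0

    def step(self, epoch, fitness):
        """Record this epoch's validation metric; return True if training should stop."""
        if self.best_fitness is None or fitness > self.best_fitness:  # ties are not improvements
            self.best_fitness = fitness
            self.best_epoch = epoch
        return epoch - self.best_epoch >= self.patience


def run_training(val_metrics, patience=5):
    """Simulate a training loop; return the epoch at which training ends."""
    stopper = EarlyStopping(patience)
    for epoch, fitness in enumerate(val_metrics):
        if stopper.step(epoch, fitness):
            return epoch
    return len(val_metrics) - 1


if __name__ == "__main__":
    # Hypothetical validation mAP per epoch: it peaks at epoch 2 and then stalls.
    metrics = [0.10, 0.20, 0.30, 0.30, 0.29, 0.30, 0.28, 0.25, 0.31]
    print(run_training(metrics, patience=5))  # 7: five epochs without improvement after epoch 2
```

Note that the late improvement at epoch 8 is never seen: a patience value that is too small can stop a run that would still have improved.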

Choosing Between Cloud and Local Training

There are two options for training your model: cloud training and local training.

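Cloud training offers scalability and powerful hardware, making it ideal for large datasets and complex models. Platforms such as Google Cloud, AWS, and Azure provide on-demand access to high-performance GPUs and TPUs, which shortens training time and lets you experiment with larger models. However, cloud training can be expensive, especially over long periods, and data transfer can add cost and latency.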

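Local training gives you more control and customization: you can tailor your environment to your specific needs and avoid ongoing cloud costs. It can be more economical for long-term projects, and because your data stays on-premises, it is more secure. However, local hardware may have limited resources and requires maintenance, which can lead to longer training times for large models.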

Choosing an Optimizer

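An optimizer is an algorithm that adjusts a neural network's weights to minimize the loss function, which measures how well the model is performing. Put simply, the optimizer helps the model learn by tweaking its parameters to reduce errors. Choosing the right optimizer directly affects how quickly and how accurately the model learns.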

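You can also fine-tune optimizer parameters to improve model performance. The learning rate sets the size of the steps taken when updating parameters. For stability, you might start with a moderate learning rate and gradually decrease it over time to improve long-term learning. Momentum, in turn, determines how much past updates influence the current update. A common value for momentum is around 0.9, which generally offers a good balance.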
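
The roles of learning rate and momentum can be seen in a toy one-dimensional example. The sketch below minimizes the hypothetical loss `(w - 3)^2` with one common formulation of SGD with momentum (a velocity that accumulates past gradients); the values `lr=0.1` and `momentum=0.9` are illustrative.

```python
def sgd_momentum(grad_fn, w0, lr=0.1, momentum=0.9, steps=300):
    """Minimize a 1-D function with SGD + momentum (velocity formulation)."""
    w, velocity = w0, 0.0
    for _ in range(steps):
        g = grad_fn(w)
        velocity = momentum * velocity + g  # blend of past updates and the new gradient
        w -= lr * velocity  # the learning rate sets the step size
    return w


def toy_grad(w):
    return 2.0 * (w - 3.0)  # gradient of the toy loss (w - 3)^2


if __name__ == "__main__":
    print(sgd_momentum(toy_grad, w0=0.0))  # converges close to 3.0
```

Setting `momentum=0.0` turns this back into plain gradient descent, which makes it easy to compare the two on the same toy loss.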

Common Optimizers

Different optimizers have different strengths and weaknesses. Let's take a brief look at a few common ones.

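SGD (Stochastic Gradient Descent):

- Updates model parameters using the gradient of the loss function with respect to the parameters.

- Simple and efficient, but can be slow to converge and might get stuck in local minima.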

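Adam (Adaptive Moment Estimation):

- Combines the benefits of SGD with momentum and RMSProp.

- Adjusts the learning rate for each parameter based on estimates of the first and second moments of the gradients.

- Well suited to noisy data and sparse gradients.

- Efficient and generally needs less tuning, which makes it a recommended optimizer for YOLO26.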

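RMSProp (Root Mean Square Propagation):

- Adjusts the learning rate for each parameter by dividing the gradient by the square root of a running average of recent squared gradients.

- Helps with the vanishing gradient problem and is effective for recurrent neural networks.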
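
The update rules described above can be written out for a single parameter. The sketch below implements toy one-dimensional versions of Adam and RMSProp as they are usually stated and runs them on the hypothetical loss `(w - 3)^2`; the hyperparameter values are illustrative, not YOLO26 settings.

```python
import math


def adam(grad_fn, w0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """1-D Adam: step size from bias-corrected first and second moment estimates."""
    w, m, v = w0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad_fn(w)
        m = beta1 * m + (1 - beta1) * g  # first moment (momentum-like mean)
        v = beta2 * v + (1 - beta2) * g * g  # second moment (mean squared gradient)
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


def rmsprop(grad_fn, w0, lr=0.01, alpha=0.99, eps=1e-8, steps=3000):
    """1-D RMSProp: divide the gradient by a running RMS of recent gradients."""
    w, sq_avg = w0, 0.0
    for _ in range(steps):
        g = grad_fn(w)
        sq_avg = alpha * sq_avg + (1 - alpha) * g * g  # running mean of squared gradients
        w -= lr * g / (math.sqrt(sq_avg) + eps)
    return w


def toy_grad(w):
    return 2.0 * (w - 3.0)  # gradient of the toy loss (w - 3)^2


if __name__ == "__main__":
    print("Adam:   ", adam(toy_grad, 0.0))
    print("RMSProp:", rmsprop(toy_grad, 0.0))
```

Because both methods divide by a gradient magnitude estimate, the early steps have roughly the size of the learning rate regardless of how large the raw gradient is.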

For YOLO26, the `optimizer` parameter lets you choose from a variety of optimizers, including SGD, Adam, AdamW, NAdam, RAdam, and RMSProp, or you can set it to `auto` for automatic selection based on the model configuration.

Connecting with the Community

Being part of a community of computer vision enthusiasts can help you solve problems and learn faster. Here are some ways to connect, get help, and share ideas.

Community Resources

- GitHub Issues: Visit the YOLO26 GitHub repository and use the Issues tab to ask questions, report bugs, and suggest new features. The community and maintainers are very active and ready to help.

- Ultralytics Discord Server: Join the Ultralytics Discord server to chat with other users and developers, get support, and share your experiences.

Official Documentation

- Ultralytics YOLO26 Documentation: Check out the official YOLO26 documentation for detailed guides and helpful tips on various computer vision projects.

These resources will help you overcome challenges and stay up to date with the latest trends and practices in the computer vision community.

Key Takeaways

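Training computer vision models involves following best practices, optimizing your strategies, and solving problems as they arise. Techniques such as tuning the batch size, mixed precision training, and starting from pretrained weights can improve your models' performance and speed up training. Methods such as subset training and early stopping help you save time and resources. Staying connected with the community and keeping up with new trends will help you keep improving your model training skills.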

FAQ

How can I improve GPU utilization when training on a large dataset with Ultralytics YOLO?

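To improve GPU utilization, set the `batch` parameter in your training configuration to the largest size your GPU supports. This makes full use of the GPU's capabilities and reduces training time. If you encounter memory errors, reduce the batch size incrementally until training runs smoothly. For YOLO26, setting `batch=-1` in your training script automatically determines an optimal batch size for efficient processing. For more details, refer to the training configuration.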

What is mixed precision training, and how do I enable it in YOLO26?

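Mixed precision training uses both 16-bit (FP16) and 32-bit (FP32) floating-point formats to balance computational speed and precision. This approach speeds up training and reduces memory usage without sacrificing model accuracy. To enable mixed precision training in YOLO26, set the `amp` parameter to `True` in your training configuration. This activates Automatic Mixed Precision (AMP) training. For more details on this optimization technique, see the training configuration.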

How does multi-scale training improve YOLO26 model performance?

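Multi-scale training improves model performance by training on images of varying sizes, which helps the model generalize across different scales and distances. In YOLO26, you can enable multi-scale training by setting the `scale` parameter in the training configuration. For example, `scale=0.5` samples a zoom factor between 0.5 and 1.5 and then pads/crops the image back to `imgsz`. This simulates objects at different distances and makes the model more robust across a variety of scenarios. For settings and more details, see the training configuration.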

How can I use pretrained weights to speed up training in YOLO26?

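Pretrained weights can greatly accelerate training and improve model accuracy, because they start from a model that is already familiar with fundamental visual features. In YOLO26, simply set the `pretrained` parameter to `True` or provide the path to your custom pretrained weights in your training configuration. This approach, known as transfer learning, lets you effectively adapt models trained on large datasets to your specific application. Learn more about how to use pretrained weights and their benefits in the training configuration guide.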

What is the recommended number of epochs for training a model, and how do I set it in YOLO26?

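The number of epochs is the number of complete passes through the training dataset during model training. A typical starting point is 300 epochs. If your model overfits early, you can reduce the number. If no overfitting is observed, you can extend training to 600, 1200, or more epochs. To set this in YOLO26, use the `epochs` parameter in your training script. For more advice on choosing the ideal number of epochs, see the section on the number of epochs to train for.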