SKU-110k Dataset

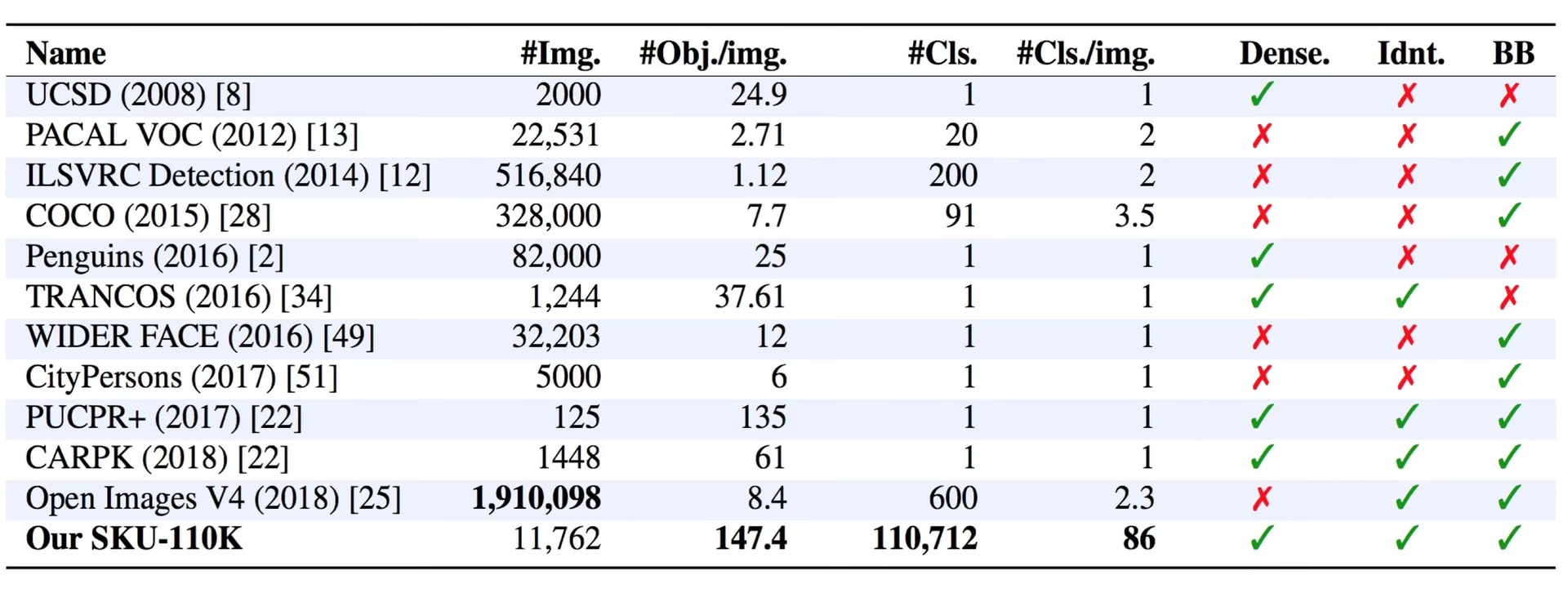

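The SKU-110k dataset is a collection of densely packed retail shelf images designed to support research in object detection. Developed by Eran Goldman et al., it contains more than 110,000 unique SKU (Stock Keeping Unit) categories. The objects are densely packed, often look similar or even identical, and sit very close to one another.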

Watch: How to Train YOLOv10 on the SKU-110k Dataset using Ultralytics | Retail Dataset

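Key Features

- SKU-110k contains images of store shelves from around the world. The densely packed objects in these images challenge state-of-the-art object detectors.

- The dataset spans more than 110,000 unique SKU categories, so object appearance varies widely.

- Annotations provide a bounding box for each object. In the Ultralytics SKU-110K.yaml configuration, every box belongs to a single class, object.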

Dataset Structure

The SKU-110k dataset is organized into three main subsets:

- Training set: 8,219 images with annotations, used to train object detection models.

- Validation set: 588 images with annotations, used to validate the model during training.

- Test set: 2,936 images reserved for the final evaluation of trained object detection models.
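After downloading, you can sanity-check the split sizes by counting the entries in the split files. The sketch below assumes the layout produced by the download script in SKU-110K.yaml: train.txt, val.txt, and test.txt in the dataset root, with one image path per line. The helpers `count_entries`, `count_split_images`, and `split_fractions` are hypothetical and not part of the Ultralytics API. The root `datasets/SKU-110K` is also an assumed location.

```python
from pathlib import Path


def count_entries(text: str) -> int:
    """Count non-blank lines; each one is an image path such as './images/<file>'."""
    return sum(1 for line in text.splitlines() if line.strip())


def count_split_images(root: Path) -> dict[str, int]:
    """Count image entries in train.txt, val.txt and test.txt under a dataset root (hypothetical helper)."""
    return {
        split: count_entries((root / f"{split}.txt").read_text(encoding="utf-8"))
        for split in ("train", "val", "test")
    }


def split_fractions(counts: dict[str, int]) -> dict[str, float]:
    """Return each split's share of the total number of images."""
    total = sum(counts.values())
    return {split: n / total for split, n in counts.items()}


if __name__ == "__main__":
    # Assumed download location; adjust it if your datasets directory differs.
    root = Path("datasets/SKU-110K")
    if root.exists():
        print(count_split_images(root))
    # Shares implied by the documented counts
    print(split_fractions({"train": 8219, "val": 588, "test": 2936}))
```

The documented counts add up to 11,743 images, which gives a split of roughly 70% / 5% / 25%.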

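Applications

The SKU-110k dataset is widely used to train and evaluate deep learning models for object detection, particularly in densely packed scenes such as retail shelf displays. Applications include:

- Retail inventory management and automation

- Product recognition on e-commerce platforms

- Planogram compliance verification

- Self-checkout systems in stores

- Robotic picking and sorting in warehouses

Its varied SKU categories and dense object arrangements make the dataset a valuable resource for computer vision researchers and practitioners.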

Dataset YAML

A YAML (YAML Ain't Markup Language) file defines the dataset configuration. It specifies the dataset paths, the classes, and other relevant information. For the SKU-110K dataset, the SKU-110K.yaml file is maintained at https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/SKU-110K.yaml.
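In this configuration, train, val, and test point to text files located relative to path. The download script fills each file with ./images/<file> lines and writes the labels to a parallel labels directory.

The sketch below shows how an image entry maps to its label file. It assumes the usual YOLO convention: a leading ./ resolves against the split file's directory, and the label sits at the same location with images replaced by labels and the suffix changed to .txt. The functions `resolve_image_path` and `image_to_label_path` are hypothetical illustrations, not the library's loader.

```python
from pathlib import PurePosixPath


def resolve_image_path(split_file: str, entry: str) -> str:
    """Resolve one split-file line (e.g. './images/x.jpg') against the split file's directory."""
    parent = str(PurePosixPath(split_file).parent)
    # Entries without a leading './' are returned unchanged
    return f"{parent}/{entry[2:]}" if entry.startswith("./") else entry


def image_to_label_path(image_path: str) -> str:
    """Map '.../images/<name>.<ext>' to '.../labels/<name>.txt' (assumed YOLO convention)."""
    head, sep, tail = image_path.rpartition("/images/")
    if not sep:
        raise ValueError(f"no '/images/' component in {image_path!r}")
    stem = tail.rsplit(".", 1)[0]  # drop the image extension
    return f"{head}/labels/{stem}.txt"


if __name__ == "__main__":
    img = resolve_image_path("datasets/SKU-110K/train.txt", "./images/example.jpg")
    print(img, "->", image_to_label_path(img))
```

This mapping is why the script creates a labels directory next to images rather than storing labels inside the CSV-derived split files.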

ultralytics/cfg/datasets/SKU-110K.yaml

```yaml
# Ultralytics 🚀 AGPL-3.0 License - https://ultralytics.com/license

# SKU-110K retail items dataset https://github.com/eg4000/SKU110K_CVPR19 by Trax Retail
# Documentation: https://docs.ultralytics.com/datasets/detect/sku-110k/
# Example usage: yolo train data=SKU-110K.yaml
# parent
# ├── ultralytics
# └── datasets
#     └── SKU-110K ← downloads here (13.6 GB)

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]
path: SKU-110K # dataset root dir
train: train.txt # train images (relative to 'path') 8219 images
val: val.txt # val images (relative to 'path') 588 images
test: test.txt # test images (optional) 2936 images

# Classes
names:
  0: object

# Download script/URL (optional) ---------------------------------------------------------------------------------------
download: |
  import shutil
  from pathlib import Path

  import numpy as np
  import polars as pl

  from ultralytics.utils import TQDM
  from ultralytics.utils.downloads import download
  from ultralytics.utils.ops import xyxy2xywh

  # Download
  dir = Path(yaml["path"])  # dataset root dir
  parent = Path(dir.parent)  # download dir
  urls = ["http://trax-geometry.s3.amazonaws.com/cvpr_challenge/SKU110K_fixed.tar.gz"]
  download(urls, dir=parent)

  # Rename directories
  if dir.exists():
      shutil.rmtree(dir)
  (parent / "SKU110K_fixed").rename(dir)  # rename dir
  (dir / "labels").mkdir(parents=True, exist_ok=True)  # create labels dir

  # Convert labels
  names = "image", "x1", "y1", "x2", "y2", "class", "image_width", "image_height"  # column names
  for d in "annotations_train.csv", "annotations_val.csv", "annotations_test.csv":
      x = pl.read_csv(dir / "annotations" / d, has_header=False, new_columns=names, infer_schema_length=None).to_numpy()  # annotations
      images, unique_images = x[:, 0], np.unique(x[:, 0])
      with open((dir / d).with_suffix(".txt").__str__().replace("annotations_", ""), "w", encoding="utf-8") as f:
          f.writelines(f"./images/{s}\n" for s in unique_images)
      for im in TQDM(unique_images, desc=f"Converting {dir / d}"):
          cls = 0  # single-class dataset
          with open((dir / "labels" / im).with_suffix(".txt"), "a", encoding="utf-8") as f:
              for r in x[images == im]:
                  w, h = r[6], r[7]  # image width, height
                  xywh = xyxy2xywh(np.array([[r[1] / w, r[2] / h, r[3] / w, r[4] / h]]))[0]  # instance
                  f.write(f"{cls} {xywh[0]:.5f} {xywh[1]:.5f} {xywh[2]:.5f} {xywh[3]:.5f}\n")  # write label
```
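The core of the download script converts the CSV's absolute corner coordinates (x1, y1, x2, y2) into YOLO's normalized "class x_center y_center width height" format. The dependency-free sketch below reproduces that arithmetic for a single row so you can check the formula by hand. `to_yolo_line` is a hypothetical helper; the script itself applies `xyxy2xywh` from `ultralytics.utils.ops` to NumPy arrays. The class defaults to 0 because the configuration is single-class.

```python
def to_yolo_line(x1: float, y1: float, x2: float, y2: float, img_w: float, img_h: float, cls: int = 0) -> str:
    """Convert one box given by absolute corner coordinates into a normalized YOLO label line."""
    # Normalize the corners by image width and height, as the script does before xyxy2xywh
    nx1, ny1, nx2, ny2 = x1 / img_w, y1 / img_h, x2 / img_w, y2 / img_h
    # xyxy -> xywh: box center followed by box width and height
    xc, yc = (nx1 + nx2) / 2, (ny1 + ny2) / 2
    bw, bh = nx2 - nx1, ny2 - ny1
    # Same five-decimal formatting as the download script
    return f"{cls} {xc:.5f} {yc:.5f} {bw:.5f} {bh:.5f}"


if __name__ == "__main__":
    # A 100x200 px box with its top-left corner at (50, 100) in a 1000x800 px image
    print(to_yolo_line(50, 100, 150, 300, 1000, 800))  # prints "0 0.10000 0.25000 0.10000 0.25000"
```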

Usage

To train a YOLO26n model on the SKU-110K dataset for 100 epochs with an image size of 640, use the following code snippet. For a complete list of available arguments, refer to the model Training page.

Train Example

```python
from ultralytics import YOLO

# Load a model
model = YOLO("yolo26n.pt")  # load a pretrained model (recommended for training)

# Train the model
results = model.train(data="SKU-110K.yaml", epochs=100, imgsz=640)
```

```bash
# Start training from a pretrained *.pt model
yolo detect train data=SKU-110K.yaml model=yolo26n.pt epochs=100 imgsz=640
```

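Sample Data and Annotations

The SKU-110k dataset contains a varied set of retail shelf images with densely packed objects, which gives object detection tasks rich context. Below is an example of data from the dataset along with its annotations:

- Densely packed retail shelf image: This image shows densely packed objects in a retail shelf setting. Each object is annotated with a bounding box.

The example illustrates the diversity and complexity of the SKU-110k data and shows why high-quality data matters for object detection. Because densely arranged products pose unique challenges for detection algorithms, the dataset is particularly useful for building robust, retail-focused computer vision solutions.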
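To see how densely packed an image is, you can read its converted label file back into pixel coordinates. The sketch below assumes the five-column label format written by the download script ("class x_center y_center width height", normalized to [0, 1]). It also assumes you know the image's width and height. `parse_yolo_labels` is a hypothetical helper, and the number of boxes it returns is the object count for that image.

```python
def parse_yolo_labels(text: str, img_w: float, img_h: float) -> list[tuple[int, float, float, float, float]]:
    """Parse normalized YOLO label lines into (class, x1, y1, x2, y2) boxes in pixels."""
    boxes = []
    for line in text.splitlines():
        if not line.strip():
            continue  # ignore blank lines
        cls, xc, yc, bw, bh = line.split()
        # Scale the normalized center and size back to pixel units
        cx, cy = float(xc) * img_w, float(yc) * img_h
        w, h = float(bw) * img_w, float(bh) * img_h
        boxes.append((int(cls), cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return boxes


if __name__ == "__main__":
    # Two illustrative boxes for a hypothetical 1000x800 px image
    label_text = "0 0.10000 0.25000 0.10000 0.25000\n0 0.50000 0.50000 0.20000 0.10000\n"
    boxes = parse_yolo_labels(label_text, 1000, 800)
    print(f"{len(boxes)} objects; first box (x1, y1, x2, y2): {boxes[0][1:]}")
```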

Citations and Acknowledgments

If you use the SKU-110k dataset in your research or development work, please cite the following paper:

```bibtex
@inproceedings{goldman2019dense,
  author    = {Eran Goldman and Roei Herzig and Aviv Eisenschtat and Jacob Goldberger and Tal Hassner},
  title     = {Precise Detection in Densely Packed Scenes},
  booktitle = {Proc. Conf. Comput. Vision Pattern Recognition (CVPR)},
  year      = {2019}
}
```

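We thank Eran Goldman et al. for creating and maintaining the SKU-110k dataset, a valuable resource for the computer vision research community. For more information about the dataset and its creators, visit the SKU-110k dataset GitHub repository.

FAQ

What is the SKU-110k dataset and why is it important for object detection?

The SKU-110k dataset consists of densely packed retail shelf images designed to support research in object detection. Developed by Eran Goldman et al., it includes more than 110,000 unique SKU categories. It matters because its varied object appearances and tightly spaced objects challenge state-of-the-art detectors, which makes it a valuable resource for computer vision researchers and practitioners. Learn more about the dataset's structure and applications in the SKU-110k Dataset section.

How do I train a YOLO26 model on the SKU-110k dataset?

Training a YOLO26 model on the SKU-110k dataset is straightforward. Here is an example that trains a YOLO26n model for 100 epochs with an image size of 640:

Train Example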

```python
from ultralytics import YOLO

# Load a model
model = YOLO("yolo26n.pt")  # load a pretrained model (recommended for training)

# Train the model
results = model.train(data="SKU-110K.yaml", epochs=100, imgsz=640)
```

```bash
# Start training from a pretrained *.pt model
yolo detect train data=SKU-110K.yaml model=yolo26n.pt epochs=100 imgsz=640
```

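For a comprehensive list of available arguments, refer to the model Training page.

What are the main subsets of the SKU-110k dataset?

The SKU-110k dataset is organized into three main subsets:

- Training set: 8,219 images with annotations, used to train object detection models.

- Validation set: 588 images with annotations, used to validate the model during training.

- Test set: 2,936 images reserved for the final evaluation of trained object detection models.

For more details, see the Dataset Structure section.

How do I configure the SKU-110k dataset for training?

The SKU-110k dataset configuration is defined in a YAML file that specifies the dataset paths, classes, and other relevant information. The SKU-110K.yaml file is maintained at https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/SKU-110K.yaml. You can use this configuration to train a model, as shown in the Usage section.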

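What are the key features of the SKU-110k dataset for deep learning?

The SKU-110k dataset contains images of store shelves from around the world. These images show densely packed objects that pose significant challenges for object detectors. Key features include:

- More than 110,000 unique SKU categories

- Diverse object appearances

- Bounding-box annotations for every object (a single object class in the Ultralytics configuration)

These features make the SKU-110k dataset particularly useful for training and evaluating deep learning models for object detection. For more details, see the Key Features section.

How do I cite the SKU-110k dataset?

If you use the SKU-110k dataset in your research or development work, please cite the following paper: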

```bibtex
@inproceedings{goldman2019dense,
  author    = {Eran Goldman and Roei Herzig and Aviv Eisenschtat and Jacob Goldberger and Tal Hassner},
  title     = {Precise Detection in Densely Packed Scenes},
  booktitle = {Proc. Conf. Comput. Vision Pattern Recognition (CVPR)},
  year      = {2019}
}
```

More information about the dataset is available in the Citations and Acknowledgments section.