COCO-Pose Dataset

The COCO-Pose dataset is a specialized version of the COCO (Common Objects in Context) dataset designed for pose estimation. It uses the COCO Keypoints 2017 images and labels to train models such as YOLO for pose estimation tasks.

COCO-Pose Pretrained Models

| Model | Size (pixels) | mAPpose 50-95 (e2e) | mAPpose 50 (e2e) | Speed CPU ONNX (ms) | Speed T4 TensorRT10 (ms) | Params (M) | FLOPs (B) |
|---|---|---|---|---|---|---|---|
| YOLO26n-pose | 640 | 57.2 | 83.3 | 40.3 ± 0.5 | 1.8 ± 0.0 | 2.9 | 7.5 |
| YOLO26s-pose | 640 | 63.0 | 86.6 | 85.3 ± 0.9 | 2.7 ± 0.0 | 10.4 | 23.9 |
| YOLO26m-pose | 640 | 68.8 | 89.6 | 218.0 ± 1.5 | 5.0 ± 0.1 | 21.5 | 73.1 |
| YOLO26l-pose | 640 | 70.4 | 90.5 | 275.4 ± 2.4 | 6.5 ± 0.1 | 25.9 | 91.3 |
| YOLO26x-pose | 640 | 71.6 | 91.6 | 565.4 ± 3.0 | 12.2 ± 0.2 | 57.6 | 201.7 |
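One way to read the table is as a speed/accuracy trade-off. The sketch below is only an illustration: it copies the mean mAPpose 50-95 and latency values from the rows above and picks the most accurate model that fits a latency budget. The helper name `best_under_budget` and the `device` labels are hypothetical and not part of any Ultralytics API.

```python
# (name, mAPpose 50-95, CPU ONNX ms, T4 TensorRT10 ms), mean values copied from the table
MODELS = [
    ("YOLO26n-pose", 57.2, 40.3, 1.8),
    ("YOLO26s-pose", 63.0, 85.3, 2.7),
    ("YOLO26m-pose", 68.8, 218.0, 5.0),
    ("YOLO26l-pose", 70.4, 275.4, 6.5),
    ("YOLO26x-pose", 71.6, 565.4, 12.2),
]


def best_under_budget(budget_ms, device="t4"):
    """Return the most accurate model whose latency fits the budget, or None.

    device: "t4" uses the T4 TensorRT10 column, anything else uses CPU ONNX.
    """
    column = 3 if device == "t4" else 2
    candidates = [m for m in MODELS if m[column] <= budget_ms]
    if not candidates:
        return None
    # Highest mAPpose 50-95 among the models that meet the budget
    return max(candidates, key=lambda m: m[1])[0]
```

The published latencies depend on the benchmark hardware, so measured numbers on other devices may rank the models differently.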

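Key Features

- COCO-Pose builds on the COCO Keypoints 2017 dataset, which contains 200K images labeled with keypoints for pose estimation tasks.
- The dataset supports 17 keypoints for human figures, enabling detailed pose estimation.
- Like COCO, it provides standardized evaluation metrics, including Object Keypoint Similarity (OKS) for pose estimation, making it suitable for comparing model performance.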
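To make the OKS metric concrete, here is a minimal sketch of how it can be computed for one person instance. It assumes the per-keypoint constants used by the official COCO keypoint evaluation and treats the object area as the scale term; the function name `oks` is illustrative, and returning 0.0 when no keypoint is labeled is a simplification made here, not the reference behavior.

```python
import math

# Per-keypoint standard deviations from the COCO keypoint evaluation, in COCO order
# (nose, eyes, ears, shoulders, elbows, wrists, hips, knees, ankles).
COCO_SIGMAS = [0.026, 0.025, 0.025, 0.035, 0.035, 0.079, 0.079, 0.072, 0.072,
               0.062, 0.062, 0.107, 0.107, 0.087, 0.087, 0.089, 0.089]


def oks(pred, gt, area, sigmas=COCO_SIGMAS):
    """Return the Object Keypoint Similarity for one instance.

    pred: list of (x, y) predicted keypoints in pixels.
    gt: list of (x, y, v) ground-truth keypoints; v > 0 means the keypoint is labeled.
    area: ground-truth object area in square pixels (the scale term).
    """
    total, labeled = 0.0, 0
    for (px, py), (gx, gy, v), sigma in zip(pred, gt, sigmas):
        if v <= 0:  # unlabeled keypoints do not contribute
            continue
        d2 = (px - gx) ** 2 + (py - gy) ** 2  # squared pixel distance
        k2 = (2 * sigma) ** 2  # per-keypoint falloff constant, squared
        total += math.exp(-d2 / (2 * area * k2))
        labeled += 1
    # Simplification: report 0.0 when the instance has no labeled keypoints
    return total / labeled if labeled else 0.0
```

A perfect prediction scores 1.0, and the score decays toward 0 as keypoints drift away from the ground truth. Larger objects and keypoints with larger constants (for example hips) tolerate more pixel error.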

Dataset Structure

The COCO-Pose dataset is split into three subsets:

- Train2017: contains 56,599 images from the COCO dataset, annotated for training pose estimation models.
- Val2017: contains 2,346 images used for validation during model training.
- Test2017: consists of images used for testing and benchmarking trained models. Ground-truth annotations for this subset are not publicly available; results are submitted to the COCO evaluation server for performance evaluation.

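Applications

The COCO-Pose dataset is used specifically for training and evaluating deep learning models for keypoint detection and pose estimation, such as OpenPose. Its large number of annotated images and standardized evaluation metrics make it an essential resource for computer vision researchers and practitioners focused on pose estimation.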

Dataset YAML

A YAML file defines the dataset configuration. It contains the dataset's paths, classes, and other relevant information. For the COCO-Pose dataset, the coco-pose.yaml file is maintained at https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco-pose.yaml.

ultralytics/cfg/datasets/coco-pose.yaml

# Ultralytics 🚀 AGPL-3.0 License - https://ultralytics.com/license

# COCO 2017 Keypoints dataset https://cocodataset.org by Microsoft
# Documentation: https://docs.ultralytics.com/datasets/pose/coco/
# Example usage: yolo train data=coco-pose.yaml
# parent
# ├── ultralytics
# └── datasets
#     └── coco-pose ← downloads here (20.1 GB)

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]
path: coco-pose # dataset root dir
train: train2017.txt # train images (relative to 'path') 56599 images
val: val2017.txt # val images (relative to 'path') 2346 images
test: test-dev2017.txt # 20288 of 40670 images, submit to https://codalab.lisn.upsaclay.fr/competitions/7403

# Keypoints
kpt_shape: [17, 3] # number of keypoints, number of dims (2 for x,y or 3 for x,y,visible)
flip_idx: [0, 2, 1, 4, 3, 6, 5, 8, 7, 10, 9, 12, 11, 14, 13, 16, 15]

# Classes
names:
  0: person

# Keypoint names per class
kpt_names:
  0:
    - nose
    - left_eye
    - right_eye
    - left_ear
    - right_ear
    - left_shoulder
    - right_shoulder
    - left_elbow
    - right_elbow
    - left_wrist
    - right_wrist
    - left_hip
    - right_hip
    - left_knee
    - right_knee
    - left_ankle
    - right_ankle

# Download script/URL (optional)
download: |
  from pathlib import Path

  from ultralytics.utils import ASSETS_URL
  from ultralytics.utils.downloads import download

  # Download labels
  dir = Path(yaml["path"])  # dataset root dir
  urls = [f"{ASSETS_URL}/coco2017labels-pose.zip"]
  download(urls, dir=dir.parent)
  # Download data
  urls = [
      "http://images.cocodataset.org/zips/train2017.zip",  # 19G, 118k images
      "http://images.cocodataset.org/zips/val2017.zip",  # 1G, 5k images
      "http://images.cocodataset.org/zips/test2017.zip",  # 7G, 41k images (optional)
  ]
  download(urls, dir=dir / "images", threads=3)
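The `kpt_shape` and `flip_idx` entries in this configuration are easier to follow with a small example. The sketch below assumes the usual YOLO pose label layout (class index, normalized box as center x, center y, width, height, then x, y, visibility for each keypoint) and shows how `flip_idx` swaps left and right keypoints when an image is mirrored horizontally. The helper names `parse_label` and `hflip` are hypothetical and are not Ultralytics functions.

```python
KPT_SHAPE = (17, 3)  # from coco-pose.yaml: 17 keypoints, (x, y, visible) each
FLIP_IDX = [0, 2, 1, 4, 3, 6, 5, 8, 7, 10, 9, 12, 11, 14, 13, 16, 15]


def parse_label(line, kpt_shape=KPT_SHAPE):
    """Split one label row into class index, box, and per-keypoint values."""
    values = line.split()
    n_kpt, n_dim = kpt_shape
    expected = 5 + n_kpt * n_dim  # class + 4 box values + keypoint values
    if len(values) != expected:
        raise ValueError(f"expected {expected} values, got {len(values)}")
    cls = int(values[0])
    box = [float(v) for v in values[1:5]]  # normalized cx, cy, w, h
    flat = [float(v) for v in values[5:]]
    kpts = [flat[i * n_dim:(i + 1) * n_dim] for i in range(n_kpt)]
    return cls, box, kpts


def hflip(box, kpts, flip_idx=FLIP_IDX):
    """Mirror a normalized box and its keypoints left to right."""
    cx, cy, w, h = box
    # Mirror every x coordinate around the vertical image center
    mirrored = [[1.0 - k[0]] + list(k[1:]) for k in kpts]
    # Reorder so that, for example, the old right_eye fills the left_eye slot
    return [1.0 - cx, cy, w, h], [mirrored[i] for i in flip_idx]
```

Without the reordering step, a mirrored image would carry labels in which the person's left eye is still tagged as the left eye even though it now appears where a right eye would be, which is why the configuration lists an explicit index permutation.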

Usage

To train a YOLO26n-pose model on the COCO-Pose dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a full list of available arguments, see the model Training page.

Train Example

Python

from ultralytics import YOLO

# Load a model
model = YOLO("yolo26n-pose.pt")  # load a pretrained model (recommended for training)

# Train the model
results = model.train(data="coco-pose.yaml", epochs=100, imgsz=640)

CLI

# Start training from a pretrained *.pt model
yolo pose train data=coco-pose.yaml model=yolo26n-pose.pt epochs=100 imgsz=640

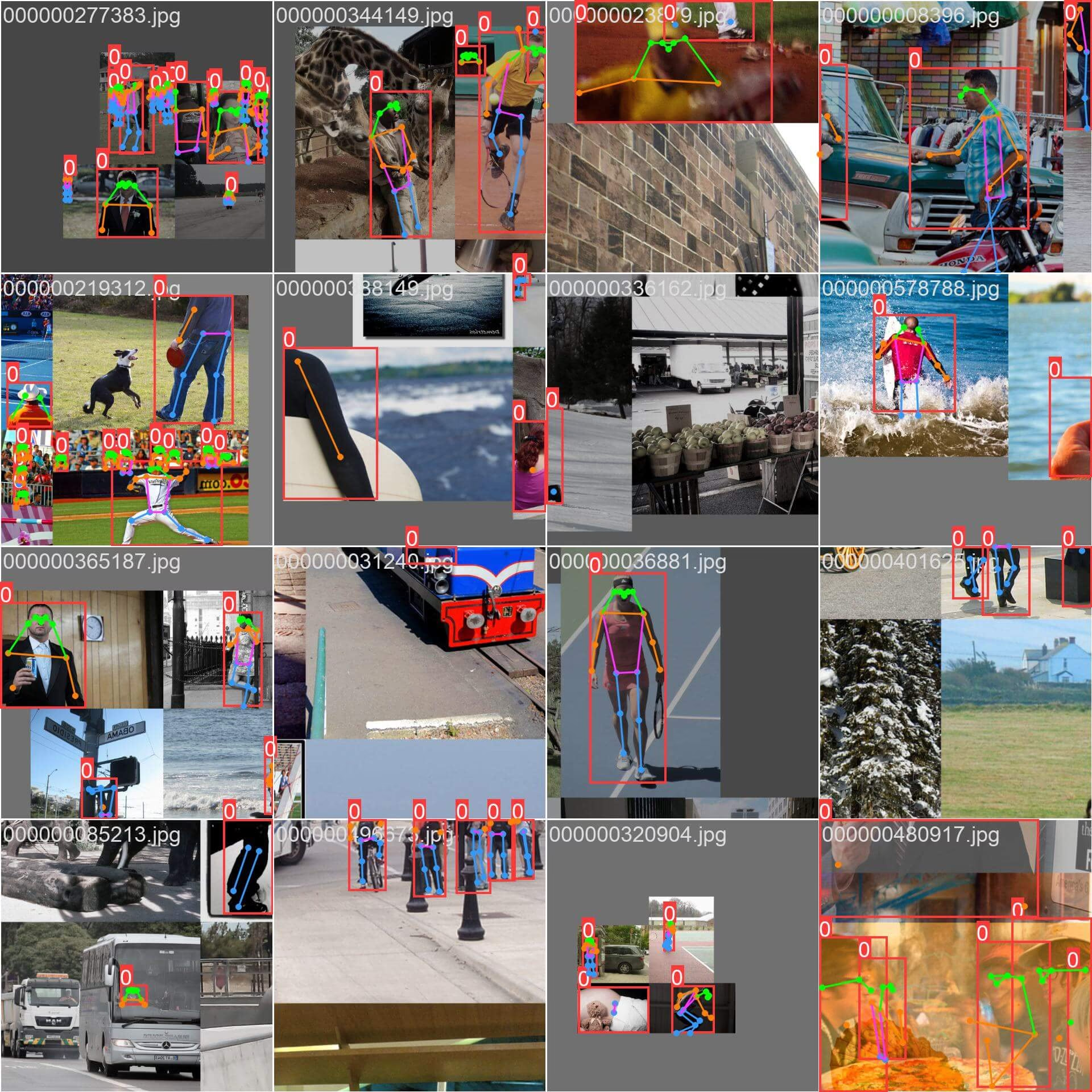

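Sample Images and Annotations

The COCO-Pose dataset contains a diverse set of images of human figures annotated with keypoints. Here is an example image from the dataset with its corresponding annotations:

- Mosaiced image: This image shows a training batch composed of mosaiced dataset images. Mosaicing is a training-time technique that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps the model generalize to different object sizes, aspect ratios, and contexts.

This example showcases the variety and complexity of the images in the COCO-Pose dataset and the benefits of using mosaicing during training.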
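For pose data, mosaicing has to move the keypoints along with the pixels. The following is a deliberately simplified sketch that only handles the label side of a fixed 2x2 grid: each source image is assumed to be a square of the same size, and its keypoints are shifted by the offset of its tile. Real training pipelines typically also randomize the mosaic center and crop or rescale the result, which this sketch omits; the function name `mosaic_keypoints` is hypothetical.

```python
def mosaic_keypoints(tiles, tile_size):
    """Shift per-image keypoints into the coordinate frame of a 2x2 mosaic.

    tiles: four lists of (x, y, v) keypoints in pixel coordinates, one list per
           source image, ordered top-left, top-right, bottom-left, bottom-right.
    tile_size: side length in pixels of each square source image.
    """
    if len(tiles) != 4:
        raise ValueError("a 2x2 mosaic needs exactly four images")
    # Pixel offset of each tile's top-left corner inside the mosaic canvas
    offsets = [(0, 0), (tile_size, 0), (0, tile_size), (tile_size, tile_size)]
    merged = []
    for keypoints, (dx, dy) in zip(tiles, offsets):
        merged.extend((x + dx, y + dy, v) for x, y, v in keypoints)
    return merged
```

The visibility flag is carried over unchanged, since translating a keypoint does not affect whether it was labeled.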

Citations and Acknowledgments

If you use the COCO-Pose dataset in your research or development work, please cite the following paper:

@misc{lin2015microsoft,
  title={Microsoft COCO: Common Objects in Context},
  author={Tsung-Yi Lin and Michael Maire and Serge Belongie and Lubomir Bourdev and Ross Girshick and James Hays and Pietro Perona and Deva Ramanan and C. Lawrence Zitnick and Piotr Dollár},
  year={2015},
  eprint={1405.0312},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

We thank the COCO Consortium for creating and maintaining this valuable resource for the computer vision community. For more information about the COCO-Pose dataset and its creators, visit the COCO dataset website.

FAQ

What is the COCO-Pose dataset and how is it used with Ultralytics YOLO for pose estimation?

The COCO-Pose dataset is a specialized version of the COCO (Common Objects in Context) dataset designed for pose estimation. It is based on the COCO Keypoints 2017 images and annotations and allows models such as Ultralytics YOLO to be trained for detailed pose estimation. For example, you can train a YOLO26n-pose model on COCO-Pose by loading a pretrained model and training it with the YAML configuration. See the training documentation for training examples.

How can I train a YOLO26 model on the COCO-Pose dataset?

You can train a YOLO26 model on the COCO-Pose dataset with either Python or CLI commands. For example, to train a YOLO26n-pose model for 100 epochs with an image size of 640, follow the steps below.

Train Example

Python

from ultralytics import YOLO

# Load a model
model = YOLO("yolo26n-pose.pt")  # load a pretrained model (recommended for training)

# Train the model
results = model.train(data="coco-pose.yaml", epochs=100, imgsz=640)

CLI

# Start training from a pretrained *.pt model
yolo pose train data=coco-pose.yaml model=yolo26n-pose.pt epochs=100 imgsz=640

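For more details on the training process and the available arguments, see the Training page.

What metrics does the COCO-Pose dataset provide for evaluating model performance?

Like the original COCO dataset, COCO-Pose provides several standardized evaluation metrics for pose estimation. The key metric is Object Keypoint Similarity (OKS), which measures how accurately predicted keypoints match the ground-truth annotations. These metrics allow thorough performance comparisons between models. For example, pretrained COCO-Pose models such as YOLO26n-pose and YOLO26s-pose have specific performance metrics, such as mAPpose50-95 and mAPpose50, listed in the documentation.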
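The two mAP figures differ only in which OKS thresholds count a prediction as correct. Following the COCO convention, mAPpose50 uses a single OKS threshold of 0.50, while mAPpose50-95 averages over ten thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below only illustrates the threshold sweep for a single matched prediction and is not a full mAP implementation; `matched_thresholds` is a hypothetical helper name.

```python
# OKS thresholds averaged by the 50-95 metric: 0.50, 0.55, ..., 0.95
OKS_THRESHOLDS = [t / 100 for t in range(50, 100, 5)]


def matched_thresholds(oks_value):
    """Return the thresholds at which a prediction with this OKS counts as correct."""
    return [t for t in OKS_THRESHOLDS if oks_value >= t]
```

A prediction with a moderate OKS therefore contributes to mAPpose50 but only to the lower thresholds of mAPpose50-95, which is why the 50-95 column in the model table is consistently lower than the 50 column.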

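How is the COCO-Pose dataset structured and split?

The COCO-Pose dataset is split into three subsets:

- Train2017: contains 56,599 COCO images annotated for training pose estimation models.
- Val2017: contains 2,346 images used for validation during model training.
- Test2017: images used for testing and benchmarking trained models. Ground-truth annotations for this subset are not publicly available; results are submitted to the COCO evaluation server for performance evaluation.

These subsets help organize the training, validation, and testing phases effectively. For configuration details, see the coco-pose.yaml file available on GitHub.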

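What are the key features and applications of the COCO-Pose dataset?

The COCO-Pose dataset builds on the COCO Keypoints 2017 annotations, which provide 17 keypoints per human figure and enable detailed pose estimation. Standardized evaluation metrics such as OKS make it easy to compare models. Applications span areas that require detailed human pose estimation, including sports analytics, healthcare, and human-computer interaction. In practice, starting from the pretrained models listed in the documentation (for example, YOLO26n-pose) can simplify the process considerably (see Key Features).

If you use the COCO-Pose dataset in your research or development work, please cite the paper using the BibTeX entry given earlier in this document.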