Model Pruning and Sparsity in YOLOv5

📚 This guide explains how to apply pruning to YOLOv5 🚀 models to create more efficient networks while maintaining performance.

What is Model Pruning?

Model pruning is a technique used to reduce the size and complexity of neural networks by removing less important parameters (weights and connections). This process creates a more efficient model with several benefits:

- Reduced model size for easier deployment on resource-constrained devices

- Potentially faster inference with minimal impact on accuracy, when the runtime or hardware can exploit sparsity

- Lower memory usage and energy consumption

- Improved overall efficiency for real-time applications

Pruning works by identifying and removing parameters that contribute minimally to the model's performance, resulting in a more lightweight model with similar accuracy.
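As a rough illustration of the idea, the sketch below zeroes the smallest-magnitude fraction of a flat list of weights, using absolute value as a stand-in for "importance". The helper names magnitude_prune and sparsity and the example weights are hypothetical and are not part of YOLOv5.

```python
def magnitude_prune(weights, amount):
    """Return a copy of `weights` with the smallest-magnitude fraction set to 0.0."""
    if not 0.0 <= amount <= 1.0:
        raise ValueError("amount must be between 0 and 1")
    n_prune = round(amount * len(weights))
    # Indices ordered by absolute value, smallest first
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


def sparsity(weights):
    """Fraction of entries that are exactly zero."""
    return sum(1 for w in weights if w == 0.0) / len(weights)


# Hypothetical weights, for illustration only
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.45, -0.9, 0.1, -0.2]
pruned = magnitude_prune(weights, amount=0.3)

print(pruned)            # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0, 0.45, -0.9, 0.1, -0.2]
print(sparsity(pruned))  # 0.3
```

Note that the list keeps its original length: the pruned entries are stored as zeros rather than physically removed.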

Before You Start

Clone the repository and install requirements.txt in a Python>=3.8.0 environment with PyTorch>=1.8. Models and datasets download automatically from the latest YOLOv5 release.

git clone https://github.com/ultralytics/yolov5 # clone

cd yolov5

pip install -r requirements.txt # install

Test Baseline Performance

Before pruning, establish a baseline to compare against. This command tests YOLOv5x on COCO val2017 at an image size of 640 pixels, using half-precision (FP16) inference (--half). yolov5x.pt is the largest and most accurate model available. Other options are yolov5s.pt, yolov5m.pt and yolov5l.pt, or your own checkpoint from training on a custom dataset, such as ./weights/best.pt. For details on all available models, see the README table.

python val.py --weights yolov5x.pt --data coco.yaml --img 640 --half

Output:

val: data=/content/yolov5/data/coco.yaml, weights=['yolov5x.pt'], batch_size=32, imgsz=640, conf_thres=0.001, iou_thres=0.65, task=val, device=, workers=8, single_cls=False, augment=False, verbose=False, save_txt=False, save_conf=False, save_json=True, project=runs/val, name=exp, exist_ok=False, half=True, dnn=False

YOLOv5 🚀 v6.0-224-g4c40933 torch 1.10.0+cu111 CUDA:0 (Tesla V100-SXM2-16GB, 16160MiB)

Fusing layers...

Model Summary: 444 layers, 86705005 parameters, 0 gradients

val: Scanning '/content/datasets/coco/val2017.cache' images and labels... 4952 found, 48 missing, 0 empty, 0 corrupt: 100% 5000/5000 [00:00<?, ?it/s]

Class Images Labels P R mAP@.5 mAP@.5:.95: 100% 157/157 [01:12<00:00, 2.16it/s]

all 5000 36335 0.732 0.628 0.683 0.496

Speed: 0.1ms pre-process, 5.2ms inference, 1.7ms NMS per image at shape (32, 3, 640, 640) # <--- base speed

Evaluating pycocotools mAP... saving runs/val/exp2/yolov5x_predictions.json...

...

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.507 # <--- base mAP

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.689

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.552

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.345

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.559

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.652

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.381

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.630

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.682

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.526

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.731

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.829

Results saved to runs/val/exp

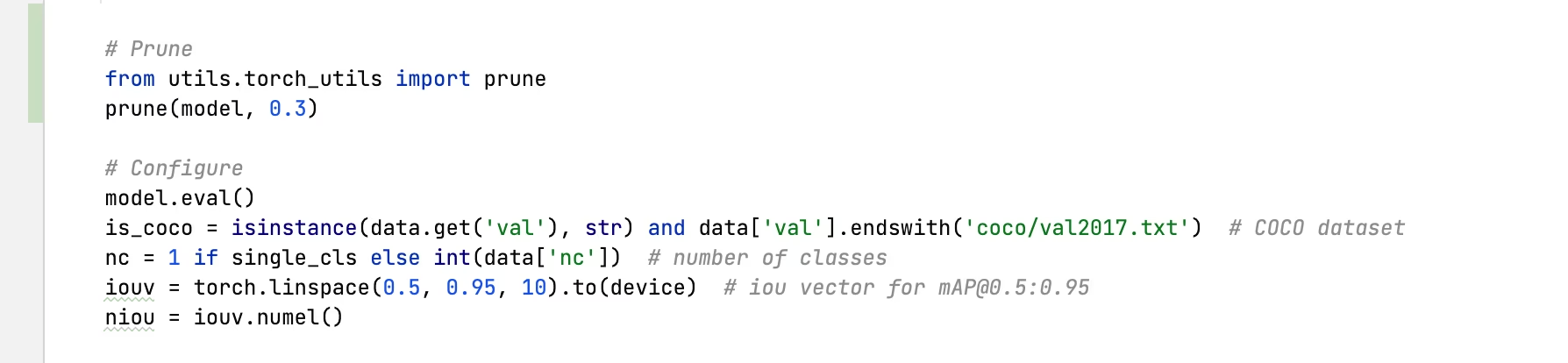

Apply Pruning to YOLOv5x (30% Sparsity)

We can prune the model with the prune() function defined in utils/torch_utils.py. To test a pruned model, we update val.py so that it prunes YOLOv5x to 0.3 sparsity (30% of the nn.Conv2d weights set to zero) before running validation.
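The exact edit to val.py is not reproduced in this guide; presumably it amounts to calling the helper on the loaded model with a sparsity of 0.3. As a hedged sketch of what such a helper might do, the example below assumes it behaves like PyTorch's built-in L1 unstructured pruning applied to every nn.Conv2d layer. The names prune_conv_layers and global_sparsity and the small toy model are hypothetical stand-ins, not the YOLOv5 implementation.

```python
import torch
import torch.nn as nn
import torch.nn.utils.prune as prune_utils


def prune_conv_layers(model: nn.Module, amount: float = 0.3) -> None:
    """Zero the `amount` fraction of smallest-|w| weights in every nn.Conv2d, in place."""
    for module in model.modules():
        if isinstance(module, nn.Conv2d):
            prune_utils.l1_unstructured(module, name="weight", amount=amount)
            prune_utils.remove(module, "weight")  # fold the mask into the weight tensor


def global_sparsity(model: nn.Module) -> float:
    """Fraction of all parameters (weights and biases) that are exactly zero."""
    total = sum(p.numel() for p in model.parameters())
    zeros = sum(int((p == 0).sum()) for p in model.parameters())
    return zeros / total


# Toy stand-in for a detection model (assumption: NOT YOLOv5x)
torch.manual_seed(0)
model = nn.Sequential(
    nn.Conv2d(3, 16, 3, padding=1),
    nn.SiLU(),
    nn.Conv2d(16, 32, 3, padding=1),
)

n_params_before = sum(p.numel() for p in model.parameters())
prune_conv_layers(model, amount=0.3)
n_params_after = sum(p.numel() for p in model.parameters())

print(f"global sparsity: {global_sparsity(model):.3g}")
print(f"parameters before/after: {n_params_before}/{n_params_after}")
```

In this sketch the parameter count is identical before and after pruning, because the pruned weights are kept as zeros inside dense tensors. The global figure can come out slightly below the requested amount, since biases are counted but not pruned.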

30% pruned output:

val: data=/content/yolov5/data/coco.yaml, weights=['yolov5x.pt'], batch_size=32, imgsz=640, conf_thres=0.001, iou_thres=0.65, task=val, device=, workers=8, single_cls=False, augment=False, verbose=False, save_txt=False, save_conf=False, save_json=True, project=runs/val, name=exp, exist_ok=False, half=True, dnn=False

YOLOv5 🚀 v6.0-224-g4c40933 torch 1.10.0+cu111 CUDA:0 (Tesla V100-SXM2-16GB, 16160MiB)

Fusing layers...

Model Summary: 444 layers, 86705005 parameters, 0 gradients

Pruning model... 0.3 global sparsity

val: Scanning '/content/datasets/coco/val2017.cache' images and labels... 4952 found, 48 missing, 0 empty, 0 corrupt: 100% 5000/5000 [00:00<?, ?it/s]

Class Images Labels P R mAP@.5 mAP@.5:.95: 100% 157/157 [01:11<00:00, 2.19it/s]

all 5000 36335 0.724 0.614 0.671 0.478

Speed: 0.1ms pre-process, 5.2ms inference, 1.7ms NMS per image at shape (32, 3, 640, 640) # <--- prune speed

Evaluating pycocotools mAP... saving runs/val/exp3/yolov5x_predictions.json...

...

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.489 # <--- prune mAP

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.677

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.537

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.334

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.542

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.635

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.370

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.612

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.664

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.496

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.722

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.803

Results saved to runs/val/exp3

Results Analysis

From the results, we can observe:

- 30% sparsity achieved: 30% of the model's weight parameters in nn.Conv2d layers are now zero

- Inference time remains unchanged: Despite pruning, inference still takes 5.2ms per image in both runs

- Minimal performance impact: mAP dropped slightly from 0.507 to 0.489 (a 0.018 absolute drop, about 3.6% relative)

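- Model size: The parameter count is unchanged (the summary still reports 86705005 parameters) because pruned weights are stored as zeros in dense tensors, so storage savings only appear once the weights are compressed or saved in a sparse format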

This demonstrates that pruning can remove a substantial share of a model's weights with only a minor impact on accuracy, making it a useful optimization technique for deployment in resource-constrained environments.
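The accuracy comparison can be checked with a few lines of arithmetic. The two mAP values below are copied from the pycocotools outputs above; the variable names are only illustrative.

```python
base_map = 0.507    # baseline mAP@0.5:0.95 (pycocotools, "base mAP")
pruned_map = 0.489  # mAP@0.5:0.95 at 0.3 sparsity (pycocotools, "prune mAP")

absolute_drop = base_map - pruned_map
relative_drop = absolute_drop / base_map

print(f"absolute drop: {absolute_drop:.3f}")  # absolute drop: 0.018
print(f"relative drop: {relative_drop:.1%}")  # relative drop: 3.6%
```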

Fine-tuning Pruned Models

For best results, pruned models should be fine-tuned after pruning to recover accuracy. This can be done by:

- Applying pruning with a desired sparsity level

- Training the pruned model for a few epochs with a lower learning rate

- Evaluating the fine-tuned pruned model against the baseline

This process helps the remaining parameters adapt to compensate for the removed connections, often recovering most or all of the original accuracy.
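The three steps can be sketched on a toy linear model in plain Python. This is not YOLOv5's training loop: the data, the weights, and the helper names are hypothetical, the features are deliberately correlated so that the surviving weights can compensate, and the sketch assumes that pruned weights should stay at zero during fine-tuning, which the steps above do not specify.

```python
def predict(weights, x):
    return sum(w * xi for w, xi in zip(weights, x))


def mse(weights, inputs, targets):
    return sum((predict(weights, x) - y) ** 2 for x, y in zip(inputs, targets)) / len(inputs)


def finetune(weights, mask, inputs, targets, lr=0.1, epochs=50):
    """Gradient descent on MSE that only updates weights whose mask entry is 1."""
    w = list(weights)
    n = len(inputs)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in zip(inputs, targets):
            err = predict(w, x) - y
            for i, xi in enumerate(x):
                grad[i] += 2.0 * err * xi / n
        # Masked update: pruned positions receive no update and stay exactly zero
        w = [wi - lr * gi * mi for wi, gi, mi in zip(w, grad, mask)]
    return w


# Toy data: features 3 and 4 are linear combinations of features 1 and 2
inputs = [
    (1.0, 0.0, 1.0, 0.5),
    (0.0, 1.0, 0.5, 1.0),
    (1.0, 1.0, 1.5, 1.5),
    (2.0, 1.0, 2.5, 2.0),
    (1.0, 2.0, 2.0, 2.5),
]
dense = [1.5, -2.0, 0.2, 0.1]  # stands in for the trained baseline weights
targets = [predict(dense, x) for x in inputs]

# Step 1: prune the smallest-magnitude half of the weights (50% sparsity)
k = len(dense) // 2
cutoff = sorted(abs(w) for w in dense)[k - 1]
mask = [0 if abs(w) <= cutoff else 1 for w in dense]
pruned = [w * m for w, m in zip(dense, mask)]

# Step 2: fine-tune the surviving weights for a few epochs
finetuned = finetune(pruned, mask, inputs, targets)

# Step 3: compare against the baseline
for name, w in [("dense", dense), ("pruned", pruned), ("fine-tuned", finetuned)]:
    print(f"{name:>10}: loss={mse(w, inputs, targets):.4f}")
```

In a real PyTorch workflow the same idea would mean keeping the pruning masks active while training, since an unmasked optimizer step can turn zeroed weights back into non-zero values.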

Supported Environments

Ultralytics provides a range of ready-to-use environments, each pre-installed with essential dependencies such as CUDA, cuDNN, Python, and PyTorch, to kickstart your projects.

- Free GPU Notebooks

- Google Cloud: GCP Quickstart Guide

- Amazon: AWS Quickstart Guide

- Azure: AzureML Quickstart Guide

- Docker: Docker Quickstart Guide

Project Status

The YOLOv5 CI status badge indicates whether all YOLOv5 GitHub Actions Continuous Integration (CI) tests are passing. These CI tests check the functionality and performance of YOLOv5 across training, validation, inference, export, and benchmarks. They run on macOS, Windows, and Ubuntu every 24 hours and on each new commit.