Coral Edge TPU on a Raspberry Pi with Ultralytics YOLO26 🚀

What is a Coral Edge TPU?

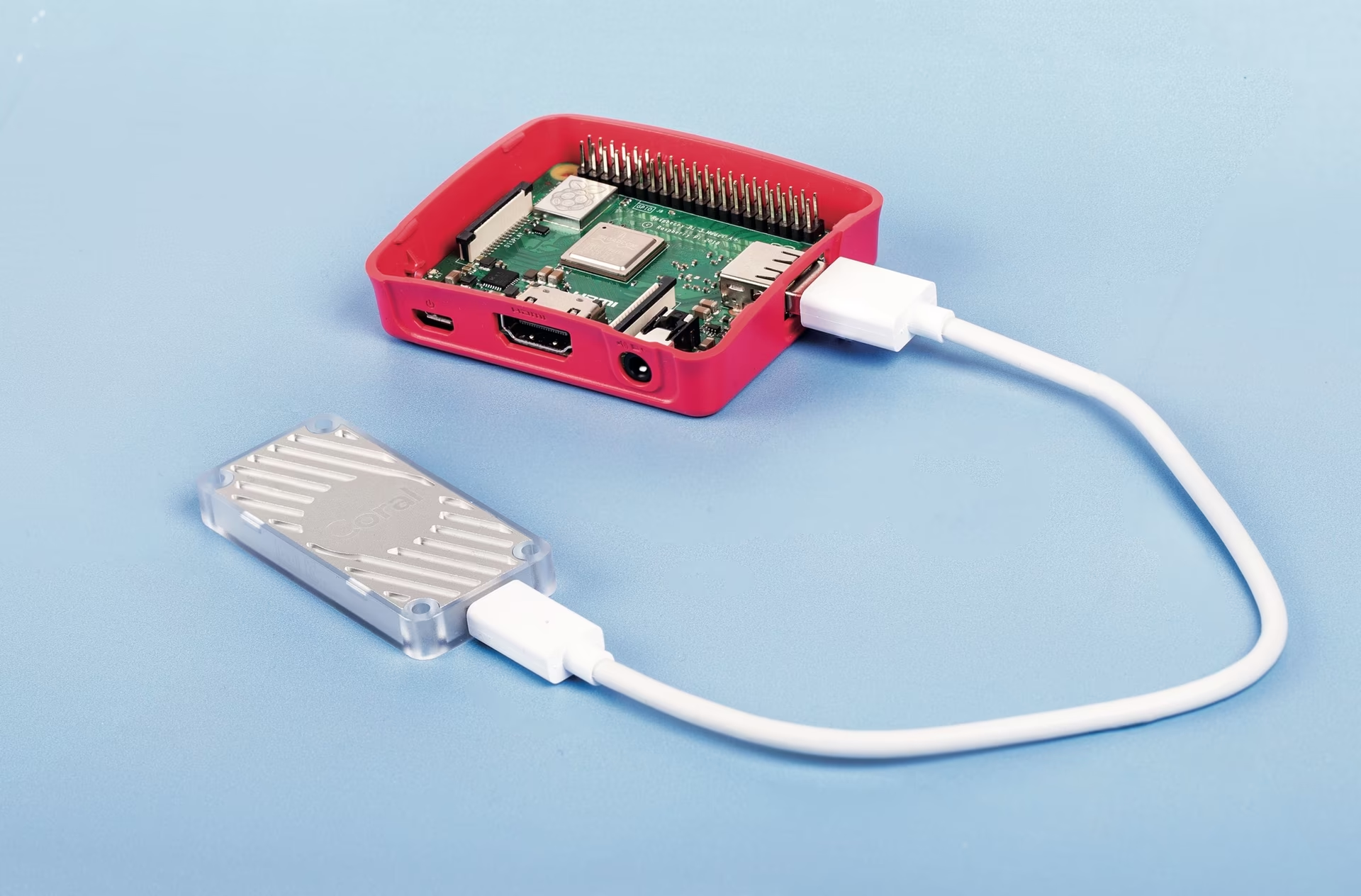

The Coral Edge TPU is a compact device that adds an Edge TPU coprocessor to your system. It enables low-power, high-performance ML inference for TensorFlow Lite models. Read more at the Coral Edge TPU homepage.


Boost Raspberry Pi Model Performance with Coral Edge TPU

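Many people want to run their models on an embedded or mobile device such as a Raspberry Pi, since these devices are very power efficient and can be used in many different applications. However, inference performance on these devices is usually poor, even with formats like ONNX or OpenVINO. The Coral Edge TPU is a good solution to this problem: it can be paired with a Raspberry Pi to greatly accelerate inference.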

Edge TPU on Raspberry Pi with TensorFlow Lite (New) ⭐

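The existing guide from Coral on using the Edge TPU with a Raspberry Pi is outdated, and the current Coral Edge TPU runtime builds no longer work with current TensorFlow Lite runtime versions. In addition, Google seems to have completely abandoned the Coral project, and there were no updates between 2021 and 2025. This guide shows you how to get the Edge TPU working on a Raspberry Pi single-board computer (SBC) with the latest TensorFlow Lite runtime and an updated Coral Edge TPU runtime.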

Prerequisites

- Raspberry Pi 4B (2GB or more recommended) or Raspberry Pi 5 (recommended)
- Raspberry Pi OS Bullseye/Bookworm (64-bit) with desktop (recommended)
- Coral USB Accelerator
- A non-ARM platform for exporting an Ultralytics PyTorch model

Installation Walkthrough

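This guide assumes that you already have a working Raspberry Pi OS installation with `ultralytics` and all of its dependencies installed. To install `ultralytics`, visit the Quickstart guide to get set up before continuing here.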

Installing the Edge TPU runtime

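First, install the Edge TPU runtime. Several builds are available, so choose the one that matches your operating system. The high-frequency build runs the Edge TPU at a higher clock speed, which improves performance. However, it can cause the Edge TPU to throttle thermally, so some form of cooling is recommended.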

| Raspberry Pi OS | High-frequency mode | Version to download |
|---|---|---|
| Bullseye 32-bit | No | libedgetpu1-std_ ... .bullseye_armhf.deb |
| Bullseye 64-bit | No | libedgetpu1-std_ ... .bullseye_arm64.deb |
| Bullseye 32-bit | Yes | libedgetpu1-max_ ... .bullseye_armhf.deb |
| Bullseye 64-bit | Yes | libedgetpu1-max_ ... .bullseye_arm64.deb |
| Bookworm 32-bit | No | libedgetpu1-std_ ... .bookworm_armhf.deb |
| Bookworm 64-bit | No | libedgetpu1-std_ ... .bookworm_arm64.deb |
| Bookworm 32-bit | Yes | libedgetpu1-max_ ... .bookworm_armhf.deb |
| Bookworm 64-bit | Yes | libedgetpu1-max_ ... .bookworm_arm64.deb |
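The table picks a package from three choices: the OS release, the architecture, and the frequency mode. The sketch below derives the matching file-name prefix and suffix, assuming the release codename is read from `/etc/os-release` and the architecture from `dpkg --print-architecture` (which reports the userland architecture, `armhf` or `arm64`; a 32-bit OS can still run a 64-bit kernel, so `uname -m` is less reliable here). The version part of the name is deliberately left open, as in the table. The helper names are hypothetical.

```python
import subprocess
from pathlib import Path


def os_codename(os_release: str) -> str:
    """Return VERSION_CODENAME from the text of /etc/os-release (e.g. 'bookworm')."""
    for line in os_release.splitlines():
        key, _, value = line.partition("=")
        if key == "VERSION_CODENAME":
            return value.strip().strip('"')
    raise ValueError("VERSION_CODENAME not found")


def package_pattern(codename: str, deb_arch: str, high_frequency: bool) -> tuple[str, str]:
    """Return the (prefix, suffix) of the matching .deb; the version in between stays open."""
    if codename not in ("bullseye", "bookworm"):
        raise ValueError(f"Unsupported Raspberry Pi OS release: {codename}")
    if deb_arch not in ("armhf", "arm64"):
        raise ValueError(f"Unsupported architecture: {deb_arch}")
    variant = "max" if high_frequency else "std"  # "max" is the high-frequency build
    return f"libedgetpu1-{variant}_", f".{codename}_{deb_arch}.deb"


if __name__ == "__main__":
    codename = os_codename(Path("/etc/os-release").read_text())
    # dpkg reports the architecture that installed packages must match (armhf or arm64)
    deb_arch = subprocess.run(
        ["dpkg", "--print-architecture"], capture_output=True, text=True, check=True
    ).stdout.strip()
    prefix, suffix = package_pattern(codename, deb_arch, high_frequency=False)
    print(f"Download: {prefix}...{suffix}")
```

Set `high_frequency=True` only if you have cooling in place.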

After downloading the file, install it with the following command:

```bash
sudo dpkg -i path/to/package.deb
```

After installing the runtime, plug your Coral Edge TPU into a USB 3.0 port on the Raspberry Pi so that the newly installed udev rule takes effect.
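To confirm that the accelerator is visible on the USB bus, you can look for it in `lsusb` output. This is a minimal sketch that assumes the Coral USB Accelerator is reported with the IDs `1a6e:089a` (before the runtime loads its firmware) or `18d1:9302` (afterwards); these IDs are an assumption, so compare them against what `lsusb` shows for your device. `count_coral_devices` is a hypothetical helper.

```python
import shutil
import subprocess

# Assumed USB IDs of the Coral USB Accelerator: before and after firmware load.
CORAL_USB_IDS = ("1a6e:089a", "18d1:9302")


def count_coral_devices(lsusb_output: str) -> int:
    """Count lines of `lsusb` output whose ID matches one of CORAL_USB_IDS."""
    return sum(
        any(f"ID {usb_id}" in line for usb_id in CORAL_USB_IDS)
        for line in lsusb_output.splitlines()
    )


if __name__ == "__main__":
    if shutil.which("lsusb") is None:
        raise SystemExit("lsusb not found (it is provided by the usbutils package)")
    output = subprocess.run(["lsusb"], capture_output=True, text=True, check=True).stdout
    print(f"Coral USB devices found: {count_coral_devices(output)}")
```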

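Important

If you already have an older Coral Edge TPU runtime installed, uninstall it with the matching command below before installing the new package.

```bash
# If you installed the standard version
sudo apt remove libedgetpu1-std

# If you installed the high-frequency version
sudo apt remove libedgetpu1-max
```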

Export to Edge TPU

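To use the Edge TPU, you need to convert your model into a compatible format. Because the Edge TPU compiler is not available on ARM, run the export on Google Colab, an x86_64 Linux machine, the official Ultralytics Docker container, or Ultralytics Platform. See Export Mode for the available arguments.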
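Since the compiler only runs on x86_64, a quick guard before exporting can save a failed run. The sketch below assumes `platform.machine()` reports `x86_64` (or `AMD64`) on a suitable host, and that the compiler binary is named `edgetpu_compiler`; Ultralytics may attempt to install the compiler itself during export, so a missing binary is only reported, not treated as an error. `export_host_ok` is a hypothetical helper.

```python
import platform
import shutil


def export_host_ok(machine: str) -> bool:
    """Return True if `machine` (as reported by platform.machine()) is an x86_64 host."""
    return machine.lower() in ("x86_64", "amd64")


if __name__ == "__main__":
    machine = platform.machine()
    if not export_host_ok(machine):
        raise SystemExit(f"{machine} detected: run the Edge TPU export on an x86_64 machine instead")
    # Informational only: the export step may install the compiler if it is missing.
    print("edgetpu_compiler on PATH:", shutil.which("edgetpu_compiler") is not None)
```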

Exporting the model

```python
from ultralytics import YOLO

# Load a model
model = YOLO("path/to/model.pt")  # Load an official model or custom model

# Export the model
model.export(format="edgetpu")
```

```bash
yolo export model=path/to/model.pt format=edgetpu # Export an official model or custom model
```

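The exported model is saved in the `<model_name>_saved_model/` folder under the name `<model_name>_full_integer_quant_edgetpu.tflite`. Make sure the file name ends with the `_edgetpu.tflite` suffix; otherwise, Ultralytics will not detect that you are using an Edge TPU model.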
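Because Ultralytics relies on the `_edgetpu.tflite` suffix, it can help to list only the qualifying files after an export. This is a minimal sketch; the folder name `yolo26n_saved_model` below is a hypothetical example of `<model_name>_saved_model/`, and both helper names are hypothetical.

```python
from pathlib import Path


def is_edgetpu_model_name(name: str) -> bool:
    """True if the file name carries the suffix Ultralytics uses to recognize Edge TPU models."""
    return name.endswith("_edgetpu.tflite")


def find_edgetpu_models(saved_model_dir: str) -> list[Path]:
    """Return the Edge TPU .tflite files in `saved_model_dir` (empty if the folder is missing)."""
    return sorted(p for p in Path(saved_model_dir).glob("*.tflite") if is_edgetpu_model_name(p.name))


if __name__ == "__main__":
    # Hypothetical folder name; replace it with your own <model_name>_saved_model/ folder.
    for path in find_edgetpu_models("yolo26n_saved_model"):
        print(path)
```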

Running the model

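Before you can run the model, you need to install the correct libraries.

If you already have TensorFlow installed, uninstall it with the following command:

```bash
pip uninstall tensorflow tensorflow-aarch64
```

Then install or update `tflite-runtime`:

```bash
pip install -U tflite-runtime
```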
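To check the setup before running a model, the sketch below reports whether full TensorFlow is still importable and whether `tflite_runtime.interpreter.load_delegate` can load the Edge TPU delegate. It assumes the runtime library is named `libedgetpu.so.1` and that a failed load raises `ValueError` or `OSError`; loading typically also fails when no Edge TPU is attached. The helper names are hypothetical.

```python
import importlib.util


def tensorflow_installed() -> bool:
    """True if a full TensorFlow package is importable (the steps above remove it)."""
    return importlib.util.find_spec("tensorflow") is not None


def edgetpu_delegate_loads(library: str = "libedgetpu.so.1") -> bool:
    """Try to load the Edge TPU delegate through tflite-runtime; return False on any failure."""
    try:
        from tflite_runtime.interpreter import load_delegate
    except ImportError:
        return False  # tflite-runtime is not installed
    try:
        load_delegate(library)
    except (ValueError, OSError):
        return False  # runtime library missing, or no usable Edge TPU found
    return True


if __name__ == "__main__":
    print("tensorflow installed:", tensorflow_installed())
    print("Edge TPU delegate loads:", edgetpu_delegate_loads())
```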

Now you can run inference with the following code:

Running the model

```python
from ultralytics import YOLO

# Load the exported Edge TPU model
model = YOLO("path/to/<model_name>_full_integer_quant_edgetpu.tflite")

# Run prediction
model.predict("path/to/source.png")
```

```bash
yolo predict model=path/to/MODEL_NAME_full_integer_quant_edgetpu.tflite source=path/to/source.png # Run inference with the exported Edge TPU model
```

For full details on predict mode, visit the Predict page.

Running inference on multiple Edge TPUs

If you have multiple Edge TPUs, you can select a specific TPU with the following code.

```python
from ultralytics import YOLO

# Load a model
model = YOLO("path/to/<model_name>_full_integer_quant_edgetpu.tflite")  # Load an official model or custom model

# Run Prediction
model.predict("path/to/source.png")  # Inference defaults to the first TPU

model.predict("path/to/source.png", device="tpu:0")  # Select the first TPU

model.predict("path/to/source.png", device="tpu:1")  # Select the second TPU
```
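To spread work across several accelerators, one option is to create the `tpu:N` device strings programmatically. This sketch assumes two TPUs and keeps one model instance per device, a conservative choice that avoids depending on whether a single loaded model switches devices between calls; the mapping of indices to physical USB ports is not guaranteed. `tpu_devices` is a hypothetical helper, and the paths are placeholders.

```python
def tpu_devices(count: int) -> list[str]:
    """Build device strings ('tpu:0', 'tpu:1', ...) for `count` Edge TPUs."""
    if count < 1:
        raise ValueError("count must be at least 1")
    return [f"tpu:{i}" for i in range(count)]


def main() -> None:
    from ultralytics import YOLO  # imported here so tpu_devices works without ultralytics

    weights = "path/to/<model_name>_full_integer_quant_edgetpu.tflite"
    # One model instance per TPU, so each instance stays bound to a single device.
    models = {device: YOLO(weights) for device in tpu_devices(2)}
    for device, model in models.items():
        results = model.predict("path/to/source.png", device=device)
        print(device, "detections:", len(results[0].boxes))


if __name__ == "__main__":
    main()
```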

Benchmarks


Tested with Raspberry Pi OS Bookworm 64-bit and a USB Coral Edge TPU.

Note

The times shown are inference times only; preprocessing and postprocessing are not included.

Raspberry Pi 4B

| Image Size | Model | Standard Inference Time (ms) | High-Frequency Inference Time (ms) |
|---|---|---|---|
| 320 | YOLOv8n | 32.2 | 26.7 |
| 320 | YOLOv8s | 47.1 | 39.8 |
| 512 | YOLOv8n | 73.5 | 60.7 |
| 512 | YOLOv8s | 149.6 | 125.3 |

Raspberry Pi 5

| Image Size | Model | Standard Inference Time (ms) | High-Frequency Inference Time (ms) |
|---|---|---|---|
| 320 | YOLOv8n | 22.2 | 16.7 |
| 320 | YOLOv8s | 40.1 | 32.2 |
| 512 | YOLOv8n | 53.5 | 41.6 |
| 512 | YOLOv8s | 132.0 | 103.3 |
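To take comparable measurements on your own hardware, you can read the per-image timing that Ultralytics attaches to each result as `Results.speed`, a dict of `preprocess`, `inference`, and `postprocess` times in milliseconds; only the `inference` entry corresponds to the tables. This sketch assumes the model was exported at `imgsz=320` (for example `model.export(format="edgetpu", imgsz=320)`), discards one warm-up run, and uses a hypothetical helper `mean_inference_ms`.

```python
from statistics import mean


def mean_inference_ms(speeds: list[dict[str, float]], warmup: int = 1) -> float:
    """Average the 'inference' entry (ms) of per-image speed dicts, skipping warm-up runs."""
    timed = speeds[warmup:]
    if not timed:
        raise ValueError("need more runs than warm-up iterations")
    return mean(s["inference"] for s in timed)


def main() -> None:
    from ultralytics import YOLO

    model = YOLO("path/to/<model_name>_full_integer_quant_edgetpu.tflite")
    speeds = []
    for _ in range(11):
        # imgsz must match the size used at export time (assumed to be 320 here)
        result = model.predict("path/to/source.png", imgsz=320, verbose=False)[0]
        speeds.append(result.speed)
    print(f"mean inference: {mean_inference_ms(speeds):.1f} ms")


if __name__ == "__main__":
    main()
```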

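On average:

- In standard mode, Raspberry Pi 5 is 22% faster than Raspberry Pi 4B.
- In high-frequency mode, Raspberry Pi 5 is 30.2% faster than Raspberry Pi 4B.
- High-frequency mode is 28.4% faster than standard mode.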
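The guide does not state how these averages were computed. One plausible definition, sketched below, is the mean per-row reduction in inference time, `(slow - fast) / slow`, over the four rows of the tables; other definitions (such as the ratio `slow / fast`) give different percentages, so treat this as an illustration of the arithmetic rather than a reproduction of the figures above.

```python
from statistics import mean

# Standard-mode inference times (ms) copied from the two tables, in row order.
PI4_STANDARD = [32.2, 47.1, 73.5, 149.6]
PI5_STANDARD = [22.2, 40.1, 53.5, 132.0]


def mean_time_reduction(slow: list[float], fast: list[float]) -> float:
    """Mean per-row fractional reduction in inference time, (slow - fast) / slow."""
    if len(slow) != len(fast):
        raise ValueError("both lists must have one entry per table row")
    return mean((s - f) / s for s, f in zip(slow, fast))


if __name__ == "__main__":
    reduction = mean_time_reduction(PI4_STANDARD, PI5_STANDARD)
    print(f"Pi 5 vs Pi 4B, standard mode: {reduction:.1%}")
```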

FAQ

What is a Coral Edge TPU, and how does it improve Raspberry Pi performance with Ultralytics YOLO26?

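The Coral Edge TPU is a compact device that adds an Edge TPU coprocessor to your system. The coprocessor enables low-power, high-performance machine learning inference and is optimized for TensorFlow Lite models. Paired with a Raspberry Pi, the Edge TPU accelerates ML model inference, including for Ultralytics YOLO26 models, as the benchmarks in this guide show. You can read more about the Coral Edge TPU on its homepage.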

How do I install the Coral Edge TPU runtime on a Raspberry Pi?

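To install the Coral Edge TPU runtime on your Raspberry Pi, download the `.deb` package that matches your Raspberry Pi OS version, as listed in the table in the Installation Walkthrough. Once downloaded, install it with the following command:

```bash
sudo dpkg -i path/to/package.deb
```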

Make sure to uninstall any previous Coral Edge TPU runtime versions first, following the steps in the Installation Walkthrough section.

Can I export my Ultralytics YOLO26 model to be compatible with the Coral Edge TPU?

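Yes, you can export your Ultralytics YOLO26 model to be compatible with the Coral Edge TPU. It is recommended to run the export on Google Colab, an x86_64 Linux machine, or the Ultralytics Docker container. You can also use Ultralytics Platform for exporting. Here is how to export your model using Python and the CLI: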

Exporting the model

```python
from ultralytics import YOLO

# Load a model
model = YOLO("path/to/model.pt")  # Load an official model or custom model

# Export the model
model.export(format="edgetpu")
```

```bash
yolo export model=path/to/model.pt format=edgetpu # Export an official model or custom model
```

For more information, refer to the Export Mode documentation.

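What should I do if TensorFlow is already installed on my Raspberry Pi but I want to use tflite-runtime instead?

If TensorFlow is installed on your Raspberry Pi and you need to switch to `tflite-runtime`, first uninstall TensorFlow with:

```bash
pip uninstall tensorflow tensorflow-aarch64
```

Then install or update `tflite-runtime` with the following command:

```bash
pip install -U tflite-runtime
```

For detailed instructions, refer to the Running the model section.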

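How do I run inference with an exported YOLO26 model on a Raspberry Pi using the Coral Edge TPU?

After exporting your YOLO26 model to the Edge TPU-compatible format, you can run inference with the following code snippets:

Running the model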

```python
from ultralytics import YOLO

# Load the exported Edge TPU model (the file name must end with _edgetpu.tflite)
model = YOLO("path/to/<model_name>_full_integer_quant_edgetpu.tflite")

# Run prediction
model.predict("path/to/source.png")
```

```bash
yolo predict model=path/to/MODEL_NAME_full_integer_quant_edgetpu.tflite source=path/to/source.png # Run inference with the exported Edge TPU model
```

For full details on predict mode features, visit the Predict page.