Loading YOLOv5 from PyTorch Hub

📚 This guide explains how to load YOLOv5 🚀 from PyTorch Hub (https://pytorch.org/hub/ultralytics_yolov5).

Before You Start

Install requirements.txt in a Python>=3.8.0 environment, including PyTorch>=1.8. Models and datasets download automatically from the latest YOLOv5 release.

pip install -r https://raw.githubusercontent.com/ultralytics/yolov5/master/requirements.txt

💡 ProTip: Cloning https://github.com/ultralytics/yolov5 is not required. 😃

Load YOLOv5 with PyTorch Hub

Simple Example

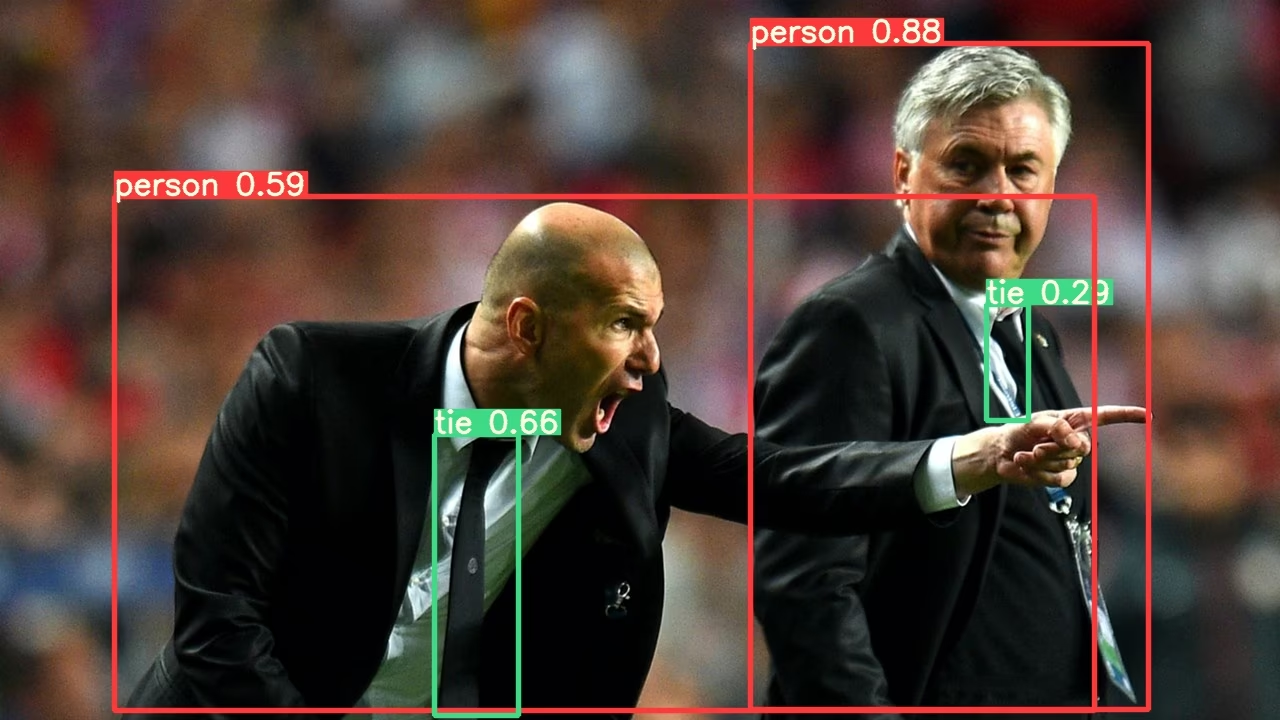

This example loads a pretrained YOLOv5s model from PyTorch Hub as model and passes an image to it for inference. 'yolov5s' is the lightest and fastest YOLOv5 model. For details on all available models, see the README.

import torch

# Model

model = torch.hub.load("ultralytics/yolov5", "yolov5s")

# Image

im = "https://ultralytics.com/images/zidane.jpg"

# Inference

results = model(im)

results.pandas().xyxy[0]

# xmin ymin xmax ymax confidence class name

# 0 749.50 43.50 1148.0 704.5 0.874023 0 person

# 1 433.50 433.50 517.5 714.5 0.687988 27 tie

# 2 114.75 195.75 1095.0 708.0 0.624512 0 person

# 3 986.00 304.00 1028.0 420.0 0.286865 27 tie

Detailed Example

This example shows batched inference with PIL and OpenCV image sources. results can be printed to the console, saved to runs/hub, shown on screen in supported environments, and returned as tensors or pandas DataFrames.

import cv2

import torch

from PIL import Image

# Model

model = torch.hub.load("ultralytics/yolov5", "yolov5s")

# Images

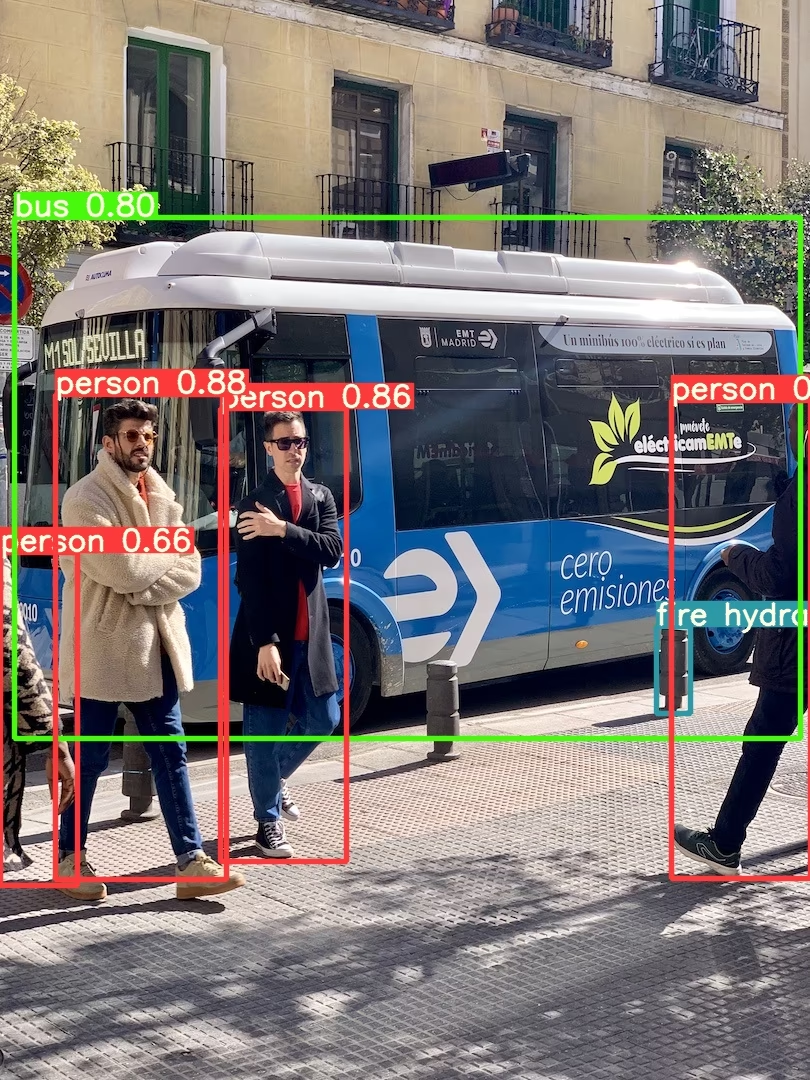

for f in "zidane.jpg", "bus.jpg":
    torch.hub.download_url_to_file("https://ultralytics.com/images/" + f, f)  # download 2 images

im1 = Image.open("zidane.jpg") # PIL image

im2 = cv2.imread("bus.jpg")[..., ::-1] # OpenCV image (BGR to RGB)

# Inference

results = model([im1, im2], size=640) # batch of images

# Results

results.print()

results.save() # or .show()

results.xyxy[0] # im1 predictions (tensor)

results.pandas().xyxy[0] # im1 predictions (pandas)

# xmin ymin xmax ymax confidence class name

# 0 749.50 43.50 1148.0 704.5 0.874023 0 person

# 1 433.50 433.50 517.5 714.5 0.687988 27 tie

# 2 114.75 195.75 1095.0 708.0 0.624512 0 person

# 3 986.00 304.00 1028.0 420.0 0.286865 27 tie

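For all inference options, see the YOLOv5 AutoShape() forward method.

Inference Settings

YOLOv5 models carry several inference attributes, such as the confidence threshold and the IoU threshold, which can be set as follows: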

model.conf = 0.25 # NMS confidence threshold

model.iou = 0.45 # NMS IoU threshold

model.agnostic = False # NMS class-agnostic

model.multi_label = False # NMS multiple labels per box

model.classes = None # (optional list) filter by class, e.g. [0, 15, 16] for COCO persons, cats and dogs

model.max_det = 1000 # maximum number of detections per image

model.amp = False # Automatic Mixed Precision (AMP) inference

results = model(im, size=320) # custom inference size
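
To make the roles of the confidence and IoU thresholds concrete, here is a minimal pure-Python sketch of greedy non-maximum suppression. It is an illustration only, not YOLOv5's own implementation: the helper names `iou` and `simple_nms` are hypothetical, and the sketch ignores classes, so it behaves like class-agnostic NMS.

```python
def iou(a, b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def simple_nms(dets, conf=0.25, iou_thres=0.45, max_det=1000):
    """Greedy NMS over (box, score) pairs; hypothetical helper for illustration."""
    # 1. Drop detections below the confidence threshold, then sort best-first.
    cands = sorted((d for d in dets if d[1] >= conf), key=lambda d: d[1], reverse=True)
    kept = []
    for box, score in cands:
        # 2. Keep a box only if it does not overlap an already kept box too much.
        if all(iou(box, k[0]) <= iou_thres for k in kept):
            kept.append((box, score))
        # 3. Stop once the per-image detection limit is reached.
        if len(kept) == max_det:
            break
    return kept


dets = [
    ((100, 100, 200, 200), 0.90),
    ((105, 105, 205, 205), 0.80),  # near-duplicate of the first box
    ((300, 300, 400, 400), 0.60),
    ((500, 500, 600, 600), 0.10),  # below the confidence threshold
]
kept = simple_nms(dets)
print(kept)
```

With these made-up boxes, the second detection is suppressed because it overlaps the first too heavily, and the fourth is discarded for low confidence. Raising `conf` removes more weak detections; lowering `iou_thres` suppresses overlapping boxes more aggressively.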

Device

Models can be transferred to any device after creation:

model.cpu() # CPU

model.cuda() # GPU

model.to(device) # e.g. device = torch.device(0)

Models can also be created directly on any device:

model = torch.hub.load("ultralytics/yolov5", "yolov5s", device="cpu") # load on CPU

💡 ProTip: Input images are automatically transferred to the correct model device before inference.

Silence Outputs

Models can be loaded silently with _verbose=False:

model = torch.hub.load("ultralytics/yolov5", "yolov5s", _verbose=False) # load silently

Input Channels

To load a pretrained YOLOv5s model with 4 input channels rather than the default 3:

model = torch.hub.load("ultralytics/yolov5", "yolov5s", channels=4)

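In this case the model is composed of pretrained weights except for the very first input layer, which no longer has the same shape as the pretrained input layer. The input layer remains initialized with random weights.

Number of Classes

To load a pretrained YOLOv5s model with 10 output classes rather than the default 80: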

model = torch.hub.load("ultralytics/yolov5", "yolov5s", classes=10)

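In this case the model is composed of pretrained weights except for the output layers, which no longer have the same shape as the pretrained output layers. The output layers remain initialized with random weights.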
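
The shape mismatch follows from simple arithmetic. The sketch below assumes the default YOLOv5 detection head layout, where each anchor predicts 4 box values, 1 objectness score, and one score per class, with 3 anchors per output layer. That layout comes from the default model configurations rather than from this page, and `detect_out_channels` is a hypothetical helper.

```python
def detect_out_channels(num_classes, anchors_per_layer=3):
    """Channels of one detection output layer (assumed default head layout)."""
    # 4 box values + 1 objectness score + one score per class, for every anchor.
    return anchors_per_layer * (num_classes + 5)


coco_channels = detect_out_channels(80)
custom_channels = detect_out_channels(10)
print(coco_channels)    # 255
print(custom_channels)  # 45
```

Because 45 output channels cannot reuse a 255-channel pretrained tensor, those layers have to start from random weights and be trained on the new classes.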

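Force Reload

If you run into problems with the steps above, setting force_reload=True may help: it discards the existing cache and forces a fresh download of the latest YOLOv5 version from PyTorch Hub. Cached copies are stored in ~/.cache/torch/hub; deleting that folder has the same effect.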

model = torch.hub.load("ultralytics/yolov5", "yolov5s", force_reload=True) # force reload
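
To check what is cached before deleting anything, the sketch below approximates where the hub cache lives using only the standard library. It assumes the documented PyTorch defaults (`$TORCH_HOME/hub`, with `TORCH_HOME` falling back to `$XDG_CACHE_HOME/torch` and then `~/.cache/torch`). `default_hub_dir` is a hypothetical helper; `torch.hub.get_dir()` remains the authoritative answer, especially if `torch.hub.set_dir()` was called.

```python
import os


def default_hub_dir(env=None):
    """Approximate the default torch hub cache directory (hypothetical helper)."""
    env = os.environ if env is None else env
    # XDG_CACHE_HOME falls back to ~/.cache when it is not set.
    cache_home = env.get("XDG_CACHE_HOME") or os.path.join(os.path.expanduser("~"), ".cache")
    # TORCH_HOME falls back to <cache_home>/torch when it is not set.
    torch_home = env.get("TORCH_HOME") or os.path.join(cache_home, "torch")
    return os.path.join(torch_home, "hub")


hub_dir = default_hub_dir()
print(hub_dir)
if os.path.isdir(hub_dir):
    print(sorted(os.listdir(hub_dir)))  # cached repositories and checkpoints, if any
```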

Screenshot Inference

To run inference on your desktop screen:

import torch

from PIL import ImageGrab

# Model

model = torch.hub.load("ultralytics/yolov5", "yolov5s")

# Image

im = ImageGrab.grab() # take a screenshot

# Inference

results = model(im)

Multi-GPU Inference

YOLOv5 models can be loaded onto multiple GPUs in parallel with threaded inference:

import threading

import torch

def run(model, im):
    """Run inference on an image with the given model and save the annotated results."""
    results = model(im)
    results.save()

# Models

model0 = torch.hub.load("ultralytics/yolov5", "yolov5s", device=0)

model1 = torch.hub.load("ultralytics/yolov5", "yolov5s", device=1)

# Inference

threading.Thread(target=run, args=[model0, "https://ultralytics.com/images/zidane.jpg"], daemon=True).start()

threading.Thread(target=run, args=[model1, "https://ultralytics.com/images/bus.jpg"], daemon=True).start()
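
Python stops daemon threads when the main program exits, so a standalone script may want to wait for its workers before finishing. The sketch below shows that pattern with `join()`. The two lambdas are hypothetical stand-ins for the per-GPU models so the example runs without a GPU, and the `out` dictionary is just one possible way to collect results.

```python
import threading


def run(model, im, out, key):
    """Run one model on one input and store the result under key."""
    out[key] = model(im)


# Stand-ins for the two per-GPU models (hypothetical; real ones come from torch.hub.load).
fake_model0 = lambda im: f"gpu0:{im}"
fake_model1 = lambda im: f"gpu1:{im}"

out = {}
threads = [
    threading.Thread(target=run, args=(fake_model0, "zidane.jpg", out, 0)),
    threading.Thread(target=run, args=(fake_model1, "bus.jpg", out, 1)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()  # block until each worker has finished

print(out)
```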

Training

To load a YOLOv5 model for training rather than inference, set autoshape=False. To load a model with randomly initialized weights (to train from scratch), use pretrained=False. In that case you must provide your own training script. Alternatively, see the YOLOv5 Train Custom Data tutorial for model training.

import torch

model = torch.hub.load("ultralytics/yolov5", "yolov5s", autoshape=False) # load pretrained

model = torch.hub.load("ultralytics/yolov5", "yolov5s", autoshape=False, pretrained=False) # load scratch

Base64 Results

For use with API services. See the Flask REST API example for details.

import base64

from io import BytesIO

from PIL import Image

results = model(im) # inference

results.ims # array of original images (as np array) passed to model for inference

results.render() # updates results.ims with boxes and labels

for im in results.ims:
    buffered = BytesIO()
    im_base64 = Image.fromarray(im)
    im_base64.save(buffered, format="JPEG")
    print(base64.b64encode(buffered.getvalue()).decode("utf-8"))  # base64 encoded image with results
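
On the receiving side of an API, the base64 text has to be turned back into bytes. A minimal round-trip sketch using only the standard library follows. The payload is a made-up stand-in rather than a real JPEG, and `encode_bytes` and `decode_to_buffer` are hypothetical helper names.

```python
import base64
from io import BytesIO


def encode_bytes(raw):
    """Encode raw bytes as base64 text, as done for each rendered image above."""
    return base64.b64encode(raw).decode("utf-8")


def decode_to_buffer(text):
    """Decode base64 text back into an in-memory binary buffer."""
    return BytesIO(base64.b64decode(text))


payload = b"\xff\xd8\xff\xe0 stand-in for JPEG bytes"  # not a real image
text = encode_bytes(payload)
restored = decode_to_buffer(text).getvalue()
print(restored == payload)
```

With a real encoded image, the buffer returned by `decode_to_buffer` could be passed to `PIL.Image.open` to rebuild the annotated picture.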

Cropped Results

Results can be returned and saved as detection crops:

results = model(im) # inference

crops = results.crop(save=True) # cropped detections dictionary

Pandas Results

Results can be returned as Pandas DataFrames:

results = model(im) # inference

results.pandas().xyxy[0] # Pandas DataFrame

Pandas Output

print(results.pandas().xyxy[0])

# xmin ymin xmax ymax confidence class name

# 0 749.50 43.50 1148.0 704.5 0.874023 0 person

# 1 433.50 433.50 517.5 714.5 0.687988 27 tie

# 2 114.75 195.75 1095.0 708.0 0.624512 0 person

# 3 986.00 304.00 1028.0 420.0 0.286865 27 tie

Sorted Results

Results can be sorted by column, for example to order license plate digit detections from left to right (x-axis):

results = model(im) # inference

results.pandas().xyxy[0].sort_values("xmin") # sorted left-right
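
The same left-to-right ordering can be reproduced on plain Python records, which helps when the detections have already left pandas. This sketch hard-codes a few columns from the example table in this guide; obtaining such a list with `to_dict(orient="records")` on the DataFrame is an assumption about your workflow, not something the guide prescribes.

```python
# Detections copied from the example output in this guide (subset of columns).
detections = [
    {"xmin": 749.50, "confidence": 0.874023, "name": "person"},
    {"xmin": 433.50, "confidence": 0.687988, "name": "tie"},
    {"xmin": 114.75, "confidence": 0.624512, "name": "person"},
    {"xmin": 986.00, "confidence": 0.286865, "name": "tie"},
]

# Sort by the left edge of each box to read detections left to right.
left_to_right = sorted(detections, key=lambda d: d["xmin"])
names = [d["name"] for d in left_to_right]
print(names)  # ['person', 'tie', 'person', 'tie']
```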

JSON Results

Results can be returned in JSON format by converting them to .pandas() DataFrames and calling the .to_json() method. The JSON layout can be changed with the orient argument. See the pandas .to_json() documentation for details.

results = model(ims) # inference

results.pandas().xyxy[0].to_json(orient="records") # JSON img1 predictions

JSON Output

[
  {
    "xmin": 749.5,
    "ymin": 43.5,
    "xmax": 1148.0,
    "ymax": 704.5,
    "confidence": 0.8740234375,
    "class": 0,
    "name": "person"
  },
  {
    "xmin": 433.5,
    "ymin": 433.5,
    "xmax": 517.5,
    "ymax": 714.5,
    "confidence": 0.6879882812,
    "class": 27,
    "name": "tie"
  },
  {
    "xmin": 115.25,
    "ymin": 195.75,
    "xmax": 1096.0,
    "ymax": 708.0,
    "confidence": 0.6254882812,
    "class": 0,
    "name": "person"
  },
  {
    "xmin": 986.0,
    "ymin": 304.0,
    "xmax": 1028.0,
    "ymax": 420.0,
    "confidence": 0.2873535156,
    "class": 27,
    "name": "tie"
  }
]
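
A consumer of this JSON, such as a web service client, can parse it with the standard library alone. The sketch below uses an abridged copy of the records above (only three fields each) and counts detections per class above a confidence cutoff. The 0.5 cutoff and the variable names are arbitrary choices for illustration.

```python
import json
from collections import Counter

# Abridged copy of the JSON output above (box coordinates omitted for brevity).
json_text = """[
  {"confidence": 0.8740234375, "class": 0, "name": "person"},
  {"confidence": 0.6879882812, "class": 27, "name": "tie"},
  {"confidence": 0.6254882812, "class": 0, "name": "person"},
  {"confidence": 0.2873535156, "class": 27, "name": "tie"}
]"""

records = json.loads(json_text)

# Keep only reasonably confident detections, then count them per class name.
confident = [r for r in records if r["confidence"] >= 0.5]
counts = Counter(r["name"] for r in confident)
print(counts)
```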

Custom Models

This example loads a custom 20-class VOC-trained YOLOv5s model 'best.pt' with PyTorch Hub.

import torch

model = torch.hub.load("ultralytics/yolov5", "custom", path="path/to/best.pt") # local model

model = torch.hub.load("path/to/yolov5", "custom", path="path/to/best.pt", source="local") # local repo

TensorRT, ONNX and OpenVINO Models

PyTorch Hub supports inference on most YOLOv5 export formats, including custom-trained models. See the TFLite, ONNX, CoreML, TensorRT Export tutorial for details on exporting models.

💡 ProTip: TensorRT may be 2-5x faster than PyTorch on GPU benchmarks.

💡 ProTip: ONNX and OpenVINO may be 2-3x faster than PyTorch on CPU benchmarks.

import torch

model = torch.hub.load("ultralytics/yolov5", "custom", path="yolov5s.pt") # PyTorch

model = torch.hub.load("ultralytics/yolov5", "custom", path="yolov5s.torchscript") # TorchScript

model = torch.hub.load("ultralytics/yolov5", "custom", path="yolov5s.onnx") # ONNX

model = torch.hub.load("ultralytics/yolov5", "custom", path="yolov5s_openvino_model/") # OpenVINO

model = torch.hub.load("ultralytics/yolov5", "custom", path="yolov5s.engine") # TensorRT

model = torch.hub.load("ultralytics/yolov5", "custom", path="yolov5s.mlmodel") # CoreML (macOS-only)

model = torch.hub.load("ultralytics/yolov5", "custom", path="yolov5s.tflite") # TFLite

model = torch.hub.load("ultralytics/yolov5", "custom", path="yolov5s_paddle_model/") # PaddlePaddle
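
The paths in the list above follow a naming convention: a file suffix for single-file formats and a directory suffix for OpenVINO and PaddlePaddle. As a convenience sketch, a script could use that convention to report which backend a given path is likely to need. `guess_format` and both lookup tables are hypothetical and only mirror the names shown above; they are not YOLOv5's own detection logic.

```python
from pathlib import Path

# File suffixes taken from the example paths above.
SUFFIX_TO_FORMAT = {
    ".pt": "PyTorch",
    ".torchscript": "TorchScript",
    ".onnx": "ONNX",
    ".engine": "TensorRT",
    ".mlmodel": "CoreML",
    ".tflite": "TFLite",
}
# Directory-based exports are recognised by how the directory name ends.
DIR_MARKERS = {"_openvino_model": "OpenVINO", "_paddle_model": "PaddlePaddle"}


def guess_format(path):
    """Guess the export format from a model path (hypothetical helper)."""
    p = Path(path)
    for marker, name in DIR_MARKERS.items():
        if p.name.endswith(marker):
            return name
    return SUFFIX_TO_FORMAT.get(p.suffix.lower(), "unknown")


for example in ("yolov5s.onnx", "yolov5s_openvino_model/", "yolov5s.engine"):
    print(example, "->", guess_format(example))
```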

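Supported Environments

Ultralytics provides a range of ready-to-use environments, each pre-installed with essential dependencies such as CUDA, CUDNN, Python, and PyTorch, to help you get your projects started.

- Free GPU Notebooks:

- Google Cloud: GCP Quickstart Guide

- Amazon: AWS Quickstart Guide

- Azure: AzureML Quickstart Guide

- Docker: Docker Quickstart Guide

Project Status

This badge indicates that all YOLOv5 GitHub Actions Continuous Integration (CI) tests are passing. These CI tests rigorously check the functionality and performance of YOLOv5 across key aspects: training, validation, inference, export, and benchmarks. They ensure consistent and reliable operation on macOS, Windows, and Ubuntu, and run every 24 hours and on each new commit.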