Ultralytics YOLO26 on NVIDIA Jetson using DeepStream SDK and TensorRT

Watch: How to use Ultralytics YOLO26 models with NVIDIA Deepstream on Jetson Orin NX 🚀

This guide provides a detailed walkthrough for deploying Ultralytics YOLO26 on NVIDIA Jetson devices using the DeepStream SDK and TensorRT. TensorRT is used here to maximize inference performance on the Jetson platform.

Note

This guide has been tested with the following devices:

- NVIDIA Jetson Orin Nano Super Developer Kit running JetPack 6.1, the latest stable JetPack release
- Seeed Studio reComputer J4012, based on NVIDIA Jetson Orin NX 16GB, running JetPack 5.1.3
- Seeed Studio reComputer J1020 v2, based on NVIDIA Jetson Nano 4GB, running JetPack 4.6.4

It is expected to work across the entire NVIDIA Jetson hardware lineup, including both the latest and legacy devices.

What is NVIDIA DeepStream?

NVIDIA's DeepStream SDK is a complete streaming analytics toolkit based on GStreamer for AI-based multi-sensor processing and video, audio, and image understanding. It's ideal for vision AI developers, software partners, startups, and OEMs building IVA (Intelligent Video Analytics) apps and services. With it, you can create stream-processing pipelines that incorporate neural networks and other complex processing tasks such as tracking, video encoding/decoding, and video rendering. These pipelines enable real-time analytics on video, image, and sensor data. DeepStream's multi-platform support gives you a faster, easier way to develop vision AI applications and services on-premise, at the edge, and in the cloud.

Prerequisites

Before you start this guide:

- Follow our Quick Start Guide: NVIDIA Jetson with Ultralytics YOLO26 to set up your NVIDIA Jetson device with Ultralytics YOLO26.

- Install the DeepStream SDK version that matches your JetPack version:
  - For JetPack 4.6.4, install DeepStream 6.0.1
  - For JetPack 5.1.3, install DeepStream 6.3
  - For JetPack 6.1, install DeepStream 7.1
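If you script your setup, it can help to keep the version pairings from this guide in one place. The following is a minimal Python sketch, assuming you already know which JetPack release is installed. It covers only the three releases tested in this guide and also records the `CUDA_VER` value that this guide uses later for each release. `TESTED_VERSIONS` and `deepstream_for_jetpack` are hypothetical helpers, not part of DeepStream or Ultralytics.

```python
from typing import Tuple

# JetPack release -> (DeepStream SDK version, CUDA_VER), as listed in this guide.
# Only the combinations tested in this guide are included.
TESTED_VERSIONS = {
    "4.6.4": ("6.0.1", "10.2"),
    "5.1.3": ("6.3", "11.4"),
    "6.1": ("7.1", "12.6"),
}


def deepstream_for_jetpack(jetpack: str) -> Tuple[str, str]:
    """Return (DeepStream version, CUDA_VER) for a JetPack release tested here."""
    try:
        return TESTED_VERSIONS[jetpack]
    except KeyError:
        raise ValueError(
            "JetPack {} is not tested in this guide; tested releases: {}".format(
                jetpack, ", ".join(sorted(TESTED_VERSIONS))
            )
        ) from None


if __name__ == "__main__":
    deepstream, cuda_ver = deepstream_for_jetpack("6.1")
    print("Install DeepStream", deepstream)
    print("export CUDA_VER=" + cuda_ver)
```

For any other JetPack release, the helper raises an error rather than guessing, so check NVIDIA's compatibility notes for that release.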

Tip

This guide uses the Debian package method to install the DeepStream SDK on the Jetson device. You can also visit DeepStream SDK on Jetson (Archived) to access legacy versions of DeepStream.

DeepStream Configuration for YOLO26

Here we use the marcoslucianops/DeepStream-Yolo GitHub repository, which includes NVIDIA DeepStream SDK support for YOLO models. We appreciate the efforts of marcoslucianops for his contributions!

Install Ultralytics with the necessary dependencies:

```bash
cd ~
pip install -U pip
git clone https://github.com/ultralytics/ultralytics
cd ultralytics
pip install -e ".[export]" onnxslim
```

Clone the DeepStream-Yolo repository:

```bash
cd ~
git clone https://github.com/marcoslucianops/DeepStream-Yolo
```

Copy the `export_yolo26.py` file from the `DeepStream-Yolo/utils` directory to the `ultralytics` folder:

```bash
cp ~/DeepStream-Yolo/utils/export_yolo26.py ~/ultralytics
cd ultralytics
```

Download the Ultralytics YOLO26 detection model (`.pt`) of your choice from the YOLO26 releases. Here we use `yolo26s.pt`.

```bash
wget https://github.com/ultralytics/assets/releases/download/v8.4.0/yolo26s.pt
```

Note

You can also use a custom-trained YOLO26 model.

Convert the model to ONNX:

```bash
python3 export_yolo26.py -w yolo26s.pt
```

You can pass the following arguments to the command above.

For DeepStream 5.1, remove the `--dynamic` argument and use `opset` 12 or lower. The default `opset` is 17.

```bash
--opset 12
```

To change the inference size (default: 640):

```bash
-s SIZE
--size SIZE
-s HEIGHT WIDTH
--size HEIGHT WIDTH
```

Example for 1280 (either form works):

```bash
-s 1280
-s 1280 1280
```

To simplify the ONNX model (DeepStream >= 6.0):

```bash
--simplify
```

To use a dynamic batch size (DeepStream >= 6.1):

```bash
--dynamic
```

To use a static batch size (example for batch size = 4):

```bash
--batch 4
```

Copy the generated `.onnx` model file and the `labels.txt` file to the `DeepStream-Yolo` folder:

```bash
cp yolo26s.pt.onnx labels.txt ~/DeepStream-Yolo
cd ~/DeepStream-Yolo
```

Set the CUDA version according to the installed JetPack version.

For JetPack 4.6.4:

```bash
export CUDA_VER=10.2
```

For JetPack 5.1.3:

```bash
export CUDA_VER=11.4
```

For JetPack 6.1:

```bash
export CUDA_VER=12.6
```

Compile the library:

```bash
make -C nvdsinfer_custom_impl_Yolo clean && make -C nvdsinfer_custom_impl_Yolo
```

Edit the `config_infer_primary_yolo26.txt` file according to your model (here, YOLO26s with 80 classes):

```ini
[property]
...
onnx-file=yolo26s.pt.onnx
...
num-detected-classes=80
...
```

Edit the `deepstream_app_config.txt` file:

```ini
...
[primary-gie]
...
config-file=config_infer_primary_yolo26.txt
```

You can also change the video source in the `deepstream_app_config.txt` file. Here, a default video file is loaded:

```ini
...
[source0]
...
uri=file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.mp4
```
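Keeping `onnx-file` and `num-detected-classes` in sync with your model matters most for custom-trained models. The following is a minimal Python sketch, assuming that `labels.txt` holds one class name per line and that the config uses plain `key=value` lines under `[property]` as shown above. It sets both keys without touching comments or other sections. `set_property` and `sync_model_settings` are hypothetical helpers; editing the file by hand works just as well.

```python
from pathlib import Path


def set_property(text: str, section: str, key: str, value: str) -> str:
    """Set `key=value` inside `[section]` of a DeepStream-style config text.

    Replaces the first active `key=...` line in that section. Commented-out
    lines such as `#int8-calib-file=...` are left untouched. If the key is
    missing, it is appended at the end of the section. All other lines,
    including comments, are preserved.
    """
    out = []
    in_section = False
    seen = False
    done = False
    for line in text.splitlines():
        stripped = line.strip()
        if stripped.startswith("[") and stripped.endswith("]"):
            if in_section and not done:
                # Leaving the target section without finding the key: add it here.
                out.append("{}={}".format(key, value))
                done = True
            in_section = stripped[1:-1] == section
            seen = seen or in_section
        elif in_section and not done and "=" in stripped:
            if stripped.split("=", 1)[0].strip() == key:
                out.append("{}={}".format(key, value))
                done = True
                continue
        out.append(line)
    if not seen:
        raise ValueError("section [{}] not found".format(section))
    if in_section and not done:
        # The target section was the last one in the file.
        out.append("{}={}".format(key, value))
    return "\n".join(out) + "\n"


def sync_model_settings(config_path: str, onnx_file: str, labels_path: str) -> int:
    """Point the config at `onnx_file` and match the class count to `labels_path`."""
    labels = [line for line in Path(labels_path).read_text().splitlines() if line.strip()]
    config = Path(config_path)
    text = config.read_text()
    text = set_property(text, "property", "onnx-file", onnx_file)
    text = set_property(text, "property", "num-detected-classes", str(len(labels)))
    config.write_text(text)
    return len(labels)
```

From the `DeepStream-Yolo` folder, `sync_model_settings("config_infer_primary_yolo26.txt", "yolo26s.pt.onnx", "labels.txt")` would apply the edit shown above, using the class count from the exported `labels.txt`.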

Run Inference

```bash
deepstream-app -c deepstream_app_config.txt
```

Note

Generating the TensorRT engine file can take a long time before inference starts, so please be patient.

Tip

To run the model in FP16 precision, set `model-engine-file=model_b1_gpu0_fp16.engine` and `network-mode=2` in `config_infer_primary_yolo26.txt`.
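The FP16 tip and the INT8 steps in this guide change the same keys in `config_infer_primary_yolo26.txt`. The following Python sketch collects those values in one place. As used in this guide, `network-mode` 0, 1, and 2 select FP32, INT8, and FP16. The engine file name pattern `model_b{batch}_gpu{gpu}_{precision}.engine` is an assumption inferred from the example names in this guide, and `precision_settings` is a hypothetical helper.

```python
from typing import Dict

# nvinfer network-mode values used in this guide: 0 = FP32, 1 = INT8, 2 = FP16.
NETWORK_MODE = {"fp32": 0, "int8": 1, "fp16": 2}


def precision_settings(precision: str, batch_size: int = 1, gpu_id: int = 0) -> Dict[str, str]:
    """Return config_infer_primary_yolo26.txt keys for the requested precision."""
    precision = precision.lower()
    if precision not in NETWORK_MODE:
        raise ValueError("precision must be one of: " + ", ".join(sorted(NETWORK_MODE)))
    settings = {
        # Assumed naming pattern, inferred from names such as model_b1_gpu0_fp32.engine.
        "model-engine-file": "model_b{}_gpu{}_{}.engine".format(batch_size, gpu_id, precision),
        "network-mode": str(NETWORK_MODE[precision]),
    }
    if precision == "int8":
        # INT8 also needs the calibration table, as in the INT8 section.
        settings["int8-calib-file"] = "calib.table"
    return settings


for key, value in precision_settings("fp16").items():
    print("{}={}".format(key, value))
```

For `"fp16"`, this prints `model-engine-file=model_b1_gpu0_fp16.engine` and `network-mode=2`, which are the two lines from the tip above.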

INT8 Calibration

To use INT8 precision for inference, follow the steps below:

Note

INT8 currently does not work with TensorRT 10.x. This section of the guide has been tested with TensorRT 8.x, which is expected to work.

Set the `OPENCV` environment variable:

```bash
export OPENCV=1
```

Compile the library:

```bash
make -C nvdsinfer_custom_impl_Yolo clean && make -C nvdsinfer_custom_impl_Yolo
```

For the COCO dataset, download val2017, extract it, and move it to the `DeepStream-Yolo` folder.

Make a new directory for the calibration images:

```bash
mkdir calibration
```

Run the following to select 1000 random images from the COCO dataset for calibration:

```bash
for jpg in $(ls -1 val2017/*.jpg | sort -R | head -1000); do
  cp "${jpg}" calibration/
done
```

Note

NVIDIA recommends at least 500 images to get good accuracy. In this example, 1000 images are chosen to get better accuracy (more images generally give better accuracy). You can change the count in `head -1000`; for example, for 2000 images, use `head -2000`. This process can take a long time.
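If you prefer Python to the shell loop, the random selection above and the `calibration.txt` list created in the next step can be sketched as follows. This is a minimal sketch, assuming the COCO `val2017` folder is in the working directory. It draws images with `random.sample` instead of `sort -R | head`, and it writes only the newly copied images to the list file, whereas `realpath calibration/*jpg` lists every JPEG already in the folder. `prepare_calibration` is a hypothetical helper.

```python
import random
import shutil
from pathlib import Path
from typing import List, Optional, Union

PathLike = Union[str, Path]


def prepare_calibration(
    src_dir: PathLike = "val2017",
    dst_dir: PathLike = "calibration",
    list_file: PathLike = "calibration.txt",
    num_images: int = 1000,
    seed: Optional[int] = None,
) -> List[Path]:
    """Copy up to `num_images` random JPEGs into `dst_dir` and list them in `list_file`."""
    images = sorted(Path(src_dir).glob("*.jpg"))
    # Like `head -1000`, take every image if fewer than num_images exist.
    chosen = random.Random(seed).sample(images, min(num_images, len(images)))
    dst = Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    copied = []
    for image in chosen:
        target = dst / image.name
        shutil.copy(str(image), str(target))
        copied.append(target.resolve())
    # One absolute path per line, as `realpath calibration/*jpg` produces.
    Path(list_file).write_text("".join("{}\n".format(p) for p in sorted(copied)))
    return copied
```

Passing a fixed `seed` makes the selection reproducible, which can help when you compare INT8 accuracy across calibration runs.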

Create the `calibration.txt` file with all selected images:

```bash
realpath calibration/*jpg > calibration.txt
```

Set the environment variables:

```bash
export INT8_CALIB_IMG_PATH=calibration.txt
export INT8_CALIB_BATCH_SIZE=1
```

Note

Higher `INT8_CALIB_BATCH_SIZE` values will result in more accuracy and faster calibration speed. Set it according to your GPU memory.

Update the `config_infer_primary_yolo26.txt` file.

From:

```ini
...
model-engine-file=model_b1_gpu0_fp32.engine
#int8-calib-file=calib.table
...
network-mode=0
...
```

To:

```ini
...
model-engine-file=model_b1_gpu0_int8.engine
int8-calib-file=calib.table
...
network-mode=1
...
```

Run Inference

```bash
deepstream-app -c deepstream_app_config.txt
```

MultiStream Setup

Watch: How to Run Multiple Streams with DeepStream SDK on Jetson Nano using Ultralytics YOLO26 🎉

To set up multiple streams under a single DeepStream application, make the following changes to the deepstream_app_config.txt file:

Change `rows` and `columns` to build a grid display that fits the number of streams you want. For example, for 4 streams, use 2 rows and 2 columns:

```ini
[tiled-display]
rows=2
columns=2
```

Set `num-sources=4` and add the `uri` entries for all four streams:

```ini
[source0]
enable=1
type=3
uri=path/to/video1.jpg
uri=path/to/video2.jpg
uri=path/to/video3.jpg
uri=path/to/video4.jpg
num-sources=4
```
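For stream counts other than 4, a near-square grid is a reasonable default. The following Python sketch computes `rows` and `columns` so that every stream gets a tile. The square-root heuristic is our own choice, not a DeepStream rule, and `tiled_grid` is a hypothetical helper.

```python
import math
from typing import Tuple


def tiled_grid(num_streams: int) -> Tuple[int, int]:
    """Return (rows, columns) for a near-square grid with one tile per stream."""
    if num_streams < 1:
        raise ValueError("num_streams must be at least 1")
    # Use enough columns for a roughly square layout, then enough rows to fit all tiles.
    columns = math.ceil(math.sqrt(num_streams))
    rows = math.ceil(num_streams / columns)
    return rows, columns


rows, columns = tiled_grid(4)
print("[tiled-display]\nrows={}\ncolumns={}".format(rows, columns))
```

For 4 streams, this gives the 2 x 2 grid used above. For 6 streams, it gives 2 rows and 3 columns.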

Run Inference

```bash
deepstream-app -c deepstream_app_config.txt
```

Benchmark Results

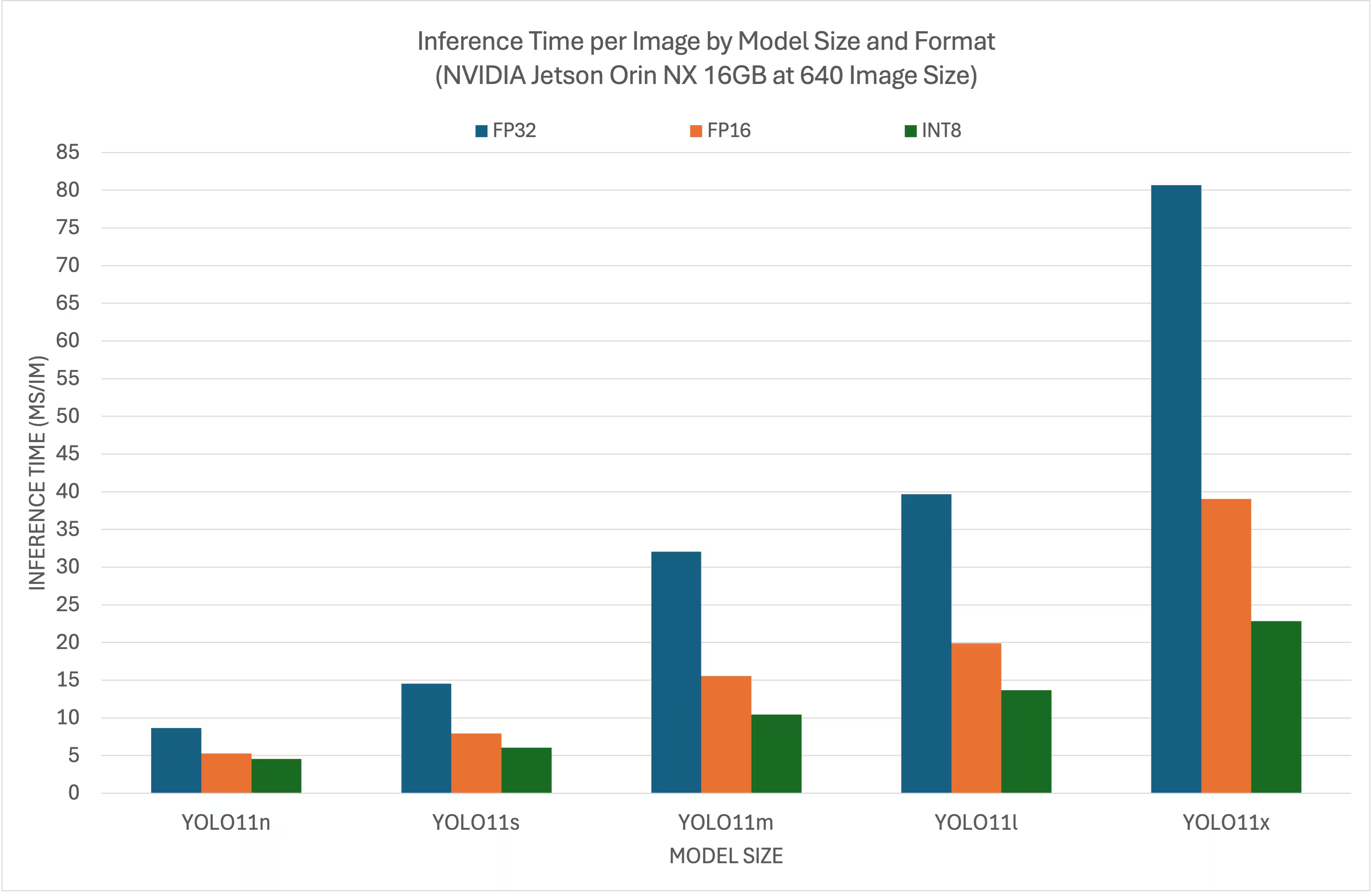

The following benchmarks summarizes how YOLO26 models perform at different TensorRT precision levels with an input size of 640x640 on NVIDIA Jetson Orin NX 16GB.

Comparison Chart

Detailed Comparison Table

YOLO26n

| Format | Status | Inference time (ms/im) |
|---|---|---|
| TensorRT (FP32) | ✅ | 8.64 |
| TensorRT (FP16) | ✅ | 5.27 |
| TensorRT (INT8) | ✅ | 4.54 |

YOLO26s

| Format | Status | Inference time (ms/im) |
|---|---|---|
| TensorRT (FP32) | ✅ | 14.53 |
| TensorRT (FP16) | ✅ | 7.91 |
| TensorRT (INT8) | ✅ | 6.05 |

YOLO26m

| Format | Status | Inference time (ms/im) |
|---|---|---|
| TensorRT (FP32) | ✅ | 32.05 |
| TensorRT (FP16) | ✅ | 15.55 |
| TensorRT (INT8) | ✅ | 10.43 |

YOLO26l

| Format | Status | Inference time (ms/im) |
|---|---|---|
| TensorRT (FP32) | ✅ | 39.68 |
| TensorRT (FP16) | ✅ | 19.88 |
| TensorRT (INT8) | ✅ | 13.64 |

YOLO26x

| Format | Status | Inference time (ms/im) |
|---|---|---|
| TensorRT (FP32) | ✅ | 80.65 |
| TensorRT (FP16) | ✅ | 39.06 |
| TensorRT (INT8) | ✅ | 22.83 |
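To compare these latencies with frame rates, convert ms/im to FPS as 1000 / (ms/im). This is how 14.6 ms/im maps to about 68.5 FPS in the FAQ below. The following sketch applies the conversion to the second results table above (14.53, 7.91, and 6.05 ms/im). It assumes one image is processed at a time, so real pipeline throughput with batching or multiple streams can differ.

```python
# Inference times in ms/im from the second results table above
# (TensorRT on Jetson Orin NX 16GB, 640x640 input).
MS_PER_IMAGE = {"FP32": 14.53, "FP16": 7.91, "INT8": 6.05}


def ms_to_fps(ms_per_image: float) -> float:
    """Convert per-image latency to frames per second, assuming one image at a time."""
    return 1000.0 / ms_per_image


for precision, ms in MS_PER_IMAGE.items():
    speedup = MS_PER_IMAGE["FP32"] / ms
    print("{}: {:.2f} ms/im ~ {:.1f} FPS ({:.2f}x vs FP32)".format(precision, ms, ms_to_fps(ms), speedup))
```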

Acknowledgments

This guide was initially created by our friends at Seeed Studio, Lakshantha and Elaine.

FAQ

How do I set up Ultralytics YOLO26 on an NVIDIA Jetson device?

To set up Ultralytics YOLO26 on an NVIDIA Jetson device, first follow our Quick Start Guide: NVIDIA Jetson with Ultralytics YOLO26 to prepare the device. Then install the DeepStream SDK version that matches your JetPack version, as listed in the Prerequisites section.

What is the benefit of using TensorRT with YOLO26 on NVIDIA Jetson?

Using TensorRT with YOLO26 optimizes the model for inference, significantly reducing latency and improving throughput on NVIDIA Jetson devices. TensorRT provides high-performance, low-latency deep learning inference through layer fusion, precision calibration, and kernel auto-tuning. This leads to faster and more efficient execution, particularly useful for real-time applications like video analytics and autonomous machines.

Can I run Ultralytics YOLO26 with DeepStream SDK across different NVIDIA Jetson hardware?

Yes. The guide for deploying Ultralytics YOLO26 with the DeepStream SDK and TensorRT is expected to work across the entire NVIDIA Jetson lineup. It has been tested on the Jetson Orin Nano Super Developer Kit with JetPack 6.1, the Jetson Orin NX 16GB with JetPack 5.1.3, and the Jetson Nano 4GB with JetPack 4.6.4. Refer to the section DeepStream Configuration for YOLO26 for detailed steps.

How can I convert a YOLO26 model to ONNX for DeepStream?

To convert a YOLO26 model to ONNX format for deployment with DeepStream, use the utils/export_yolo26.py script from the DeepStream-Yolo repository.

Here's an example command:

```bash
python3 utils/export_yolo26.py -w yolo26s.pt --opset 12 --simplify
```
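Which flags to pass depends on your DeepStream version, as described in the conversion step of the main guide. The following is a minimal Python sketch of that decision. It assumes that `export_yolo26.py` has been copied into the current directory, as in the main guide. Preferring `--dynamic` whenever DeepStream is 6.1 or newer and no static batch is requested is our own policy choice. `export_command` is a hypothetical helper.

```python
from typing import List, Optional, Sequence


def export_command(
    weights: str,
    deepstream: Sequence[int],
    size: Optional[Sequence[int]] = None,
    batch: Optional[int] = None,
) -> List[str]:
    """Build an export_yolo26.py command line for a given DeepStream version.

    `deepstream` is a version tuple such as (7, 1); `size` is (SIZE,) or
    (HEIGHT, WIDTH); `batch` requests a static batch size.
    """
    version = tuple(deepstream)
    cmd = ["python3", "export_yolo26.py", "-w", weights]
    if version < (6, 0):
        # DeepStream 5.1: opset 12 or lower and no --dynamic.
        cmd += ["--opset", "12"]
    else:
        # --simplify is listed for DeepStream >= 6.0.
        cmd.append("--simplify")
    if size:
        cmd += ["-s"] + [str(s) for s in size]
    if batch is not None:
        # Static batch size, e.g. --batch 4.
        cmd += ["--batch", str(batch)]
    elif version >= (6, 1):
        # Dynamic batch size needs DeepStream >= 6.1.
        cmd.append("--dynamic")
    return cmd


print(" ".join(export_command("yolo26s.pt", (7, 1))))
```

This prints `python3 export_yolo26.py -w yolo26s.pt --simplify --dynamic`. Passing the list to `subprocess.run(cmd, check=True)` would run the export directly.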

For more details on model conversion, check out our model export section.

What are the performance benchmarks for YOLO26 on NVIDIA Jetson Orin NX?

The performance of YOLO26 models on NVIDIA Jetson Orin NX 16GB varies based on TensorRT precision levels. For example, YOLO26s models achieve:

- FP32 Precision: 14.6 ms/im, 68.5 FPS

- FP16 Precision: 7.94 ms/im, 126 FPS

- INT8 Precision: 5.95 ms/im, 168 FPS

These benchmarks underscore the efficiency and capability of using TensorRT-optimized YOLO26 models on NVIDIA Jetson hardware. For further details, see our Benchmark Results section.